Elasticsearch

A first look at Elasticsearch

Welcome to Elastic: the developers of Elasticsearch and Kibana | Elastic

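Elasticsearch is an open-source distributed search and analytics engine designed to store and process large volumes of data in near real time. Its main strengths are fast, powerful search and flexible data analysis. Some of its key features and uses: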

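- Distributed architecture: Elasticsearch is designed as a distributed system that runs on multiple servers forming a cluster. Data is automatically sharded and replicated across the cluster, which provides high availability and scalability.

- Real-time search and analysis: Elasticsearch offers very fast, near-real-time full-text search over large data sets and supports many query types, such as fuzzy search, exact match, and range queries.

- Multiple data types: besides text, Elasticsearch can index and query numbers, dates, geographic locations, and other data types.

- Full-text search engine: Elasticsearch provides powerful full-text search, with support for tokenization, semantic analysis, and spelling correction, so relevant results can be found quickly in large amounts of text.

- Rich queries and filters: Elasticsearch provides an extensive query syntax and filters that let users retrieve data more precisely.

- Document-oriented store: Elasticsearch is a document-oriented database. Data is stored as JSON documents, and each document has a unique identifier called the document ID.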

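Elasticsearch is widely used in many areas, including enterprise search, log and event data analysis, e-commerce site search, content management systems, and business metrics monitoring and visualization. Its search and analytics capabilities make it one of the important tools for working with large-scale data.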
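To make the document model concrete, here is a minimal sketch (Java 11+, JDK only) that builds, without sending, the HTTP request for storing one JSON document under an explicit document ID. The node address 127.0.0.1:9200, the index name file_search, and the sample field values are assumptions for illustration, and the class and method names are hypothetical.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

class IndexRequestSketch {

    // Builds (but does not send) a request that stores one JSON document
    // under an explicit document ID: PUT /<index>/_doc/<id>
    static HttpRequest buildIndexRequest(String baseUrl, String index, String id, String json) {
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/" + index + "/_doc/" + id))
                .header("Content-Type", "application/json")
                .PUT(HttpRequest.BodyPublishers.ofString(json, StandardCharsets.UTF_8))
                .build();
    }

    public static void main(String[] args) {
        // Sample document; the field values are made up for illustration.
        String doc = "{\"analyzer_title\": \"Getting started\", \"size\": 1024}";
        HttpRequest request = buildIndexRequest("http://127.0.0.1:9200", "file_search", "1", doc);
        System.out.println(request.method() + " " + request.uri());
    }
}
```

Sending the request with java.net.http.HttpClient against a running node would create or overwrite the document whose ID is 1.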

Spring Boot backend configuration

Maven configuration

Maven Repository: Search/Browse/Explore (mvnrepository.com)

Add the corresponding Maven dependency to pom.xml

<!-- https://mvnrepository.com/artifact/org.springframework.boot/spring-boot-starter-data-elasticsearch -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-elasticsearch</artifactId>

</dependency>

application.properties configuration

#---------------------------------------

# ELASTICSEARCH search engine configuration

#---------------------------------------

# Elasticsearch connection address

spring.elasticsearch.rest.uris=127.0.0.1:9200

# Username

spring.elasticsearch.rest.username=elasticsearch

Model layer

Define the entity class FileSearchEntity and place it in the model layer.

package com.ikkkp.example.model.po.es;

import lombok.Data;

import org.springframework.data.annotation.Id;

import org.springframework.data.elasticsearch.annotations.Document;

import org.springframework.data.elasticsearch.annotations.Field;

import org.springframework.data.elasticsearch.annotations.FieldType;

@Data

@Document(indexName = "file_search")

public class FileSearchEntity {

@Id

private Integer fileID;

@Field(name = "analyzer_title", type = FieldType.Text, searchAnalyzer = "ik_max_word", analyzer = "ik_smart")

private String title;

@Field(name = "analyzer_abstract_content", type = FieldType.Text, searchAnalyzer = "ik_max_word", analyzer = "ik_smart")

private String abstractContent;

@Field(type = FieldType.Integer)

private Integer size;

@Field(name = "file_type", type = FieldType.Text)

private String fileType;

@Field(name = "upload_username", type = FieldType.Text)

private String uploadUsername;

@Field(name = "preview_picture_object_name", type = FieldType.Text)

private String previewPictureObjectName;

@Field(name = "payment_method", type = FieldType.Integer)

private Integer paymentMethod;

@Field(name = "payment_amount", type = FieldType.Integer)

private Integer paymentAmount;

@Field(name = "is_approved", type = FieldType.Boolean)

private String isApproved;

@Field(name = "hide_score", type = FieldType.Double)

private Double hideScore;

@Field(name = "analyzer_content",type = FieldType.Text, searchAnalyzer = "ik_max_word", analyzer = "ik_smart")

private String content;

@Field(name = "analyzer_keyword",type = FieldType.Keyword)

private String keyword;

@Field(name = "is_vip_income", type = FieldType.Text)

private String isVipIncome;

@Field(name = "score", type = FieldType.Text)

private String score;

@Field(name = "raters_num", type = FieldType.Text)

private String ratersNum;

@Field(name = "read_num", type = FieldType.Text)

private String readNum;

}

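Service layer

Define the class ESearchService and place it in the service layer.

searchFile: combines several query conditions and options. The search results carry highlight information, which the front end can use to emphasize the matched keywords when displaying results. We use this method for full-text search over article content.

suggestTitle: provides real-time autocomplete suggestions for the search box, quickly listing possible completions for the keyword the user has typed.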

package com.ikkkp.example.service.esImpl;

import com.ikkkp.example.model.po.es.FileSearchEntity;

import org.elasticsearch.common.unit.Fuzziness;

import org.elasticsearch.index.query.BoolQueryBuilder;

import org.elasticsearch.index.query.QueryBuilders;

import org.elasticsearch.search.fetch.subphase.highlight.HighlightBuilder;

import org.elasticsearch.search.suggest.Suggest;

import org.elasticsearch.search.suggest.SuggestBuilder;

import org.elasticsearch.search.suggest.SuggestBuilders;

import org.elasticsearch.search.suggest.completion.CompletionSuggestion;

import org.elasticsearch.search.suggest.completion.CompletionSuggestionBuilder;

import org.springframework.data.domain.PageRequest;

import org.springframework.data.elasticsearch.core.ElasticsearchRestTemplate;

import org.springframework.data.elasticsearch.core.SearchHits;

import org.springframework.data.elasticsearch.core.query.NativeSearchQuery;

import org.springframework.data.elasticsearch.core.query.NativeSearchQueryBuilder;

import org.springframework.stereotype.Service;

import javax.annotation.Resource;

import java.util.*;

@Service

public class ESearchService {

@Resource

private ElasticsearchRestTemplate elasticsearchRestTemplate;

public SearchHits<FileSearchEntity> searchFile(String keywords, Integer page, Integer rows) {

BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery()

.should(QueryBuilders.fuzzyQuery("analyzer_title", keywords).fuzziness(Fuzziness.AUTO))

.should(QueryBuilders.fuzzyQuery("analyzer_content", keywords).fuzziness(Fuzziness.AUTO))

.should(QueryBuilders.fuzzyQuery("analyzer_abstract_content", keywords).fuzziness(Fuzziness.AUTO))

.must(QueryBuilders.multiMatchQuery(keywords,"analyzer_title","analyzer_content","analyzer_abstract_content"))

.must(QueryBuilders.matchQuery("is_approved", "true")); // only approved files may be returned

// Build the query with highlighting

NativeSearchQuery searchQuery = new NativeSearchQueryBuilder()

.withQuery(boolQueryBuilder)

.withHighlightFields(

new HighlightBuilder.Field("analyzer_title"),

new HighlightBuilder.Field("analyzer_abstract_content"),

new HighlightBuilder.Field("analyzer_content"))

.withHighlightBuilder(new HighlightBuilder().preTags("<span class='highlight'>").postTags("</span>"))

.withPageable(PageRequest.of(page - 1, rows)).build();

SearchHits<FileSearchEntity> searchHits = elasticsearchRestTemplate.search(searchQuery, FileSearchEntity.class);

return searchHits;

}

public ArrayList<String> suggestTitle(String keyword,Integer rows) {

return suggest("suggest_title",keyword,rows);

}

public ArrayList<String> suggest(String fieldName, String keyword,Integer rows) {

HashSet<String> returnSet = new LinkedHashSet<>(); // holds the suggestions that were found

// Create the CompletionSuggestionBuilder

CompletionSuggestionBuilder textBuilder = SuggestBuilders.completionSuggestion(fieldName) // field to query

.size(rows) // number of suggestions to return

.skipDuplicates(true); // drop duplicates

// Create the SuggestBuilder and add the completion builder to it

SuggestBuilder suggestBuilder = new SuggestBuilder();

suggestBuilder.addSuggestion("suggest_text", textBuilder)

.setGlobalText(keyword);

// Execute the request

Suggest suggest = elasticsearchRestTemplate.suggest(suggestBuilder, elasticsearchRestTemplate.getIndexCoordinatesFor(FileSearchEntity.class)).getSuggest();

// Extract the results

Suggest.Suggestion<Suggest.Suggestion.Entry<CompletionSuggestion.Entry.Option>> textSuggestion = suggest.getSuggestion("suggest_text");

for (Suggest.Suggestion.Entry<CompletionSuggestion.Entry.Option> entry : textSuggestion.getEntries()) {

List<CompletionSuggestion.Entry.Option> options = entry.getOptions();

for (Suggest.Suggestion.Entry.Option option : options) {

returnSet.add(option.getText().toString());

}

}

return new ArrayList<>(returnSet);

}

}
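For readers who want to see what searchFile roughly sends over the wire, the sketch below assembles a comparable query DSL body as a plain string (JDK only). It is an approximation written for illustration, not the exact JSON that Spring Data Elasticsearch generates; the class and method names are hypothetical, and the escaping helper is deliberately minimal.

```java
class SearchBodySketch {

    // Minimal JSON string escaping for the user-supplied keywords.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            if (c == '"' || c == '\\') {
                sb.append('\\').append(c);
            } else if (c < 0x20) {
                sb.append(String.format("\\u%04x", (int) c));
            } else {
                sb.append(c);
            }
        }
        return sb.toString();
    }

    // One fuzzy clause; kw must already be escaped.
    static String fuzzy(String field, String kw) {
        return "{\"fuzzy\":{\"" + field + "\":{\"value\":\"" + kw + "\",\"fuzziness\":\"AUTO\"}}}";
    }

    // page is 1-based, as in searchFile; Elasticsearch expects a 0-based offset.
    static String searchBody(String keywords, int page, int rows) {
        String kw = escape(keywords);
        int from = (page - 1) * rows;
        return "{"
            + "\"from\":" + from + ",\"size\":" + rows + ","
            + "\"query\":{\"bool\":{"
            + "\"must\":["
            + "{\"multi_match\":{\"query\":\"" + kw + "\",\"fields\":"
            + "[\"analyzer_title\",\"analyzer_content\",\"analyzer_abstract_content\"]}},"
            + "{\"match\":{\"is_approved\":\"true\"}}],"
            + "\"should\":[" + fuzzy("analyzer_title", kw) + ","
            + fuzzy("analyzer_content", kw) + ","
            + fuzzy("analyzer_abstract_content", kw) + "]}},"
            + "\"highlight\":{\"pre_tags\":[\"<span class='highlight'>\"],"
            + "\"post_tags\":[\"</span>\"],"
            + "\"fields\":{\"analyzer_title\":{},\"analyzer_abstract_content\":{},\"analyzer_content\":{}}}"
            + "}";
    }

    public static void main(String[] args) {
        System.out.println(searchBody("spring boot", 1, 10));
    }
}
```

The must clauses decide which documents match at all, while the should clauses only raise the score of documents that also match the fuzzy terms.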

Controller layer

Define the class DocSearchController and place it in the controller layer.

package com.ikkkp.example.controller;

import com.ikkkp.example.model.vo.MsgEntity;

import com.ikkkp.example.model.po.es.FileSearchEntity;

import com.ikkkp.example.service.esImpl.ESearchService;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.elasticsearch.core.SearchHits;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestMethod;

import org.springframework.web.bind.annotation.RequestParam;

import org.springframework.web.bind.annotation.RestController;

import java.util.ArrayList;

@RestController

@Slf4j

@RequestMapping("/docSearchService")

public class DocSearchController {

@Autowired

ESearchService eSearchService;

@RequestMapping(value = "/search",method = RequestMethod.GET)

public MsgEntity<SearchHits<FileSearchEntity>> searchDoc(@RequestParam String keywords, @RequestParam Integer page, @RequestParam Integer rows) {

return new MsgEntity<>("SUCCESS", "1", eSearchService.searchFile(keywords, page, rows));

}

@RequestMapping(value = "/suggest",method = RequestMethod.GET)

public MsgEntity<ArrayList<String>> suggestTitle(@RequestParam String keyword, @RequestParam Integer rows) {

ArrayList<String> suggests = eSearchService.suggestTitle(keyword, rows);

return new MsgEntity<>("SUCCESS", "1", suggests);

}

}
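A client can call the two endpoints with plain GET requests. The sketch below (JDK only) just builds the request URIs and URL-encodes the keyword, which matters for Chinese input or keywords containing spaces. The base URL http://localhost:8080 is an assumption about where the application runs, and the class and method names are hypothetical.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

class DocSearchClientSketch {

    // URI for GET /docSearchService/search with URL-encoded keywords.
    static URI searchUri(String baseUrl, String keywords, int page, int rows) {
        String q = URLEncoder.encode(keywords, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/docSearchService/search?keywords=" + q
                + "&page=" + page + "&rows=" + rows);
    }

    // URI for GET /docSearchService/suggest with a URL-encoded keyword.
    static URI suggestUri(String baseUrl, String keyword, int rows) {
        String q = URLEncoder.encode(keyword, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/docSearchService/suggest?keyword=" + q + "&rows=" + rows);
    }

    public static void main(String[] args) {
        String base = "http://localhost:8080"; // assumed address of the Spring Boot app
        System.out.println(searchUri(base, "spring boot", 1, 10));
        System.out.println(suggestUri(base, "spr", 5));
    }
}
```

Note that page is 1-based here, because searchFile subtracts 1 before building the PageRequest.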

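The Spring Boot configuration is now essentially complete, but a few points need to be made clear:

So far we read data directly from the es store. In practice the data usually has to be imported into es first, and it can come from many kinds of sources. Taking data stored in MySQL as the example, we will use Logstash below to pull the MySQL data into es on a schedule.

First, a short introduction to Logstash: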

Logstash

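A look at Logstash

Logstash is an open-source data collection engine with real-time pipelining capabilities.

It receives data from multiple sources, processes it, and sends the transformed data to a stash, that is, a storage destination.

Logstash can load data in a wide range of formats into many different data stores, not only Elasticsearch.

It can also load data in parallel into other stores, such as MongoDB or Hadoop, or even into AWS.

Data can be stored in files or delivered as streams.

Logstash parses, transforms, and filters data. It can also derive structure from unstructured data, anonymize personal data, perform geo-location lookups, and more.

A Logstash pipeline has two required elements, input and output, and one optional element, filter.

Input plugins consume data from a source, filter plugins transform it, and output plugins write it to one or more destinations.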
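The input, filter, output flow can be pictured with a small conceptual model in Java (JDK only). This is not Logstash code or its API; the class name, the event shape (a map of field names to values), and the trimming filter are all assumptions chosen only to illustrate the three stages.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

class PipelineSketch {

    // Pulls every event from the input, passes it through the filter,
    // and hands the result to the output.
    static void run(Supplier<List<Map<String, Object>>> input,
                    Function<Map<String, Object>, Map<String, Object>> filter,
                    Consumer<Map<String, Object>> output) {
        for (Map<String, Object> event : input.get()) {
            output.accept(filter.apply(event));
        }
    }

    public static void main(String[] args) {
        // Input stage: one hypothetical row, as if read from a database.
        Map<String, Object> row = new HashMap<>();
        row.put("title", "  hello  ");
        List<Map<String, Object>> rows = List.of(row);

        // Output stage: collect events in memory instead of writing to a store.
        List<Map<String, Object>> sink = new ArrayList<>();

        run(() -> rows,
            event -> {
                // Filter stage: copy the event and trim the title field.
                Map<String, Object> copy = new HashMap<>(event);
                copy.put("title", copy.get("title").toString().trim());
                return copy;
            },
            sink::add);

        System.out.println(sink);
    }
}
```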

Download it from the official site: Download Logstash Free | Get Started Now | Elastic

It works out of the box, which is a real treat!

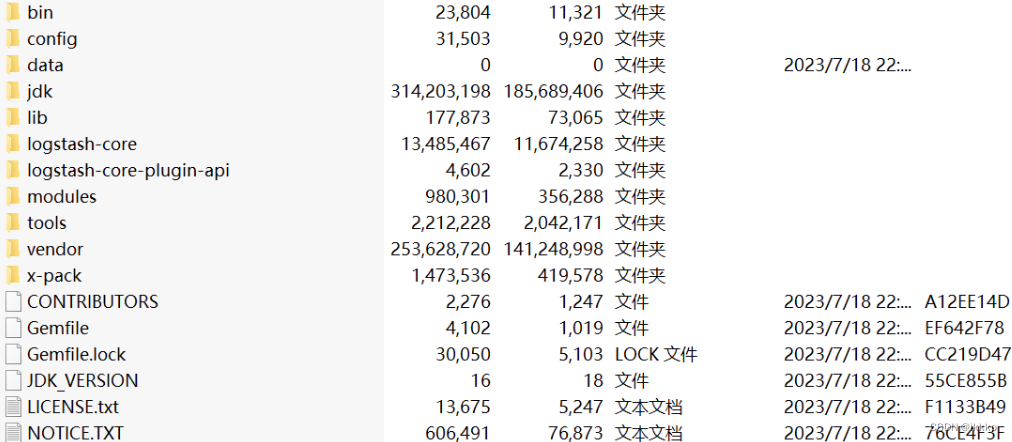

Main directory structure

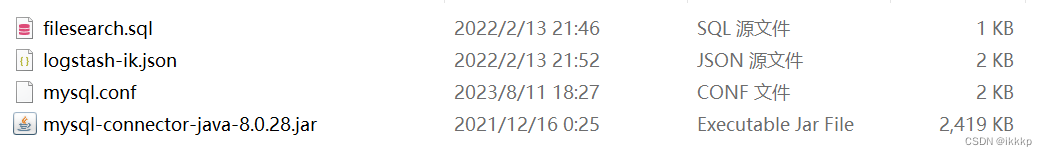

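Create a mysqletc folder under the main directory.

filesearch.sql needs little explanation: it is the SQL statement used to collect data from MySQL automatically.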

SELECT

file.file_id,

file.title as analyzer_title,

file.title as suggest_title,

file.abstract_content as analyzer_abstract_content,

file.size,

file.file_type,

file.upload_username,

file.preview_picture_object_name,

file.payment_amount,

file.payment_method,

file.is_approved,

file.hide_score,

file_search.content as analyzer_content,

file_search.keyword as suggest_keyword,

file_search.keyword as analyzer_keyword,

file_extra.is_vip_income,

file_extra.score,

file_extra.raters_num,

file_extra.read_num

FROM

file,

file_search,

file_extra

WHERE

file.file_id = file_search.file_id AND

file.file_id = file_extra.file_id

mysql.conf is the configuration file that Logstash executes.

input {

stdin {

}

jdbc {

# MySQL connection string; yourdatabase is the database name

jdbc_connection_string => "jdbc:mysql://localhost:3306/yourdatabase"

# Username and password

jdbc_user => "root"

jdbc_password => "password"

# JDBC driver library

jdbc_driver_library => "../mysqletc/mysql-connector-java-8.0.28.jar"

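# Driver class name (Connector/J 8.x uses com.mysql.cj.jdbc.Driver; com.mysql.jdbc.Driver is deprecated)

jdbc_driver_class => "com.mysql.cj.jdbc.Driver"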

jdbc_paging_enabled => "true"

jdbc_page_size => "500"

# Path and name of the SQL file to execute

statement_filepath => "../mysqletc/filesearch.sql"

# Polling schedule in cron syntax; fields from left to right: minute, hour, day of month, month, day of week. All * means run every minute

schedule => "* * * * *"

# Index type

type => "_doc"

}

}

filter {

json {

source => "message"

remove_field => ["message"]

}

date {

match => ["timestamp","dd/MM/yyyy:HH:mm:ss Z"]

}

}

output {

elasticsearch {

hosts => ["localhost:9200"]

index => "file_search"

document_type => "_doc"

document_id => "%{file_id}"

template_overwrite => true

template => "../mysqletc/logstash-ik.json"

}

stdout {

codec => json_lines

}

}

logstash-ik.json is included below. This JSON defines an Elasticsearch index template; in this example it sets the mappings and settings of the Elasticsearch index. It is very important!

{

"template": "*",

"version": 50001,

"settings": {

"index.refresh_interval": "5s"

},

"mappings": {

"dynamic_templates": [

{

"suggest_fields": {

"match":"suggest_*",

"match_mapping_type": "string",

"mapping": {

"type": "completion",

"norms": false,

"analyzer": "ik_max_word"

}

}

},{

"analyzer_fields": {

"match":"analyzer_*",

"match_mapping_type": "string",

"mapping": {

"type": "text",

"norms": false,

"analyzer": "ik_max_word",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

}

}

},{

"string_fields": {

"match": "*",

"match_mapping_type": "string",

"mapping": {

"type": "text",

"norms": false

}

}

}

],

"properties": {

"@timestamp": {

"type": "date"

},

"@version": {

"type": "keyword"

}

}

}

}

Run the batch script from the bin directory

Finally, run the batch script: K:\Data\elasticsearch-logstash\bin>logstash -f ../mysqletc/mysql.conf

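Because of the schedule configured earlier

# Polling schedule in cron syntax; fields from left to right: minute, hour, day of month, month, day of week. All * means run every minute

the command line now shows the MySQL data being imported into es at each scheduled run.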
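As a reading aid for the schedule value, the sketch below (JDK only) labels the fields of a standard five-field cron expression. It only handles the five-field form and does not validate the values; the class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class CronFieldsSketch {

    // Field names of a five-field cron expression, from left to right.
    static final String[] NAMES = {"minute", "hour", "day of month", "month", "day of week"};

    // Splits the expression on whitespace and pairs each part with its field name.
    static Map<String, String> describe(String schedule) {
        String[] parts = schedule.trim().split("\\s+");
        if (parts.length != NAMES.length) {
            throw new IllegalArgumentException("expected 5 fields: " + schedule);
        }
        Map<String, String> fields = new LinkedHashMap<>();
        for (int i = 0; i < NAMES.length; i++) {
            fields.put(NAMES[i], parts[i]);
        }
        return fields;
    }

    public static void main(String[] args) {
        // "* * * * *" matches every minute; "*/5 * * * *" would match every fifth minute.
        System.out.println(describe("* * * * *"));
        System.out.println(describe("*/5 * * * *"));
    }
}
```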

Note: the path of the directory where Logstash is stored must not contain Chinese characters.