问题一

# /opt/kube/bin/kubectl get nodes

E0221 11:11:16.575740 23693 memcache.go:255] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0221 11:11:16.582180 23693 memcache.go:106] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0221 11:11:16.584653 23693 memcache.go:106] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0221 11:11:16.587073 23693 memcache.go:106] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

NAME STATUS ROLES AGE VERSION

master01 Ready,SchedulingDisabled master 49m v1.26.1

master02 Ready,SchedulingDisabled master 49m v1.26.1

master03 Ready,SchedulingDisabled master 49m v1.26.1

worker01 Ready node 46m v1.26.1

worker02 Ready node 46m v1.26.1

worker03 Ready node 46m v1.26.1解决:

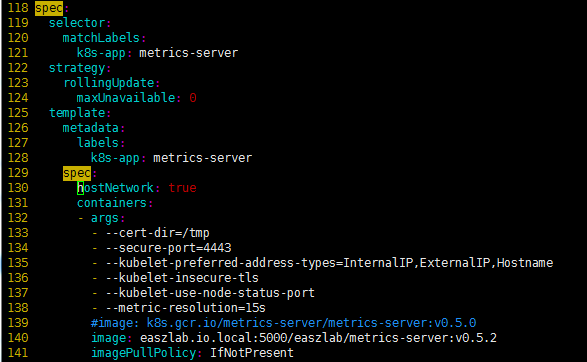

修改/etc/kubeasz/clusters/k8s-01/yml/metrics-server.yaml 文件

118 spec:

119 selector:

120 matchLabels:

121 k8s-app: metrics-server

122 strategy:

123 rollingUpdate:

124 maxUnavailable: 0

125 template:

126 metadata:

127 labels:

128 k8s-app: metrics-server

129 spec:

130 hostNetwork: true #####添加本行内容

131 containers:

132 - args:

133 - --cert-dir=/tmp

134 - --secure-port=4443

/opt/kube/bin/kubectl apply -f /etc/kubeasz/clusters/k8s-01/yml/metrics-server.yaml

[root@master01 yml]# /opt/kube/bin/kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01 Ready,SchedulingDisabled master 49m v1.26.1

master02 Ready,SchedulingDisabled master 49m v1.26.1

master03 Ready,SchedulingDisabled master 49m v1.26.1

worker01 Ready node 46m v1.26.1

worker02 Ready node 46m v1.26.1

worker03 Ready node 46m v1.26.1

问题二、

FAILED - RETRYING: 轮询等待calico-node 运行 (15 retries left). FAILED - RETR

helm pre-upgrade hooks failed: timed out waiting for the condition错误日志:

Kubelet Unable to attach or mount volumes – timed out waiting for the condition

解决原因:k8s,docker,cilium等很多功能、特性需要较新的linux内核支持,所以有必要在集群部署前对内核进行升级

解决办法:升级

Centos7事例:

# 载入公钥

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

# 安装ELRepo

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

# 载入elrepo-kernel元数据

yum --disablerepo=\* --enablerepo=elrepo-kernel repolist

# 查看可用的rpm包

yum --disablerepo=\* --enablerepo=elrepo-kernel list kernel*

# 安装长期支持版本的kernel

yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt.x86_64

# 删除旧版本工具包

yum remove kernel-tools-libs.x86_64 kernel-tools.x86_64 -y

# 安装新版本工具包

yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt-tools.x86_64

#查看默认启动顺序

awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg

CentOS Linux (4.4.183-1.el7.elrepo.x86_64) 7 (Core)

CentOS Linux (3.10.0-327.10.1.el7.x86_64) 7 (Core)

CentOS Linux (0-rescue-c52097a1078c403da03b8eddeac5080b) 7 (Core)

#默认启动的顺序是从0开始,新内核是从头插入(目前位置在0,而4.4.4的是在1),所以需要选择0。

grub2-set-default 0

#重启并检查

reboot问题三、提示没有kubectl(其它相关命令)命令

添加命令的环境变量

# vim /etc/profile

PATH=$PATH:/opt/kube/bin

export PATH

# source /etc/profile

问题四、安装calico网络中的一些问题

#安装calico网络

如果需要安装calico,请在clusters/xxxx/hosts文件中设置变量 CLUSTER_NETWORK="calico"

在clusters/xxxx/config.yml

# [calico]设置calico 是否使用route reflectors

# 如果集群规模超过50个节点,建议启用该特性

CALICO_RR_ENABLED: true

# CALICO_RR_NODES 配置route reflectors的节点,如果未设置默认使用集群master节点

# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]

CALICO_RR_NODES: ["10.2.1.190", "10.2.1.191", "10.2.1.192", "10.2.1.193", "10.2.1.194", "10.2.1.195"]

错误

TASK [calico : node label] *******************************************************************************************************************************

fatal: [10.2.1.190]: FAILED! => {"changed": true, "cmd": "for ip in 10.2.1.190 10.2.1.191 10.2.1.192 10.2.1.193 10.2.1.194 10.2.1.195 ;do /etc/kubeasz/bin/kubectl label node \"$ip\" route-reflector=true --overwrite; done", "delta": "0:00:00.479707", "end": "2023-02-21 03:47:09.604375", "msg": "non-zero return code", "rc": 1, "start": "2023-02-21 03:47:09.124668", "stderr": "Error from server (NotFound): nodes \"10.2.1.190\" not found\nError from server (NotFound): nodes \"10.2.1.191\" not found\nError from server (NotFound): nodes \"10.2.1.192\" not found\nError from server (NotFound): nodes \"10.2.1.193\" not found\nError from server (NotFound): nodes \"10.2.1.194\" not found\nError from server (NotFound): nodes \"10.2.1.195\" not found", "stderr_lines": ["Error from server (NotFound): nodes \"10.2.1.190\" not found", "Error from server (NotFound): nodes \"10.2.1.191\" not found", "Error from server (NotFound): nodes \"10.2.1.192\" not found", "Error from server (NotFound): nodes \"10.2.1.193\" not found", "Error from server (NotFound): nodes \"10.2.1.194\" not found", "Error from server (NotFound): nodes \"10.2.1.195\" not found"], "stdout": "", "stdout_lines": []}

解决:查看k8s中node的name如果为主机名,需要在RR的nodes中添加主机名

# CALICO_RR_NODES 配置route reflectors的节点,如果未设置默认使用集群master节点

# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]

CALICO_RR_NODES: ["master01", "master02", "master03", "worker01", "worker02", "worker03"]

#执行安装

#dk ezctl setup k8s-01 06

[root@master01 kubeasz]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01 Ready,SchedulingDisabled master 3h48m v1.26.1

master02 Ready,SchedulingDisabled master 3h48m v1.26.1

master03 Ready,SchedulingDisabled master 3h48m v1.26.1

worker01 Ready node 3h45m v1.26.1

worker02 Ready node 3h45m v1.26.1

worker03 Ready node 3h45m v1.26.1

[root@master01 kubeasz]# kubectl get pod -n kube-system -o wide | grep calico

calico-kube-controllers-7bbb6b796b-6cx6m 1/1 Running 1 (3h23m ago) 3h43m 10.2.1.195 worker03 <none> <none>

calico-node-2h65h 1/1 Running 0 3h43m 10.2.1.193 worker01 <none> <none>

calico-node-bxgdz 1/1 Running 0 3h43m 10.2.1.190 master01 <none> <none>

calico-node-d5mgg 1/1 Running 0 3h43m 10.2.1.194 worker02 <none> <none>

calico-node-hh2s4 1/1 Running 0 3h43m 10.2.1.195 worker03 <none> <none>

calico-node-l55rc 1/1 Running 0 3h43m 10.2.1.192 master03 <none> <none>

calico-node-vw9cr 1/1 Running 0 3h43m 10.2.1.191 master02 <none> <none>

[root@master01 kubeasz]# calicoctl get node -o wide

NAME ASN IPV4 IPV6

master01 (64512) 10.2.1.190/23

master02 (64512) 10.2.1.191/23

master03 (64512) 10.2.1.192/23

worker01 (64512) 10.2.1.193/23

worker02 (64512) 10.2.1.194/23

worker03 (64512) 10.2.1.195/23

可以在集群中选择1个或多个节点作为 rr 节点,这里先选择节点:k8s401

calicoctl patch node master01 -p '{"spec": {"bgp": {"routeReflectorClusterID": "244.0.0.1"}}}'

#配置node label

calicoctl patch node master01 -p '{"metadata": {"labels": {"route-reflector": "true"}}}'

[root@master01 BGP]# vim bgp.yml

kind: BGPPeer

apiVersion: projectcalico.org/v3

metadata:

name: peer-with-route-reflectors

spec:

nodeSelector: all()

peerSelector: route-reflector == 'true'

[root@master01 BGP]# calicoctl create -f bgp.yml

Successfully created 1 'BGPPeer' resource(s)

验证增加 rr 之后的bgp 连接情况

dk ansible -i /etc/kubeasz/clusters/k8s-01/hosts all -m shell -a '/opt/kube/bin/calicoctl node status'

依次添加其它的master与worker节点添加到rr

问题五、kubectl get nodes 获取节点时报错

[root@master02 ~]# kubectl get nodes

E0221 14:51:52.209187 17967 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0221 14:51:52.210167 17967 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0221 14:51:52.212104 17967 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0221 14:51:52.213813 17967 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0221 14:51:52.215677 17967 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

The connection to the server localhost:8080 was refused - did you specify the right host or port?

错误解决:

1)token 过期

#kubeadm token generate #生成token

7r3l16.5yzfksso5ty2zzie #下面这条命令中会用到该结果

# kubeadm token create 7r3l16.5yzfksso5ty2zzie --print-join-command --ttl=0 #根据token输出添加命令

W0604 10:35:00.523781 14568 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0604 10:35:00.523827 14568 validation.go:28] Cannot validate kubelet config - no validator is available

kubeadm join 192.168.254.100:6443 --token 7r3l16.5yzfksso5ty2zzie --discovery-token-ca-cert-hash sha256:56281a8be264fa334bb98cac5206aa190527a03180c9f397c253ece41d997e8a

2)k8s api server不可达

[root@master01 ~]#setenforce 0

[root@master01 ~]#sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

[root@master01 ~]#systemctl disable firewalld --now

3)node节点与master节点的时间不同步

ntpdate time.ntp.org4)k8s初始化后没有创建必要的运行文件

[root@worker01 ~]# kubectl get nodes

E0221 14:55:32.706287 6388 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0221 14:55:32.706869 6388 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0221 14:55:32.708772 6388 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0221 14:55:32.710750 6388 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

E0221 14:55:32.712341 6388 memcache.go:238] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@worker01 ~]# cd .kube/

[root@worker01 .kube]# ll -a

total 0

drwxr-xr-x 2 root root 6 Feb 21 10:15 .

dr-xr-x---. 6 root root 222 Feb 21 11:28 ..

####从正常节点(dev)复制kube这个文件到其它节点

[root@worker01 .kube]# ll -a

total 8

drwxr-xr-x 3 root root 33 Feb 21 14:55 .

dr-xr-x---. 6 root root 222 Feb 21 11:28 ..

drwxr-x--- 4 root root 35 Feb 21 14:55 cache

-r-------- 1 root root 6198 Feb 21 14:55 config

[root@worker01 .kube]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01 Ready,SchedulingDisabled master 4h34m v1.26.1

master02 Ready,SchedulingDisabled master 4h34m v1.26.1

master03 Ready,SchedulingDisabled master 4h34m v1.26.1

worker01 Ready node 4h30m v1.26.1

worker02 Ready node 4h30m v1.26.1

worker03 Ready node 4h30m v1.26.1

问题五、安装完calico网络后,查看node只有一台

[root@master-02 ~]# calicoctl get node -o wide

NAME ASN IPV4 IPV6

localhost.localdomain (64512) 10.2.1.195/23

[root@master-02 ~]# calicoctl node status

Calico process is running.

IPv4 BGP status

No IPv4 peers found.

IPv6 BGP status

No IPv6 peers found.

问题六、Non-critical error occurred during resource retrieval: pods is forbidden

Non-critical error occurred during resource retrieval: namespaces is forbidden: User “system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard” cannot list resource “namespaces” in API group “” at the cluster scope解决:(删除,重构)

kubectl delete -f admin-user-sa-rbac.yaml

kubectl delete -f read-user-sa-rbac.yaml

kubectl delete -f kubernetes-dashboard.yaml

kubectl apply -f admin-user-sa-rbac.yaml

kubectl apply -f kubernetes-dashboard.yaml

kubectl apply -f read-user-sa-rbac.yaml

#获取token

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')