作用:提取这种不知道有多少页的,数据的链接有规律的,

优点:可以用正则表达式,xpath,css等去获取有规则的url进行解析

简单Demo:

from scrapy.linkextractors import LinkExtractor

link = LinkExtractor(allow=r'book/1188_\d+.html') # \d表示数字 \d+:表示多个数字

# xpath: link1 = LinkExtrcator(restrict_xpaths=r'//div[@id=su]')

print(link.extract_links(response))实际案例

1.创建项目

# 创建项目

scrapy staratproject dushuproject

# 跳转到spiders路径

cd dushuproject/dushuproject/spiders

# 创建爬虫类,项目

scrapy genspider -t crawl read www.dushu.com

# 设置配置 settings 创建实体:items 配置管道piplines# allow:正则表达式 匹配链接url路径 # follow:限制页数,如果是True就是不限制,每一次循环的界面都找,相当于找到所有匹配的,而False:就匹配第一页response中的匹配链接来解析

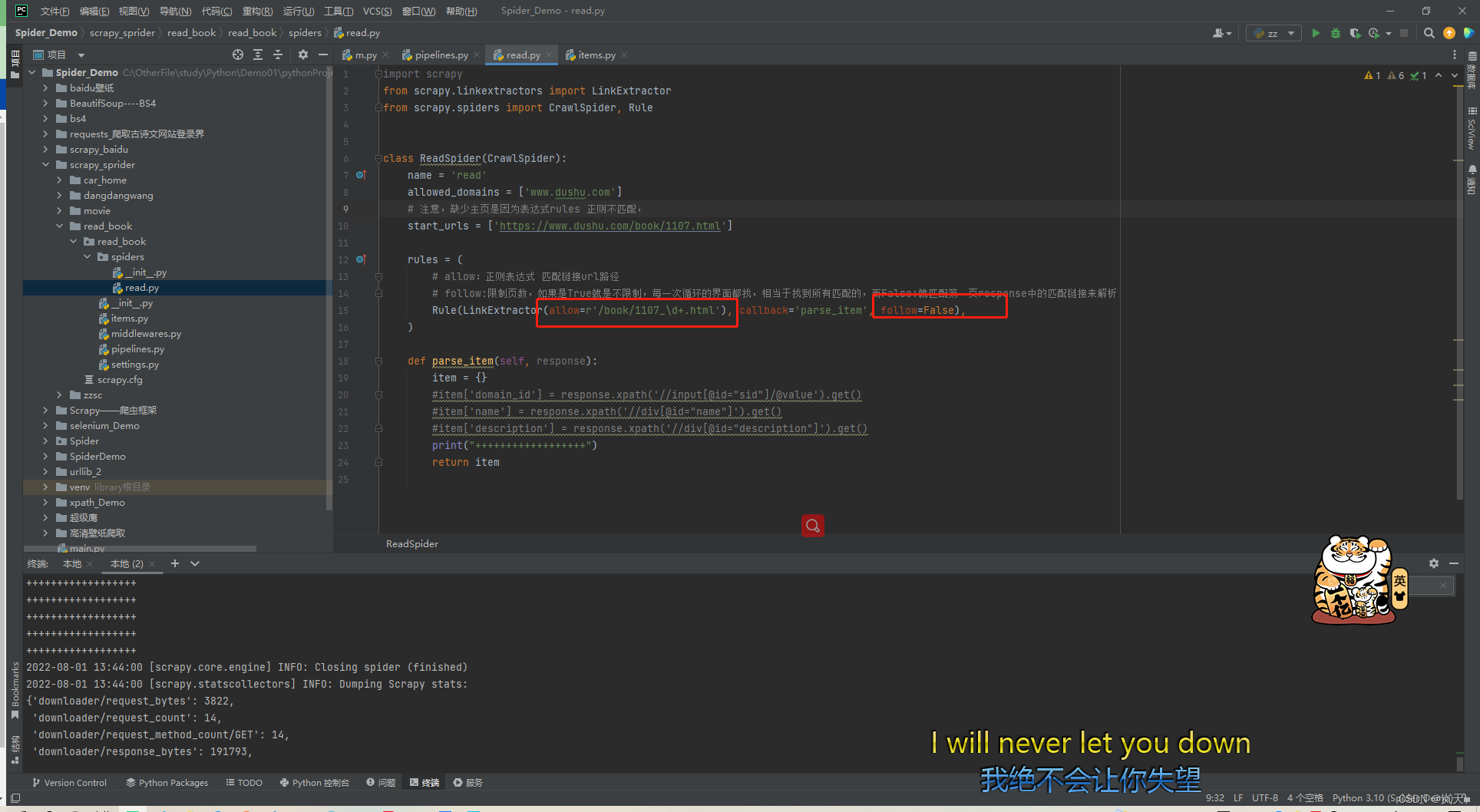

read.py

import scrapy

from scrapy.linkextractors import LinkExtractor

from scrapy.spiders import CrawlSpider, Rule

from read_book.items import ReadBookItem

class ReadSpider(CrawlSpider):

name = 'read'

allowed_domains = ['www.dushu.com']

# 注意,缺少主页是因为表达式rules 正则不匹配,

start_urls = ['https://www.dushu.com/book/1107_1.html']

rules = (

# allow:正则表达式 匹配链接url路径

# follow:限制页数,如果是True就是不限制,每一次循环的界面都找,相当于找到所有匹配的,而False:就匹配第一页response中的匹配链接来解析

Rule(LinkExtractor(allow=r'/book/1107_\d+.html'), callback='parse_item', follow=False),

)

def parse_item(self, response):

item = {}

# print("++++++++++++++++++") #//div[@class='bookslist']/ul/li//div//img/@src data-original

# url_list = response.xpath("//div[@class='bookslist']/ul/li//div//img/@data-original").extract()

# name_list = response.xpath("//div[@class='bookslist']/ul/li//div//img/@alt").extract()

img_list = response.xpath("//div[@class='bookslist']/ul/li//div//img")

for img in img_list:

src = img.xpath("./@data-original").extract_first()

if src is None:

src = img.xpath("./@src").extract_first()

name = img.xpath("./@alt").extract_first()

book = ReadBookItem(name=name,src=src)

yield book

settings.py 开启写入mysql管道 配置mysql信息

# Scrapy settings for read_book project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'read_book'

SPIDER_MODULES = ['read_book.spiders']

NEWSPIDER_MODULE = 'read_book.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'read_book (+http://www.yourdomain.com)'

# Obey robots.txt rules

# ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'read_book.middlewares.ReadBookSpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'read_book.middlewares.ReadBookDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See https://docs.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'read_book.pipelines.ReadBookPipeline': 300,

'read_book.pipelines.MysqlPipline': 301,

}

#Mysql配置

DB_HOST = "127.0.0.1"

DB_PORT = 3306

DB_USER = "root"

DB_PASSWORD = "123456"

DB_CHARSET = "utf8"

DB_NAME = "spider"

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

pipeline.py

import pymysql # 导入mysql的依赖包 from scrapy.utils.project import get_project_settings #导入可以获取settings.py中配置

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

from itemadapter import ItemAdapter

import pymysql

from scrapy.utils.project import get_project_settings

class ReadBookPipeline:

def open_spider(self,spider):

print("爬虫开始。。。。。。。。。。。。。",spider)

self.fp = open("book.json","w",encoding="utf-8")

def process_item(self, item, spider):

self.fp.write(str(item))

return item

def close_spider(self,spider):

print("爬虫结束。。。。。。。。。。",spider)

self.fp.close()

# 存入mysql

class MysqlPipline:

def open_spider(self,spider):

settings = get_project_settings()

self.host = user = settings['DB_HOST']

self.port = user = settings['DB_PORT']

self.user = user = settings['DB_USER']

self.password = user = settings['DB_PASSWORD']

self.name = user = settings['DB_NAME']

self.charset = user = settings['DB_CHARSET']

# self.conn = pymysql.connect(host=host, prot=prot, user =user, password=password, charset=charset, database=name)

self.conn = pymysql.connect(host=self.host, port=self.port, user=self.user, password=self.password, charset=self.charset, database=self.name)

self.cursor = self.conn.cursor()

def process_item(self, item, spider):

# Mysql配置

# DB_HOST = "127.0.0.1"

# DB_PORT = 3306

# DB_USER = "root"

# DB_PASSWORD = "123456"

# DB_CHARSET = "utf8"

# DB_NAME = "spider"

sql = "insert into book(src,name) values ('{}','{}')".format(item['src'],item['name'])

self.cursor.execute(sql)

self.conn.commit()

return item

def close_spider(self,spider):

self.conn.close()

self.cursor.close()

items.py

import scrapy

class ReadBookItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

# pass

# url name

name = scrapy.Field()

src = scrapy.Field()