Chapter 7

7.1 Reading and Writing Strings and Text Files

7.1.1 Creating a String

string = "Hello World!"

7.1.2 Printing a String

print(string)

print("1234")

7.1.3 Reading a String from a Text File

text_1 = readtext("./nlp/data/textbooks/grade0/text0.txt")

print(text_1)
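
The helper `readtext` is supplied by the course environment and its implementation is not shown here. As a rough sketch, assuming it simply returns the whole file as one UTF-8 string, the same effect can be had with Python's built-in `open`. The name `read_text_file` and the temporary demo file are hypothetical and exist only for illustration.

```python
import os
import tempfile


def read_text_file(path, encoding="utf-8"):
    """Return the whole file at `path` as one string.

    Hypothetical stand-in for the course helper `readtext`;
    the UTF-8 default is an assumption.
    """
    with open(path, "r", encoding=encoding) as f:
        return f.read()


# Demo on a temporary file so the sketch runs without the course data.
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, "demo.txt")
    with open(demo_path, "w", encoding="utf-8") as f:
        f.write("Hello World!\nThis is a test.")
    demo_text = read_text_file(demo_path)

print(demo_text)
```

Using `with` closes the file automatically, even if reading raises an error.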

7.1.4 Word Segmentation

text = readtext("./nlp/data/textbooks/grade0/text0.txt")

words = splitwords(text)
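
How `splitwords` tokenizes text is not specified in this listing. A minimal sketch, assuming English text, lower-casing, and punctuation removal, could use a regular expression. The function name `split_words_sketch` is hypothetical, and the real helper may treat case, digits, or apostrophes differently.

```python
import re


def split_words_sketch(text):
    """Lower-case the text and return its runs of letters and apostrophes.

    Hypothetical approximation of `splitwords`; the tokenization
    rules are assumptions.
    """
    return re.findall(r"[a-z']+", text.lower())


demo_words = split_words_sketch("Hello World! It's a nice day.")
print(demo_words)
```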

7.1.5 Lists and Common List Operations

l1 = [1, 3, 5, 7, 9, 11]

l2 = [1, 'a', ['3.14', 1.5], 'bc']

print(l1[2])

print(l2[3])

l1[1] = 20

print(l1)

l2.append('a')

print(l2)

print(len(l1))

print(len(l2))

for i in l1:
    print(i)

7.2 Dictionaries and Word-Frequency Counting

7.2.1 Creating a Dictionary

word_freq_dict = {'you': 0.098, 'the': 0.020, 'friend': 0.059}

print(word_freq_dict)

7.2.2 Looking Up a Key

print(word_freq_dict['you'])

7.2.3 Inserting and Modifying Key-Value Pairs

word_freq_dict['you'] = 0.088

print(word_freq_dict)

word_freq_dict['her'] = 0.0392

print(word_freq_dict)

7.2.4 Checking Whether a Key Is in the Dictionary

print('you' in word_freq_dict)

print('he' in word_freq_dict)

7.2.5 Iterating over a Dictionary

for key, value in word_freq_dict.items():
    print(key + ':' + str(value))

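7.2.6 Counting Word Frequencies with a Dictionary

def word_freq(words):
    # Count how many times each word occurs.
    freq_dict = {}
    for word in words:
        if word in freq_dict:
            freq_dict[word] += 1
        else:
            freq_dict[word] = 1
    # Convert the counts into relative frequencies.
    for word, freq in freq_dict.items():
        freq_dict[word] = freq / len(words)
    return freq_dict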

text = readtext("nlp/data/textbooks/grade0/text0.txt")

words = splitwords(text)

freq_dict = word_freq(words)

print(freq_dict)
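
For comparison, the same relative-frequency computation can be written with `collections.Counter` from the standard library. This is only an alternative sketch: the name `word_freq_counter` and the choice to return an empty dictionary for an empty word list are assumptions.

```python
from collections import Counter


def word_freq_counter(words):
    """Map each distinct word to its relative frequency in `words`."""
    total = len(words)
    if total == 0:
        # Assumption: an empty input yields an empty dictionary.
        return {}
    counts = Counter(words)
    return {word: count / total for word, count in counts.items()}


demo_freq = word_freq_counter(["you", "the", "you", "friend"])
print(demo_freq)
```

`Counter` does the counting in one pass, so the explicit `if word in freq_dict` branch is no longer needed.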

7.3 Feature Extraction from Textbook Texts

7.3.1 Reading the Textbook Data

textbooks_data = load_textbooks_data()

print(len(textbooks_data))

print(textbooks_data[0:4])

7.3.2 Building the New-Word List

def get_diff_level(path_grade):
    # Map each word to the lowest grade whose textbook contains it.
    diff_level = {}
    for path, grade in path_grade:
        text = readtext(path)
        words = splitwords(text)
        grade = int(grade)
        for word in words:
            if word in diff_level and diff_level[word] <= grade:
                continue
            else:
                diff_level[word] = grade
    return diff_level

diff_level = get_diff_level(textbooks_data)

print(diff_level)
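
To see the "keep the lowest grade" rule in isolation, here is a small in-memory sketch that needs no files. The function `build_diff_level`, its `(words, grade)` input format, and the toy data are all hypothetical and chosen only for the demonstration.

```python
def build_diff_level(words_by_grade):
    """Map each word to the lowest grade in which it appears.

    `words_by_grade` is a list of (words, grade) pairs; this in-memory
    input format is an assumption made for the demo.
    """
    diff_level = {}
    for words, grade in words_by_grade:
        for word in words:
            # Keep the earliest (smallest) grade seen for this word.
            if word not in diff_level or grade < diff_level[word]:
                diff_level[word] = grade
    return diff_level


demo_level = build_diff_level([
    (["cat", "friend"], 3),
    (["cat", "dog"], 1),
    (["friend", "galaxy"], 5),
])
print(demo_level)
```

Because the smaller grade always wins, the result does not depend on the order in which the texts are processed.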

7.3.3 Saving and Loading the New-Word List

save_private(diff_level, "./data/tmp/diff_level")

diff_level = load_private("./data/tmp/diff_level")

print(diff_level)
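
The storage format behind `save_private` and `load_private` is not documented in this listing. One plausible way to persist a Python dictionary, shown purely as an assumption, is the standard `pickle` module. The helper names `save_object` and `load_object` are hypothetical.

```python
import os
import pickle
import tempfile


def save_object(obj, path):
    """Serialize `obj` to `path` with pickle (assumed format)."""
    with open(path, "wb") as f:
        pickle.dump(obj, f)


def load_object(path):
    """Load an object previously written by `save_object`."""
    with open(path, "rb") as f:
        return pickle.load(f)


# Round trip through a temporary directory so nothing is left on disk.
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, "diff_level.pkl")
    original = {"cat": 1, "friend": 3}
    save_object(original, demo_path)
    restored = load_object(demo_path)

print(restored == original)
```

Pickle files should only be loaded from sources you trust, since unpickling can execute arbitrary code.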

7.3.4 Word Segmentation

text = readtext("nlp/data/reading/train/text0.txt")

print(text)

words = splitwords(text)

print(len(words))

7.3.5 Counting Occurrences of Each Grade's Vocabulary in a Text

grade_freq = [0,0,0,0,0,0,0,0,0,0,0,0]

l1 = [0]*3

l2 = ['a']*5

print(l1)

print(l2)

grade_freq = [0]*12

for word in words:
    if word in diff_level:
        grade = diff_level[word]
        grade_freq[grade] += 1
    else:
        continue

print(grade_freq)

7.3.6 Computing the Frequency of Each Grade's Vocabulary in a Text

num = sum(grade_freq)

print(num)

for i in range(12):
    grade_freq[i] /= num

print(grade_freq)

7.3.7 Extracting Text Features

def extract_features(path, diff_level):
    text = readtext(path)
    words = splitwords(text)
    # Count how many words of the text belong to each grade.
    grade_freq = [0]*12
    for word in words:
        if word in diff_level:
            grade = diff_level[word]
            grade_freq[grade] += 1
        else:
            continue
    # Normalize the counts so that the features sum to 1.
    num = sum(grade_freq)
    for i in range(12):
        grade_freq[i] /= num
    return grade_freq

grade_freq = extract_features("nlp/data/reading/train/text1.txt", diff_level)

print(grade_freq)
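
The feature vector is easier to check on a toy input. The sketch below works on a word list instead of a file path and returns an all-zero vector when none of the words is in the new-word list. The name `grade_features`, the `num_grades` parameter, and the zero-vector fallback are assumptions made for illustration.

```python
def grade_features(words, diff_level, num_grades=12):
    """Return the share of known words that belong to each grade.

    Words missing from `diff_level` are ignored. If no word is known,
    an all-zero vector is returned (an assumed fallback).
    """
    counts = [0] * num_grades
    for word in words:
        grade = diff_level.get(word)
        if grade is not None and 0 <= grade < num_grades:
            counts[grade] += 1
    total = sum(counts)
    if total == 0:
        return [0.0] * num_grades
    return [count / total for count in counts]


demo_level = {"cat": 1, "dog": 1, "friend": 3}
demo_feats = grade_features(
    ["cat", "dog", "friend", "cat", "unknown"], demo_level, num_grades=4
)
print(demo_feats)
```

Three of the four known words are grade-1 words and one is a grade-3 word, so the weight sits at indices 1 and 3.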

7.4 Classifying Text Difficulty

7.4.1 Loading the New-Word List

diff_level = load_private("./data/tmp/diff_level")

7.4.2 Loading the Datasets

train_data = load_train_data()

print(len(train_data))

print(train_data[0:5])

test_data = load_test_data()

print(len(test_data))

print(test_data[0:5])

7.4.3 Extracting and Saving Features and Difficulty Levels

features = []

labels = []

for path, label in train_data:
    features.append(extract_features(path, diff_level))
    labels.append(int(label))

def get_feats_labels(data, diff_level):
    # The same loop as above, wrapped in a function so it can be reused for any dataset.
    features = []
    labels = []
    for path, label in data:
        features.append(extract_features(path, diff_level))
        labels.append(int(label))
    return features, labels

train_feats, train_labels = get_feats_labels(train_data, diff_level)

print(train_feats[0:5])

print(train_labels[0:5])

save_private([train_feats, train_labels], "./data/tmp/train_features")

train_feats, train_labels = load_private("./data/tmp/train_features")

print(train_feats[0:5])

print(train_labels[0:5])

test_feats, test_labels = get_feats_labels(test_data, diff_level)

save_private([test_feats, test_labels], "./data/tmp/test_features")

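7.4.4 Saving Features and Difficulty Levels for the Binary-Classification Data

train_data = load_binary_train_data("primary", "junior")

print(len(train_data))

print(train_data[0:5])

# Recompute the training features from the binary data before saving them.
train_feats, train_labels = get_feats_labels(train_data, diff_level)

test_data = load_binary_test_data("primary", "junior")

test_feats, test_labels = get_feats_labels(test_data, diff_level)

save_private([train_feats, train_labels], "./data/tmp/pri_jun_train_features")

save_private([test_feats, test_labels], "./data/tmp/pri_jun_test_features")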

7.4.5 Classifying Text Difficulty with a Linear Classifier

model = linear_classifier()

model.train(train_feats, train_labels)

pred_y = model.pred(test_feats)

acc = accuracy(pred_y, test_labels)

print(acc)
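
The listing does not say which algorithm `linear_classifier` uses or how `accuracy` is computed. As an illustration only, the sketch below swaps in a plain perceptron for two classes (labels 0 and 1) and defines accuracy as the fraction of matching predictions. The class `PerceptronSketch`, the function `accuracy_sketch`, and the toy data are all hypothetical.

```python
class PerceptronSketch:
    """Binary linear classifier trained with the perceptron rule.

    A stand-in for `linear_classifier`, whose real algorithm is unknown.
    """

    def __init__(self, epochs=20, learning_rate=1.0):
        self.epochs = epochs
        self.learning_rate = learning_rate
        self.weights = None
        self.bias = 0.0

    def _score(self, x):
        return sum(w * v for w, v in zip(self.weights, x)) + self.bias

    def train(self, feats, labels):
        self.weights = [0.0] * len(feats[0])
        self.bias = 0.0
        for _ in range(self.epochs):
            for x, y in zip(feats, labels):
                target = 1 if y == 1 else -1
                # Update only when the sample is misclassified.
                if target * self._score(x) <= 0:
                    step = self.learning_rate * target
                    self.weights = [w + step * v for w, v in zip(self.weights, x)]
                    self.bias += step

    def pred(self, feats):
        return [1 if self._score(x) > 0 else 0 for x in feats]


def accuracy_sketch(predicted, actual):
    """Fraction of positions where the prediction equals the true label."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(1 for p, a in zip(predicted, actual) if p == a) / len(actual)


demo_train_x = [[0.9, 0.1], [0.8, 0.2], [0.2, 0.8], [0.1, 0.9]]
demo_train_y = [0, 0, 1, 1]
demo_model = PerceptronSketch()
demo_model.train(demo_train_x, demo_train_y)
demo_pred = demo_model.pred([[0.7, 0.3], [0.3, 0.7]])
demo_acc = accuracy_sketch(demo_pred, [0, 1])
print(demo_pred, demo_acc)
```

A perceptron only converges when the two classes are linearly separable, which holds for this toy data but is not guaranteed for real texts.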

7.5 Extracting Text Features

7.5.1 Loading the Dataset

corpus = data.get('corpus')

doc = corpus[87]

fig() + plot(doc)

7.5.2 Building Bags of Words

word_bags = corpus.map(split_words)

7.5.3 Loading the Stop-Word List

stop_words = load_stopwords()

fig() + plot(stop_words)

7.5.4 Building the Vocabulary

vocabulary = build_vocabulary(word_bags, stop_words = stop_words, frequency_threshold = 5)

fig() + plot(vocabulary)

print('Vocabulary Length: ', len(vocabulary))
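
The exact behavior of `build_vocabulary` is not shown. A plausible reading of its arguments, sketched below as an assumption, is that it drops stop words and keeps only words occurring at least `frequency_threshold` times across the whole corpus. Whether the real helper counts total occurrences or documents, and whether the threshold is inclusive, is unknown. The name `build_vocabulary_sketch` is hypothetical.

```python
from collections import Counter


def build_vocabulary_sketch(word_bags, stop_words=(), frequency_threshold=1):
    """Return a sorted list of frequent, non-stop words.

    A word is kept if it is not a stop word and occurs at least
    `frequency_threshold` times in total (assumed semantics).
    """
    stop = set(stop_words)
    counts = Counter(word for bag in word_bags for word in bag)
    return sorted(
        word for word, count in counts.items()
        if count >= frequency_threshold and word not in stop
    )


demo_bags = [["the", "cat", "sat"], ["the", "cat", "ran"], ["a", "dog", "ran"]]
demo_vocab = build_vocabulary_sketch(
    demo_bags, stop_words=["the", "a"], frequency_threshold=2
)
print(demo_vocab)
```

Sorting the result gives every word a stable index, which the feature vectors built later rely on.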

7.5.5 Computing Term-Frequency Vectors

tf = TermFrequency(vocabulary)

tf_features = word_bags.map(tf)

feat = tf_features[87]

fig() + plot(feat)
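
A term-frequency vector has one entry per vocabulary word. The sketch below normalizes by the number of in-vocabulary words in the document. That normalization, like the function name `term_frequency_vector`, is an assumption, and `TermFrequency` may normalize differently or not at all.

```python
from collections import Counter


def term_frequency_vector(words, vocabulary):
    """Return one relative frequency per vocabulary word.

    Frequencies are relative to the in-vocabulary words of the
    document (assumed normalization).
    """
    counts = Counter(words)
    in_vocab_total = sum(counts[word] for word in vocabulary)
    if in_vocab_total == 0:
        return [0.0] * len(vocabulary)
    return [counts[word] / in_vocab_total for word in vocabulary]


demo_vocab = ["cat", "dog", "ran"]
demo_tf = term_frequency_vector(["the", "cat", "ran", "cat"], demo_vocab)
print(demo_tf)
```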

7.5.6 Computing tf-idf Vectors

tfidf = TFIDF(vocabulary, word_bags)

tfidf_features = word_bags.map(tfidf)

feat = tfidf_features[87]

fig() + plot(feat)
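
tf-idf scales each term frequency by how rare the word is across the corpus, so words that appear in almost every document count for less. The formula used by `TFIDF` is not given here. The sketch below uses one common smoothed variant, idf = ln((1 + N) / (1 + df)) + 1, as an assumption. The function names are hypothetical.

```python
import math
from collections import Counter


def idf_table(word_bags, vocabulary):
    """Compute a smoothed inverse document frequency for each word."""
    n_docs = len(word_bags)
    doc_sets = [set(bag) for bag in word_bags]
    table = {}
    for word in vocabulary:
        df = sum(1 for words in doc_sets if word in words)
        # The +1 terms avoid division by zero for words seen in no document.
        table[word] = math.log((1 + n_docs) / (1 + df)) + 1.0
    return table


def tfidf_vector(words, vocabulary, idf):
    """Multiply each word's relative frequency by its idf weight."""
    total = len(words)
    if total == 0:
        return [0.0] * len(vocabulary)
    counts = Counter(words)
    return [counts[word] / total * idf[word] for word in vocabulary]


demo_bags = [["cat", "ran"], ["dog", "ran"], ["cat", "cat", "sat"]]
demo_vocab = ["cat", "dog", "ran"]
demo_idf = idf_table(demo_bags, demo_vocab)
demo_tfidf = tfidf_vector(demo_bags[0], demo_vocab, demo_idf)
print(demo_tfidf)
```

In the toy corpus, "dog" appears in only one document, so its idf weight is larger than that of "cat" or "ran".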

7.6 Discovering Latent Topics in Text

7.6.1 Getting the Dataset

corpus, vocab, tf_feat, tfidf_feat = data.get('text-feat')

7.6.2 Building the Document-Term Matrix

tfidf_mat = to_matrix(tfidf_feat)

print("Document-term matrix size:", tfidf_mat.shape)

7.6.3 Non-negative Matrix Factorization

model = topic_model(vocab, tfidf_mat, num_topics=8)

model.train()
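
Non-negative matrix factorization approximates a document-term matrix V (documents × words) by the product W · T, where W (documents × topics) and T (topics × words) contain no negative entries. How `topic_model` performs the factorization is not shown. The sketch below uses the classic multiplicative update rules in pure Python, purely as an illustration. All function names and the toy matrix are hypothetical.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def frobenius_error(v, w, t):
    """Sum of squared differences between V and W * T."""
    approx = matmul(w, t)
    return sum(
        (v[i][j] - approx[i][j]) ** 2
        for i in range(len(v)) for j in range(len(v[0]))
    )


def nmf(v, num_topics, iterations=200, seed=0, eps=1e-9):
    """Factor V into non-negative W and T with multiplicative updates."""
    rng = random.Random(seed)
    n_docs, n_words = len(v), len(v[0])
    w = [[rng.random() + 0.1 for _ in range(num_topics)] for _ in range(n_docs)]
    t = [[rng.random() + 0.1 for _ in range(n_words)] for _ in range(num_topics)]
    for _ in range(iterations):
        # T <- T * (W^T V) / (W^T W T), element-wise.
        wt = transpose(w)
        num = matmul(wt, v)
        den = matmul(matmul(wt, w), t)
        t = [[t[k][j] * num[k][j] / (den[k][j] + eps) for j in range(n_words)]
             for k in range(num_topics)]
        # W <- W * (V T^T) / (W T T^T), element-wise.
        tt = transpose(t)
        num = matmul(v, tt)
        den = matmul(w, matmul(t, tt))
        w = [[w[i][k] * num[i][k] / (den[i][k] + eps) for k in range(num_topics)]
             for i in range(n_docs)]
    return w, t


# Toy matrix with two obvious "topics": words 0-1 and words 2-3.
demo_v = [[1, 1, 0, 0], [2, 2, 0, 0], [0, 0, 1, 1], [0, 0, 3, 3]]
demo_w0, demo_t0 = nmf(demo_v, num_topics=2, iterations=0)
demo_w, demo_t = nmf(demo_v, num_topics=2, iterations=200)
print(frobenius_error(demo_v, demo_w0, demo_t0), frobenius_error(demo_v, demo_w, demo_t))
```

Because every update multiplies by a non-negative ratio, W and T stay non-negative without any explicit clipping.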

7.6.4 Analyzing the Factorization Results

t_mat = model.tmatrix

w_mat = model.wmatrix

print('Size of T Matrix: ', t_mat.shape)

print('Size of W Matrix: ', w_mat.shape)

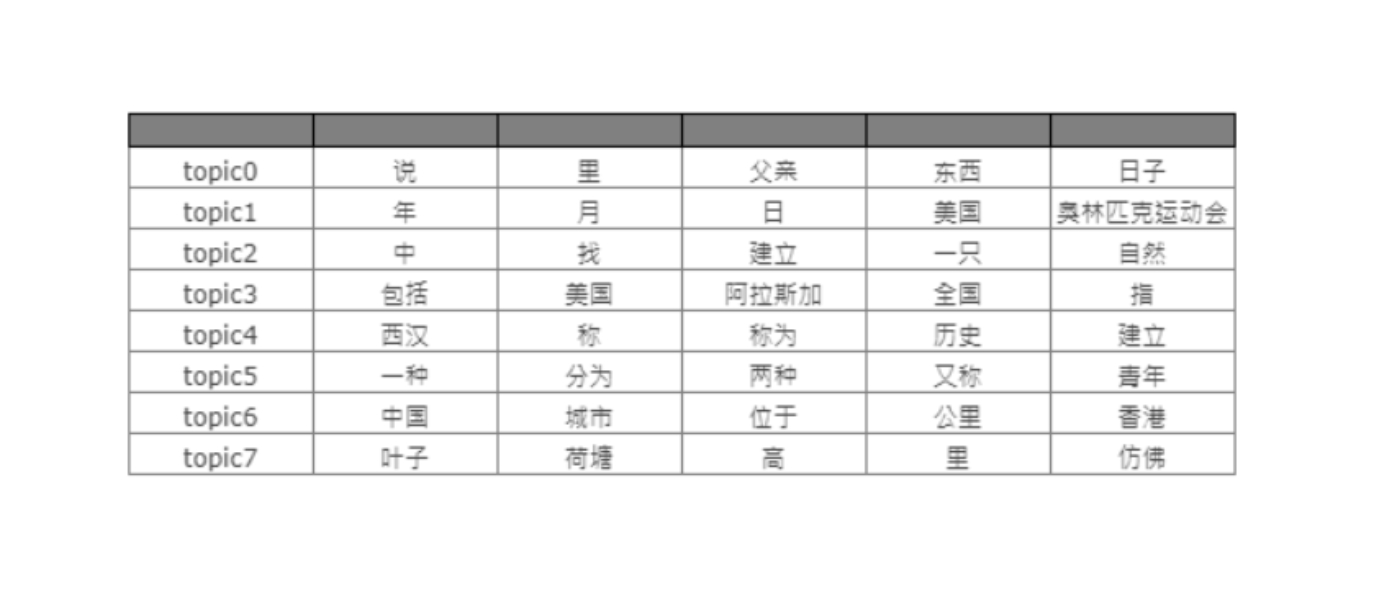

7.6.5 Extracting High-Frequency Words

high_freqs = model.extract_highfreqs(top_n=5)

fig() + plot(high_freqs)

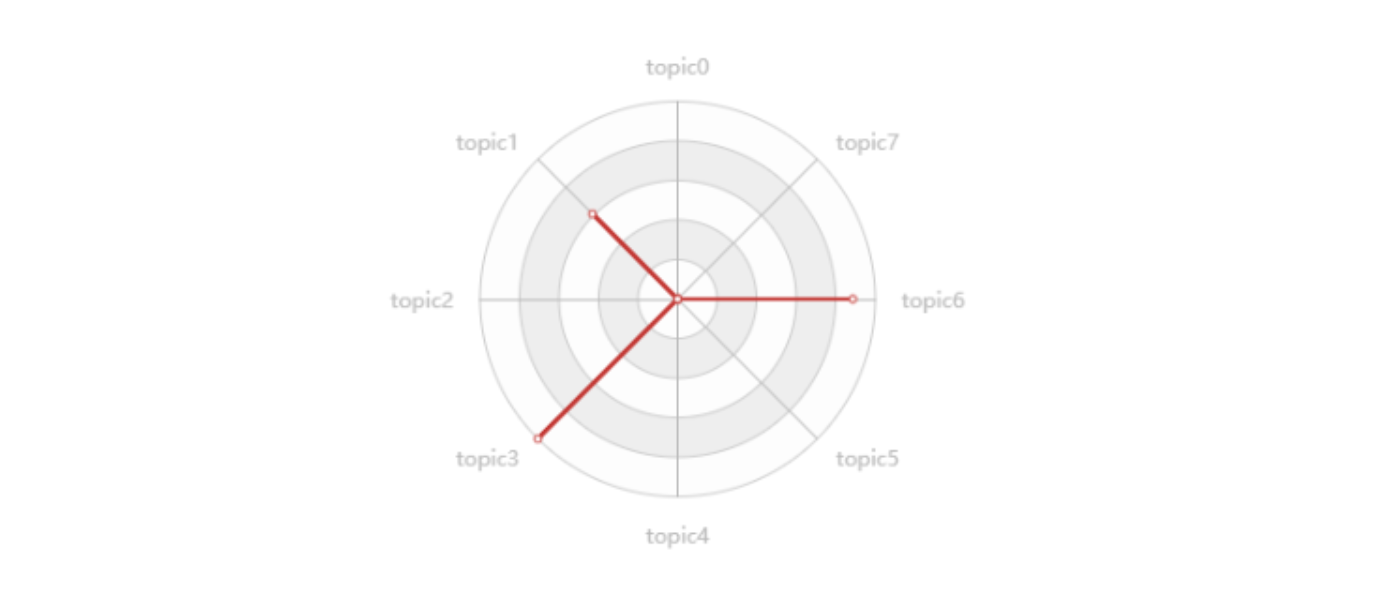
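
One natural way to obtain the top words of each topic, assumed here since `extract_highfreqs` is not shown, is to take the largest entries in each row of the topic-word matrix T. The function `top_words_per_topic` and the toy matrix are hypothetical.

```python
def top_words_per_topic(t_matrix, vocabulary, top_n=5):
    """For each topic (row of T), list the `top_n` highest-weighted words."""
    result = []
    for row in t_matrix:
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        result.append([vocabulary[j] for j in ranked[:top_n]])
    return result


demo_vocab = ["goal", "match", "stock", "market", "team"]
demo_t = [
    [0.9, 0.7, 0.0, 0.1, 0.8],  # a sports-like topic
    [0.0, 0.1, 0.9, 0.8, 0.0],  # a finance-like topic
]
demo_top = top_words_per_topic(demo_t, demo_vocab, top_n=2)
print(demo_top)
```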

7.6.6 Reading the Article, Summarizing Its Topic, and Comparing with the Model's Results

Reference image:

doc_id = 87

doc = corpus[doc_id]

fig() + plot(doc)

topic_weights = w_mat[doc_id]

fig(2, 1) + [plot(high_freqs), plot(topic_weights)]