Cameras on Linux: V4L2 (complete code at the end)

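The V4L2 driver framework is controlled through the ioctl system call, the general-purpose interface for device-specific I/O control. Its job is to make the hardware work correctly and to expose an interface to the applications above it.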
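Since every step in this article goes through ioctl, a small wrapper can be handy. The sketch below retries the call when a signal interrupts it (EINTR). The name xioctl is a hypothetical helper, not part of V4L2, and the rest of the article calls ioctl directly.

```c
#include <errno.h>
#include <sys/ioctl.h>

/* Call ioctl() again whenever a signal interrupted it (EINTR).
 * Returns the final ioctl() result: -1 on error with errno set. */
int xioctl(int fd, unsigned long request, void *arg)
{
    int res;

    do {
        res = ioctl(fd, request, arg);
    } while (res == -1 && errno == EINTR);

    return res;
}
```

It is a drop-in replacement, for example xioctl(fd_v4l2, VIDIOC_QUERYCAP, &cap), and the error handling stays the same as with plain ioctl.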

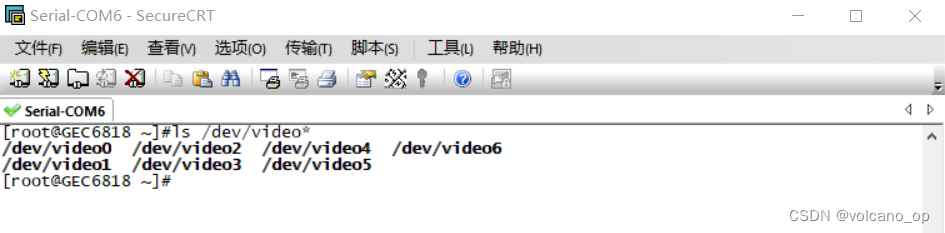

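First, identify the camera device from a SecureCRT terminal session.

Plug the camera into a USB port on the development board. If the device is working, the driver should print a message such as "usb2.0 pc camera".

Then list the video device nodes: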

ls /dev/video*

The newly added node, /dev/video7, is our target device.
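The same lookup can be done from C. The following is a minimal sketch, assuming the nodes live directly under /dev and are named video followed by a number; is_video_node and list_video_nodes are hypothetical helper names.

```c
#include <ctype.h>
#include <dirent.h>
#include <stdio.h>
#include <string.h>

/* Return 1 if name looks like "video<N>" (for example "video7"), else 0. */
int is_video_node(const char *name)
{
    const char *prefix = "video";
    size_t n = strlen(prefix);

    if (strncmp(name, prefix, n) != 0 || name[n] == '\0')
        return 0;
    for (const char *p = name + n; *p != '\0'; p++) {
        if (!isdigit((unsigned char)*p))
            return 0;
    }
    return 1;
}

/* Print every /dev/video<N> node.
 * Returns how many were found, or -1 if /dev cannot be opened. */
int list_video_nodes(void)
{
    DIR *dir = opendir("/dev");
    struct dirent *ent;
    int count = 0;

    if (dir == NULL)
        return -1;
    while ((ent = readdir(dir)) != NULL) {
        if (is_video_node(ent->d_name)) {
            printf("/dev/%s\n", ent->d_name);
            count++;
        }
    }
    closedir(dir);
    return count;
}
```

Running list_video_nodes() before and after plugging in the camera shows which node is new.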

1. Opening the V4L2 camera device

Required headers: #include <fcntl.h> (for open) and #include <linux/videodev2.h>


int fd_v4l2 = open("/dev/video7", O_RDWR);

2. Getting the camera's capabilities

The structure is defined in videodev2.h. Only the fields relevant to your use case need attention.

/**

* struct v4l2_capability - Describes V4L2 device caps returned by VIDIOC_QUERYCAP

*

* @driver: name of the driver module (e.g. "bttv")

* @card: name of the card (e.g. "Hauppauge WinTV")

* @bus_info: name of the bus (e.g. "PCI:" + pci_name(pci_dev) )

* @version: KERNEL_VERSION

* @capabilities: capabilities of the physical device as a whole

* @device_caps: capabilities accessed via this particular device (node)

* @reserved: reserved fields for future extensions

*/

struct v4l2_capability {

__u8 driver[16];

__u8 card[32];

__u8 bus_info[32];

__u32 version; // kernel version

__u32 capabilities; // capability flags (the field to check)

__u32 device_caps;

__u32 reserved[3];

};

Implementation:

// Query the device capabilities

struct v4l2_capability cap = {};

int res = ioctl(fd_v4l2, VIDIOC_QUERYCAP, &cap);

if (res == -1)

{

perror("ioctl cap");

exit(-1);

}

3. Enumerating the formats the camera supports (YUYV)

/*

* F O R M A T E N U M E R A T I O N

*/

struct v4l2_fmtdesc {

__u32 index; /* Format number */

__u32 type; /* enum v4l2_buf_type */

__u32 flags;

__u8 description[32]; /* Description string */

__u32 pixelformat; /* Format fourcc */

__u32 reserved[4];

};
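The pixelformat field holds a FourCC: four ASCII characters packed into a 32-bit value, least significant byte first. Here is a small sketch of packing and unpacking such a code, using hypothetical helper names (make_fourcc, fourcc_to_string) rather than the kernel macro.

```c
#include <stdint.h>

/* Pack four characters into a FourCC, first character in the low byte. */
uint32_t make_fourcc(char a, char b, char c, char d)
{
    return (uint32_t)(unsigned char)a |
           ((uint32_t)(unsigned char)b << 8) |
           ((uint32_t)(unsigned char)c << 16) |
           ((uint32_t)(unsigned char)d << 24);
}

/* Unpack a FourCC into a NUL-terminated string; out needs 5 bytes. */
void fourcc_to_string(uint32_t fourcc, char out[5])
{
    for (int i = 0; i < 4; i++)
        out[i] = (char)((fourcc >> (8 * i)) & 0xff);
    out[4] = '\0';
}
```

With these, make_fourcc('Y', 'U', 'Y', 'V') yields the same value as V4L2_PIX_FMT_YUYV, and fourcc_to_string turns an enumerated pixelformat back into readable text.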

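Implementation:

// videodev2.h provides a macro that builds a format code from four characters

#define v4l2_fourcc(a, b, c, d) ((__u32)(a) | ((__u32)(b) << 8) | ((__u32)(c) << 16) | ((__u32)(d) << 24))

// Enumerate the formats the camera supports

struct v4l2_fmtdesc fmt = {};

fmt.index = 0;

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; // video capture formats

ioctl(fd_v4l2, VIDIOC_ENUM_FMT, &fmt);

The LCD on the GEC6818 board takes RGB data, while the camera delivers YUYV, so each frame has to be converted from YUYV to RGB. I found a highly portable function for this on GitHub: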
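For reference, the per-pixel math can also be written without shared state. The sketch below is not the ported function; it assumes limited-range BT.601 input and a 32-bit framebuffer pixel laid out as 0x00RRGGBB, and it uses the commonly quoted integer constants (the BT.601 factors scaled by 256). The names clamp_u8, yuv_to_xrgb, and yuyv_pair_to_xrgb are hypothetical.

```c
#include <stdint.h>

/* Clamp an intermediate result to the 0..255 range of one colour channel. */
int clamp_u8(int x)
{
    return x < 0 ? 0 : (x > 255 ? 255 : x);
}

/* Convert one Y/U/V sample to a 0x00RRGGBB pixel. */
uint32_t yuv_to_xrgb(int y, int u, int v)
{
    int c = y - 16, d = u - 128, e = v - 128;
    int r = clamp_u8((298 * c + 409 * e + 128) / 256);
    int g = clamp_u8((298 * c - 100 * d - 208 * e + 128) / 256);
    int b = clamp_u8((298 * c + 516 * d + 128) / 256);

    return ((uint32_t)r << 16) | ((uint32_t)g << 8) | (uint32_t)b;
}

/* A YUYV macropixel is 4 bytes (Y0 U Y1 V) and encodes two pixels
 * that share the same U and V. */
void yuyv_pair_to_xrgb(const unsigned char *yuyv, uint32_t out[2])
{
    out[0] = yuv_to_xrgb(yuyv[0], yuyv[1], yuyv[3]);
    out[1] = yuv_to_xrgb(yuyv[2], yuyv[1], yuyv[3]);
}
```

Because two pixels share one U/V pair, a 640x480 YUYV frame occupies 640 * 480 * 2 bytes, which is the size the conversion loop iterates over.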

unsigned int sign3 = 0;

int yuyv2rgb(int y, int u, int v)

{

unsigned int pixel24 = 0;

unsigned char *pixel = (unsigned char *)&pixel24;

int r, g, b;

static int ruv, guv, buv;

if (sign3)

{

sign3 = 0;

ruv = 1596 * (v - 128); // BT.601 red factor is 1.596 (the original 1159 had transposed digits)

guv = 380 * (u - 128) + 813 * (v - 128);

buv = 2018 * (u - 128);

}

r = (1164 * (y - 16) + ruv) / 1000;

g = (1164 * (y - 16) - guv) / 1000;

b = (1164 * (y - 16) + buv) / 1000;

if (r > 255)

r = 255;

if (g > 255)

g = 255;

if (b > 255)

b = 255;

if (r < 0)

r = 0;

if (g < 0)

g = 0;

if (b < 0)

b = 0;

pixel[0] = r;

pixel[1] = g;

pixel[2] = b;

return pixel24;

}

// Convert a YUYV frame to packed RGB24

int yuyv2rgb0(unsigned char *yuv, unsigned char *rgb, unsigned int width, unsigned int height)

{

unsigned int in, out;

int y0, u, y1, v;

unsigned int pixel24;

unsigned char *pixel = (unsigned char *)&pixel24;

unsigned int size = width * height * 2;

for (in = 0, out = 0; in < size; in += 4, out += 6)

{

y0 = yuv[in + 0];

u = yuv[in + 1];

y1 = yuv[in + 2];

v = yuv[in + 3];

sign3 = 1;

pixel24 = yuyv2rgb(y0, u, v);

rgb[out + 0] = pixel[0];

rgb[out + 1] = pixel[1];

rgb[out + 2] = pixel[2];

pixel24 = yuyv2rgb(y1, u, v);

rgb[out + 3] = pixel[0];

rgb[out + 4] = pixel[1];

rgb[out + 5] = pixel[2];

}

return 0;

}

4. Selecting the camera input

// Select the capture input

int index = 0; // use input 0

res = ioctl(fd_v4l2, VIDIOC_S_INPUT, &index);

if (res == -1)

{

perror("ioctl_s_input");

exit(-1);

}

5. Setting the capture format and parameters

/**

* struct v4l2_format - stream data format

* @type: enum v4l2_buf_type; type of the data stream

* @pix: definition of an image format

* @pix_mp: definition of a multiplanar image format

* @win: definition of an overlaid image

* @vbi: raw VBI capture or output parameters

* @sliced: sliced VBI capture or output parameters

* @raw_data: placeholder for future extensions and custom formats

*/

struct v4l2_format {

__u32 type;

union {

struct v4l2_pix_format pix; /* V4L2_BUF_TYPE_VIDEO_CAPTURE */

struct v4l2_pix_format_mplane pix_mp; /* V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE */

struct v4l2_window win; /* V4L2_BUF_TYPE_VIDEO_OVERLAY */

struct v4l2_vbi_format vbi; /* V4L2_BUF_TYPE_VBI_CAPTURE */

struct v4l2_sliced_vbi_format sliced; /* V4L2_BUF_TYPE_SLICED_VBI_CAPTURE */

struct v4l2_sdr_format sdr; /* V4L2_BUF_TYPE_SDR_CAPTURE */

struct v4l2_meta_format meta; /* V4L2_BUF_TYPE_META_CAPTURE */

__u8 raw_data[200]; /* user-defined */

} fmt;

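};

The fmt member is a union, so only the member that matches type is filled in. For a video capture device that member is fmt.pix.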

Implementation:

// Set the camera capture format

struct v4l2_format format = {};

format.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

format.fmt.pix.width = 640;

format.fmt.pix.height = 480;

format.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV; // YUYV

format.fmt.pix.field = V4L2_FIELD_NONE;

res = ioctl(fd_v4l2, VIDIOC_S_FMT, &format);

if (res == -1)

{

perror("ioctl s_fmt");

exit(-1);

}

6. Requesting buffers, mapping them into user space, and queueing them
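VIDIOC_REQBUFS writes the number of buffers the driver actually allocated back into req.count, and that number can differ from the 4 requested. The sketch below shows one way to pick the loop bound from it instead of a fixed constant. usable_buffer_count is a hypothetical helper, and treating fewer than 2 buffers as a failure is a common convention rather than a rule.

```c
/* Decide how many buffers to map and queue after VIDIOC_REQBUFS.
 * granted:  req.count as written back by the driver
 * capacity: number of slots in the application's own buffer array
 * Returns the count to use, or -1 if fewer than 2 buffers are available. */
int usable_buffer_count(unsigned int granted, unsigned int capacity)
{
    unsigned int n = granted < capacity ? granted : capacity;

    return n >= 2 ? (int)n : -1;
}
```

With the code in this section, the call would be usable_buffer_count(req.count, 4), and the result would replace the literal 4 in the mapping loop.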

// Request buffers from the driver

struct v4l2_requestbuffers req = {};

req.count = 4;

req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

req.memory = V4L2_MEMORY_MMAP;

res = ioctl(fd_v4l2, VIDIOC_REQBUFS, &req);

if (res == -1)

{

perror("ioctl reqbufs");

exit(-1);

}

// Query, map, and enqueue each buffer

size_t i, max_len = 0;

for (i = 0; i < 4; i++)

{

struct v4l2_buffer buf = {};

buf.index = i; // buffers 0 to 3, one frame each

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

res = ioctl(fd_v4l2, VIDIOC_QUERYBUF, &buf);

if (res == -1)

{

perror("ioctl querybuf");

exit(-1);

}

// Track the largest buffer length so the copy buffer fits any frame

if (buf.length > max_len)

max_len = buf.length;

// Map the buffer into user space

buffer[i].length = buf.length;

buffer[i].start = mmap(NULL, buf.length, PROT_READ | PROT_WRITE, MAP_SHARED, fd_v4l2, buf.m.offset);

if (buffer[i].start == MAP_FAILED)

{

perror("mmap");

exit(-1);

}

// Enqueue the buffer

res = ioctl(fd_v4l2, VIDIOC_QBUF, &buf);

if (res == -1)

{

perror("ioctl qbuf");

exit(-1);

}

}

7. Starting capture

// Start streaming

enum v4l2_buf_type buf_type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

res = ioctl(fd_v4l2, VIDIOC_STREAMON, &buf_type);

if (res == -1)

{

perror("ioctl streamon");

exit(-1);

}

8. Reading frames from the capture queue
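On a device opened in blocking mode, VIDIOC_DQBUF waits until a frame is ready. If a timeout is wanted, poll can be called on the descriptor first. The following is a minimal sketch; wait_readable is a hypothetical helper name and the timeout value is up to the caller.

```c
#include <poll.h>

/* Wait until fd has data to read.
 * Returns 1 if readable, 0 if the timeout expired, -1 on error. */
int wait_readable(int fd, int timeout_ms)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN };
    int res = poll(&pfd, 1, timeout_ms);

    if (res < 0)
        return -1;
    if (res == 0)
        return 0;
    return (pfd.revents & POLLIN) ? 1 : -1;
}
```

In the loop of this section, a call such as wait_readable(fd_v4l2, 2000) before VIDIOC_DQBUF would let the program notice a camera that has stopped delivering frames.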

while (1)

{

struct v4l2_buffer buf = {};

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

// Dequeue a filled buffer

res = ioctl(fd_v4l2, VIDIOC_DQBUF, &buf);

if (res == -1)

{

perror("ioctl dqbuf");

}

// Copy the frame data

memcpy(current.start, buffer[buf.index].start, buf.bytesused);

current.length = buf.bytesused;

// Hand the buffer back to the driver

res = ioctl(fd_v4l2, VIDIOC_QBUF, &buf);

if (res == -1)

{

perror("ioctl qbuf");

}

}

9. Stopping capture

// Stop streaming

buf_type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

res = ioctl(fd_v4l2, VIDIOC_STREAMOFF, &buf_type);

if (res == -1)

{

perror("ioctl streamoff");

exit(-1);

}

Complete implementation

/*************************************************************************

> File Name: V4L2.c

> Author: volcano.eth

> Mail: [email protected]

> Created Time: 2023-05-10 (Thursday) 15:46:08

************************************************************************/

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <fcntl.h>

#include <sys/stat.h>

#include <sys/types.h>

#include <unistd.h>

#include <sys/ioctl.h>

#include <sys/mman.h>

#include <linux/videodev2.h>

#include <netinet/in.h> // header for TCP/IP sockets

#include <sys/socket.h>

typedef struct

{

char *start;

size_t length;

} buffer_t;

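buffer_t buffer[4]; // the driver buffers mapped into user space

buffer_t current; // holds the frame currently being displayed

int *plcd; // base address of the mapped framebuffer, used for all screen reads and writes

int *lcd_p; // pointer to a specific position in the framebuffer

int lcd_fd; // file descriptor of the framebuffer device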

void *lcd_init()

{

lcd_fd = open("/dev/fb0", O_RDWR);

if (lcd_fd == -1)

{

perror("open lcd_file error");

return MAP_FAILED;

}

plcd = (int *)mmap(NULL, 800 * 480 * 4, PROT_READ | PROT_WRITE, MAP_SHARED, lcd_fd, 0);

return plcd;

}

int uninit_lcd()

{

close(lcd_fd);

if (munmap(plcd, 800 * 480 * 4) == -1) // unmap plcd, the pointer lcd_init() mapped; lcd_p is never assigned

{

return -1;

}

return 0;

}

unsigned int sign3 = 0;

// Convert one YUV sample to an RGB pixel

int yuyv2rgb(int y, int u, int v)

{

unsigned int pixel24 = 0;

unsigned char *pixel = (unsigned char *)&pixel24;

int r, g, b;

static int ruv, guv, buv;

if (sign3)

{

sign3 = 0;

ruv = 1596 * (v - 128); // BT.601 red factor is 1.596 (the original 1159 had transposed digits)

guv = 380 * (u - 128) + 813 * (v - 128);

buv = 2018 * (u - 128);

}

r = (1164 * (y - 16) + ruv) / 1000;

g = (1164 * (y - 16) - guv) / 1000;

b = (1164 * (y - 16) + buv) / 1000;

if (r > 255)

r = 255;

if (g > 255)

g = 255;

if (b > 255)

b = 255;

if (r < 0)

r = 0;

if (g < 0)

g = 0;

if (b < 0)

b = 0;

pixel[0] = r;

pixel[1] = g;

pixel[2] = b;

return pixel24;

}

// Convert a YUYV frame to RGB24 (calls yuyv2rgb for each pixel)

int yuyv2rgb0(unsigned char *yuv, unsigned char *rgb, unsigned int width, unsigned int height)

{

unsigned int in, out;

int y0, u, y1, v;

unsigned int pixel24;

unsigned char *pixel = (unsigned char *)&pixel24;

unsigned int size = width * height * 2;

for (in = 0, out = 0; in < size; in += 4, out += 6)

{

y0 = yuv[in + 0];

u = yuv[in + 1];

y1 = yuv[in + 2];

v = yuv[in + 3];

sign3 = 1;

pixel24 = yuyv2rgb(y0, u, v);

rgb[out + 0] = pixel[0];

rgb[out + 1] = pixel[1];

rgb[out + 2] = pixel[2];

pixel24 = yuyv2rgb(y1, u, v);

rgb[out + 3] = pixel[0];

rgb[out + 4] = pixel[1];

rgb[out + 5] = pixel[2];

}

return 0;

}

int main()

{

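if (lcd_init() == MAP_FAILED) // stop if the framebuffer could not be opened or mapped

{

perror("lcd_init");

exit(-1);

}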

// Open the camera

int fd_v4l2 = open("/dev/video7", O_RDWR); // device node identified earlier in SecureCRT

if (fd_v4l2 == -1)

{

perror("open");

exit(-1);

}

// Query the device capabilities

struct v4l2_capability cap = {};

int res = ioctl(fd_v4l2, VIDIOC_QUERYCAP, &cap);

if (res == -1)

{

perror("ioctl cap");

exit(-1);

}

// Make sure the device supports video capture

if (cap.capabilities & V4L2_CAP_VIDEO_CAPTURE)

{

printf("is a capture device!\n");

}

else

{

printf("is not a capture device!\n");

exit(-1);

}

// Enumerate the formats the camera supports

struct v4l2_fmtdesc fmt = {};

fmt.index = 0;

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; // video capture formats

while ((res = ioctl(fd_v4l2, VIDIOC_ENUM_FMT, &fmt)) == 0)

{

printf("pixformat = %c %c %c %c,description = %s\n",

fmt.pixelformat & 0xff,

(fmt.pixelformat >> 8) & 0xff,

(fmt.pixelformat >> 16) & 0xff,

(fmt.pixelformat >> 24) & 0xff,

fmt.description);

fmt.index++;

}

// Select the capture input

int index = 0; // use input 0

res = ioctl(fd_v4l2, VIDIOC_S_INPUT, &index);

if (res == -1)

{

perror("ioctl_s_input");

exit(-1);

}

// Set the camera capture format

struct v4l2_format format = {};

format.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

format.fmt.pix.width = 640;

format.fmt.pix.height = 480;

format.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV; // YUYV

format.fmt.pix.field = V4L2_FIELD_NONE;

res = ioctl(fd_v4l2, VIDIOC_S_FMT, &format);

if (res == -1)

{

perror("ioctl s_fmt");

exit(-1);

}

// Request buffers from the driver

struct v4l2_requestbuffers req = {};

req.count = 4;

req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

req.memory = V4L2_MEMORY_MMAP;

res = ioctl(fd_v4l2, VIDIOC_REQBUFS, &req);

if (res == -1)

{

perror("ioctl reqbufs");

exit(-1);

}

// Query, map, and enqueue each buffer

size_t i, max_len = 0;

for (i = 0; i < 4; i++)

{

struct v4l2_buffer buf = {};

buf.index = i; // buffers 0 to 3, one frame each

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

res = ioctl(fd_v4l2, VIDIOC_QUERYBUF, &buf);

if (res == -1)

{

perror("ioctl querybuf");

exit(-1);

}

// Track the largest buffer length so the copy buffer fits any frame

if (buf.length > max_len)

max_len = buf.length;

// Map the buffer into user space

buffer[i].length = buf.length;

buffer[i].start = mmap(NULL, buf.length, PROT_READ | PROT_WRITE, MAP_SHARED, fd_v4l2, buf.m.offset);

if (buffer[i].start == MAP_FAILED)

{

perror("mmap");

exit(-1);

}

// Enqueue the buffer

res = ioctl(fd_v4l2, VIDIOC_QBUF, &buf);

if (res == -1)

{

perror("ioctl qbuf");

exit(-1);

}

}

// Allocate a temporary buffer large enough for one frame

current.start = malloc(max_len);

if (current.start == NULL)

{

perror("malloc");

exit(-1);

}

// Start streaming

enum v4l2_buf_type buf_type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

res = ioctl(fd_v4l2, VIDIOC_STREAMON, &buf_type);

if (res == -1)

{

perror("ioctl streamon");

exit(-1);

}

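// Give the camera a moment to start delivering frames

sleep(1);

// RGB24 buffer (unsigned, so the shifts in the display loop do not sign-extend)

unsigned char rgb[640 * 480 * 3];

// Capture loop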

while (1)

{

struct v4l2_buffer buf = {};

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

// Dequeue a filled buffer

res = ioctl(fd_v4l2, VIDIOC_DQBUF, &buf);

if (res == -1)

{

perror("ioctl dqbuf");

break; // buf is not valid after a failed dequeue; leave the loop so the cleanup code runs

}

// Copy the frame data

memcpy(current.start, buffer[buf.index].start, buf.bytesused);

current.length = buf.bytesused;

// Hand the buffer back to the driver

res = ioctl(fd_v4l2, VIDIOC_QBUF, &buf);

if (res == -1)

{

perror("ioctl qbuf");

}

// Convert YUYV to RGB24

yuyv2rgb0((unsigned char *)current.start, rgb, 640, 480);

// Draw the frame on the LCD

int x, y;

for (y = 0; y < 480; y++)

{

for (x = 0; x < 640; x++)

{

*(plcd + y*800 + x) = rgb[3 * (y*640 + x)] << 16 | rgb[3 * (y*640 + x) + 1] << 8 | rgb[3 * (y*640 + x) + 2];

}

}

}

// Stop streaming

buf_type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

res = ioctl(fd_v4l2, VIDIOC_STREAMOFF, &buf_type);

if (res == -1)

{

perror("ioctl streamoff");

exit(-1);

}

// Unmap the driver buffers

for (i = 0; i < 4; i++)

{

munmap(buffer[i].start, buffer[i].length);

}

free(current.start);

sleep(1); // brief delay

close(fd_v4l2);

uninit_lcd();

return 0;

}

The final result is shown in the figure.