    # Import the configuration from the settings file
    import pymysql
    from scrapy.utils.project import get_project_settings


    class MyMySqlPipeline(object):

        def open_spider(self, spider):
            # Connect to the database; the DB_* values must be configured in settings.py
            settings = get_project_settings()
            host = settings['DB_HOST']
            port = settings['DB_PORT']
            user = settings['DB_USER']
            password = settings['DB_PASSWORD']
            dbname = settings['DB_NAME']
            dbcharset = settings['DB_CHARSET']
            self.conn = pymysql.Connect(host=host, port=port, user=user, password=password, db=dbname, charset=dbcharset)
            # Create the cursor once, so that close_spider can always close it
            self.cursor = self.conn.cursor()

        def process_item(self, item, spider):
            # Write the item to the database. The %s placeholders are filled in by
            # pymysql, which escapes quotes in the scraped values.
            sql = ('insert into movies(movie_poster, movie_name, movie_score, movie_type, '
                   'movie_director, movie_screenwriter, movie_actor, movie_time, movie_content) '
                   'values(%s, %s, %s, %s, %s, %s, %s, %s, %s)')
            values = (
                item['movie_poster'], item['movie_name'], item['movie_score'],
                item['movie_type'], item['movie_director'], item['movie_screenwriter'],
                item['movie_actor'], item['movie_time'], item['movie_content'])
            try:
                # Execute the SQL statement
                self.cursor.execute(sql, values)
                # Commit the transaction
                self.conn.commit()
            except Exception as e:
                # Report the error and undo the failed insert
                print(e)
                self.conn.rollback()
            return item

        def close_spider(self, spider):
            # Release the cursor and the connection when the spider finishes
            self.cursor.close()
            self.conn.close()

Note: the table structure must also be defined in the database beforehand, because MySQL will not create it for you.
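As a rough sketch of what that table definition could look like, the snippet below builds a `CREATE TABLE` statement whose column names are taken from the pipeline's `insert` statement. The column types and lengths, the extra `id` column, and the helper names `build_create_table_sql` and `create_movies_table` are assumptions made for illustration and are not part of the original project.

```python
# Column names come from the pipeline's INSERT statement.
# The types and lengths are assumptions; adjust them to the scraped data.
MOVIE_COLUMNS = [
    ("movie_poster", "VARCHAR(255)"),
    ("movie_name", "VARCHAR(255)"),
    ("movie_score", "VARCHAR(16)"),
    ("movie_type", "VARCHAR(255)"),
    ("movie_director", "VARCHAR(255)"),
    ("movie_screenwriter", "VARCHAR(255)"),
    ("movie_actor", "TEXT"),
    ("movie_time", "VARCHAR(64)"),
    ("movie_content", "TEXT"),
]


def build_create_table_sql(table="movies"):
    """Return a CREATE TABLE statement for the movies table."""
    # The auto-increment id column is an assumption, not something the pipeline needs.
    columns = ["id INT AUTO_INCREMENT PRIMARY KEY"]
    columns += [f"{name} {sql_type}" for name, sql_type in MOVIE_COLUMNS]
    body = ",\n    ".join(columns)
    return f"CREATE TABLE IF NOT EXISTS {table} (\n    {body}\n) DEFAULT CHARSET=utf8mb4"


def create_movies_table(conn):
    """Run the statement on an already open pymysql connection (hypothetical helper)."""
    with conn.cursor() as cursor:
        cursor.execute(build_create_table_sql())
    conn.commit()
```

Running `print(build_create_table_sql())` shows the statement, which can also be pasted into a MySQL client instead of being executed from Python.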

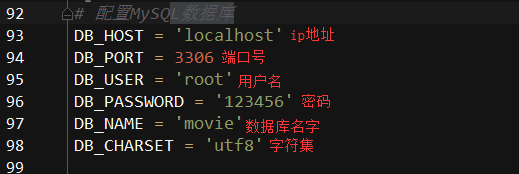

Configure MySQL anywhere in settings.py

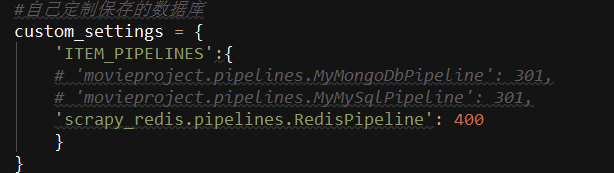

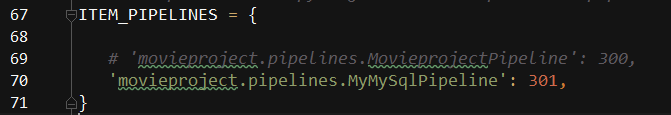
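For readers who cannot see the screenshots, the MySQL settings might look roughly like this. The setting names are the ones the pipeline reads in `open_spider`; every value is a placeholder chosen for illustration.

```python
# settings.py (excerpt): the names match what MyMySqlPipeline reads.
# All values below are placeholders, replace them with your own.
DB_HOST = "127.0.0.1"
DB_PORT = 3306            # keep this an int rather than a string
DB_USER = "root"
DB_PASSWORD = "change-me"
DB_NAME = "movies_db"     # hypothetical database name
DB_CHARSET = "utf8mb4"    # MySQL charset name, written without a hyphen
```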

Activate the pipeline so that it is used
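In Scrapy, a pipeline is switched on through the `ITEM_PIPELINES` setting. A minimal sketch, assuming the project package is called `myproject` (a hypothetical name) and the class lives in its `pipelines.py`:

```python
# settings.py (excerpt)
# "myproject" is a hypothetical package name; use your own project's name.
# The number is the pipeline's order: values range from 0 to 1000 and
# pipelines with lower values run first.
ITEM_PIPELINES = {
    "myproject.pipelines.MyMySqlPipeline": 300,
}
```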

You can also customize this in the spider file itself
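Per-spider configuration in Scrapy goes through the `custom_settings` class attribute. The sketch below assumes a spider named `MovieSpider` and a package named `myproject`; both names are hypothetical.

```python
import scrapy


class MovieSpider(scrapy.Spider):
    # Hypothetical spider name, used only for this example
    name = "movies"

    # These settings override settings.py for this spider only
    custom_settings = {
        "ITEM_PIPELINES": {
            "myproject.pipelines.MyMySqlPipeline": 300,
        },
    }

    def parse(self, response):
        # Extract and yield items here
        pass
```

One caveat: the pipeline shown earlier reads its `DB_*` values with `get_project_settings()`, which loads the project settings rather than the spider's overrides. To override the database settings per spider, the pipeline would likely need to read from `spider.settings` instead.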