Experiment Content

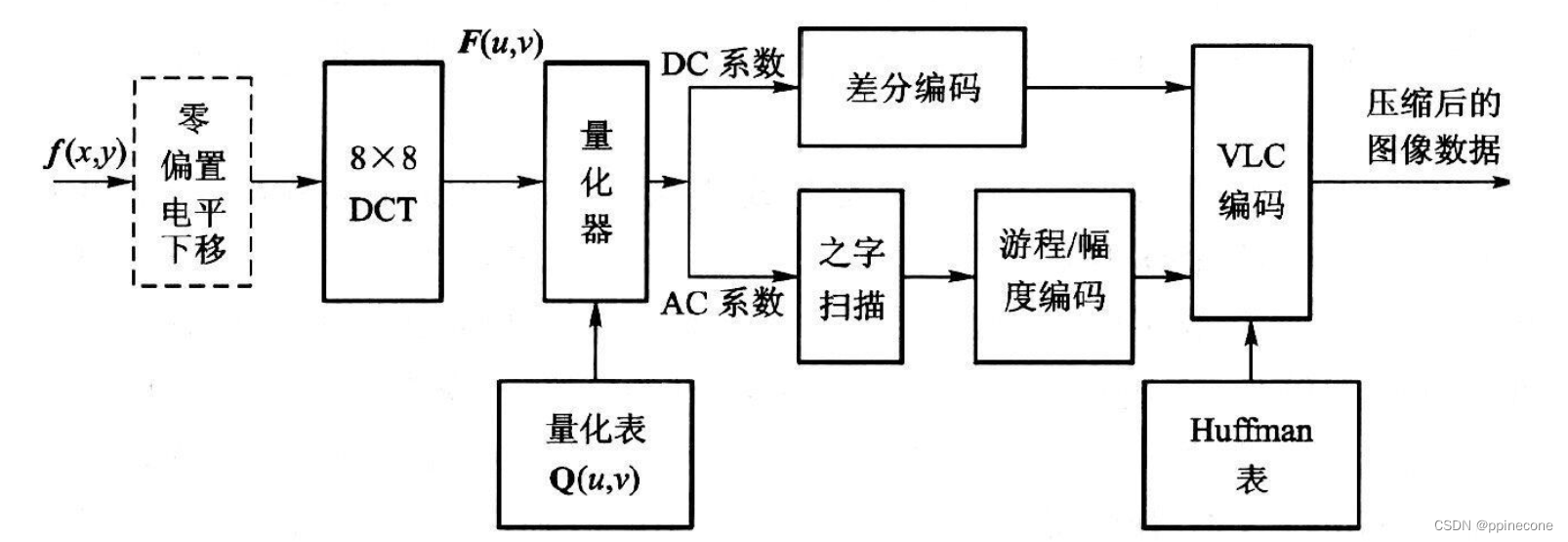

I. Principles of JPEG Encoding and Decoding

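1. Level shift of the luminance signal. For pixels with $2^n$ gray levels (i.e. $n$-bit samples), subtracting $2^{n-1}$ turns the unsigned integer values into signed ones. For 8-bit samples this maps 0…255 to -128…127, which greatly reduces the probability that a pixel's absolute value has three decimal digits.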
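A minimal sketch of the level shift. The helper name `level_shift` is hypothetical and is not part of tinyjpeg. It assumes samples of 1 to 16 bits stored as `unsigned short`.

```c
#include <stddef.h>

/* Level shift for n-bit samples: subtract 2^(n-1) so that unsigned values
 * 0 .. 2^n - 1 become signed values -2^(n-1) .. 2^(n-1) - 1.
 * Hypothetical helper for illustration; assumes 1 <= bits <= 16. */
void level_shift(const unsigned short *in, int *out, size_t count, int bits)
{
    int offset = 1 << (bits - 1);   /* 128 for 8-bit samples */
    for (size_t i = 0; i < count; i++)
        out[i] = (int)in[i] - offset;
}
```

With `bits = 8`, the inputs 0, 128 and 255 become -128, 0 and 127.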

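2. DCT

Each color component is divided into 8×8 blocks, and every block is transformed with an 8×8 DCT. The transform decorrelates the image data and concentrates most of the energy in a few low-frequency coefficients. The subsequent quantization step can then remove spatial redundancy.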
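A naive sketch of the 8×8 forward DCT-II in its JPEG normalization. It is meant for illustration only. Real codecs use fast factorizations such as the AA&N method that tinyjpeg's float IDCT is based on. The function names are hypothetical. The cosine values are hard-coded so that the sketch needs no libm.

```c
/* cos(k*pi/16) for k = 0..8, hard-coded so this sketch does not need libm. */
static const double COS16[9] = {
    1.0, 0.98078528040323043, 0.92387953251128674, 0.83146961230254524,
    0.70710678118654752, 0.55557023301960218, 0.38268343236508977,
    0.19509032201612825, 0.0
};

/* cos(m*pi/16) for any integer m >= 0, using periodicity and symmetry. */
static double cos16(int m)
{
    m %= 32;                          /* period 2*pi = 32*pi/16 */
    if (m > 16) m = 32 - m;           /* cos(2*pi - t) = cos(t) */
    if (m > 8) return -COS16[16 - m]; /* cos(pi - t) = -cos(t)  */
    return COS16[m];
}

/* Naive 8x8 forward DCT-II:
 * F(u,v) = 1/4 C(u) C(v) sum_x sum_y f(x,y) cos((2x+1)u*pi/16) cos((2y+1)v*pi/16),
 * with C(0) = 1/sqrt(2) and C(k) = 1 otherwise. Input must be level-shifted. */
void fdct8x8(double f[8][8], double F[8][8])
{
    for (int u = 0; u < 8; u++)
        for (int v = 0; v < 8; v++) {
            double sum = 0.0;
            for (int x = 0; x < 8; x++)
                for (int y = 0; y < 8; y++)
                    sum += f[x][y] * cos16((2 * x + 1) * u) * cos16((2 * y + 1) * v);
            double cu = (u == 0) ? COS16[4] : 1.0;   /* COS16[4] = 1/sqrt(2) */
            double cv = (v == 0) ? COS16[4] : 1.0;
            F[u][v] = 0.25 * cu * cv * sum;
        }
}
```

For a constant block with value 10, every AC coefficient comes out as zero (up to rounding) and F(0,0) = 80, i.e. 8 times the block value. All of the block's energy ends up in the DC coefficient.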

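3. Quantization

The DCT coefficients are quantized with matrices designed around the properties of human vision, which removes visual redundancy. The eye is more sensitive to luminance than to chrominance, so two quantization tables are used: one for luminance and one for chrominance. The eye is also more sensitive to low frequencies than to high frequencies, so low-frequency coefficients are quantized finely and high-frequency coefficients coarsely.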
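A sketch of quantization and dequantization for one block. It uses the example luminance table from Annex K of the JPEG standard (ITU-T T.81, Table K.1). Encoders usually scale this table by a quality factor, and a decoder such as tinyjpeg uses whatever tables the file's DQT segments contain. The function names are hypothetical.

```c
/* Example luminance quantization table, ITU-T T.81 Annex K Table K.1 (row-major). */
static const unsigned char STD_LUMA_Q[64] = {
    16, 11, 10, 16,  24,  40,  51,  61,
    12, 12, 14, 19,  26,  58,  60,  55,
    14, 13, 16, 24,  40,  57,  69,  56,
    14, 17, 22, 29,  51,  87,  80,  62,
    18, 22, 37, 56,  68, 109, 103,  77,
    24, 35, 55, 64,  81, 104, 113,  92,
    49, 64, 78, 87, 103, 121, 120, 101,
    72, 92, 95, 98, 112, 100, 103,  99
};

/* Fq(u,v) = round(F(u,v) / Q(u,v)), rounding half away from zero.
 * The large divisors in the high-frequency corner push most coefficients to 0. */
void quantize8x8(double F[8][8], const unsigned char q[64], int Fq[8][8])
{
    for (int u = 0; u < 8; u++)
        for (int v = 0; v < 8; v++) {
            double r = F[u][v] / q[u * 8 + v];
            Fq[u][v] = (int)(r >= 0 ? r + 0.5 : r - 0.5);
        }
}

/* Decoder side: multiply back; the rounding error is lost for good. */
void dequantize8x8(int Fq[8][8], const unsigned char q[64], double F[8][8])
{
    for (int u = 0; u < 8; u++)
        for (int v = 0; v < 8; v++)
            F[u][v] = (double)Fq[u][v] * q[u * 8 + v];
}
```

For example, a DC value of 80 quantizes to 5 and is restored exactly. A high-frequency value of -50 at position (7,7) quantizes to -1 and is restored as -99.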

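4. Entropy coding

(1) Differential coding of the quantized DC coefficients

After the DCT, the DC coefficient of an 8×8 block has two properties: its value is large, and it changes little between neighboring 8×8 blocks. JPEG therefore applies differential pulse code modulation (DPCM) and encodes the difference between the quantized DC coefficients of adjacent blocks.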
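A sketch of DC DPCM for one component. JPEG keeps a separate predictor for each component, which is the role of the previous_DC field of struct component in tinyjpeg. The predictor starts at 0, as it does at the start of a scan and after a restart marker. The function names are hypothetical.

```c
/* Encoder: code each quantized DC value as the difference from the previous one. */
void dc_dpcm_encode(const int *dc, int *diff, int n)
{
    int pred = 0;                 /* predictor is 0 at scan start / after RST */
    for (int i = 0; i < n; i++) {
        diff[i] = dc[i] - pred;
        pred = dc[i];
    }
}

/* Decoder: accumulate the differences to recover the DC values. */
void dc_dpcm_decode(const int *diff, int *dc, int n)
{
    int pred = 0;
    for (int i = 0; i < n; i++) {
        pred += diff[i];
        dc[i] = pred;
    }
}
```

The DC sequence 50, 52, 51, 51 is coded as 50, 2, -1, 0. The small differences need far fewer bits than the raw values.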

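(2) Zigzag scanning and run-length coding of the AC coefficients, followed by VLC (variable-length) coding

Zigzag scan: after the DCT, most of the significant coefficients sit in the upper-left corner, i.e. the low-frequency region. Reading the coefficients in zigzag order, from low to high frequency, groups the zeros into long runs that run-length coding can exploit.

Run-length coding: in JPEG and MPEG a coefficient is written as a (run, level) pair, meaning run consecutive zeros followed by a coefficient whose value is level. In baseline JPEG the Huffman-coded symbol is strictly (run, size), followed by the amplitude bits of level. A run of 16 zeros is coded as ZRL, and the trailing zeros of a block as EOB.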
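A sketch of the zigzag scan followed by (run, level) coding of the 63 AC coefficients of a quantized block. `ZIGZAG`, `RunLevel` and `ac_run_length` are hypothetical names. As a simplification, runs longer than 15 are not split into ZRL symbols.

```c
/* ZIGZAG[i] = row-major index of the i-th coefficient in zigzag order. */
static const unsigned char ZIGZAG[64] = {
     0,  1,  8, 16,  9,  2,  3, 10,
    17, 24, 32, 25, 18, 11,  4,  5,
    12, 19, 26, 33, 40, 48, 41, 34,
    27, 20, 13,  6,  7, 14, 21, 28,
    35, 42, 49, 56, 57, 50, 43, 36,
    29, 22, 15, 23, 30, 37, 44, 51,
    58, 59, 52, 45, 38, 31, 39, 46,
    53, 60, 61, 54, 47, 55, 62, 63
};

typedef struct { int run; int level; } RunLevel;

/* Scan AC coefficients 1..63 in zigzag order and emit (run, level) pairs.
 * Trailing zeros become a single (0, 0) end-of-block pair.
 * Returns the number of pairs written (at most 63). */
int ac_run_length(const int block[64], RunLevel out[64])
{
    int n = 0, run = 0;
    for (int i = 1; i < 64; i++) {          /* i = 0 is the DC coefficient */
        int c = block[ZIGZAG[i]];
        if (c == 0) {
            run++;
            continue;
        }
        out[n].run = run;
        out[n].level = c;
        n++;
        run = 0;
    }
    if (run > 0) {                          /* EOB */
        out[n].run = 0;
        out[n].level = 0;
        n++;
    }
    return n;
}
```

Take a block whose only non-zero AC values are 3 at (0,1) and -2 at (2,0). It yields (0, 3), (1, -2) and then EOB. The single zero at (1,0) lies between the two values in zigzag order.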

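II. JPEG File Format

A JPEG file is organized as a sequence of segments with the following properties:

1. Every segment starts with 0xFF, followed by a 1-byte marker and a 2-byte segment length. The length counts its own 2 bytes but not the 2 bytes of 0xFF + marker. Standalone markers such as SOI, EOI and RSTn carry no length field.

2. Multi-byte values are stored in Motorola (big-endian) byte order rather than Intel (little-endian) order: the high byte comes first, the low byte second.

3. In the entropy-coded data, a 0xFF byte followed by 0x00 is a stuffed byte: the 0x00 is skipped and the 0xFF is treated as ordinary data.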
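The three rules above can be sketched as two functions. The first walks the segments and reads each big-endian length the way be16_to_cpu does in parse_JFIF. The second removes the stuffed 0x00 bytes from the scan data. `read_be16`, `walk_segments` and `unstuff` are hypothetical names. The walker assumes that no standalone markers (such as RSTn) appear before SOS. `unstuff` simply stops at the first real marker and does not handle restart intervals.

```c
#include <stdio.h>
#include <stddef.h>

/* Read a 16-bit big-endian ("Motorola order") value. */
static unsigned read_be16(const unsigned char *p)
{
    return ((unsigned)p[0] << 8) | p[1];
}

/* Walk the segments after SOI up to and including SOS, printing each marker.
 * Returns the offset of the first entropy-coded byte, or -1 on error. */
long walk_segments(const unsigned char *buf, size_t size)
{
    size_t pos = 2;
    if (size < 2 || buf[0] != 0xFF || buf[1] != 0xD8)   /* SOI */
        return -1;
    while (pos < size) {
        if (buf[pos] != 0xFF)
            return -1;                       /* every segment starts with 0xFF */
        while (pos < size && buf[pos] == 0xFF)
            pos++;                           /* skip 0xFF fill bytes */
        if (pos + 3 > size)
            return -1;                       /* need marker + 2 length bytes */
        unsigned marker = buf[pos++];
        unsigned len = read_be16(buf + pos); /* includes the 2 length bytes */
        if (len < 2 || pos + len > size)
            return -1;
        printf("marker 0xFF%02X, length %u\n", marker, len);
        pos += len;
        if (marker == 0xDA)                  /* SOS: scan data follows */
            return (long)pos;
    }
    return -1;
}

/* Undo byte stuffing: FF 00 in scan data stands for a literal FF.
 * Stops at the first real marker (FF followed by a non-zero byte).
 * Returns the number of bytes written to out. */
size_t unstuff(const unsigned char *in, size_t n, unsigned char *out)
{
    size_t i = 0, o = 0;
    while (i < n) {
        if (in[i] == 0xFF) {
            if (i + 1 < n && in[i + 1] == 0x00) {
                out[o++] = 0xFF;             /* keep FF, drop the stuffed 00 */
                i += 2;
                continue;
            }
            break;                           /* marker such as RSTn or EOI */
        }
        out[o++] = in[i++];
    }
    return o;
}
```

Consider a toy stream: SOI, a 4-byte DQT segment, a 2-byte SOS segment, then the scan bytes `12 FF 00 34` and EOI. The walker returns offset 12, and unstuffing the scan gives `12 FF 34`.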

Experiment Procedure

I. Test Image

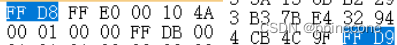

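The file starts with the SOI (start of image) marker 0xFFD8 and ends with the EOI (end of image) marker 0xFFD9.

II. Analysis of the JPEG Decoding Code

1. Flow

JPEG decoding flow:

(1) Read the file.

(2) Parse the segment markers to obtain the basic information about the image data stream, the quantization tables and the Huffman tables.

(3) From the horizontal and vertical sampling factors of each component, compute the MCU size and the number of 8×8 blocks in each MCU.

(4) Decode each MCU, decoding the blocks of each component in the MCU according to that component's horizontal and vertical sampling factors:

Huffman-decode each block to obtain its DCT coefficients;

dequantize each block's DCT coefficients and apply the IDCT to obtain Y, Cb and Cr;

when a restart marker (RST) is encountered, reset the DC predictor (the previous DC coefficient) to zero.

(5) Decoding ends when EOI is reached.

(6) Convert Y, Cb and Cr to the requested color space and save the result.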
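Step (3) can be sketched as follows for an interleaved scan. The MCU is 8·Hmax × 8·Vmax pixels, and component i contributes H_i·V_i blocks to it. `SamplingFactors`, `McuGeometry` and `mcu_geometry` are hypothetical names.

```c
typedef struct { unsigned h, v; } SamplingFactors;

typedef struct {
    unsigned mcu_w, mcu_h;     /* MCU size in pixels */
    unsigned mcus_x, mcus_y;   /* MCUs per row and per column (partial MCUs count) */
    unsigned blocks_per_mcu;   /* 8x8 blocks in one MCU, summed over components */
} McuGeometry;

McuGeometry mcu_geometry(unsigned width, unsigned height,
                         const SamplingFactors *comp, int ncomp)
{
    McuGeometry g;
    unsigned hmax = 1, vmax = 1;
    g.blocks_per_mcu = 0;
    for (int i = 0; i < ncomp; i++) {
        if (comp[i].h > hmax) hmax = comp[i].h;
        if (comp[i].v > vmax) vmax = comp[i].v;
        g.blocks_per_mcu += comp[i].h * comp[i].v;
    }
    g.mcu_w = 8 * hmax;
    g.mcu_h = 8 * vmax;
    g.mcus_x = (width + g.mcu_w - 1) / g.mcu_w;    /* round up */
    g.mcus_y = (height + g.mcu_h - 1) / g.mcu_h;
    return g;
}
```

For 4:2:0 (Y sampled 2×2, Cb and Cr 1×1) an MCU is 16×16 pixels and contains 6 blocks. This matches the Y[64*4], Cb[64] and Cr[64] buffers in struct jdec_private. A 100×75 image needs 7 × 5 such MCUs.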

2. Code

main: obtains the input and output file names and selects the output format and the decoding mode.

```c
int main(int argc, char *argv[])
{
    int output_format = TINYJPEG_FMT_YUV420P;
    char *output_filename, *input_filename;
    clock_t start_time, finish_time;
    unsigned int duration;
    int current_argument;
    int benchmark_mode = 0;

#if TRACE
    p_trace = fopen(TRACEFILE, "w");
    if (p_trace == NULL)
    {
        printf("trace file open error!");
    }
#endif

    if (argc < 3)
        usage();

    /* Leading options: only --benchmark is recognised (stay within argv) */
    current_argument = 1;
    while (current_argument < argc)
    {
        if (strcmp(argv[current_argument], "--benchmark") == 0)
            benchmark_mode = 1;
        else
            break;
        current_argument++;
    }

    /* Remaining arguments: <input> <format> <output> */
    if (argc < current_argument + 3)
        usage();

    input_filename = argv[current_argument];
    if (strcmp(argv[current_argument+1], "yuv420p") == 0)
        output_format = TINYJPEG_FMT_YUV420P;
    else if (strcmp(argv[current_argument+1], "rgb24") == 0)
        output_format = TINYJPEG_FMT_RGB24;
    else if (strcmp(argv[current_argument+1], "bgr24") == 0)
        output_format = TINYJPEG_FMT_BGR24;
    else if (strcmp(argv[current_argument+1], "grey") == 0)
        output_format = TINYJPEG_FMT_GREY;
    else
        exitmessage("Bad format: need to be one of yuv420p, rgb24, bgr24, grey\n");
    output_filename = argv[current_argument+2];

    start_time = clock();
    if (benchmark_mode)
        load_multiple_times(input_filename, output_filename, output_format);
    else
        convert_one_image(input_filename, output_filename, output_format);
    finish_time = clock();
    duration = finish_time - start_time;
    printf("Decoding finished in %u ticks\n", duration);

#if TRACE
    if (p_trace != NULL)
        fclose(p_trace);
#endif
    return 0;
}
```

struct huffman_table: stores a Huffman table together with a lookup table for fast decoding

```c
struct huffman_table
{
    /* Fast lookup table: using HUFFMAN_HASH_NBITS bits we get the symbol directly;
     * if the symbol is < 0, we need to look into the tree table */
    short int lookup[HUFFMAN_HASH_SIZE];
    /* code size: the number of bits used to encode each symbol */
    unsigned char code_size[HUFFMAN_HASH_SIZE];
    /* storage for values that are not encoded in the lookup table
     * FIXME: Calculate if 256 values are enough to store all values
     */
    uint16_t slowtable[16-HUFFMAN_HASH_NBITS][256];
};
```

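struct component: holds a component's horizontal and vertical sampling factors, its quantization and Huffman tables, and its DCT coefficients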

```c
struct component
{
    unsigned int Hfactor;             // horizontal sampling factor
    unsigned int Vfactor;             // vertical sampling factor
    float *Q_table;                   // pointer to the quantization table to use
    struct huffman_table *AC_table;   // AC Huffman table
    struct huffman_table *DC_table;   // DC Huffman table
    short int previous_DC;            /* Previous DC coefficient (DPCM predictor) */
    short int DCT[64];                /* DCT coefficients */
#if SANITY_CHECK
    unsigned int cid;
#endif
};
```

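struct jdec_private: the decoder state, including the image width and height, the start and length of the data stream, the quantization tables and the Huffman tables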

```c
struct jdec_private
{
    /* Public variables */
    uint8_t *components[COMPONENTS];
    unsigned int width, height;   /* Size of the image */
    unsigned int flags;

    /* Private variables */
    const unsigned char *stream_begin, *stream_end;
    unsigned int stream_length;

    const unsigned char *stream;  /* Pointer to the current stream */
    unsigned int reservoir, nbits_in_reservoir;

    struct component component_infos[COMPONENTS];
    float Q_tables[COMPONENTS][64];              /* quantization tables */
    struct huffman_table HTDC[HUFFMAN_TABLES];   /* DC huffman tables */
    struct huffman_table HTAC[HUFFMAN_TABLES];   /* AC huffman tables */
    int default_huffman_table_initialized;
    int restart_interval;
    int restarts_to_go;                          /* MCUs left in this restart interval */
    int last_rst_marker_seen;                    /* Rst marker is incremented each time */

    /* Temp space used after the IDCT to store each components */
    uint8_t Y[64*4], Cr[64], Cb[64];

    jmp_buf jump_state;
    /* Internal Pointer use for colorspace conversion, do not modify it !!! */
    uint8_t *plane[COMPONENTS];
};
```

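convert_one_image: loads the JPEG file into memory, decodes it and saves the result in the requested format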

```c
int convert_one_image(const char *infilename, const char *outfilename, int output_format)
{
    FILE *fp;
    unsigned int length_of_file;
    unsigned int width, height;
    unsigned char *buf;
    struct jdec_private *jdec;
    unsigned char *components[3];

    /* Load the Jpeg into memory */
    fp = fopen(infilename, "rb");
    if (fp == NULL)
        exitmessage("Cannot open filename\n");
    length_of_file = filesize(fp);
    buf = (unsigned char *)malloc(length_of_file + 4);
    if (buf == NULL)
        exitmessage("Not enough memory for loading file\n");
    fread(buf, length_of_file, 1, fp);
    fclose(fp);

    /* Decompress it */
    jdec = tinyjpeg_init();
    if (jdec == NULL)
        exitmessage("Not enough memory to alloc the structure need for decompressing\n");

    if (tinyjpeg_parse_header(jdec, buf, length_of_file) < 0)
        exitmessage(tinyjpeg_get_errorstring(jdec));

    /* Get the size of the image */
    tinyjpeg_get_size(jdec, &width, &height);

    snprintf(error_string, sizeof(error_string), "Decoding JPEG image...\n");
    if (tinyjpeg_decode(jdec, output_format) < 0)
        exitmessage(tinyjpeg_get_errorstring(jdec));

    /*
     * Get the address of each plane (at most 3 planes are supported).
     * Depending on the output mode, only some of them are filled:
     * RGB: 1 plane, YUV420P: 3 planes, GREY: 1 plane
     */
    tinyjpeg_get_components(jdec, components);

    /* Save it */
    switch (output_format)
    {
    case TINYJPEG_FMT_RGB24:
    case TINYJPEG_FMT_BGR24:
        write_tga(outfilename, output_format, width, height, components);
        break;
    case TINYJPEG_FMT_YUV420P:
        write_yuv(outfilename, width, height, components);
        break;
    case TINYJPEG_FMT_GREY:
        write_pgm(outfilename, width, height, components);
        break;
    }

    /* Only call this if the buffers were allocated by tinyjpeg_decode() */
    tinyjpeg_free(jdec);
    /* otherwise just call free(jdec); */

    free(buf);
    return 0;
}
```

tinyjpeg_parse_header: checks the SOI marker and parses the JPEG file header

```c
int tinyjpeg_parse_header(struct jdec_private *priv, const unsigned char *buf, unsigned int size)
{
    int ret;

    /* Identify the file: a JPEG file must start with the SOI marker 0xFFD8 */
    if (size < 2 || (buf[0] != 0xFF) || (buf[1] != SOI))
    {
        snprintf(error_string, sizeof(error_string), "Not a JPG file ?\n");
        return -1;
    }

    priv->stream_begin = buf + 2;
    priv->stream_length = size - 2;
    priv->stream_end = priv->stream_begin + priv->stream_length;

    ret = parse_JFIF(priv, priv->stream_begin);
    return ret;
}
```

parse_JFIF: walks the segments and dispatches on each marker until SOS is found

```c
static int parse_JFIF(struct jdec_private *priv, const unsigned char *stream)
{
    int chuck_len;
    int marker;
    int sos_marker_found = 0;
    int dht_marker_found = 0;
    const unsigned char *next_chunck;

    /* Parse marker */
    while (!sos_marker_found)
    {
        if (stream >= priv->stream_end)
            goto bogus_jpeg_format;        /* ran out of data before SOS */
        if (*stream++ != 0xff)
            goto bogus_jpeg_format;
        /* Skip any padding ff byte (this is normal) */
        while (*stream == 0xff)
            stream++;

        marker = *stream++;
        chuck_len = be16_to_cpu(stream);   /* big-endian, includes its own 2 bytes */
        next_chunck = stream + chuck_len;
        switch (marker)
        {
        case SOF:
            if (parse_SOF(priv, stream) < 0)
                return -1;
            break;
        case DQT:
            if (parse_DQT(priv, stream) < 0)
                return -1;
            break;
        case SOS:
            if (parse_SOS(priv, stream) < 0)
                return -1;
            sos_marker_found = 1;
            break;
        case DHT:
            if (parse_DHT(priv, stream) < 0)
                return -1;
            dht_marker_found = 1;
            break;
        case DRI:
            if (parse_DRI(priv, stream) < 0)
                return -1;
            break;
        default:
#if TRACE
            fprintf(p_trace, "> Unknown marker %2.2x\n", marker);
            fflush(p_trace);
#endif
            break;
        }
        stream = next_chunck;
    }

    if (!dht_marker_found) {
#if TRACE
        fprintf(p_trace, "No Huffman table loaded, using the default one\n");
        fflush(p_trace);
#endif
        build_default_huffman_tables(priv);
    }

#ifdef SANITY_CHECK
    if ((priv->component_infos[cY].Hfactor < priv->component_infos[cCb].Hfactor)
        || (priv->component_infos[cY].Hfactor < priv->component_infos[cCr].Hfactor))
    {
        snprintf(error_string, sizeof(error_string), "Horizontal sampling factor for Y should be greater than horizontal sampling factor for Cb or Cr\n");
        return -1;
    }
    if ((priv->component_infos[cY].Vfactor < priv->component_infos[cCb].Vfactor)
        || (priv->component_infos[cY].Vfactor < priv->component_infos[cCr].Vfactor))
    {
        snprintf(error_string, sizeof(error_string), "Vertical sampling factor for Y should be greater than vertical sampling factor for Cb or Cr\n");
        return -1;
    }
    if ((priv->component_infos[cCb].Hfactor != 1)
        || (priv->component_infos[cCr].Hfactor != 1)
        || (priv->component_infos[cCb].Vfactor != 1)
        || (priv->component_infos[cCr].Vfactor != 1))
    {
        snprintf(error_string, sizeof(error_string), "Sampling other than 1x1 for Cr and Cb is not supported");
        return -1;
    }
#endif

    return 0;

bogus_jpeg_format:
#if TRACE
    fprintf(p_trace, "Bogus jpeg format\n");
    fflush(p_trace);
#endif
    return -1;
}
```

parse_DQT: parses the quantization tables of a DQT segment

```c
static int parse_DQT(struct jdec_private *priv, const unsigned char *stream)
{
    int qi;
    float *table;
    const unsigned char *dqt_block_end;

#if TRACE
    fprintf(p_trace, "> DQT marker\n");
    fflush(p_trace);
#endif

    dqt_block_end = stream + be16_to_cpu(stream);
    stream += 2;   /* Skip length */

    while (stream < dqt_block_end)
    {
        qi = *stream++;   /* high nibble: precision Pq, low nibble: table id Tq */
#if SANITY_CHECK
        if (qi >> 4)
        {
            snprintf(error_string, sizeof(error_string), "16 bits quantization table is not supported\n");
            return -1;
        }
        if (qi >= COMPONENTS)   /* valid ids are 0 .. COMPONENTS-1 */
        {
            snprintf(error_string, sizeof(error_string), "No more than %d quantization tables are supported (got %d)\n", COMPONENTS, qi);
            return -1;
        }
#endif
        table = priv->Q_tables[qi];
        build_quantization_table(table, stream);
        stream += 64;
    }

#if TRACE
    fprintf(p_trace, "< DQT marker\n");
    fflush(p_trace);
#endif
    return 0;
}
```
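For comparison, here is a standalone sketch that parses a DQT payload with the precision and table-id nibbles kept separate. It accepts both 8-bit and 16-bit tables and stores the entries in natural (row-major) order. `parse_dqt_payload` and `ZZ_TO_NATURAL` are hypothetical names and are not part of tinyjpeg.

```c
#include <stddef.h>

/* Row-major index of the k-th coefficient in zigzag order. */
static const unsigned char ZZ_TO_NATURAL[64] = {
     0,  1,  8, 16,  9,  2,  3, 10, 17, 24, 32, 25, 18, 11,  4,  5,
    12, 19, 26, 33, 40, 48, 41, 34, 27, 20, 13,  6,  7, 14, 21, 28,
    35, 42, 49, 56, 57, 50, 43, 36, 29, 22, 15, 23, 30, 37, 44, 51,
    58, 59, 52, 45, 38, 31, 39, 46, 53, 60, 61, 54, 47, 55, 62, 63
};

/* Parse the payload of one DQT segment (the bytes after the 2-byte length).
 * Each table: 1 byte (Pq << 4 | Tq), then 64 entries in zigzag order,
 * 8-bit if Pq == 0 or 16-bit big-endian if Pq == 1.
 * Returns the number of tables read, or -1 on malformed input. */
int parse_dqt_payload(const unsigned char *p, size_t len, unsigned short tables[4][64])
{
    size_t pos = 0;
    int count = 0;
    while (pos < len) {
        unsigned pq = p[pos] >> 4;     /* precision */
        unsigned tq = p[pos] & 0x0F;   /* table id, 0..3 */
        pos++;
        if (pq > 1 || tq > 3)
            return -1;
        size_t entry = pq ? 2 : 1;     /* bytes per entry */
        if (pos + 64 * entry > len)
            return -1;
        for (int k = 0; k < 64; k++) {
            const unsigned char *e = p + pos + k * entry;
            unsigned v = pq ? (((unsigned)e[0] << 8) | e[1]) : e[0];
            tables[tq][ZZ_TO_NATURAL[k]] = (unsigned short)v;
        }
        pos += 64 * entry;
        count++;
    }
    return count;
}
```

Checking `tq > 3` rejects out-of-range table ids before any write. Stripping off `pq` first also keeps a 16-bit table from being used as an index.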

build_quantization_table: builds the (pre-scaled) quantization table used by the float IDCT

```c
static void build_quantization_table(float *qtable, const unsigned char *ref_table)
{
    /* Taken from libjpeg (Copyright Independent JPEG Group), float AA&N IDCT.
     * For the float AA&N IDCT method, each table entry is the quantization
     * coefficient scaled by scalefactor[row]*scalefactor[col], where
     *   scalefactor[0] = 1
     *   scalefactor[k] = cos(k*PI/16) * sqrt(2)   for k = 1..7
     * so that dequantization and this pre-scaling cost a single multiplication.
     * The DQT entries arrive in zigzag order; zigzag[] maps each natural
     * (row, col) position to its index in ref_table.
     */
    int i, j;
    static const double aanscalefactor[8] = {
        1.0, 1.387039845, 1.306562965, 1.175875602,
        1.0, 0.785694958, 0.541196100, 0.275899379
    };
    const unsigned char *zz = zigzag;
    const unsigned char *zz2 = zigzag;

    for (i = 0; i < 8; i++) {
        for (j = 0; j < 8; j++) {
            *qtable++ = ref_table[*zz++] * aanscalefactor[i] * aanscalefactor[j];
        }
    }

#if TRACE
    /* Dump the unscaled table in natural order */
    for (i = 0; i < 8; i++)
    {
        for (j = 0; j < 8; j++)
        {
            fprintf(p_trace, "%-6d", ref_table[*zz2++]);
        }
        fprintf(p_trace, "\n");
    }
#endif
}
```
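The loop above restates as a formula. For natural position (i, j), $Q_{\mathrm{zz}(i,j)}$ denotes the DQT entry whose zigzag index is `zigzag[8i+j]`, and the stored value is

$$
q_{ij} = Q_{\mathrm{zz}(i,j)}\, s_i\, s_j, \qquad
s_0 = 1, \quad s_k = \sqrt{2}\,\cos\frac{k\pi}{16}\ \ (k = 1,\dots,7).
$$

For example, $s_1 = \sqrt{2}\cos(\pi/16) \approx 1.414214 \times 0.980785 \approx 1.387040$ and $s_4 = \sqrt{2}\cos(\pi/4) = 1$. These match `aanscalefactor[1]` and `aanscalefactor[4]`.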

parse_DHT: parses the Huffman tables of a DHT segment and also writes them to the trace file

```c
static int parse_DHT(struct jdec_private *priv, const unsigned char *stream)
{
    unsigned int count, i;
    unsigned char huff_bits[17];
    int length, index;

    length = be16_to_cpu(stream) - 2;
    stream += 2;   /* Skip length */
#if TRACE
    fprintf(p_trace, "> DHT marker (length=%d)\n", length);
    fflush(p_trace);
#endif

    while (length > 0) {
        index = *stream++;   /* high nibble: class (0 = DC, 1 = AC), low nibble: table id */

        /* We need to calculate the number of bytes 'vals' will take */
        huff_bits[0] = 0;
        count = 0;
        for (i = 1; i < 17; i++) {
            huff_bits[i] = *stream++;   /* number of codes of length i */
            count += huff_bits[i];
        }
#if SANITY_CHECK
        if (count >= HUFFMAN_BITS_SIZE)
        {
            snprintf(error_string, sizeof(error_string), "No more than %d bytes are allowed to describe a huffman table", HUFFMAN_BITS_SIZE);
            return -1;
        }
        if ((index & 0xf) >= HUFFMAN_TABLES)
        {
            snprintf(error_string, sizeof(error_string), "No more than %d Huffman tables are supported (got %d)\n", HUFFMAN_TABLES, index & 0xf);
            return -1;
        }
#if TRACE
        fprintf(p_trace, "Huffman table %s[%d] length=%d\n", (index & 0xf0) ? "AC" : "DC", index & 0xf, count);
        fflush(p_trace);
#endif
#endif

        if (index & 0xf0)
            build_huffman_table(huff_bits, stream, &priv->HTAC[index & 0xf]);
        else
            build_huffman_table(huff_bits, stream, &priv->HTDC[index & 0xf]);

        length -= 1;       /* class/id byte */
        length -= 16;      /* code-length counts */
        length -= count;   /* symbol values */
        stream += count;
    }

#if TRACE
    fprintf(p_trace, "< DHT marker\n");
    fflush(p_trace);
#endif
    return 0;
}
```
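parse_DHT passes the 16 code-length counts (`huff_bits`) and the symbol values to build_huffman_table, whose body is not shown here. Any correct table builder has to derive the canonical codes defined in JPEG Annex C from these counts. Below is a minimal sketch of that step. `make_canonical_codes` is a hypothetical name. The k-th code belongs to the k-th symbol value listed in the DHT segment.

```c
/* Canonical Huffman code generation (ITU-T T.81 Annex C).
 * bits[1..16] = number of codes of each length (bits[0] unused).
 * Codes of equal length are consecutive integers; moving to the next length
 * shifts the code left by one bit. Returns the number of codes, or -1. */
int make_canonical_codes(const unsigned char bits[17],
                         unsigned short codes[256], unsigned char sizes[256])
{
    int k = 0;
    unsigned code = 0;
    for (int len = 1; len <= 16; len++) {
        for (int i = 0; i < bits[len]; i++) {
            if (k >= 256)
                return -1;              /* more codes than possible symbols */
            codes[k] = (unsigned short)code;
            sizes[k] = (unsigned char)len;
            k++;
            code++;
        }
        code <<= 1;
    }
    return k;
}
```

With the BITS list of the example DC luminance table in Annex K (0, 1, 5, 1, 1, 1, 1, 1, 1, 0, …), this gives 12 codes: 00, 010, 011, 100, 101, 110, 1110, …, 111111110.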

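tinyjpeg_decode: decodes the image MCU by MCU. In this experiment it also writes one DC sample and one AC sample per MCU to the DC and AC images.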

```c
int tinyjpeg_decode(struct jdec_private *priv, int pixfmt)
{
    unsigned int x, y, xstride_by_mcu, ystride_by_mcu;
    unsigned int bytes_per_blocklines[3], bytes_per_mcu[3];
    decode_MCU_fct decode_MCU;
    const decode_MCU_fct *decode_mcu_table;
    const convert_colorspace_fct *colorspace_array_conv;
    convert_colorspace_fct convert_to_pixfmt;
    /* One byte per MCU for the DC and AC images; DCfile and ACfile are
     * global FILE * handles opened elsewhere in the program */
    unsigned char DCbuf, ACbuf;
    unsigned char uvbuf = 128;   /* neutral chroma value */
    int count = 0;               /* number of MCUs decoded */

    if (setjmp(priv->jump_state))
        return -1;

    /* To keep gcc happy initialize some array */
    bytes_per_mcu[1] = 0;
    bytes_per_mcu[2] = 0;
    bytes_per_blocklines[1] = 0;
    bytes_per_blocklines[2] = 0;

    decode_mcu_table = decode_mcu_3comp_table;
    switch (pixfmt) {
    case TINYJPEG_FMT_YUV420P:
        colorspace_array_conv = convert_colorspace_yuv420p;
        if (priv->components[0] == NULL)
            priv->components[0] = (uint8_t *)malloc(priv->width * priv->height);
        if (priv->components[1] == NULL)
            priv->components[1] = (uint8_t *)malloc(priv->width * priv->height/4);
        if (priv->components[2] == NULL)
            priv->components[2] = (uint8_t *)malloc(priv->width * priv->height/4);
        bytes_per_blocklines[0] = priv->width;
        bytes_per_blocklines[1] = priv->width/4;
        bytes_per_blocklines[2] = priv->width/4;
        bytes_per_mcu[0] = 8;
        bytes_per_mcu[1] = 4;
        bytes_per_mcu[2] = 4;
        break;
    case TINYJPEG_FMT_RGB24:
        colorspace_array_conv = convert_colorspace_rgb24;
        if (priv->components[0] == NULL)
            priv->components[0] = (uint8_t *)malloc(priv->width * priv->height * 3);
        bytes_per_blocklines[0] = priv->width * 3;
        bytes_per_mcu[0] = 3*8;
        break;
    case TINYJPEG_FMT_BGR24:
        colorspace_array_conv = convert_colorspace_bgr24;
        if (priv->components[0] == NULL)
            priv->components[0] = (uint8_t *)malloc(priv->width * priv->height * 3);
        bytes_per_blocklines[0] = priv->width * 3;
        bytes_per_mcu[0] = 3*8;
        break;
    case TINYJPEG_FMT_GREY:
        decode_mcu_table = decode_mcu_1comp_table;
        colorspace_array_conv = convert_colorspace_grey;
        if (priv->components[0] == NULL)
            priv->components[0] = (uint8_t *)malloc(priv->width * priv->height);
        bytes_per_blocklines[0] = priv->width;
        bytes_per_mcu[0] = 8;
        break;
    default:
#if TRACE
        fprintf(p_trace, "Bad pixel format\n");
        fflush(p_trace);
#endif
        return -1;
    }

    xstride_by_mcu = ystride_by_mcu = 8;
    if ((priv->component_infos[cY].Hfactor | priv->component_infos[cY].Vfactor) == 1) {
        decode_MCU = decode_mcu_table[0];
        convert_to_pixfmt = colorspace_array_conv[0];
#if TRACE
        fprintf(p_trace, "Use decode 1x1 sampling\n");
        fflush(p_trace);
#endif
    } else if (priv->component_infos[cY].Hfactor == 1) {
        decode_MCU = decode_mcu_table[1];
        convert_to_pixfmt = colorspace_array_conv[1];
        ystride_by_mcu = 16;
#if TRACE
        fprintf(p_trace, "Use decode 1x2 sampling (not supported)\n");
        fflush(p_trace);
#endif
    } else if (priv->component_infos[cY].Vfactor == 2) {
        decode_MCU = decode_mcu_table[3];
        convert_to_pixfmt = colorspace_array_conv[3];
        xstride_by_mcu = 16;
        ystride_by_mcu = 16;
#if TRACE
        fprintf(p_trace, "Use decode 2x2 sampling\n");
        fflush(p_trace);
#endif
    } else {
        decode_MCU = decode_mcu_table[2];
        convert_to_pixfmt = colorspace_array_conv[2];
        xstride_by_mcu = 16;
#if TRACE
        fprintf(p_trace, "Use decode 2x1 sampling\n");
        fflush(p_trace);
#endif
    }

    resync(priv);

    /* Don't forget that a block can be either 8 or 16 lines */
    bytes_per_blocklines[0] *= ystride_by_mcu;
    bytes_per_blocklines[1] *= ystride_by_mcu;
    bytes_per_blocklines[2] *= ystride_by_mcu;

    bytes_per_mcu[0] *= xstride_by_mcu/8;
    bytes_per_mcu[1] *= xstride_by_mcu/8;
    bytes_per_mcu[2] *= xstride_by_mcu/8;

    /* Just decode the image by macroblock (size is 8x8, 8x16, or 16x16) */
    for (y = 0; y < priv->height/ystride_by_mcu; y++)
    {
        //trace("Decoding row %d\n", y);
        priv->plane[0] = priv->components[0] + (y * bytes_per_blocklines[0]);
        priv->plane[1] = priv->components[1] + (y * bytes_per_blocklines[1]);
        priv->plane[2] = priv->components[2] + (y * bytes_per_blocklines[2]);
        for (x = 0; x < priv->width; x += xstride_by_mcu)
        {
            decode_MCU(priv);

            /* component_infos[cY].DCT now holds the quantized coefficients of the
             * most recently decoded Y block. Map DC ((DC + 512) / 4) and the first
             * AC coefficient (AC + 128) to 0..255, clamping out-of-range values. */
            {
                double dc = (priv->component_infos[cY].DCT[0] + 512.0) / 4 + 0.5;
                int ac = priv->component_infos[cY].DCT[1] + 128;
                DCbuf = (unsigned char)(dc < 0 ? 0 : (dc > 255 ? 255 : dc));
                ACbuf = (unsigned char)(ac < 0 ? 0 : (ac > 255 ? 255 : ac));
            }
            fwrite(&DCbuf, 1, 1, DCfile);
            fwrite(&ACbuf, 1, 1, ACfile);
            count++;

            convert_to_pixfmt(priv);
            priv->plane[0] += bytes_per_mcu[0];
            priv->plane[1] += bytes_per_mcu[1];
            priv->plane[2] += bytes_per_mcu[2];
            if (priv->restarts_to_go > 0)
            {
                priv->restarts_to_go--;
                if (priv->restarts_to_go == 0)
                {
                    priv->stream -= (priv->nbits_in_reservoir/8);
                    resync(priv);
                    if (find_next_rst_marker(priv) < 0)
                        return -1;
                }
            }
        }
    }
#if TRACE
    fprintf(p_trace, "Input file size: %u\n", priv->stream_length + 2);
    fprintf(p_trace, "Input bytes actually read: %d\n", (int)(priv->stream - priv->stream_begin + 2));
    fflush(p_trace);
#endif

    /* Append neutral U and V planes (count/4 bytes each) so that the DC and AC
     * images can be viewed as YUV 4:2:0 files with one luma sample per MCU */
    for (int j = 0; j < count * 0.25 * 2; j++)
    {
        fwrite(&uvbuf, sizeof(unsigned char), 1, DCfile);
        fwrite(&uvbuf, sizeof(unsigned char), 1, ACfile);
    }
    return 0;
}
```

write_yuv: saves the decoded Y, U and V planes as separate files and as one combined .yuv file

```c
static void write_yuv(const char *filename, int width, int height, unsigned char **components)
{
    FILE *F;
    char temp[1024];

    snprintf(temp, 1024, "%s.Y", filename);
    F = fopen(temp, "wb");
    fwrite(components[0], width, height, F);
    fclose(F);

    snprintf(temp, 1024, "%s.U", filename);
    F = fopen(temp, "wb");
    fwrite(components[1], width*height/4, 1, F);
    fclose(F);

    snprintf(temp, 1024, "%s.V", filename);
    F = fopen(temp, "wb");
    fwrite(components[2], width*height/4, 1, F);
    fclose(F);

    printf("write yuv begin!\n");
    // also write Y, U and V into a single planar .yuv file
    snprintf(temp, 1024, "%s.yuv", filename);
    F = fopen(temp, "wb");
    fwrite(components[0], width, height, F);           // write Y
    fwrite(components[1], width * height / 4, 1, F);   // write U
    fwrite(components[2], width * height / 4, 1, F);   // write V
    fclose(F);                                         // close the file
    printf("write yuv done!\n");
}
```

Experiment Results

DC image

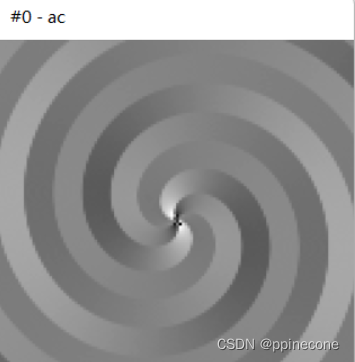

AC image

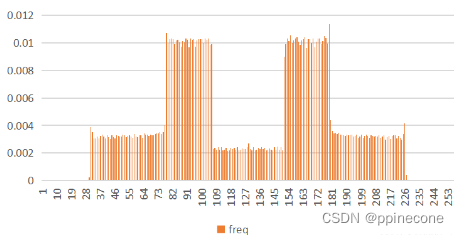

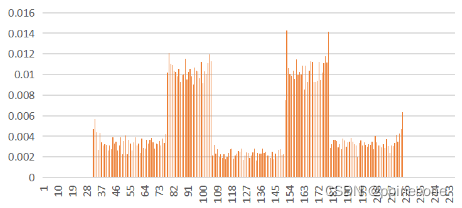

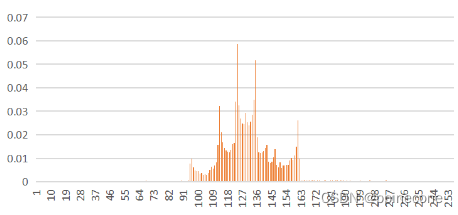

Probability distributions

[Figure: probability distributions of the original image, the DC image and the AC image]

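The plots show that the probability distribution of the DC image is roughly the same as that of the original image. The DC distribution is high at both ends and low in the middle, whereas the AC distribution is high in the middle and low at both ends. The AC peak is expected: most AC coefficients are at or near zero, which the code maps to gray level 128.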
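A minimal sketch of how such a distribution can be computed from one 8-bit plane, for example the luminance plane read from one of the .yuv files. `probability_distribution` is a hypothetical helper. The plotting step is not shown.

```c
#include <stddef.h>

/* Empirical probability distribution of an 8-bit plane:
 * p[v] = (number of samples equal to v) / n. */
void probability_distribution(const unsigned char *plane, size_t n, double p[256])
{
    size_t hist[256] = {0};
    for (size_t i = 0; i < n; i++)
        hist[plane[i]]++;
    for (int v = 0; v < 256; v++)
        p[v] = n ? (double)hist[v] / (double)n : 0.0;
}
```

For the four samples 0, 0, 255, 128 this gives p[0] = 0.5, p[255] = 0.25 and p[128] = 0.25.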