| 日期 | 内核版本 | 架构 |

|---|---|---|

| 2022-08-7立秋 | Linux5.4.200 | X86 & arm |

Linux物理内存模型

前言

本文是Linux内存管理系列文章的第一篇,先对一些常见概念有一个基本的认知。

提问环节:

- Linux支持哪几种内存模型?

- Multiprocessors系统设计内存架构的两种模式?

- Linux内存有哪三大结构?

去本文中找答案吧!

Linux内存模型

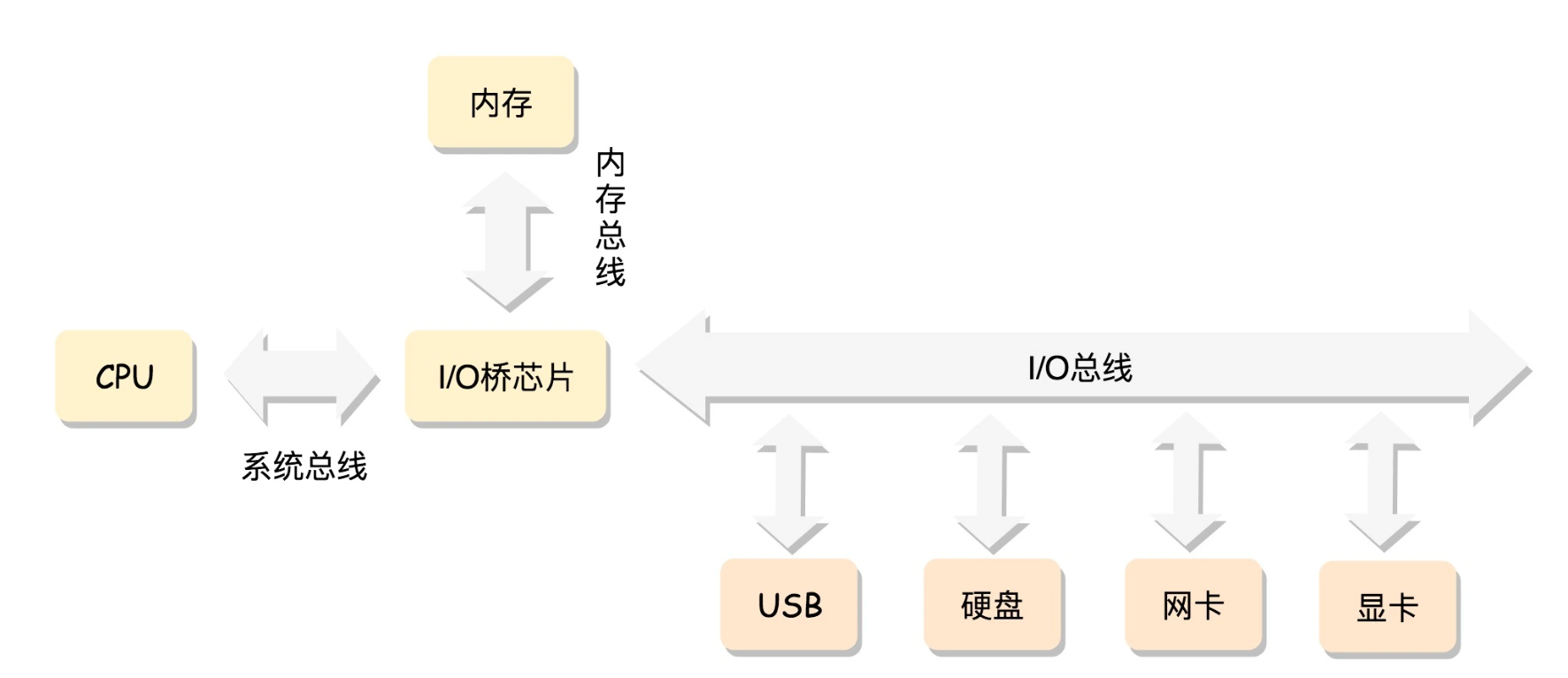

所谓的内存模型,是从CPU的角度来观察物理内存的分布,而CPU 通过总线去访问内存。

Linux kernel支持3种内存模型:

- Flat Memory Model

- Discontiguous Memory Model

- Sparse Memory Model

在经典的**平坦内存模型(Flat Memory Model)**中,物理地址是连续的,页也是连续的,每个页大小也是一样的。每个页有一个结构 struct page 表示,这个结构也是放在一个数组里面,这样根据页号,很容易通过下标找到相应的 struct page 结构。

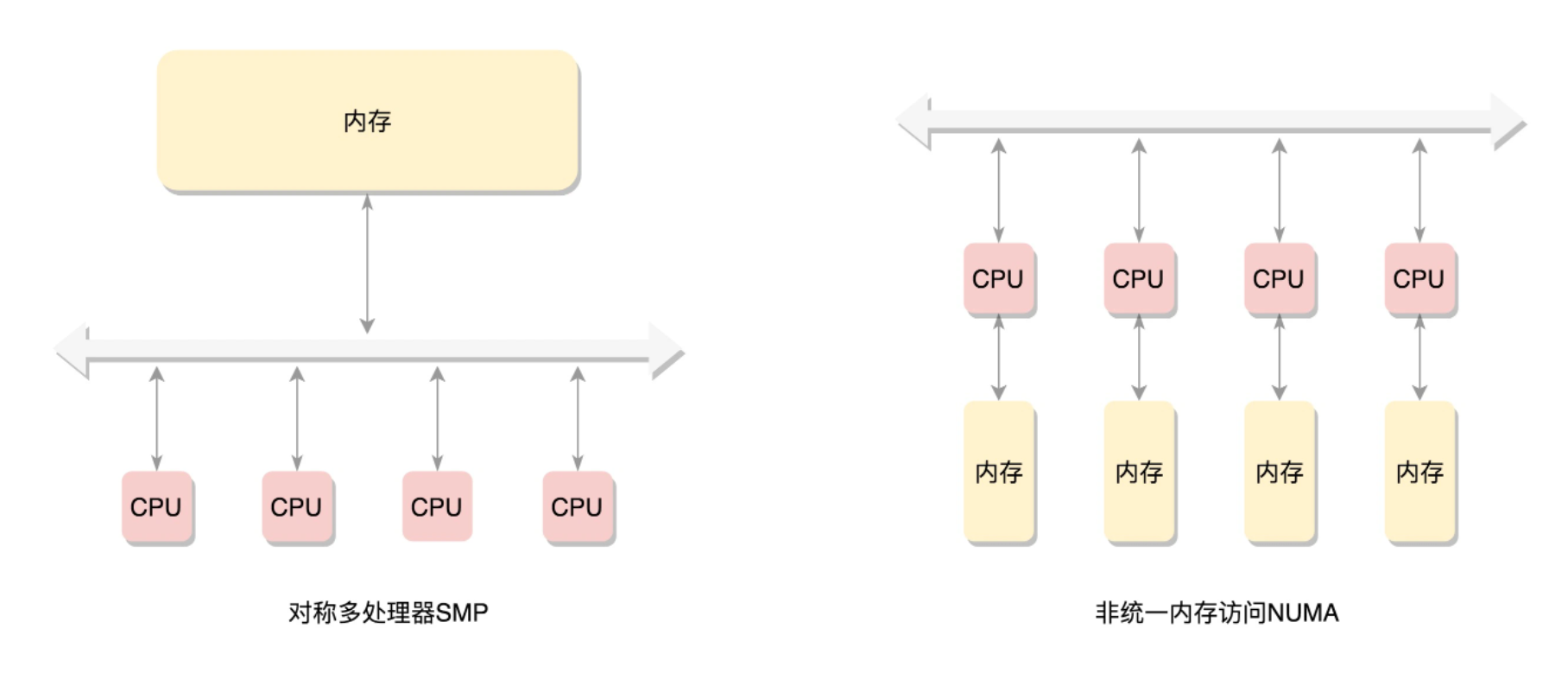

当存在多个CPU时分布在总线的一侧,所有内存组成的整体在内存的另一侧,所有的CPU访问内存都要经过总线,而且距离都相同。这就是我们熟悉的对称多处理器SMP(Symmetric multiprocessing),如下左图所示。这种模式成为UMA(uniform memory access)。针对嵌入式系统,一般采用UMA模式。不过这种模型缺点很明显,就是所有数据都要经过同一个总线,总线会成为瓶颈。

为了提高性能和可拓展性,有了另一个模式NUMA(Non-uniform memory access),非一致内存访问。如上右图所示。仔细观察内存的划分,不再是一整块,而是每个CPU有各自的内存。CPU访问本地内存不需要经过总线,CPU+本地内存成为一个NUMA节点。如果本地不够用,就要通过总线去其他NUMA节点申请内存,时间上肯定会久一些。、

NUMA往往是非连续内存模型(Discontiguous Memory Model)。内存被分成了多个节点,每个节点再被分成一个一个的页面。由于页需要全局唯一定位,页还是需要有全局唯一的页号的。但是由于物理内存不是连起来的了,页号也就不再连续了。注意,非连续内存模型不是NUMA模式的充分条件,一整块内存中物理内存地址也可能不连续。

当memory支持hotplug,**稀疏内存模型(Sparse Memory Model)**也应运而生。sparse memory最终可以替代Discontiguous memory的,这个替代过程正在进行中。

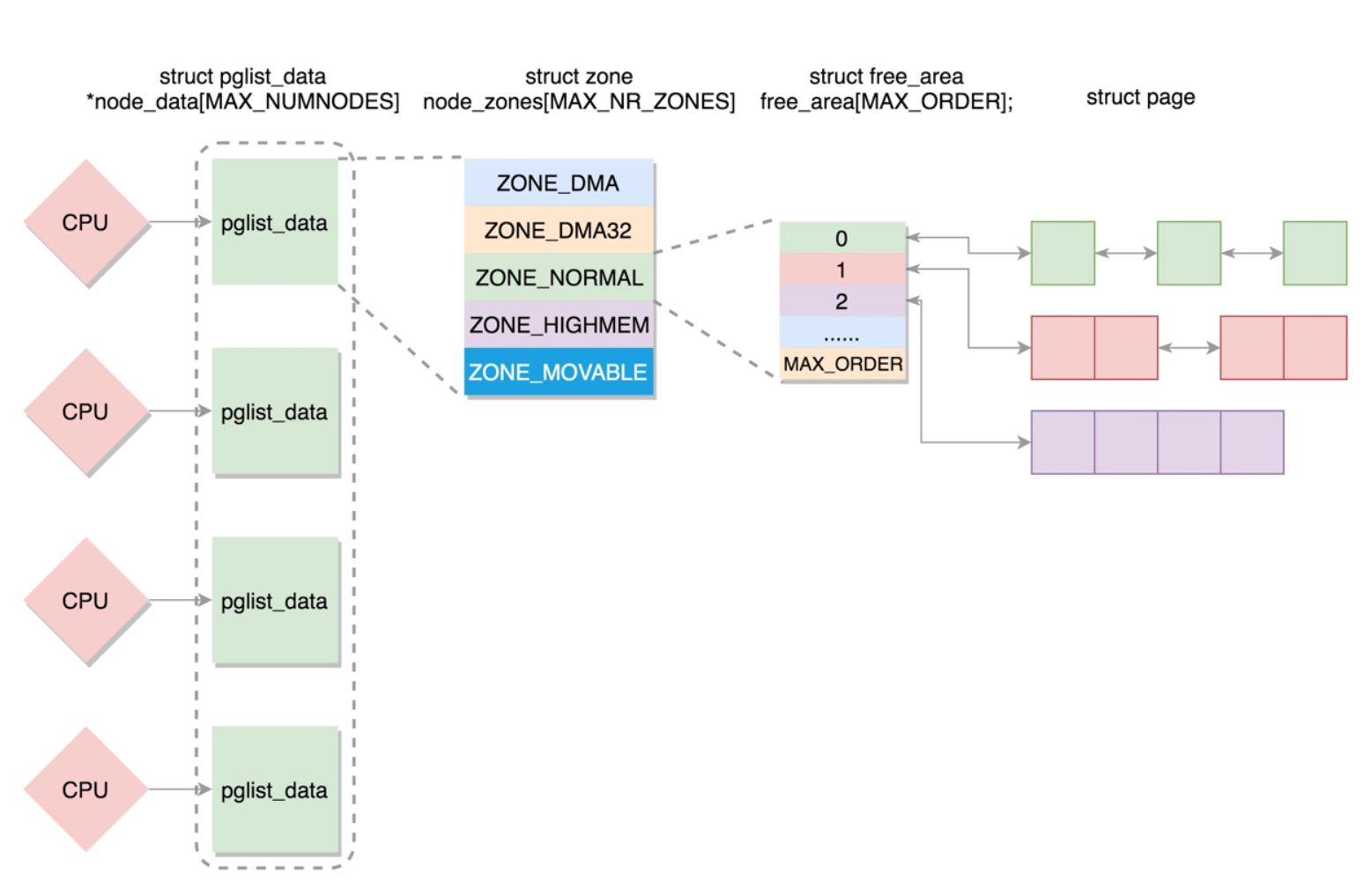

物理内存三大结构

Node

NUMA中CPU加本地内存成为一个Node,UMA中只有一个Node。

Node在Linux中用typedef struct pglist_data pg_data_t表示。

https://elixir.bootlin.com/linux/v5.4.200/source/include/linux/mmzone.h#L698

主要成员变量包括:

- node_id;每一个节点都有自己的 ID:

- node_mem_map 就是这个节点的 struct page 数组,用于描述这个节点里面的所有的页;

- node_start_pfn 是这个节点的起始页号;

- node_spanned_pages 是这个节点中包含不连续的物理内存地址的页面数;

- node_present_pages 是真正可用的物理页面的数目。

- node_zones: 每一个节点分成一个个区域 zone,放在node_zones数组里。

- nr_zones 表示当前节点的区域的数量

- node_zonelists 是备用节点和它的内存区域的情况。当本地内存不够用,就要通过总线去其他NUMA节点觅食。

typedef struct pglist_data {

struct zone node_zones[MAX_NR_ZONES];

struct zonelist node_zonelists[MAX_ZONELISTS];

int nr_zones;

struct page *node_mem_map;

unsigned long node_start_pfn;

unsigned long node_present_pages; /* total number of physical pages */

unsigned long node_spanned_pages; /* total size of physical page range, including holes */

int node_id;

......

} pg_data_t;

整个内存被分为多个节点,pglist_data放在一个数组中。

struct pglist_data *node_data[MAX_NUMNODES] __read_mostly;

Zone

每一个Node分成多个zone,放在数组 node_zones 中,大小为 MAX_NR_ZONES。我们来看区域的定义。

enum zone_type {

#ifdef CONFIG_ZONE_DMA

/*

* ZONE_DMA is used when there are devices that are not able

* to do DMA to all of addressable memory (ZONE_NORMAL). Then we

* carve out the portion of memory that is needed for these devices.

* The range is arch specific.

*

* Some examples

*

* Architecture Limit

* ---------------------------

* parisc, ia64, sparc <4G

* s390, powerpc <2G

* arm Various

* alpha Unlimited or 0-16MB.

*

* i386, x86_64 and multiple other arches

* <16M.

*/

ZONE_DMA,

#endif

#ifdef CONFIG_ZONE_DMA32

/*

* x86_64 needs two ZONE_DMAs because it supports devices that are

* only able to do DMA to the lower 16M but also 32 bit devices that

* can only do DMA areas below 4G.

*/

ZONE_DMA32,

#endif

/*

* Normal addressable memory is in ZONE_NORMAL. DMA operations can be

* performed on pages in ZONE_NORMAL if the DMA devices support

* transfers to all addressable memory.

*/

ZONE_NORMAL,

#ifdef CONFIG_HIGHMEM

/*

* A memory area that is only addressable by the kernel through

* mapping portions into its own address space. This is for example

* used by i386 to allow the kernel to address the memory beyond

* 900MB. The kernel will set up special mappings (page

* table entries on i386) for each page that the kernel needs to

* access.

*/

ZONE_HIGHMEM,

#endif

ZONE_MOVABLE,

#ifdef CONFIG_ZONE_DEVICE

ZONE_DEVICE,

#endif

__MAX_NR_ZONES

};

| 管理内存域 | 描述 |

|---|---|

| ZONE_DMA | 对于不能通过ZONE_NORMAL进行DMA访问的,需要预留这部分内存用于DMA操作 |

| ZONE_DMA32 | 用于低于4G内存访问 |

| ZONE_NORMAL | 直接映射区,从物理内存到虚拟内存的内核区域,通过加上一个常量直接映射 |

| ZONE_HIGHMEM | 高端内存区,对于 32 位系统来说超过 896M 的地方,对于 64 位没必要有的一段区域 |

| ZONE_MOVABLE | 可移动区域,通过将物理内存划分为可移动分配区域和不可移动分配区域来避免内存碎片 |

| ZONE_DEVICE | 为支持热插拔设备而分配的Non Volatile Memory非易失性内存 |

| __MAX_NR_ZONES | 充当结束标记, 在内核中想要迭代系统中所有内存域, 会用到该常量 |

Zone的结构体如下(https://elixir.bootlin.com/linux/v5.4.200/source/include/linux/mmzone.h#L417)

struct zone {

/* Read-mostly fields */

/* zone watermarks, access with *_wmark_pages(zone) macros */

unsigned long _watermark[NR_WMARK];

unsigned long watermark_boost;

unsigned long nr_reserved_highatomic;

/*

* We don't know if the memory that we're going to allocate will be

* freeable or/and it will be released eventually, so to avoid totally

* wasting several GB of ram we must reserve some of the lower zone

* memory (otherwise we risk to run OOM on the lower zones despite

* there being tons of freeable ram on the higher zones). This array is

* recalculated at runtime if the sysctl_lowmem_reserve_ratio sysctl

* changes.

*/

long lowmem_reserve[MAX_NR_ZONES];

#ifdef CONFIG_NUMA

int node;

#endif

struct pglist_data *zone_pgdat;

struct per_cpu_pageset __percpu *pageset;

#ifndef CONFIG_SPARSEMEM

/*

* Flags for a pageblock_nr_pages block. See pageblock-flags.h.

* In SPARSEMEM, this map is stored in struct mem_section

*/

unsigned long *pageblock_flags;

#endif /* CONFIG_SPARSEMEM */

/* zone_start_pfn == zone_start_paddr >> PAGE_SHIFT */

unsigned long zone_start_pfn;

/*

* spanned_pages is the total pages spanned by the zone, including

* holes, which is calculated as:

* spanned_pages = zone_end_pfn - zone_start_pfn;

*

* present_pages is physical pages existing within the zone, which

* is calculated as:

* present_pages = spanned_pages - absent_pages(pages in holes);

*

* managed_pages is present pages managed by the buddy system, which

* is calculated as (reserved_pages includes pages allocated by the

* bootmem allocator):

* managed_pages = present_pages - reserved_pages;

*

* So present_pages may be used by memory hotplug or memory power

* management logic to figure out unmanaged pages by checking

* (present_pages - managed_pages). And managed_pages should be used

* by page allocator and vm scanner to calculate all kinds of watermarks

* and thresholds.

*

* Locking rules:

*

* zone_start_pfn and spanned_pages are protected by span_seqlock.

* It is a seqlock because it has to be read outside of zone->lock,

* and it is done in the main allocator path. But, it is written

* quite infrequently.

*

* The span_seq lock is declared along with zone->lock because it is

* frequently read in proximity to zone->lock. It's good to

* give them a chance of being in the same cacheline.

*

* Write access to present_pages at runtime should be protected by

* mem_hotplug_begin/end(). Any reader who can't tolerant drift of

* present_pages should get_online_mems() to get a stable value.

*/

atomic_long_t managed_pages;

unsigned long spanned_pages;

unsigned long present_pages;

const char *name;

#ifdef CONFIG_MEMORY_ISOLATION

/*

* Number of isolated pageblock. It is used to solve incorrect

* freepage counting problem due to racy retrieving migratetype

* of pageblock. Protected by zone->lock.

*/

unsigned long nr_isolate_pageblock;

#endif

#ifdef CONFIG_MEMORY_HOTPLUG

/* see spanned/present_pages for more description */

seqlock_t span_seqlock;

#endif

int initialized;

/* Write-intensive fields used from the page allocator */

ZONE_PADDING(_pad1_)

/* free areas of different sizes */

struct free_area free_area[MAX_ORDER];

/* zone flags, see below */

unsigned long flags;

/* Primarily protects free_area */

spinlock_t lock;

/* Write-intensive fields used by compaction and vmstats. */

ZONE_PADDING(_pad2_)

/*

* When free pages are below this point, additional steps are taken

* when reading the number of free pages to avoid per-cpu counter

* drift allowing watermarks to be breached

*/

unsigned long percpu_drift_mark;

#if defined CONFIG_COMPACTION || defined CONFIG_CMA

/* pfn where compaction free scanner should start */

unsigned long compact_cached_free_pfn;

/* pfn where async and sync compaction migration scanner should start */

unsigned long compact_cached_migrate_pfn[2];

unsigned long compact_init_migrate_pfn;

unsigned long compact_init_free_pfn;

#endif

#ifdef CONFIG_COMPACTION

/*

* On compaction failure, 1<<compact_defer_shift compactions

* are skipped before trying again. The number attempted since

* last failure is tracked with compact_considered.

*/

unsigned int compact_considered;

unsigned int compact_defer_shift;

int compact_order_failed;

#endif

#if defined CONFIG_COMPACTION || defined CONFIG_CMA

/* Set to true when the PG_migrate_skip bits should be cleared */

bool compact_blockskip_flush;

#endif

bool contiguous;

ZONE_PADDING(_pad3_)

/* Zone statistics */

atomic_long_t vm_stat[NR_VM_ZONE_STAT_ITEMS];

atomic_long_t vm_numa_stat[NR_VM_NUMA_STAT_ITEMS];

} ____cacheline_internodealigned_in_smp;

主要的变量有:

- zone_start_pfn 表示属于这个 zone 的第一个页

- spanned_pages = zone_end_pfn - zone_start_pfn;根据注释: spanned_pages is the total pages spanned by the zone, including

holes, which is calculated as.指的是不管中间有没有物理内存空洞,直接最后的页号减去起始的页号,简单粗暴. - present_pages = spanned_pages - absent_pages(pages in holes);present_pages 是这个 zone 在物理内存中真实存在的所有 page 数目

- managed_pages = present_pages - reserved_pages,也即 managed_pages 是这个 zone 被伙伴系统管理的所有的 page 数目.

- per_cpu_pageset用于区分冷热页.

注: 如果一个页被加载到 CPU 高速缓存里面,这就是一个热页(Hot Page),CPU 读起来速度会快很多,如果没有就是冷页(Cold Page)

Page

page是组成物理内存的基本单位.用struc_page表示.

https://elixir.bootlin.com/linux/v5.4.200/source/include/linux/mm_types.h#L68

struct page {

unsigned long flags; /* Atomic flags, some possibly

* updated asynchronously */

/*

* Five words (20/40 bytes) are available in this union.

* WARNING: bit 0 of the first word is used for PageTail(). That

* means the other users of this union MUST NOT use the bit to

* avoid collision and false-positive PageTail().

*/

union {

struct {

/* Page cache and anonymous pages */

/**

* @lru: Pageout list, eg. active_list protected by

* pgdat->lru_lock. Sometimes used as a generic list

* by the page owner.

*/

struct list_head lru;

/* See page-flags.h for PAGE_MAPPING_FLAGS */

struct address_space *mapping;

pgoff_t index; /* Our offset within mapping. */

/**

* @private: Mapping-private opaque data.

* Usually used for buffer_heads if PagePrivate.

* Used for swp_entry_t if PageSwapCache.

* Indicates order in the buddy system if PageBuddy.

*/

unsigned long private;

};

struct {

/* page_pool used by netstack */

/**

* @dma_addr: might require a 64-bit value on

* 32-bit architectures.

*/

unsigned long dma_addr[2];

};

struct {

/* slab, slob and slub */

union {

struct list_head slab_list;

struct {

/* Partial pages */

struct page *next;

#ifdef CONFIG_64BIT

int pages; /* Nr of pages left */

int pobjects; /* Approximate count */

#else

short int pages;

short int pobjects;

#endif

};

};

struct kmem_cache *slab_cache; /* not slob */

/* Double-word boundary */

void *freelist; /* first free object */

union {

void *s_mem; /* slab: first object */

unsigned long counters; /* SLUB */

struct {

/* SLUB */

unsigned inuse:16;

unsigned objects:15;

unsigned frozen:1;

};

};

};

struct {

/* Tail pages of compound page */

unsigned long compound_head; /* Bit zero is set */

/* First tail page only */

unsigned char compound_dtor;

unsigned char compound_order;

atomic_t compound_mapcount;

};

struct {

/* Second tail page of compound page */

unsigned long _compound_pad_1; /* compound_head */

unsigned long _compound_pad_2;

/* For both global and memcg */

struct list_head deferred_list;

};

struct {

/* Page table pages */

unsigned long _pt_pad_1; /* compound_head */

pgtable_t pmd_huge_pte; /* protected by page->ptl */

unsigned long _pt_pad_2; /* mapping */

union {

struct mm_struct *pt_mm; /* x86 pgds only */

atomic_t pt_frag_refcount; /* powerpc */

};

#if ALLOC_SPLIT_PTLOCKS

spinlock_t *ptl;

#else

spinlock_t ptl;

#endif

};

struct {

/* ZONE_DEVICE pages */

/** @pgmap: Points to the hosting device page map. */

struct dev_pagemap *pgmap;

void *zone_device_data;

/*

* ZONE_DEVICE private pages are counted as being

* mapped so the next 3 words hold the mapping, index,

* and private fields from the source anonymous or

* page cache page while the page is migrated to device

* private memory.

* ZONE_DEVICE MEMORY_DEVICE_FS_DAX pages also

* use the mapping, index, and private fields when

* pmem backed DAX files are mapped.

*/

};

/** @rcu_head: You can use this to free a page by RCU. */

struct rcu_head rcu_head;

};

union {

/* This union is 4 bytes in size. */

/*

* If the page can be mapped to userspace, encodes the number

* of times this page is referenced by a page table.

*/

atomic_t _mapcount;

/*

* If the page is neither PageSlab nor mappable to userspace,

* the value stored here may help determine what this page

* is used for. See page-flags.h for a list of page types

* which are currently stored here.

*/

unsigned int page_type;

unsigned int active; /* SLAB */

int units; /* SLOB */

};

/* Usage count. *DO NOT USE DIRECTLY*. See page_ref.h */

atomic_t _refcount;

#ifdef CONFIG_MEMCG

struct mem_cgroup *mem_cgroup;

#endif

/*

* On machines where all RAM is mapped into kernel address space,

* we can simply calculate the virtual address. On machines with

* highmem some memory is mapped into kernel virtual memory

* dynamically, so we need a place to store that address.

* Note that this field could be 16 bits on x86 ... ;)

*

* Architectures with slow multiplication can define

* WANT_PAGE_VIRTUAL in asm/page.h

*/

#if defined(WANT_PAGE_VIRTUAL)

void *virtual; /* Kernel virtual address (NULL if

not kmapped, ie. highmem) */

#endif /* WANT_PAGE_VIRTUAL */

#ifdef LAST_CPUPID_NOT_IN_PAGE_FLAGS

int _last_cpupid;

#endif

} _struct_page_alignment;

谦哥定眼一看,page结构真的复杂!由于一个物理页面有很多使用模式,所以这里有很多union.

模式1: 从一整页开始用

- 一整页内存直接和虚拟地址空间建立映射的称为匿名页(Anonymous Page)

- 一整页内存先关联文件再和虚拟地址空间建立映射的称为内存映射文件(Memory-mapped File)

此时Union中的变量为:

- struct address_space *mapping 就是用于内存映射,如果是匿名页,最低位为 1;如果是映射文件,最低位为 0;

- pgoff_t index 是在映射区的偏移量;

- atomic_t _mapcount,每个进程都有自己的页表,这里指有多少个页表项指向了这个页;

- struct list_head lru 表示这一页应该在一个链表上,例如这个页面被换出,就在换出页的链表中;

- compound 相关的变量用于复合页(Compound Page),就是将物理上连续的两个或多个页看成一个独立的大页。

模式2: 仅需分配小块内存

如果某一页是用于分割成一小块一小块的内存进行分配的使用模式,则会使用 union 中的以下变量:

- s_mem 是已经分配了正在使用的 slab 的第一个对象;

- freelist 是池子中的空闲对象;

- rcu_head 是需要释放的列表。

struct page {

unsigned long flags;

union {

struct address_space *mapping;

void *s_mem; /* slab first object */

atomic_t compound_mapcount; /* first tail page */

};

union {

pgoff_t index; /* Our offset within mapping. */

void *freelist; /* sl[aou]b first free object */

};

union {

unsigned counters;

struct {

union {

atomic_t _mapcount;

unsigned int active; /* SLAB */

struct {

/* SLUB */

unsigned inuse:16;

unsigned objects:15;

unsigned frozen:1;

};

int units; /* SLOB */

};

atomic_t _refcount;

};

};

union {

struct list_head lru; /* Pageout list */

struct dev_pagemap *pgmap;

struct {

/* slub per cpu partial pages */

struct page *next; /* Next partial slab */

int pages; /* Nr of partial slabs left */

int pobjects; /* Approximate # of objects */

};

struct rcu_head rcu_head;

struct {

unsigned long compound_head; /* If bit zero is set */

unsigned int compound_dtor;

unsigned int compound_order;

};

};

union {

unsigned long private;

struct kmem_cache *slab_cache; /* SL[AU]B: Pointer to slab */

};

......

}

总结

-

Linux kernel支持3种内存模型:

- Flat Memory Model

- Discontiguous Memory Model

- Sparse Memory Model

-

Multiprocessors系统设计内存架构的两种模式

- UMA

- NUMA

-

Linux物理内存的三大结构

- Node: CPU和本地内存组成Node, 用 struct pglist_data 表示,放在一个数组里面。

- Zone: 每个节点分为多个区域,每个区域用 struct zone 表示,也放在一个数组里面

- Page: 每个区域分为多个页,每一页用 struct page 表示