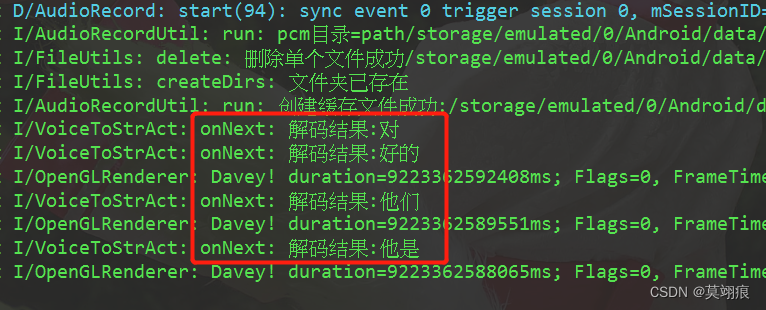

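The project needs to stream real-time audio frames to a server over gRPC. A server-side algorithm converts the speech to text, clusters it, and returns the results to the client. These are my notes from setting this up.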

1. Project root build.gradle

Add the protobuf Gradle plugin:

```groovy
buildscript {
    dependencies {
        classpath "com.google.protobuf:protobuf-gradle-plugin:0.8.17"
    }
}
```

2. Configure protobuf and gRPC in the app module's build.gradle

```groovy
plugins {
    // applied alongside the module's existing Android plugin
    id 'com.google.protobuf'
}

android {
    ...
}

...

protobuf {
    protoc {
        // protoc compiler that compiles the .proto files
        artifact = 'com.google.protobuf:protoc:3.17.2'
    }
    plugins {
        grpc {
            // protoc plugin that generates the gRPC stub classes
            artifact = 'io.grpc:protoc-gen-grpc-java:1.40.0' // CURRENT_GRPC_VERSION
        }
    }
    generateProtoTasks {
        all().each { task ->
            task.builtins {
                // generate the lite message classes used on Android
                java {
                    option 'lite'
                }
            }
            task.plugins {
                grpc {
                    // Options added to --grpc_out
                    option 'lite'
                }
            }
        }
    }
}

...

dependencies {
    // gRPC
    implementation 'io.grpc:grpc-okhttp:1.40.0' // CURRENT_GRPC_VERSION (OkHttp transport for Android)
    implementation 'io.grpc:grpc-protobuf-lite:1.40.0' // CURRENT_GRPC_VERSION
    implementation 'io.grpc:grpc-stub:1.40.0' // CURRENT_GRPC_VERSION
    compileOnly 'org.apache.tomcat:annotations-api:6.0.53' // necessary for Java 9+
}
```

3. Basic usage

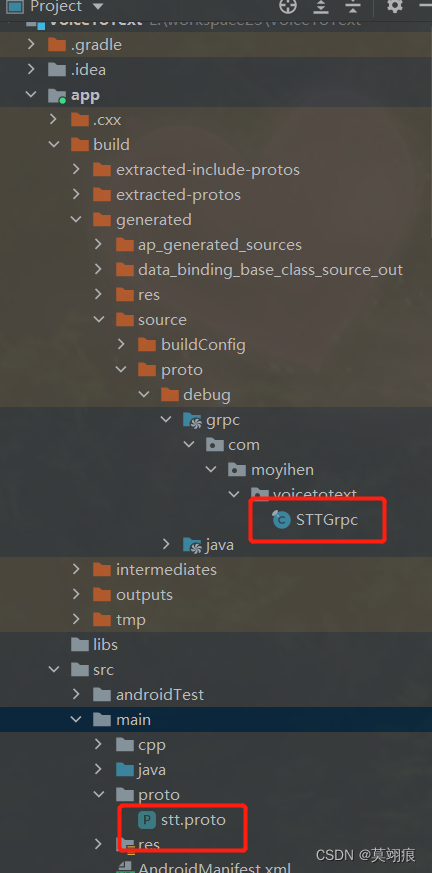

- Put the .proto files in src/main/proto.
- Re-sync the project; the plugin then generates Java classes from the .proto files.

The stt.proto file:

```protobuf
syntax = "proto3";

package com.moyihen.voicetotext;

// Service that implements the NCS Speech To Text (STT) API.
service STT {
  // Performs synchronous speech recognition: receive results after all audio
  // has been sent and processed.
  rpc Recognize(RecognizeRequest) returns (RecognizeResponse) {
  }

  // Bidirectional streaming recognition: the client streams requests and the
  // server streams results back on the same call.
  rpc StreamingRecognize(stream StreamingRecognizeRequest)
      returns (stream StreamingRecognizeResponse) {
  }
}

// The top-level message sent by the client for the `Recognize` method.
message RecognizeRequest {
  // *Required* Provides information to the recognizer that specifies how to
  // process the request.
  RecognitionConfig config = 1;

  // *Required* The audio data to be recognized.
  RecognitionAudio audio = 2;
}

message StreamingRecognizeRequest {
  // Each streaming request carries one of these fields: the recognition
  // config, the interim_results flag, or a chunk of audio content.
  oneof streaming_request {
    // StreamingRecognitionConfig streaming_config = 1;
    RecognitionConfig config = 1;
    bool interim_results = 3;
    bytes audio_content = 2;
  }
}

// Provides information to the recognizer that specifies how to process the
// request.
message StreamingRecognitionConfig {
  // *Required* Provides information to the recognizer that specifies how to
  // process the request.
  RecognitionConfig config = 1;

  bool interim_results = 3;
}

// Provides information to the recognizer that specifies how to process the
// request.
message RecognitionConfig {
  // 16000 is the field number, not a default sample rate (proto3 has no
  // custom default values); it must match the server's .proto.
  int32 sample_rate_hertz = 16000;

  bool enable_phoneme_recognition = 11;
}

message RecognitionAudio {
  // The audio source; only inline content is defined here.
  oneof audio_source {
    bytes content = 1;
  }
}

message RecognizeResponse {
  // Output only. Sequential list of transcription results corresponding to
  // sequential portions of audio.
  repeated SpeechRecognitionResult results = 2;
}

message StreamingRecognizeResponse {
  repeated StreamingRecognitionResult results = 2;
}

// A streaming speech recognition result corresponding to a portion of the audio
// that is currently being processed.
message StreamingRecognitionResult {
  repeated SpeechRecognitionAlternative alternatives = 1;

  string vp = 2;
}

// A speech recognition result corresponding to a portion of the audio.
message SpeechRecognitionResult {
  repeated SpeechRecognitionAlternative alternatives = 1;

  string vp = 2;
}

// Alternative hypotheses (a.k.a. n-best list).
message SpeechRecognitionAlternative {
  // Output only. Transcript text representing the words that the user spoke.
  string transcript = 1;
}
```
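A point worth checking in this proto: in proto3, the `= 16000` after `sample_rate_hertz` is the field number, not a default value (proto3 has no custom defaults, so an unset `int32` reads as 0). Field numbers 1 to 15 fit in a one-byte key on the wire, while larger numbers need more bytes. The plain-Java sketch below, using a hypothetical helper class `ProtoKeySketch` that is not part of protobuf-java, encodes a field key the way the protobuf wire format does (`(field_number << 3) | wire_type`, written as a base-128 varint), so the key sizes for this file can be checked directly. Renumbering the field would be a server-side decision, since client and server must agree on the numbers.

```java
import java.io.ByteArrayOutputStream;

/**
 * Sketch (hypothetical helper, not a protobuf-java API): encodes protobuf
 * field keys to show how many bytes each field number costs on the wire.
 */
final class ProtoKeySketch {
    // Wire types from the protobuf encoding spec
    static final int WIRETYPE_VARINT = 0;           // int32, bool, ...
    static final int WIRETYPE_LENGTH_DELIMITED = 2; // bytes, string, embedded messages

    private ProtoKeySketch() {}

    /** Encodes a non-negative value as a base-128 varint, low 7 bits first. */
    static byte[] encodeVarint(long value) {
        if (value < 0) {
            throw new IllegalArgumentException("negative values are not handled in this sketch");
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        while (value >= 0x80) {
            out.write((int) ((value & 0x7F) | 0x80)); // set the continuation bit
            value >>>= 7;
        }
        out.write((int) value); // last byte has no continuation bit
        return out.toByteArray();
    }

    /** A field key is (fieldNumber << 3) | wireType, itself varint-encoded. */
    static byte[] encodeKey(int fieldNumber, int wireType) {
        return encodeVarint(((long) fieldNumber << 3) | wireType);
    }
}
```

For example, `encodeKey(16000, WIRETYPE_VARINT)` yields the three bytes `0x80 0xE8 0x07`, while the key of `audio_content` (`encodeKey(2, WIRETYPE_LENGTH_DELIMITED)`) is the single byte `0x12`.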

3.1 Initialize gRPC

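```java
import android.util.Log;

import com.moyihen.voicetotext.STTGrpc;
import com.moyihen.voicetotext.Stt;

import io.grpc.ManagedChannel;
import io.grpc.ManagedChannelBuilder;
import io.grpc.Status;
import io.grpc.stub.StreamObserver;

// Members of the enclosing class (TAG is assumed to be defined there as well)
private ManagedChannel mChannel;
private STTGrpc.STTStub mSttStub;
private StreamObserver<Stt.StreamingRecognizeRequest> mObserver;
private boolean isFirst;

private void initGRPC() {
    Log.i(TAG, "grpc: creating channel");
    isFirst = true;

    // 1. Create the channel (plaintext, no TLS)
    mChannel = ManagedChannelBuilder
            .forAddress("112.26.212.59", 11157)
            .enableRetry()
            .usePlaintext()
            .build();

    // 2. Create the stub. Bidirectional streaming requires the async stub;
    //    the blocking stub cannot make client-streaming or bidi calls.
    // STTGrpc.STTBlockingStub sttBlockingStub = STTGrpc.newBlockingStub(mChannel);
    mSttStub = STTGrpc.newStub(mChannel);

    // 3. Start the bidirectional-streaming call; mObserver sends requests to the server
    mObserver = mSttStub.streamingRecognize(new StreamObserver<Stt.StreamingRecognizeResponse>() {
        @Override
        public void onNext(Stt.StreamingRecognizeResponse value) {
            // Skip responses that carry no result or no alternative
            if (value.getResultsCount() > 0 && value.getResults(0).getAlternativesCount() > 0) {
                Log.i(TAG, "onNext: data from server: "
                        + value.getResults(0).getAlternatives(0).getTranscript());
            }
        }

        @Override
        public void onError(Throwable t) {
            // Connection errors arrive here, e.g. io.grpc.StatusRuntimeException: UNAVAILABLE.
            // The call is finished after onError; a new call is needed to keep streaming.
            Status.Code code = Status.fromThrowable(t).getCode();
            Log.e(TAG, "onError: " + code + " -- " + t.getMessage());
        }

        @Override
        public void onCompleted() {
            Log.i(TAG, "onCompleted: ");
        }
    });
}
```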
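Once `onError` fires (for example with `UNAVAILABLE`), that `streamingRecognize` call is over: the observer returned by it cannot be reused, and streaming only resumes if the app starts a new call, for instance by running the init method again. Restarting immediately in a loop can hammer an unreachable server, so spacing restarts out is common. Below is a minimal sketch of a capped exponential backoff; the class name `StreamRestartBackoff` and the example values (1 s initial delay, doubling, 30 s cap) are assumptions, not part of the original project or of grpc-java.

```java
/**
 * Sketch (hypothetical class): capped exponential backoff for restarting
 * a failed gRPC stream. Parameter values are chosen by the caller.
 */
final class StreamRestartBackoff {
    private final long initialDelayMillis;
    private final long maxDelayMillis;
    private final double multiplier;
    private long currentDelayMillis;

    StreamRestartBackoff(long initialDelayMillis, long maxDelayMillis, double multiplier) {
        if (initialDelayMillis <= 0 || maxDelayMillis < initialDelayMillis || multiplier < 1.0) {
            throw new IllegalArgumentException("invalid backoff parameters");
        }
        this.initialDelayMillis = initialDelayMillis;
        this.maxDelayMillis = maxDelayMillis;
        this.multiplier = multiplier;
        this.currentDelayMillis = initialDelayMillis;
    }

    /** Returns how long to wait before the next restart, then grows the delay up to the cap. */
    long nextDelayMillis() {
        long delay = currentDelayMillis;
        currentDelayMillis = Math.min(maxDelayMillis, (long) (currentDelayMillis * multiplier));
        return delay;
    }

    /** Call once a stream delivers responses again, so the next failure starts small. */
    void reset() {
        currentDelayMillis = initialDelayMillis;
    }
}
```

With `new StreamRestartBackoff(1000, 30000, 2.0)` the delays run 1000, 2000, 4000, 8000, 16000, 30000, 30000 ms. On Android, `onError` could post the init method to a `Handler` with `postDelayed(runnable, backoff.nextDelayMillis())` and `onNext` could call `reset()`. Because the init method builds a new channel each time, shutting the old one down first (`mChannel.shutdownNow()`) avoids leaking channels.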

3.2 Send data to the server

Here I test pushing audio data to the server in real time for speech-to-text.
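How large each audio chunk is depends on the recorder settings, which the original does not state. Assuming 16 kHz, 16-bit (2-byte), mono PCM, 20 ms of audio is 16000 × 2 × 1 × 0.020 = 640 bytes. The plain-Java sketch below (hypothetical helper `PcmChunker`; the format and the 20 ms duration are example assumptions) computes that size and splits a PCM buffer into chunks of it.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Sketch (hypothetical helper): split raw PCM audio into fixed-duration chunks. */
final class PcmChunker {
    private PcmChunker() {}

    /** Bytes needed for durationMillis of PCM audio in the given format. */
    static int bytesPerChunk(int sampleRateHz, int bytesPerSample, int channels, int durationMillis) {
        long bytes = (long) sampleRateHz * bytesPerSample * channels * durationMillis / 1000;
        if (bytes <= 0 || bytes > Integer.MAX_VALUE) {
            throw new IllegalArgumentException("unsupported audio format or duration");
        }
        return (int) bytes;
    }

    /** Splits pcm into chunks of chunkBytes each; the last chunk may be shorter. */
    static List<byte[]> split(byte[] pcm, int chunkBytes) {
        if (chunkBytes <= 0) {
            throw new IllegalArgumentException("chunkBytes must be positive");
        }
        List<byte[]> chunks = new ArrayList<>();
        for (int offset = 0; offset < pcm.length; offset += chunkBytes) {
            int end = Math.min(pcm.length, offset + chunkBytes);
            chunks.add(Arrays.copyOfRange(pcm, offset, end));
        }
        return chunks;
    }
}
```

Each returned chunk would become one request built with `setAudioContent(ByteString.copyFrom(chunk))`. Keeping the chunk size a multiple of `bytesPerSample * channels` (640 is) avoids splitting a sample across two requests.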

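```java
import com.google.protobuf.ByteString;
import io.grpc.ConnectivityState;

// Send one frame of audio to the server.
// voice: the captured audio bytes for this frame; config: the Stt.RecognitionConfig to use.
if (isFirst) {
    // The first message on the stream carries only the recognition config
    isFirst = false;
    Stt.StreamingRecognizeRequest configRequest = Stt.StreamingRecognizeRequest.newBuilder()
            .setConfig(config)
            .build();
    mObserver.onNext(configRequest);
    // then fall through so this frame's audio is sent as well
}

// Query the channel state; getState(true) also asks an IDLE channel to start connecting.
ConnectivityState state = mChannel.getState(true);
Log.i(TAG, "voice: ConnectivityState: " + state);
if (state != ConnectivityState.READY) {
    Log.i(TAG, "voice: channel not ready, not sending data");
    return;
}

// Every later message carries one chunk of raw audio
Stt.StreamingRecognizeRequest request = Stt.StreamingRecognizeRequest.newBuilder()
        .setAudioContent(ByteString.copyFrom(voice))
        .build();
mObserver.onNext(request);
```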
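The state check drops every frame while the channel is not `READY`, so speech captured during a brief reconnect is lost. One alternative, sketched here as a different design rather than the author's approach, is to keep the most recent frames in a small bounded buffer and flush them, oldest first, once the channel is `READY` again (after a stream restart, the config message still has to go first). The class name `BoundedFrameBuffer` and the capacity are hypothetical; methods are `synchronized` on the assumption that the recording thread and the gRPC callback thread both touch the buffer.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

/** Sketch (hypothetical class): keeps at most `capacity` recent frames, evicting the oldest. */
final class BoundedFrameBuffer {
    private final ArrayDeque<byte[]> frames = new ArrayDeque<>();
    private final int capacity;
    private int droppedCount;

    BoundedFrameBuffer(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        this.capacity = capacity;
    }

    /** Adds a frame; if the buffer is full, the oldest frame is discarded first. */
    synchronized void add(byte[] frame) {
        if (frames.size() == capacity) {
            frames.pollFirst();
            droppedCount++;
        }
        frames.addLast(frame);
    }

    /** Removes and returns all buffered frames, oldest first. */
    synchronized List<byte[]> drain() {
        List<byte[]> out = new ArrayList<>(frames);
        frames.clear();
        return out;
    }

    synchronized int size() {
        return frames.size();
    }

    /** Number of frames evicted because the buffer was full. */
    synchronized int droppedCount() {
        return droppedCount;
    }
}
```

With 20 ms frames, a capacity of 50 would hold roughly the last second of audio; how much to keep is a trade-off between recovering speech and sending stale audio after a long outage.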