Preface

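In this part we customize a "classic network", i.e. a network model that provides both an internal and an external network. External connectivity comes from the provider network, and internal connectivity from the internal network.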

I. Preparation

In VMware, add a host-only network adapter to both the control and the compute hosts.

Once the adapters are added, boot the control and compute hosts and configure the new NIC, eth1, on each of them.

cd /etc/sysconfig/network-scripts

cp ifcfg-eth0 ifcfg-eth1

vim ifcfg-eth1

NAME=eth1

DEVICE=eth1

# On the control node, set the IP address to 192.168.162.15 instead

IPADDR=192.168.162.14

NETMASK=255.255.255.0

Note: because this is a host-only adapter, no gateway or DNS needs to be set here.
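Because ifcfg-eth1 starts life as a copy of ifcfg-eth0, any GATEWAY, DNS, UUID or HWADDR lines in the copy still describe eth0. A minimal bash sketch of the copy-and-edit step follows; `make_ifcfg_eth1` is a hypothetical helper, and forcing `BOOTPROTO=static` is an assumption that fits a fixed host-only address.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build ifcfg-eth1 from a copy of ifcfg-eth0.
# Usage: make_ifcfg_eth1 <src ifcfg-eth0> <dst ifcfg-eth1> <ip> [netmask]
make_ifcfg_eth1() {
  local src=$1 dst=$2 ip=$3 mask=${4:-255.255.255.0}
  # Keep generic keys (TYPE, ONBOOT, ...) but drop keys that identify eth0
  # (NAME, DEVICE, UUID, HWADDR), its addressing, and the gateway/DNS
  # settings a host-only NIC should not carry.
  grep -vE '^(NAME|DEVICE|IPADDR[0-9]*|NETMASK[0-9]*|PREFIX[0-9]*|GATEWAY|DNS[0-9]+|UUID|HWADDR|BOOTPROTO)=' \
    "$src" > "$dst"
  # Append the eth1-specific settings (static addressing is an assumption).
  printf '%s\n' "NAME=eth1" "DEVICE=eth1" "BOOTPROTO=static" \
    "IPADDR=$ip" "NETMASK=$mask" >> "$dst"
}
```

For example, run from /etc/sysconfig/network-scripts as root: `make_ifcfg_eth1 ifcfg-eth0 ifcfg-eth1 192.168.162.14`, then review the result before bringing the interface up.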

When done, bring up eth1.

ifup eth1

II. Customizing the Network

1. Control node configuration

- Add the internal network label:

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2_type_flat]

flat_networks = provider,internal

- Make the internal network communicate over eth1:

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth0,internal:eth1

When done, restart the networking services.

systemctl restart neutron-server neutron-linuxbridge-agent

systemctl status neutron-server neutron-linuxbridge-agent

2. Compute node configuration

On the compute node, only linuxbridge_agent.ini needs to be modified.
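Every label listed in `flat_networks` on the control node (here `provider,internal`) should appear in the `physical_interface_mappings` of each node that will host ports on that network; otherwise the Linux bridge agent on that node has no interface to attach the network to. A small bash sketch that reports unmapped labels, with `check_mappings` as a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print every flat_networks label that has no entry
# in physical_interface_mappings; return 1 if any label is missing.
check_mappings() {
  local flat=$1 mappings=$2 missing=0 label pair found
  local -a labels pairs
  IFS=',' read -ra labels <<< "$flat"
  IFS=',' read -ra pairs <<< "$mappings"
  for label in "${labels[@]}"; do
    label=${label// /}              # tolerate "provider, internal"
    found=0
    for pair in "${pairs[@]}"; do
      pair=${pair// /}
      # each mapping has the form <label>:<interface>
      if [[ ${pair%%:*} == "$label" ]]; then
        found=1
        break
      fi
    done
    if (( found == 0 )); then
      echo "$label"
      missing=1
    fi
  done
  return "$missing"
}
```

For this setup, `check_mappings "provider,internal" "provider:eth0,internal:eth1"` prints nothing and succeeds; a node mapped only as `provider:eth0` would be reported as missing `internal`.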

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth0,internal:eth1

When done, restart the service.

systemctl restart neutron-linuxbridge-agent

systemctl status neutron-linuxbridge-agent

3. Create the internal network

- Check the current networks and confirm the services are healthy

- Create the internal network

- Create the internal subnet
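The subnet command in this step places an allocation pool (192.168.162.100 to 192.168.162.120) and a gateway (192.168.162.2) inside 192.168.162.0/24. Checking those values offline before running the commands can catch typos early. A minimal bash sketch; `ip2int`, `in_cidr` and `check_subnet` are hypothetical helpers, not part of the openstack CLI:

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to an integer.
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr <ip> <network/prefix>: succeed if ip lies inside the CIDR.
in_cidr() {
  local net=${2%/*} prefix=${2#*/}
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ($(ip2int "$1") & mask) == ($(ip2int "$net") & mask) ))
}

# check_subnet <cidr> <pool start> <pool end> <gateway>
check_subnet() {
  local cidr=$1 start=$2 end=$3 gw=$4
  in_cidr "$start" "$cidr" || { echo "pool start outside $cidr"; return 1; }
  in_cidr "$end" "$cidr"   || { echo "pool end outside $cidr"; return 1; }
  (( $(ip2int "$start") <= $(ip2int "$end") )) || { echo "pool start > end"; return 1; }
  in_cidr "$gw" "$cidr"    || { echo "gateway outside $cidr"; return 1; }
  # Neutron refuses a gateway that falls inside an allocation pool.
  if (( $(ip2int "$gw") >= $(ip2int "$start") && $(ip2int "$gw") <= $(ip2int "$end") )); then
    echo "gateway inside allocation pool"
    return 1
  fi
  echo "ok"
}
```

With the values used here, `check_subnet 192.168.162.0/24 192.168.162.100 192.168.162.120 192.168.162.2` prints `ok`.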

# On the control node, check the current networks and confirm the services are healthy

source openstack-admin.sh

openstack network agent list

openstack compute service list

openstack network list

# Create the internal network with the flat network type

openstack network create --share --internal --provider-physical-network internal --provider-network-type flat internal

# Create the subnet

openstack subnet create --network internal --allocation-pool start=192.168.162.100,end=192.168.162.120 --dns-nameserver 223.5.5.5 --gateway 192.168.162.2 --subnet-range 192.168.162.0/24 internal

# Verify

openstack network list

4. Enabling the second NIC

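1) First, attach the internal network created above to any instance; this can be done in the dashboard.

2) Connect to the instance over SSH.

3) Enable support for the second NIC: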

sudo su -

vi /etc/network/interfaces

# Append the following two lines so that eth1 is brought up

auto eth1

iface eth1 inet dhcp

When done, simply bring up eth1.

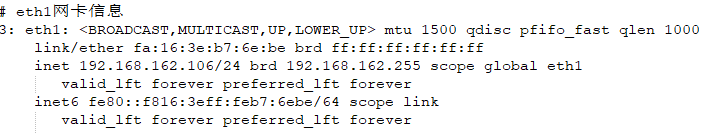

ifup eth1

ip addr show eth1
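If this step is scripted or repeated, appending the stanza twice leaves duplicate entries in /etc/network/interfaces. A minimal, idempotent bash sketch, assuming an ifupdown-style interfaces file as in the guest image used here; `add_dhcp_iface` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "auto <iface>" / "iface <iface> inet dhcp"
# to an ifupdown-style interfaces file unless the interface is already there.
add_dhcp_iface() {
  local file=$1 iface=${2:-eth1}
  # Match "iface eth1 ..." but not "iface eth10 ...".
  if grep -qE "^[[:space:]]*iface[[:space:]]+${iface}[[:space:]]" "$file"; then
    echo "$iface already configured in $file"
    return 0
  fi
  printf '\nauto %s\niface %s inet dhcp\n' "$iface" "$iface" >> "$file"
}
```

Run it as root inside the instance, e.g. `add_dhcp_iface /etc/network/interfaces eth1 && ifup eth1`.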

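III. Scaling Out Nodes

The following walks through adding a new compute node.

1. Preparation

- Check that the network and related services are healthy

- Change the yum repository

- Install the nova packages

- Install the networking packages

# Check that the network and services are healthy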

source openstack-admin.sh

openstack compute service list

openstack network agent list

# Change the yum repository

scp <user>@<existing-node-IP>:/etc/yum.repos.d/CentOS-Base-163.repo /etc/yum.repos.d

# Install nova

yum install -y openstack-nova-compute --nogpgcheck

# Install the networking packages

yum install -y openstack-neutron-linuxbridge --nogpgcheck

yum install -y conntrack-tools --nogpgcheck

2. Configure nova

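- Configure networking

- Enable the compute and metadata APIs

- Configure the RabbitMQ address

- Configure Keystone authentication

- Configure the libvirt virtualization type (KVM/QEMU)

- Configure resource tracking (Placement)

- Configure VNC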
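This node's nova.conf sets `[libvirt] virt_type = qemu`, the safe choice when the VM acting as a compute node exposes no hardware virtualization. The OpenStack installation guide makes this decision by counting `vmx`/`svm` flags in /proc/cpuinfo; the bash sketch below wraps that check in a hypothetical `pick_virt_type` helper whose cpuinfo path is a parameter so it can be tried on a sample file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "kvm" if the CPU advertises hardware
# virtualization (vmx = Intel VT-x, svm = AMD-V), otherwise "qemu".
pick_virt_type() {
  local cpuinfo=${1:-/proc/cpuinfo} count
  # grep -c prints 0 (and exits 1) when nothing matches; "|| true" keeps
  # that harmless under "set -e".
  count=$(grep -cE 'vmx|svm' "$cpuinfo" || true)
  if (( ${count:-0} > 0 )); then
    echo kvm
  else
    echo qemu
  fi
}
```

On the new node, `pick_virt_type` with no argument inspects /proc/cpuinfo; a result of `qemu` matches the setting used in this guide.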

vim /etc/nova/nova.conf

[DEFAULT]

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:<RABBIT_PASS>@192.168.88.14:5672

vif_plugging_is_fatal = false

vif_plugging_timeout = 10

[api]

auth_strategy = keystone

[glance]

api_servers = http://192.168.88.14:9292

[keystone_authtoken]

www_authenticate_uri = http://192.168.88.14:5000

auth_url = http://192.168.88.14:35357

memcached_servers = 192.168.88.14:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = nova

[libvirt]

virt_type = qemu

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://192.168.88.14:35357/v3

username = placement

password = placement

[vnc]

enabled = true

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = 192.168.88.15

novncproxy_base_url = http://192.168.88.14:6080/vnc_auto.html

When done, start the nova-related services and enable them at boot.

systemctl start libvirtd openstack-nova-compute

systemctl status libvirtd openstack-nova-compute

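systemctl enable libvirtd openstack-nova-compute

Finally, verify that the services are working.

# Load the admin credentials

source openstack-admin.sh

# Check that the nova-compute service is up

openstack compute service list

# Register the new compute node in the cell database

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

# Check that the cells and the Placement API are working

nova-status upgrade check

3. Configure neutron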

- Enable IPv6 in the kernel parameters

- Configure Keystone authentication

- Configure the RabbitMQ address

- Configure the lock file path

# Enable IPv6:

vim /etc/sysctl.conf

net.ipv6.conf.all.disable_ipv6 = 0

net.ipv6.conf.default.disable_ipv6 = 0

net.ipv6.conf.lo.disable_ipv6 = 0

# Apply persistently (runs sysctl -p at every login)

echo 'sysctl -p' >> /etc/profile
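Editing /etc/sysctl.conf by hand works, but repeating the step can leave duplicate keys. A minimal bash/awk sketch that rewrites a key in place or appends it once; `set_sysctl` and `enable_ipv6_conf` are hypothetical helpers, not standard tools:

```bash
#!/usr/bin/env bash
# Hypothetical helper: set "key = value" in a sysctl-style file.
# The first line for the key is replaced, later duplicates are dropped,
# and the key is appended if it was not present at all.
set_sysctl() {
  local file=$1 key=$2 value=$3 tmp
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    {
      eq = index($0, "=")
      name = (eq > 0) ? substr($0, 1, eq - 1) : ""
      gsub(/[ \t]/, "", name)            # "#..." lines never match k
      if (name == k) {
        if (!done) print k " = " v
        done = 1
        next
      }
      print
    }
    END { if (!done) print k " = " v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file's inode and mode
  rm -f "$tmp"
}

# Set the three IPv6 keys used in this step to 0.
enable_ipv6_conf() {
  local file=${1:-/etc/sysctl.conf} iface
  for iface in all default lo; do
    set_sysctl "$file" "net.ipv6.conf.${iface}.disable_ipv6" 0
  done
}
```

`enable_ipv6_conf /etc/sysctl.conf && sysctl -p` applies the settings immediately, since `sysctl -p` without an argument loads /etc/sysctl.conf.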

vim /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

transport_url = rabbit://openstack:<RABBIT_PASS>@192.168.88.14:5672

[keystone_authtoken]

auth_uri = http://192.168.88.14:5000

auth_url = http://192.168.88.14:35357

memcached_servers = 192.168.88.14:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

4. Configure the bridge

- Configure the physical NIC to use

- Disable VXLAN

- Configure security groups
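After editing linuxbridge_agent.ini, reading the values back is a cheap way to confirm they landed in the intended section. The bash/awk sketch below, with `ini_get` as a hypothetical helper, prints one key from one section and skips `#`/`;` comment lines; it does not handle continuation lines or repeated sections.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of <key> in [<section>] of an INI file.
# Usage: ini_get <file> <section> <key>
ini_get() {
  local file=$1 section=$2 key=$3
  awk -v want="[$section]" -v k="$key" '
    # Section header: normalise "[ name ]" to "[name]" and remember it.
    /^[ \t]*\[/ { cur = $0; gsub(/[ \t]/, "", cur); next }
    cur == want {
      line = $0
      sub(/^[ \t]+/, "", line)
      if (line ~ /^[#;]/) next          # skip comment lines
      eq = index(line, "=")
      if (eq == 0) next                 # skip blank or malformed lines
      name = substr(line, 1, eq - 1)
      sub(/[ \t]+$/, "", name)
      if (name == k) {
        val = substr(line, eq + 1)
        sub(/^[ \t]+/, "", val)
        sub(/[ \t]+$/, "", val)
        print val
        exit
      }
    }
  ' "$file"
}
```

Once this step is done, `ini_get /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan` should print `false`.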

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth0

[vxlan]

enable_vxlan = false

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

5. Modify the nova configuration

- Add the networking configuration

vim /etc/nova/nova.conf

[neutron]

url = http://192.168.88.14:9696

auth_url = http://192.168.88.14:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

When done, restart the related services.

# Restart openstack-nova-compute

systemctl restart openstack-nova-compute

systemctl status openstack-nova-compute

# Start neutron-linuxbridge-agent

systemctl start neutron-linuxbridge-agent

systemctl status neutron-linuxbridge-agent

systemctl enable neutron-linuxbridge-agent

Finally, verify that the services are working.

# List all network agents

openstack network agent list

# List all compute services

openstack compute service list

IV. Removing Nodes

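1. Remove the compute node

- Find the ID of the compute node to remove

- Delete the compute node

- Stop the nova-related services

# List all compute services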

openstack compute service list

+----+----------------+----------+----------+----------+-------+----------------------------+
| ID | Binary         | Host     | Zone     | Status   | State | Updated At                 |
+----+----------------+----------+----------+----------+-------+----------------------------+
|  5 | nova-console   | control  | internal | enabled  | up    | 2021-04-02T10:05:44.000000 |
|  6 | nova-scheduler | control  | internal | enabled  | up    | 2021-04-02T10:05:43.000000 |
|  7 | nova-conductor | control  | internal | enabled  | up    | 2021-04-02T10:05:44.000000 |
|  8 | nova-compute   | compute  | nova     | enabled  | down  | 2021-04-02T07:38:49.000000 |
|  9 | nova-compute   | image    | nova     | disabled | down  | 2021-04-02T09:58:55.000000 |
| 10 | nova-compute   | compute2 | nova     | enabled  | up    | 2021-04-02T10:05:39.000000 |
+----+----------------+----------+----------+----------+-------+----------------------------+
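Reading the ID off this table by eye is error-prone when several nova-compute rows exist. A minimal bash/awk sketch, assuming the default table layout shown above; `compute_service_id` is a hypothetical helper that reads the table on stdin and prints the ID of the nova-compute service on a given host:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read the table printed by
# `openstack compute service list` on stdin and print the ID of the
# nova-compute service on the given host (exact host-name match).
compute_service_id() {
  local host=$1
  awk -F'|' -v h="$host" '
    NF > 1 {                             # skip the +----+ border lines
      for (i = 2; i < NF; i++) {         # trim padding around each cell
        sub(/^[ \t]+/, "", $i)
        sub(/[ \t]+$/, "", $i)
      }
      if ($3 == "nova-compute" && $4 == h) print $2
    }
  '
}
```

For the table above, `openstack compute service list | compute_service_id compute2` would print `10`.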

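# Delete the chosen compute node by its ID

openstack compute service delete 10

# On the compute node, stop the related services

systemctl stop libvirtd openstack-nova-compute

2. Clean up network information

# Find the network agent ID

openstack network agent list

# Delete the network agent

openstack network agent delete <agent_id>

# Stop the service on the compute node

systemctl stop neutron-linuxbridge-agent

3. Clean up database records

# Log in to the database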

mysql -unova -pnova

use nova

# Delete the compute service records

select host from nova.services;

delete from nova.services where host="compute2";

# Delete the hypervisor (compute node) records

select hypervisor_hostname from compute_nodes;

delete from compute_nodes where hypervisor_hostname="compute2";

exit;

Finally, check the result:

openstack compute service list

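openstack network agent list

Summary

This part showed how to give instances a dual-NIC setup on the classic network. Although the classic network model is outdated, it remains good learning material for OpenStack newcomers. We also covered how to scale compute nodes out and in. At this point, the minimal OpenStack deployment is essentially complete.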