I. Preface

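When I was using shufflenet-nanodet, I thought about how to deploy its shuffle op. Many development boards do not support 5-D tensors, so the shuffle as implemented in Python cannot be used there, and a different approach is needed. Last year was busy and I never found time to write this up. Now that I have, I am recording it for my own reference and in the hope that it invites better ideas from others. If you find any mistakes, please point them out. Thanks!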

II. Experiment

(1) Idea

The ShuffleNet code is taken from nanodet. I have not checked whether it matches the original ShuffleNet implementation.

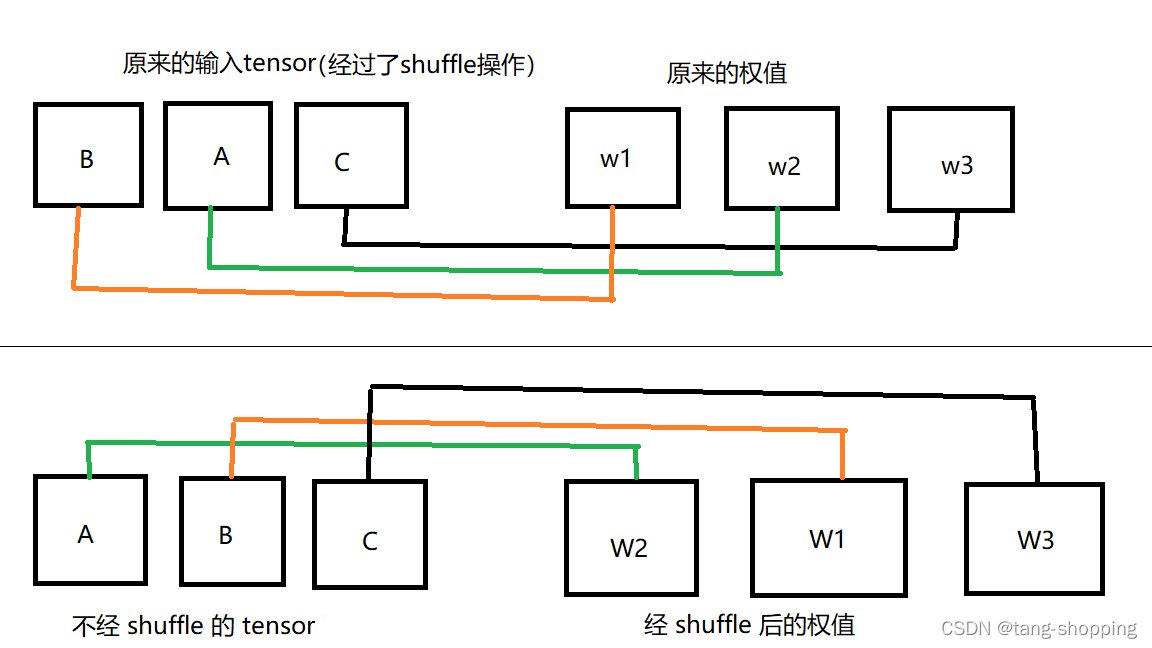

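As mentioned above, the Python shuffle cannot be used on the board, which at first glance may look like a dead end. On closer inspection, however, the shuffle only permutes the channel dimension; all other dimensions are left untouched.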

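The figure above is a rough illustration of shuffle + convolution and is fairly self-explanatory. In Python we apply the shuffle to the tensor. When deploying to the board, hardware limitations lead us to apply it to the weights instead. Because a convolution sums over its input channels, feeding it permuted channels gives the same output as keeping the input in its original order and permuting the weights along their input-channel axis correspondingly. The final result is therefore identical, and one op is eliminated.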
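To make the equivalence concrete, here is a short derivation in my own notation (not taken from the figure). Write $\pi$ for the channel-shuffle permutation, so that shuffled channel $j$ is original channel $\pi(j)$; let $C$ be the number of input channels, $g$ the number of shuffle groups, and $W_{:,j}$ the kernel slice a convolution applies to its input channel $j$:

```latex
\begin{aligned}
y &= \sum_{j=0}^{C-1} W_{:,j} \ast x_{\pi(j)}
   = \sum_{c=0}^{C-1} W_{:,\pi^{-1}(c)} \ast x_{c}
   = \sum_{c=0}^{C-1} W'_{:,c} \ast x_{c},
   \qquad W'_{:,c} = W_{:,\pi^{-1}(c)}, \\
\pi(k\,g + i) &= i\,\frac{C}{g} + k,
   \qquad 0 \le i < g,\quad 0 \le k < \frac{C}{g}.
\end{aligned}
```

The substitution $c = \pi(j)$ only reorders the terms of the sum, which is why the output is unchanged. It also shows that the weights must be permuted with the inverse permutation $\pi^{-1}$, not with $\pi$ itself.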

(2) Experiment

The shuffle op code is as follows:

```python
import torch


def channel_shuffle(x, groups):
    # type: (torch.Tensor, int) -> torch.Tensor
    batchsize, num_channels, height, width = x.data.size()
    channels_per_group = num_channels // groups

    # reshape to a 5-D tensor (N, groups, channels_per_group, H, W);
    # this is the step many boards cannot run
    x = x.view(batchsize, groups, channels_per_group, height, width)
    x = torch.transpose(x, 1, 2).contiguous()

    # flatten back to 4-D (N, C, H, W)
    x = x.view(batchsize, -1, height, width)

    return x
```
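Because the shuffle only moves whole channels, its effect can be written down as a plain index list. The dependency-free sketch below mirrors the view/transpose/flatten steps of `channel_shuffle` on channel indices instead of on a tensor; entry `j` of the result is the original channel that ends up at position `j`. The helper name `shuffle_permutation` is my own and does not come from nanodet.

```python
def shuffle_permutation(num_channels, groups):
    """Return src such that channel_shuffle(x, groups)[:, j] == x[:, src[j]]."""
    assert num_channels % groups == 0, "channels must divide evenly into groups"
    channels_per_group = num_channels // groups
    # view(groups, channels_per_group): row i holds channels i*cpg .. i*cpg + cpg - 1
    grid = [[i * channels_per_group + k for k in range(channels_per_group)]
            for i in range(groups)]
    # transpose(1, 2) then flatten: read the grid column by column
    return [grid[i][k] for k in range(channels_per_group) for i in range(groups)]


print(shuffle_permutation(8, 2))  # [0, 4, 1, 5, 2, 6, 3, 7]
```

With 8 channels and 2 groups, the two halves `0..3` and `4..7` are interleaved, which is exactly what the 5-D reshape achieves.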

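The core of this experiment is the weight shuffle code:

```python
import torch


def weight_shuffle(weights, groups):
    # type: (torch.Tensor, int) -> torch.Tensor
    # weights has shape (out_channels, in_channels, kernel_h, kernel_w);
    # only the input-channel axis (dim 1) is reordered
    out_channels, in_channels, kernel_h, kernel_w = weights.data.size()
    channels_per_group = in_channels // groups

    # reshape
    # Note: I first used the line below for the reshape, but the result was wrong:
    # weights = weights.view(out_channels, groups, channels_per_group, kernel_h, kernel_w)

    # The line below gives the correct result. Compare it carefully with the one above
    # and think it through against the printed log: the weights need the inverse of the
    # input's shuffle permutation, i.e. a shuffle with groups and channels_per_group swapped.
    weights = weights.view(out_channels, channels_per_group, groups, kernel_h, kernel_w)
    weights = torch.transpose(weights, 1, 2).contiguous()

    # flatten back to (out_channels, in_channels, kernel_h, kernel_w)
    weights = weights.view(out_channels, -1, kernel_h, kernel_w)

    return weights
```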
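The reason the first reshape fails can be checked on indices alone. `weight_shuffle` must apply the inverse of the channel-shuffle permutation to dim 1 of the weights. Reshaping to `(out_channels, channels_per_group, groups, ...)` is the same view/transpose/flatten as `channel_shuffle`, only with the roles of `groups` and `channels_per_group` swapped, and that swap yields exactly the inverse; the commented-out reshape instead applies the forward permutation a second time. The dependency-free check below uses hypothetical helper names. Note that the two permutations coincide when `groups == channels_per_group` (e.g. 4 channels in 2 groups), so a test with such a shape would not catch the bug.

```python
def shuffle_permutation(num_channels, groups):
    """Return src such that channel_shuffle(x, groups)[:, j] == x[:, src[j]]."""
    cpg = num_channels // groups
    return [i * cpg + k for k in range(cpg) for i in range(groups)]


def inverse(perm):
    """Return inv with inv[perm[j]] == j."""
    inv = [0] * len(perm)
    for j, src in enumerate(perm):
        inv[src] = j
    return inv


C, g = 8, 2
forward = shuffle_permutation(C, g)       # shuffle applied to the input tensor
correct = shuffle_permutation(C, C // g)  # view(out, cpg, groups, ...) + transpose on weights
wrong = shuffle_permutation(C, g)         # view(out, groups, cpg, ...) + transpose on weights

print(correct == inverse(forward))  # True
print(wrong == inverse(forward))    # False
print(shuffle_permutation(4, 2) == inverse(shuffle_permutation(4, 2)))  # True: groups == cpg
```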

The test code is as follows:

```python
import torch
import torch.nn as nn

# channel_shuffle and weight_shuffle are the functions defined above


def test():
    in_channels = 8
    out_channels = 2
    inputs = torch.rand(1, in_channels, 4, 4)
    weights = torch.rand(out_channels, in_channels, 3, 3)

    # path 1: shuffle the input tensor, then convolve with the original weights
    inputs_shuffle = channel_shuffle(inputs, groups=2)

    groups = 1  # ordinary (non-grouped) convolution
    conv_inputs_shuffle = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=1,
                                    padding=0, groups=groups, bias=False)
    conv_inputs_shuffle.weight.data = weights

    # path 2: leave the input as-is and convolve with the shuffled weights
    conv_weight_shuffle = nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=1,
                                    padding=0, groups=groups, bias=False)
    conv_weight_shuffle.weight.data = weight_shuffle(weights.clone(), 2)

    with torch.no_grad():
        b = conv_inputs_shuffle(inputs_shuffle)
        print("inputs_shuffle output = \n", b)
        print("====================")
        c = conv_weight_shuffle(inputs)
        print("weight_shuffle output = \n", c)
        print("outputs match:", torch.allclose(b, c))


if __name__ == "__main__":
    test()
```

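Run the code and compare the two printed results to check that they match. I won't write the model conversion code or the rest here: after changing jobs I lost my old environment and have no way to write it now. Roughly speaking, you just add the weight_shuffle function to your conversion code; I'll leave the details for you to work out.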
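For the conversion step, the layer that directly consumes a shuffled tensor only needs its weight reordered along the input-channel axis; its output channels and its bias stay as they are. The dependency-free sketch below illustrates this on a 1x1 convolution, which at each pixel is a matrix-vector product plus bias. It is my own illustration under stated assumptions, not the author's conversion code: `fold_shuffle` and `conv1x1` are hypothetical names, the nested lists stand in for real weight tensors, and the convolution is assumed to use `groups=1` and to read the shuffled tensor directly, as in the experiment above.

```python
import random


def shuffle_permutation(num_channels, groups):
    """Return src such that channel_shuffle(x, groups)[:, j] == x[:, src[j]]."""
    cpg = num_channels // groups
    return [i * cpg + k for k in range(cpg) for i in range(groups)]


def fold_shuffle(weight, groups):
    """Reorder each output row of `weight` along the input-channel axis so that
    conv(x, folded) == conv(channel_shuffle(x, groups), weight)."""
    in_channels = len(weight[0])
    # same as weight_shuffle's reshape: a shuffle with channels_per_group groups
    src = shuffle_permutation(in_channels, in_channels // groups)
    return [[row[s] for s in src] for row in weight]


def conv1x1(pixel, weight, bias):
    """One output pixel of a 1x1 conv: weight[o] . pixel + bias[o]."""
    return [sum(w * v for w, v in zip(row, pixel)) + b for row, b in zip(weight, bias)]


random.seed(0)
in_channels, out_channels, groups = 8, 2, 2
pixel = [random.random() for _ in range(in_channels)]
weight = [[random.random() for _ in range(in_channels)] for _ in range(out_channels)]
bias = [random.random() for _ in range(out_channels)]

shuffled_pixel = [pixel[s] for s in shuffle_permutation(in_channels, groups)]
ref = conv1x1(shuffled_pixel, weight, bias)                  # shuffle op + conv
folded = conv1x1(pixel, fold_shuffle(weight, groups), bias)  # conv only, bias unchanged
print(all(abs(a - b) < 1e-9 for a, b in zip(ref, folded)))   # True
```

In a real conversion script the same reordering would be done with `weight_shuffle` on the actual weight tensor of each convolution that reads a shuffled tensor directly; which layers those are depends on the model graph.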

III. Afterword

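By transforming the weights, we achieved the same effect as transforming the tensor. So in future work, when you have been stuck on one approach for a long time, it is worth asking whether another route can sidestep the current difficulty and reach the same result!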