Setting Up a ClickHouse Cluster with Docker

Environment

Each host: 2 CPU cores, 2 GB RAM, 30 GB disk

| hostname | IP | OS | Services |
|---|---|---|---|
| localhost | 192.168.88.171 | CentOS 7.8 | clickhouse-server, zookeeper, kafka |
| localhost | 192.168.88.172 | CentOS 7.8 | clickhouse-server, zookeeper, kafka |
| localhost | 192.168.88.173 | CentOS 7.8 | clickhouse-server, zookeeper, kafka |

1. Install ZooKeeper

# Pull the ZooKeeper and Kafka images on all three hosts

docker pull wurstmeister/zookeeper

docker pull wurstmeister/kafka

# After pulling, list the local images

docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

wurstmeister/kafka latest 161b1a19ddd6 13 hours ago 618MB

wurstmeister/zookeeper latest 3f43f72cb283 3 years ago 510MB

# Start ZooKeeper on the first host (192.168.88.171)

docker run --restart=always \
  --name zk01 -d \
  -p 2181:2181 -p 2888:2888 -p 3888:3888 \
  wurstmeister/zookeeper

# Enter the container (it is named zk01 on this host)

docker exec -it zk01 bash

pwd

/opt/zookeeper-3.4.13

# Edit conf/zoo.cfg and append the following lines at the end

vi conf/zoo.cfg

server.1=0.0.0.0:2888:3888

server.2=192.168.88.172:2888:3888

server.3=192.168.88.173:2888:3888

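# Start ZooKeeper the same way on the other two hosts, then append their entries as shown below

# On 192.168.88.172
docker run --restart=always --name zk02 -d -p 2181:2181 -p 2888:2888 -p 3888:3888 wurstmeister/zookeeper

# On 192.168.88.173
docker run --restart=always --name zk03 -d -p 2181:2181 -p 2888:2888 -p 3888:3888 wurstmeister/zookeeper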

# zoo.cfg entries on the second host (192.168.88.172)

server.1=192.168.88.171:2888:3888

server.2=0.0.0.0:2888:3888

server.3=192.168.88.173:2888:3888

# zoo.cfg entries on the third host (192.168.88.173)

server.1=192.168.88.171:2888:3888

server.2=192.168.88.172:2888:3888

server.3=0.0.0.0:2888:3888

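# On each host, replace the IP of the local node's own entry with 0.0.0.0
# The first host is server.1, so its server.1 entry uses 0.0.0.0
# Then write the node's ID into data/myid (run from /opt/zookeeper-3.4.13). On the first host the value is 1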

# First host
echo 1 > data/myid

# Second host
echo 2 > data/myid

# Third host
echo 3 > data/myid
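The per-host differences above follow one rule: every host lists all three servers, and only its own entry uses 0.0.0.0. Below is a minimal bash sketch of that rule. It assumes the three IPs from the environment table. The function name `zk_server_lines` is hypothetical and is not part of ZooKeeper or the image.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the server.N entries for zoo.cfg as seen from
# node $1, replacing the local node's address with 0.0.0.0.
ZK_HOSTS=(192.168.88.171 192.168.88.172 192.168.88.173)

zk_server_lines() {
  local my_id=$1 i id addr
  for i in "${!ZK_HOSTS[@]}"; do
    id=$((i + 1))              # server IDs start at 1, array indices at 0
    addr=${ZK_HOSTS[$i]}
    if [ "$id" -eq "$my_id" ]; then
      addr=0.0.0.0             # the local node binds on all interfaces
    fi
    echo "server.${id}=${addr}:2888:3888"
  done
}

# Example: the entries for the second host (its myid is 2).
zk_server_lines 2
```

Inside a container (from /opt/zookeeper-3.4.13), you would append the output of `zk_server_lines 2` to conf/zoo.cfg and run `echo 2 > data/myid`. That reproduces the second host's manual edits. Generating the lines also avoids putting 0.0.0.0 on the wrong entry.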

# Configuration is complete. Exit the container and restart it from the host (zk01, zk02 or zk03, depending on the host)

docker restart zk01

# Next, verify the ensemble (run inside each ZooKeeper container)

./bin/zkServer.sh status

# The output shows each node's role in the ensemble

# server.1

Mode: follower

# server.2

Mode: follower

# server.3

Mode: leader
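The check above boils down to one expectation: exactly one node reports `Mode: leader` and the others report `Mode: follower`. Below is a small bash sketch that encodes it. `check_zk_modes` is a hypothetical name. The mode values are assumed to be collected by hand, or by filtering the `Mode:` line of `./bin/zkServer.sh status` in each container.

```shell
#!/usr/bin/env bash
# Hypothetical helper: given the Mode values reported by each node, succeed
# only if there is exactly one leader and every other node is a follower.
check_zk_modes() {
  local leaders=0 mode
  for mode in "$@"; do
    case $mode in
      leader) leaders=$((leaders + 1)) ;;
      follower) ;;
      *) echo "unexpected mode: $mode" >&2; return 1 ;;   # e.g. standalone
    esac
  done
  [ "$leaders" -eq 1 ]
}

# Example using the modes shown above.
if check_zk_modes follower follower leader; then
  echo "ensemble healthy"
fi
```

A node reporting `standalone` usually means its server.N lines or myid were not picked up. The helper treats that as a failure.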

2. Set Up Kafka

# Run the Kafka image pulled earlier, one broker per host

# Broker 1 (on 192.168.88.171)
docker run -it --restart=always \
  --name kafka01 -p 9092:9092 -e KAFKA_BROKER_ID=0 \
  -e KAFKA_ZOOKEEPER_CONNECT=192.168.88.171:2181,192.168.88.172:2181,192.168.88.173:2181 \
  -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://192.168.88.171:9092 \
  -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 \
  -d wurstmeister/kafka:latest

# Broker 2 (on 192.168.88.172)
docker run -it --restart=always \
  --name kafka02 -p 9092:9092 -e KAFKA_BROKER_ID=1 \
  -e KAFKA_ZOOKEEPER_CONNECT=192.168.88.171:2181,192.168.88.172:2181,192.168.88.173:2181 \
  -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://192.168.88.172:9092 \
  -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 \
  -d wurstmeister/kafka:latest

# Broker 3 (on 192.168.88.173)
docker run -it --restart=always \
  --name kafka03 -p 9092:9092 -e KAFKA_BROKER_ID=2 \
  -e KAFKA_ZOOKEEPER_CONNECT=192.168.88.171:2181,192.168.88.172:2181,192.168.88.173:2181 \
  -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://192.168.88.173:9092 \
  -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 \
  -d wurstmeister/kafka:latest
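The three commands differ only in the container name, `KAFKA_BROKER_ID` and the advertised address. A hypothetical bash helper, `kafka_run_cmd` (not part of the image), prints the command for broker N and makes that explicit. It reuses the IPs and image from above. As an assumption, it drops `-it`, which a detached container does not need.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the docker run command for Kafka
# broker N, where N is the 0-based broker ID used above.
KAFKA_HOSTS=(192.168.88.171 192.168.88.172 192.168.88.173)
ZK_CONNECT=192.168.88.171:2181,192.168.88.172:2181,192.168.88.173:2181

kafka_run_cmd() {
  local id=$1
  local ip=${KAFKA_HOSTS[$id]}
  # Container names are 1-based (kafka01..kafka03); broker IDs are 0-based.
  printf 'docker run -d --restart=always --name kafka%02d -p 9092:9092 ' "$((id + 1))"
  printf -- '-e KAFKA_BROKER_ID=%d -e KAFKA_ZOOKEEPER_CONNECT=%s ' "$id" "$ZK_CONNECT"
  printf -- '-e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://%s:9092 ' "$ip"
  printf -- '-e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 wurstmeister/kafka:latest\n'
}

for id in 0 1 2; do
  kafka_run_cmd "$id"
done
```

Printing instead of executing keeps the helper safe to run anywhere. Copy the line for index 0, 1 or 2 to the matching host.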

# Check the broker IDs registered in ZooKeeper

docker exec -it zk01 bash

./bin/zkCli.sh

ls /brokers/ids

[2, 1, 0]

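# Create a topic

# Enter the Kafka container
docker exec -it kafka01 bash
cd /opt/kafka/

# Kafka 3.0 and later removed --zookeeper from kafka-topics.sh; there, use --bootstrap-server 192.168.88.171:9092 instead
./bin/kafka-topics.sh --create --zookeeper 192.168.88.171:2181 --topic test --partitions 1 --replication-factor 1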

Created topic test.

# Check the new topic test from the other two hosts

# List topics

# 192.168.88.172

./bin/kafka-topics.sh --list --zookeeper 192.168.88.172:2181

test

# 192.168.88.173

./bin/kafka-topics.sh --list --zookeeper 192.168.88.173:2181

test

# Produce messages (press Ctrl+D to stop)

# 192.168.88.171

./bin/kafka-console-producer.sh --broker-list 192.168.88.171:9092 --topic test

>hello

# Consume messages (press Ctrl+C to stop)

# 192.168.88.173

./bin/kafka-console-consumer.sh --bootstrap-server 192.168.88.173:9092 --topic test --from-beginning

hello

3. Set Up the ClickHouse Cluster

3.1 Modify the ClickHouse configuration files

Create a local directory for persistent data

# Create it on each of the three hosts; the path can be changed

mkdir -p /data/clickhouse

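# Obtain the default clickhouse-server configuration

# Start a temporary ClickHouse container on one of the hosts (it is only used to copy the config)

docker run -d --name clickhouse-server --ulimit nofile=262144:262144 yandex/clickhouse-server:latest

# Copy the configuration from the container to /data/clickhouse/, then remove the temporary container

docker cp clickhouse-server:/etc/clickhouse-server/ /data/clickhouse/
docker rm -f clickhouse-server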

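The files to modify are:

- config.xml

- users.xml (optional: only needed if you want to configure accounts, such as a password)

Edit them on this host, then copy /data/clickhouse/clickhouse-server/ to the same path on the other two hosts (for example with scp -r). Every ClickHouse container started below mounts that directory.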

vim /data/clickhouse/clickhouse-server/config.xml

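# Enable listen_host (uncomment it). It sets the address the server listens on: 0.0.0.0 accepts IPv4 connections on all interfaces. To accept both IPv4 and IPv6, specify :: instead (only if IPv6 is available)

<listen_host>0.0.0.0</listen_host>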

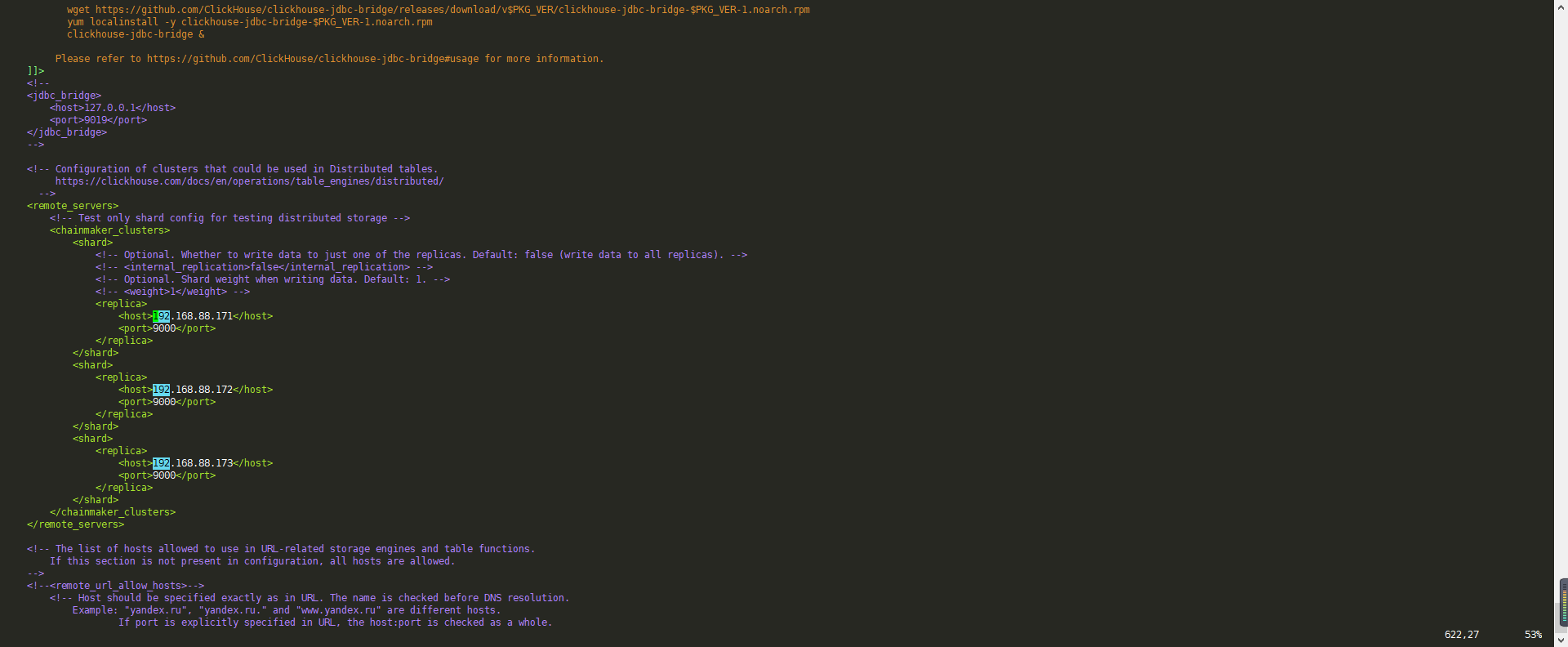

# Define the cluster in the remote_servers section

<remote_servers>
    <!-- Test only shard config for testing distributed storage -->
    <chainmaker_clusters>
        <shard>
            <!-- Optional. Whether to write data to just one of the replicas. Default: false (write data to all replicas). -->
            <!-- <internal_replication>false</internal_replication> -->
            <!-- Optional. Shard weight when writing data. Default: 1. -->
            <!-- <weight>1</weight> -->
            <replica>
                <host>192.168.88.171</host>
                <port>9000</port>
            </replica>
        </shard>
        <shard>
            <replica>
                <host>192.168.88.172</host>
                <port>9000</port>
            </replica>
        </shard>
        <shard>
            <replica>
                <host>192.168.88.173</host>
                <port>9000</port>
            </replica>
        </shard>
    </chainmaker_clusters>
</remote_servers>

chainmaker_clusters is the cluster name and can be anything you choose. It is referenced when creating a distributed table (Distributed engine).
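Every shard here has a single replica on port 9000, so the fragment is regular enough to generate. Below is a bash sketch that assumes one shard per host. `remote_servers_xml` is a hypothetical helper. Its output (without the optional comments) would replace the `<remote_servers>` section of config.xml.

```shell
#!/usr/bin/env bash
# Hypothetical helper: emit a <remote_servers> fragment for cluster $1 with
# one single-replica shard per remaining argument (host), all on port 9000.
remote_servers_xml() {
  local cluster=$1 host
  shift
  echo "<remote_servers>"
  echo "    <${cluster}>"
  for host in "$@"; do
    echo "        <shard>"
    echo "            <replica>"
    echo "                <host>${host}</host>"
    echo "                <port>9000</port>"
    echo "            </replica>"
    echo "        </shard>"
  done
  echo "    </${cluster}>"
  echo "</remote_servers>"
}

remote_servers_xml chainmaker_clusters 192.168.88.171 192.168.88.172 192.168.88.173
```

To add a fourth node, you would pass one more host argument and reuse the same file on every server.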

# Configure the ZooKeeper ensemble

<zookeeper>
    <node>
        <host>192.168.88.171</host>
        <port>2181</port>
    </node>
    <node>
        <host>192.168.88.172</host>
        <port>2181</port>
    </node>
    <node>
        <host>192.168.88.173</host>
        <port>2181</port>
    </node>
</zookeeper>

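# Add the server time zone on the second-to-last line of config.xml, just before the closing root tag (</yandex>, or </clickhouse> in newer releases)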

<timezone>Asia/Shanghai</timezone>

# To set a password, edit users.xml

# Find the <password> tag of the user (for example default) and set its value

<password>your_password</password>
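Instead of a plaintext `<password>`, users.xml also accepts a SHA-256 digest in `<password_sha256_hex>`. Below is a minimal bash sketch that produces it with coreutils `sha256sum`. The function name is hypothetical. Use only one of the two tags per user, and keep passing the plaintext password to `clickhouse-client --password`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SHA-256 hex digest of a password, suitable
# for <password_sha256_hex>...</password_sha256_hex> in users.xml.
password_sha256_hex() {
  # printf '%s' avoids the trailing newline echo would add to the hashed input;
  # cut keeps only the digest from sha256sum's "digest  -" output.
  printf '%s' "$1" | sha256sum | cut -d' ' -f1
}

password_sha256_hex 'abc'
```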

3.2 Start ClickHouse

# clickhouse-server01 (on 192.168.88.171)

docker run --restart always -d \
  --name clickhouse-server01 \
  --ulimit nofile=262144:262144 \
  -v /data/clickhouse/data/:/var/lib/clickhouse/ \
  -v /data/clickhouse/clickhouse-server/:/etc/clickhouse-server/ \
  -v /data/clickhouse/logs/:/var/log/clickhouse-server/ \
  -p 9000:9000 -p 8123:8123 -p 9009:9009 \
  yandex/clickhouse-server:latest

# clickhouse-server02 (on 192.168.88.172)

docker run --restart always -d \
  --name clickhouse-server02 \
  --ulimit nofile=262144:262144 \
  -v /data/clickhouse/data/:/var/lib/clickhouse/ \
  -v /data/clickhouse/clickhouse-server/:/etc/clickhouse-server/ \
  -v /data/clickhouse/logs/:/var/log/clickhouse-server/ \
  -p 9000:9000 -p 8123:8123 -p 9009:9009 \
  yandex/clickhouse-server:latest

# clickhouse-server03 (on 192.168.88.173)

docker run --restart always -d \
  --name clickhouse-server03 \
  --ulimit nofile=262144:262144 \
  -v /data/clickhouse/data/:/var/lib/clickhouse/ \
  -v /data/clickhouse/clickhouse-server/:/etc/clickhouse-server/ \
  -v /data/clickhouse/logs/:/var/log/clickhouse-server/ \
  -p 9000:9000 -p 8123:8123 -p 9009:9009 \
  yandex/clickhouse-server:latest

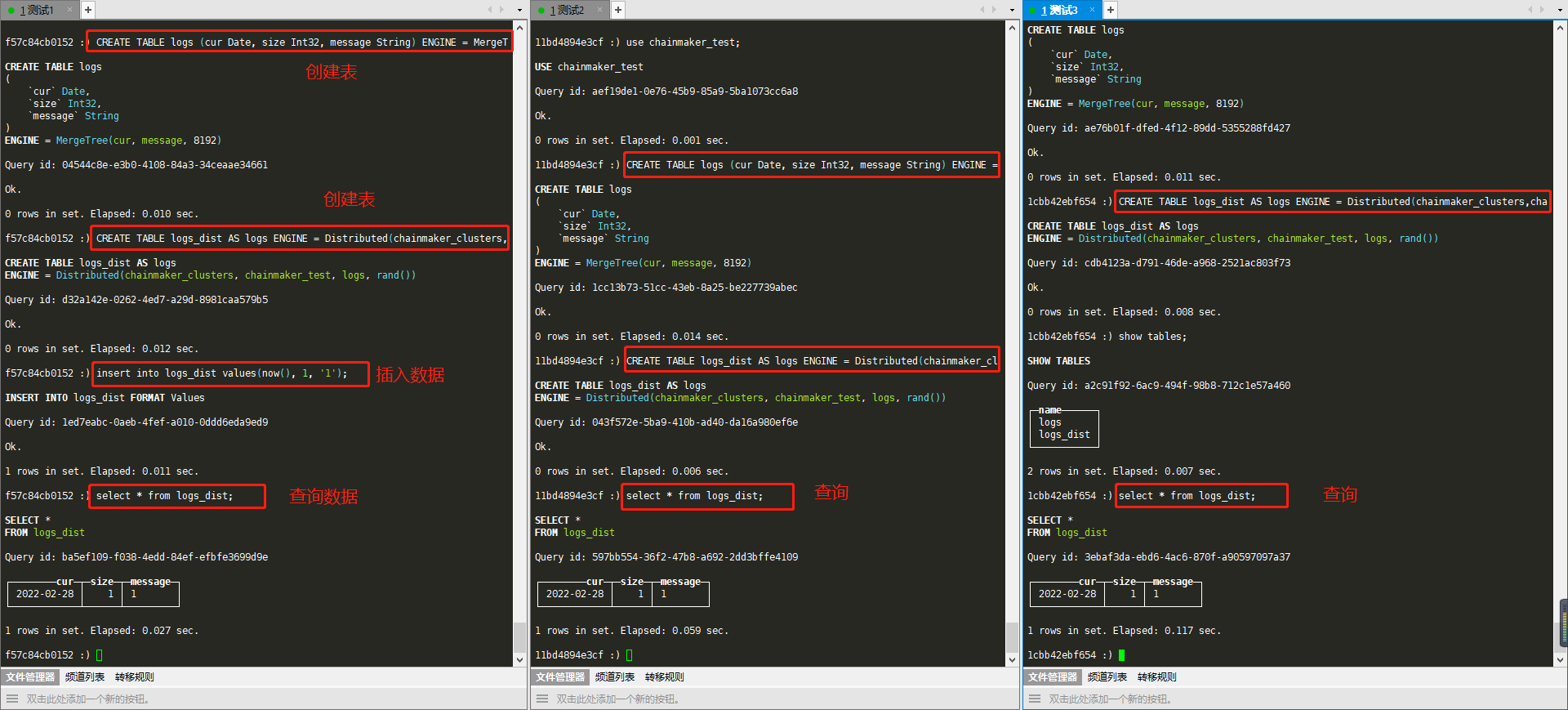
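Containers can take a few seconds to accept connections after `docker run`. Below is a generic retry loop in bash, offered as a sketch. `wait_for` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command up to N times, one second apart,
# and succeed as soon as the command succeeds.
wait_for() {
  local tries=$1 i
  shift
  for ((i = 1; i <= tries; i++)); do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    if [ "$i" -lt "$tries" ]; then
      sleep 1                  # no pause after the final attempt
    fi
  done
  return 1
}

# Trivial self-check; a real ClickHouse probe is described below.
wait_for 3 true && echo "ready"
```

For the containers above, `wait_for 30 curl -sf http://192.168.88.171:8123/ping` should succeed once the server is up. ClickHouse answers `/ping` on its HTTP port (8123) with `Ok.`. Repeat for .172 and .173 before moving on to the tests.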

3.3 Test the cluster

# Enter clickhouse-server01 to create the database and tables

docker exec -it clickhouse-server01 bash

clickhouse-client

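# To log in with a password:

# clickhouse-client -h 192.168.88.171 --port 9000 --password your_password

# The default user is default; its password is empty unless you set one in users.xml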

# View the cluster definition

select * from system.clusters;

SELECT *
FROM system.clusters

Query id: cdd6249a-1ba4-46c6-98bc-0041f55464c0

┌─cluster─────────────┬─shard_num─┬─shard_weight─┬─replica_num─┬─host_name──────┬─host_address───┬─port─┬─is_local─┬─user────┬─default_database─┬─errors_count─┬─slowdowns_count─┬─estimated_recovery_time─┐
│ chainmaker_clusters │ 1 │ 1 │ 1 │ 192.168.88.171 │ 192.168.88.171 │ 9000 │ 0 │ default │ │ 0 │ 0 │ 0 │
│ chainmaker_clusters │ 2 │ 1 │ 1 │ 192.168.88.172 │ 192.168.88.172 │ 9000 │ 0 │ default │ │ 0 │ 0 │ 0 │
│ chainmaker_clusters │ 3 │ 1 │ 1 │ 192.168.88.173 │ 192.168.88.173 │ 9000 │ 0 │ default │ │ 0 │ 0 │ 0 │
└─────────────────────┴───────────┴──────────────┴─────────────┴────────────────┴────────────────┴──────┴──────────┴─────────┴──────────────────┴──────────────┴─────────────────┴─────────────────────────┘

3 rows in set. Elapsed: 0.007 sec.
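To check the cluster definition from a script rather than by eye, you could ask clickhouse-client for just the relevant columns in TSV format and count the distinct shards. This is a sketch: `count_shards` is hypothetical, and the sample input stands in for real client output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "cluster<TAB>shard_num" rows on stdin and print
# how many distinct shards are defined for the cluster named in $1.
count_shards() {
  awk -F'\t' -v c="$1" '$1 == c { seen[$2] = 1 }
    END { n = 0; for (s in seen) n++; print n }'
}

# Sample input in the shape produced by, for example:
#   clickhouse-client --query "SELECT cluster, shard_num FROM system.clusters" --format TSV
printf 'chainmaker_clusters\t1\nchainmaker_clusters\t2\nchainmaker_clusters\t3\n' \
  | count_shards chainmaker_clusters
```

For the three-host layout above, anything other than 3 suggests that a host's config.xml differs from the others.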

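# Create the database and tables on each of the three hosts

create database chainmaker_test;

use chainmaker_test;

# MergeTree(cur, message, 8192) is the deprecated engine syntax, which newer ClickHouse releases may reject. This is the equivalent modern form: monthly partitions on cur, sorted by message, index granularity 8192

CREATE TABLE logs (cur Date, size Int32, message String) ENGINE = MergeTree() PARTITION BY toYYYYMM(cur) ORDER BY message SETTINGS index_granularity = 8192;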

CREATE TABLE logs_dist AS logs ENGINE = Distributed(chainmaker_clusters,chainmaker_test,logs,rand());
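This is how `logs_dist` chooses a shard for each inserted row, according to the ClickHouse documentation for the Distributed engine. The sharding expression (here `rand()`) is divided by the total weight of all shards. The row goes to the shard whose half-open interval of remainders contains the result. The bash function below models that rule for illustration only: `pick_shard` is hypothetical and is not how ClickHouse is invoked. Keys are assumed to be non-negative, since `rand()` returns UInt32.

```shell
#!/usr/bin/env bash
# Model of the Distributed engine's shard-selection rule (illustration only):
# remainder = key mod (sum of weights); shard i owns the remainders in
# [sum of weights before i, that sum + weight_i). Prints the 1-based shard.
# Weights must be positive integers.
pick_shard() {
  local key=$1 total=0 w
  shift
  for w in "$@"; do total=$((total + w)); done
  local rem=$((key % total)) prev=0 shard=1
  for w in "$@"; do
    if [ "$rem" -lt $((prev + w)) ]; then
      echo "$shard"
      return 0
    fi
    prev=$((prev + w))
    shard=$((shard + 1))
  done
}

# Three shards of weight 1, as in chainmaker_clusters.
for key in 0 1 2 3 4 5; do
  echo "key=$key -> shard $(pick_shard "$key" 1 1 1)"
done
```

With the three weight-1 shards of `chainmaker_clusters`, the remainder is simply the key modulo 3, so `rand()` spreads rows roughly evenly. With weights 9 and 10 (the example used in the documentation), remainders 0 to 8 go to shard 1 and remainders 9 to 18 go to shard 2.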

# Run the following on any one ClickHouse host

insert into logs_dist values(now(), 1, '1');

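# Query the distributed table on any ClickHouse host. If every host returns the same data, the cluster is working. The row itself is stored on only one shard (chosen by rand()), and logs_dist reads from all shards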

select * from logs_dist;

SELECT *
FROM logs_dist

Query id: ba5ef109-f038-4edd-84ef-efbfe3699d9e

┌────────cur─┬─size─┬─message─┐
│ 2022-02-28 │ 1 │ 1 │
└────────────┴──────┴─────────┘

1 rows in set. Elapsed: 0.027 sec.