文章目录

- 第1关 K8s一窥真容

- 第2关 部署安装包及系统环境准备

- 第3关 二进制高可用安装k8s生产级集群

- 第4关 K8s最得意的小弟Docker

- 第5关 K8s攻克作战攻略之一

- 第5关 K8s攻克作战攻略之二-Deployment

- 第5关 K8s攻克作战攻略之三-服务pod的健康检测

- 第5关 k8s架构师课程攻克作战攻略之四-Service

- 第5关 k8s架构师课程攻克作战攻略之五 - labels

- 第6关 k8s架构师课程之流量入口Ingress上部

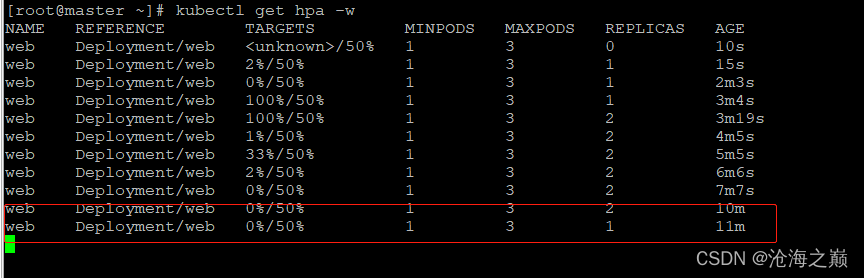

- 第7关 k8s架构师课程之HPA 自动水平伸缩pod

- 第8关 k8s架构师课程之持久化存储第一节

- 第8关 k8s架构师课程之持久化存储第二节PV和PVC

- 第8关 k8s架构师课程之持久化存储StorageClass

- 第9关 k8s架构师课程之有状态服务StatefulSet

- 第10关 k8s架构师课程之一次性和定时任务

- 第11关 k8s架构师课程之RBAC角色访问控制

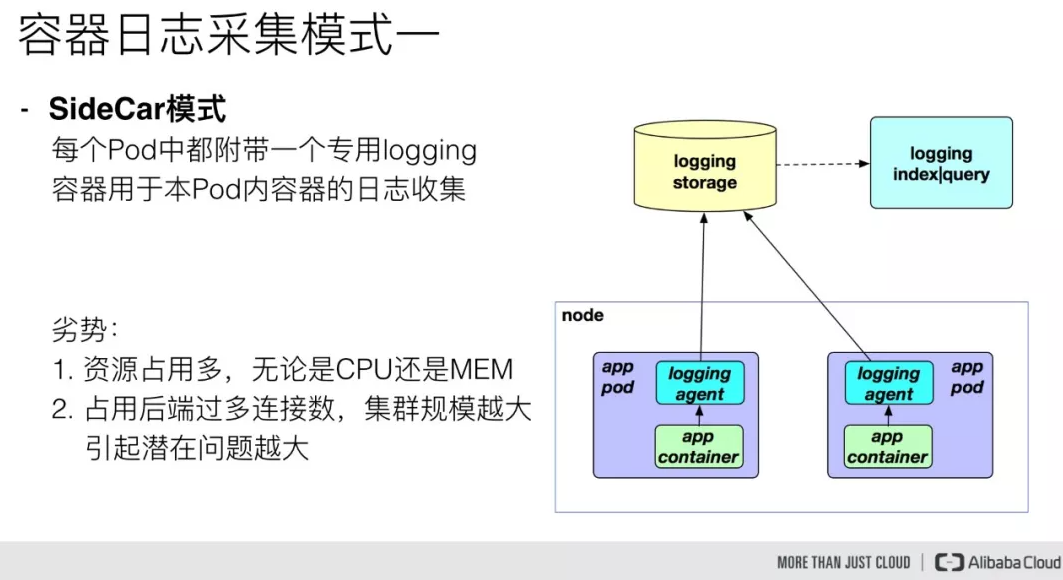

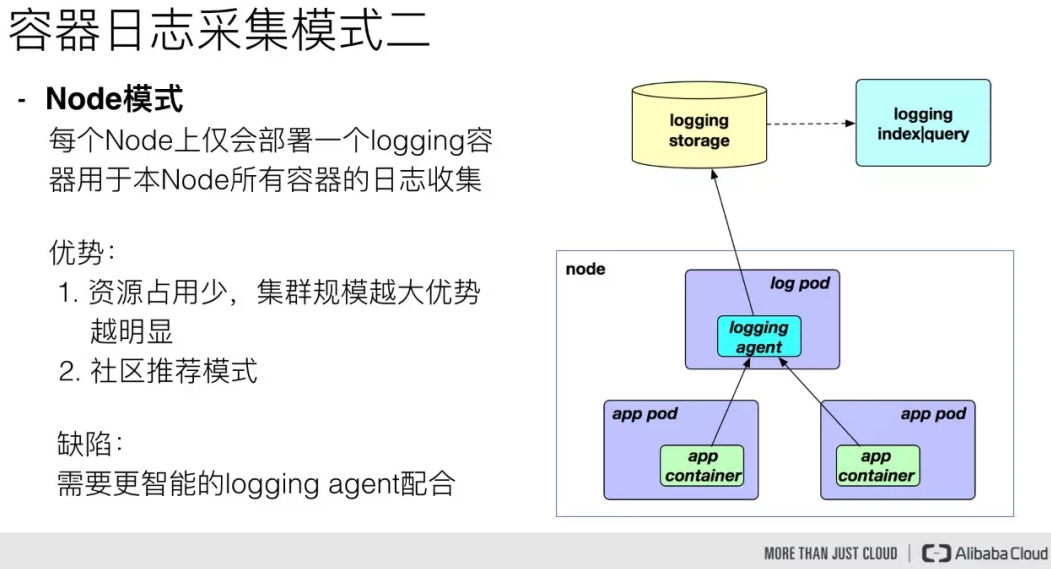

- 第12关 k8s架构师课程之业务日志收集上节介绍、下节实战

- 第13关 k8s架构师课程之私有镜像仓库-Harbor

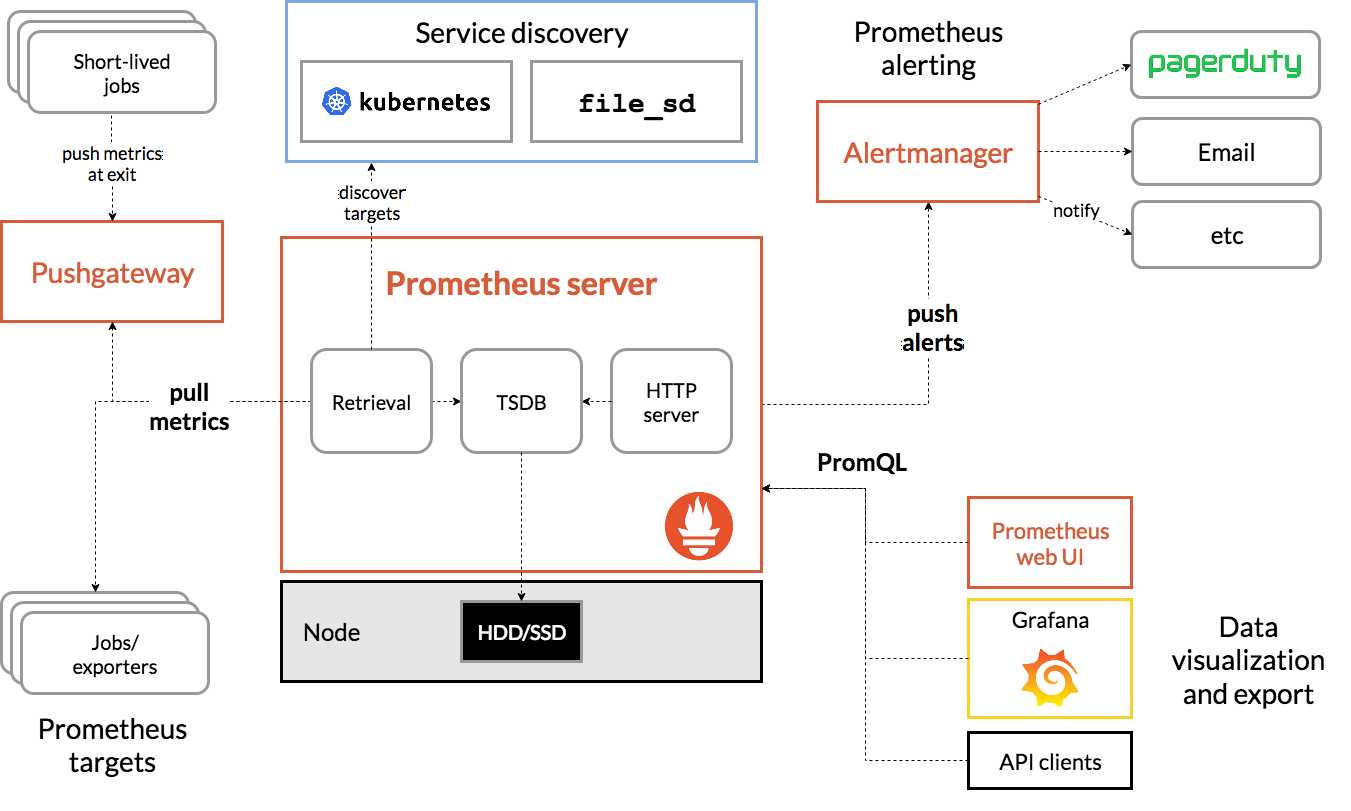

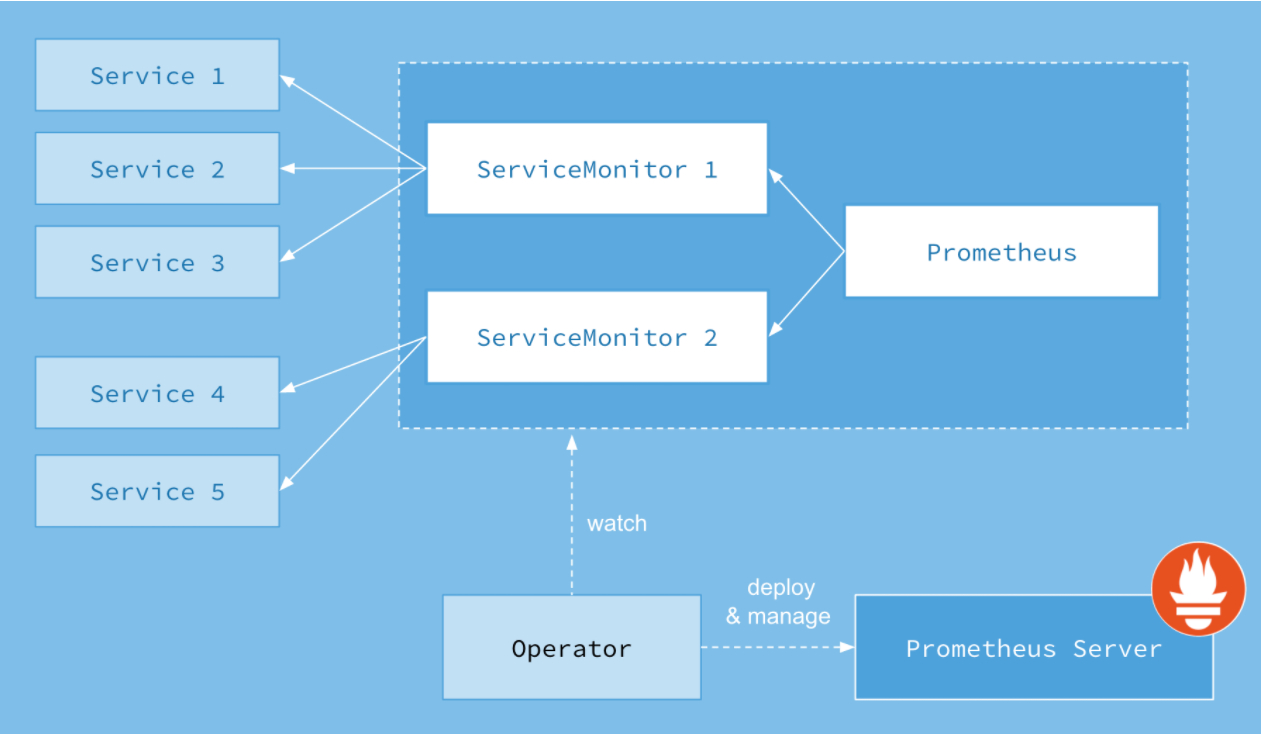

- 第14关k8s架构师课程之业务Prometheus监控实战一

- 第14关k8s架构师课程之业务Prometheus监控实战二

- 第14关k8s架构师课程之业务Prometheus监控实战三

- 第14关k8s架构师课程之业务Prometheus监控实战四

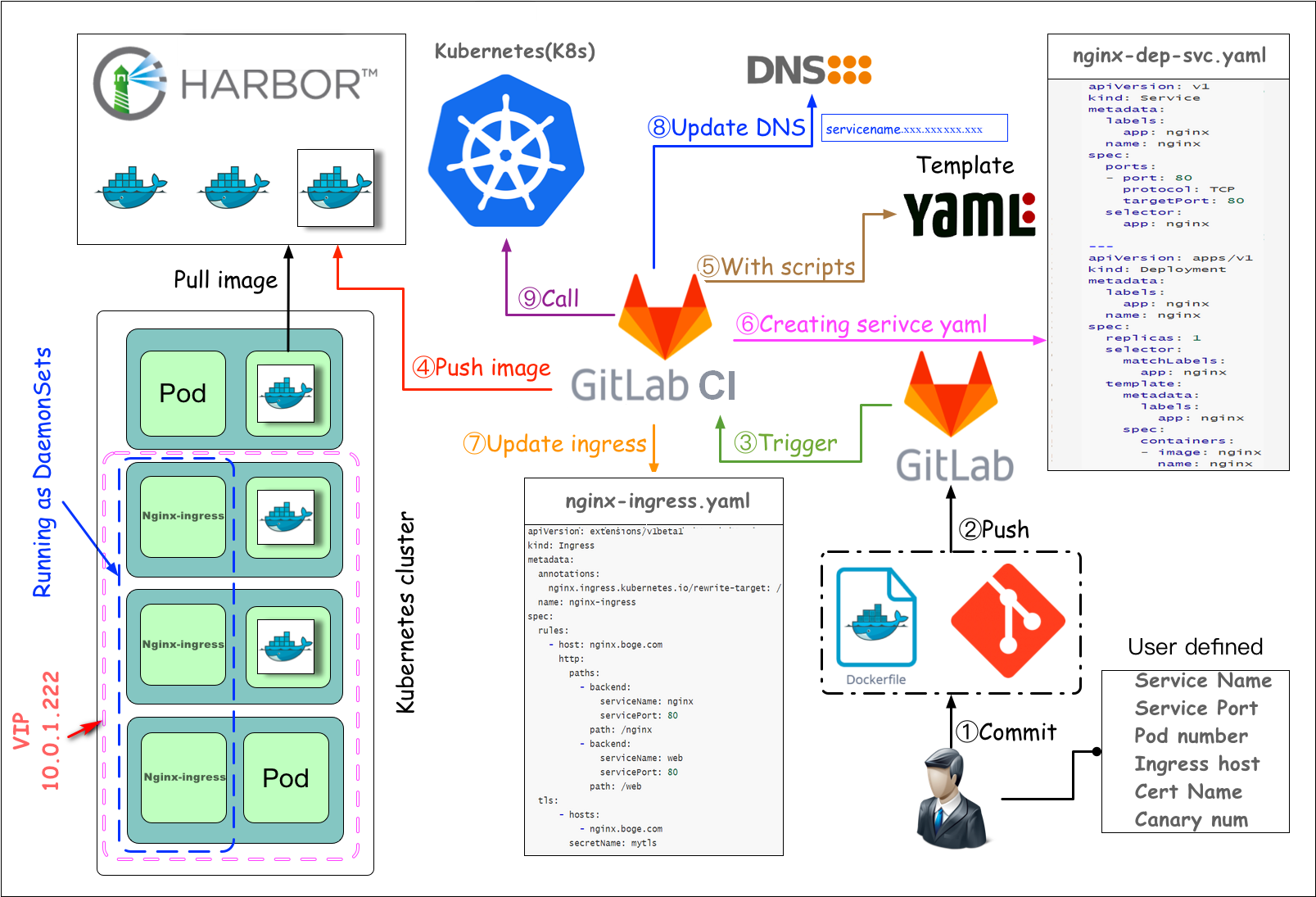

- 第15关 k8s架构师课程基于gitlab的CICD自动化二

- 第15关 k8s架构师课程基于gitlab的CICD自动化三

- 第15关k8s架构师课程之基于gitlab的CICD自动化四

- 第15关k8s架构师课程之基于gitlab的CICD自动化五

- 第15关k8s架构师课程之基于gitlab的CICD自动化六

- 第15关 k8s架构师课程之CICD自动化devops大结局

- 快速生成k8s的yaml配置的4种方法

- 关于K8S服务健康检测方式补充说明

Video tutorial download:

Link: https://pan.baidu.com/s/1rAMDFPwda4Pl3wO2DsGh1w

Extraction code: txpy

Level 1: A First Look at K8s

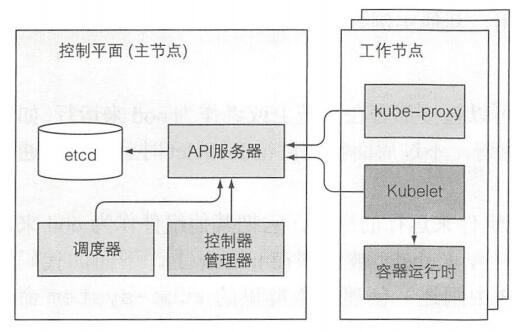

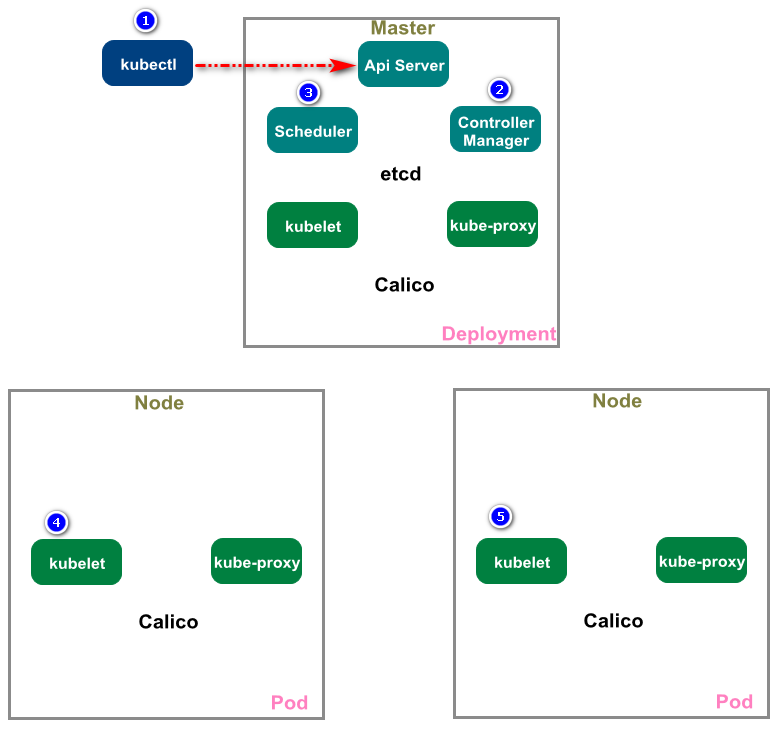

First, a simplified K8s architecture diagram

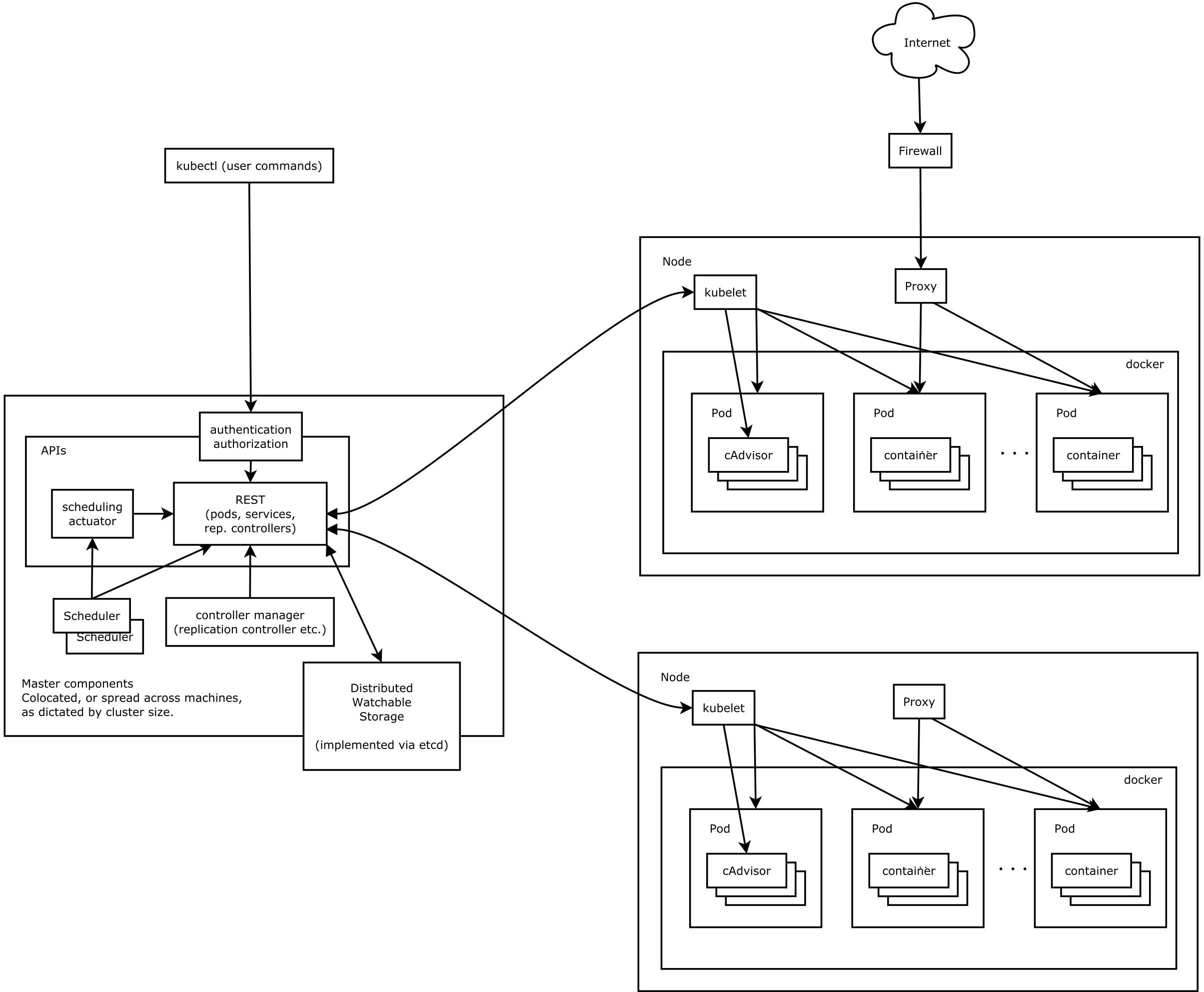

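Next, a detailed K8s architecture diagram

As the diagrams above show, a K8s cluster consists of two main parts:

- The K8s control plane

- (Worker) nodes

Let's look at what each of these parts does and what runs inside it.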

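Control plane components

The control plane controls the K8s cluster and keeps it running properly. To recap, the control plane contains the following components:

- etcd distributed persistent storage – etcd stores the state of the entire K8s cluster;

- API server – the apiserver is the single entry point for resource operations and provides authentication, authorization, access control, API registration and discovery;

- Scheduler – the scheduler handles resource scheduling, placing Pods on suitable machines according to the configured scheduling policies;

- Controller manager – the controller manager maintains the cluster state, e.g. failure detection, auto scaling and rolling updates;

These components store and manage the cluster state, but they are not the containers that run your applications.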

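Components running on worker nodes

Running containers depends on the components running on each worker node:

- Kubelet – the Node agent; it manages the container lifecycle and also handles volumes (CSI) and networking (CNI);

- Kubernetes Service proxy (kube-proxy) – provides in-cluster service discovery and load balancing for Services;

- Container runtime (Docker, rkt or others) – the container runtime manages images and actually runs Pods and containers (CRI);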

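Add-on components

Besides the control plane (and the components running on the nodes), several add-ons are needed to provide all the features discussed earlier. They include:

- K8s DNS server – CoreDNS provides DNS service for the entire cluster

- Dashboard (optional) – Dashboard provides a GUI; for experienced ops engineers, the kubectl command-line tool is enough

- Ingress controller – the Ingress Controller provides the entry point for external traffic into services

- Container cluster monitoring – Metrics-server collects K8s resource metrics; Prometheus provides resource monitoring

- CNI (Container Network Interface) plugins – calico, flannel (if you have no need for network policies, just use flannel, which works out of the box; otherwise use calico. Note that if your network uses jumbo frames, calico's default MTU of 1440 must be adjusted to your actual environment to get the best performance)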
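To make the jumbo-frame remark concrete: the pod-side MTU has to leave room for the encapsulation header that Calico adds. The helper below is a minimal sketch; `calico_mtu` is a hypothetical name, and the overhead values (20 bytes for IP-in-IP, 50 bytes for VXLAN) are assumptions taken from Calico's MTU documentation, so check them against the docs for your Calico version. In the calico.yaml manifests of the v3.15 era the value is, as far as I know, the `veth_mtu` key of the `calico-config` ConfigMap.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compute a pod (veth) MTU for Calico from the NIC MTU.
# Overheads are assumptions based on the Calico MTU docs:
#   ipip  -> 20 bytes (extra outer IPv4 header)
#   vxlan -> 50 bytes
#   none  -> 0 bytes (no encapsulation, e.g. plain BGP routing)
calico_mtu() {
  local nic_mtu=$1 encap=${2:-ipip} overhead
  case "$encap" in
    ipip)  overhead=20 ;;
    vxlan) overhead=50 ;;
    none)  overhead=0 ;;
    *) echo "unknown encapsulation: $encap" >&2; return 1 ;;
  esac
  echo $(( nic_mtu - overhead ))
}

# Example: a jumbo-frame network (MTU 9000) using IP-in-IP
calico_mtu 9000 ipip
```

With a 9000-byte NIC and IP-in-IP this prints 8980, far above the 1440 default that suits a standard 1500-byte network.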

简单概括:

API服务器只做了存储资源到etcd和通知客户端有变更的工作。 调度器则只是给pod分配节点(由kubelet来启动容器) 控制管理器里的控制器始终保持活跃的状态,来确保系统真实状态朝API服务器定义的期望的状态收敛

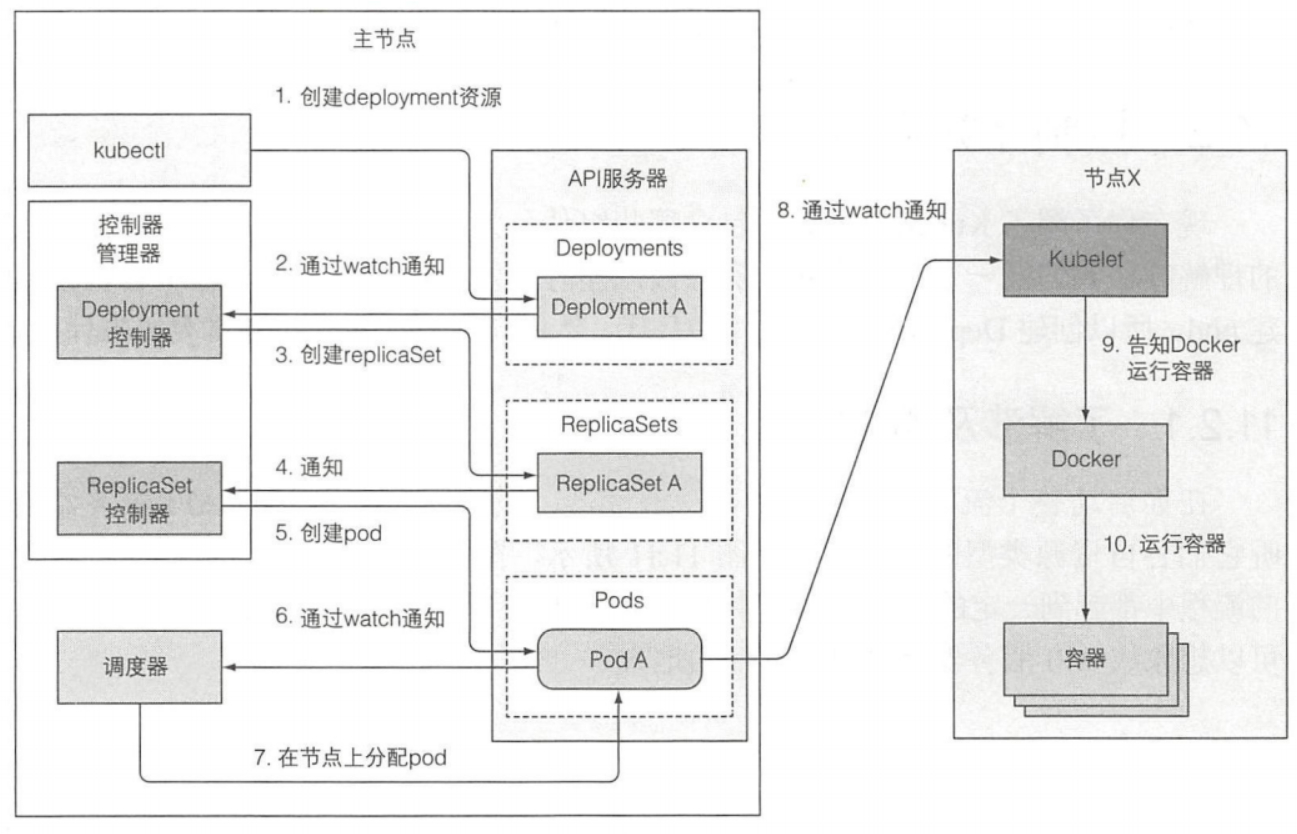

Deployment资源提交到API服务器的事件链

准备包含Deployment清单的YAML文件,通过kubectl提交到Kubernetes。kubectl通过HTTP POST请求发送清单到Kubernetes API服务器。API服务器检查Deployment定义,存储到etcd,返回响应给kubectl,如下图所示:
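To see that HTTP POST without kubectl doing it for you, here is a rough sketch that sends a Deployment to the API server through `kubectl proxy` (which listens on port 8001 by default and handles authentication). The JSON body, the file name `deploy.json` and the `deployments_url` helper are illustrative assumptions, and the request is only attempted if the proxy answers.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumption: `kubectl proxy` is running locally on its default port 8001.
API=${API:-http://127.0.0.1:8001}

# Hypothetical helper: REST collection path for Deployments in a namespace.
deployments_url() {
  echo "${API}/apis/apps/v1/namespaces/${1:-default}/deployments"
}

# Minimal Deployment object as JSON (roughly what kubectl sends after parsing the YAML).
cat > deploy.json <<'EOF'
{
  "apiVersion": "apps/v1",
  "kind": "Deployment",
  "metadata": {"name": "nginx", "labels": {"app": "nginx"}},
  "spec": {
    "replicas": 1,
    "selector": {"matchLabels": {"app": "nginx"}},
    "template": {
      "metadata": {"labels": {"app": "nginx"}},
      "spec": {"containers": [{"name": "nginx", "image": "nginx"}]}
    }
  }
}
EOF

# Only POST when the proxy answers; a "201 Created" reply means the API
# server validated the object and persisted it to etcd.
if curl -fs --max-time 2 "${API}/version" >/dev/null 2>&1; then
  curl -s -X POST -H 'Content-Type: application/json' \
    --data-binary @deploy.json "$(deployments_url default)"
fi
```

Running `kubectl create -f` with `-v=6` should also log the same POST request and its response code, which is a handy way to confirm this flow on a real cluster.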

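Level 2: Installation Packages and System Environment Preparation

Below are the download links for the required software packages and the system image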

# VMware Workstation15

https://www.52pojie.cn/forum.php?mod=viewthread&tid=1027984&highlight=vmware%2B15.5.0

# CentOS-7.9-2009-x86_64-Minimal

https://mirrors.aliyun.com/centos/7.9.2009/isos/x86_64/CentOS-7-x86_64-Minimal-2009.iso

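Installing CentOS 7 is not very complicated. Anyone who wants to learn k8s should build solid Linux fundamentals, and plenty of installation tutorials can be found online, so I won't repeat them here.

Let me ramble for a moment... Looking at the many k8s video courses on the market, installation alone takes up more than half of the course, leaving very little time for real production practice. I'm not saying that approach is seriously wrong; I'm just sharing what worked for me in fast learning and production practice, in the hope that you avoid detours and get k8s working in your day-to-day job sooner. For installing k8s, my production experience is to use an open-source binary-package installer. As the saying goes, a craftsman must first sharpen his tools: we first use mature tooling to deploy a production-grade k8s cluster, then learn how each component works while using it, which gets you twice the result with half the effort. Besides, cloud platforms now all offer managed k8s services, so you don't even need to build a cluster: buy the machines and it is deployed for you, ready to use. It's like learning to drive: you don't have to master every part of a car, how it works and how to repair it before buying one, or hardly anyone would bother to drive. In real life most people get a license, buy a car and start driving, gradually picking up maintenance knowledge along the way. Now let's go through the installation steps...

Why learn K8s? K8s is a container orchestration and management platform that addresses the drawbacks of running docker containers at scale. If I must give a reason to learn and master k8s, it is this: in the coming years k8s will be a core technology on nearly every internet company's platform, and those who don't know it risk being left behind or missing out on the salary they want.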

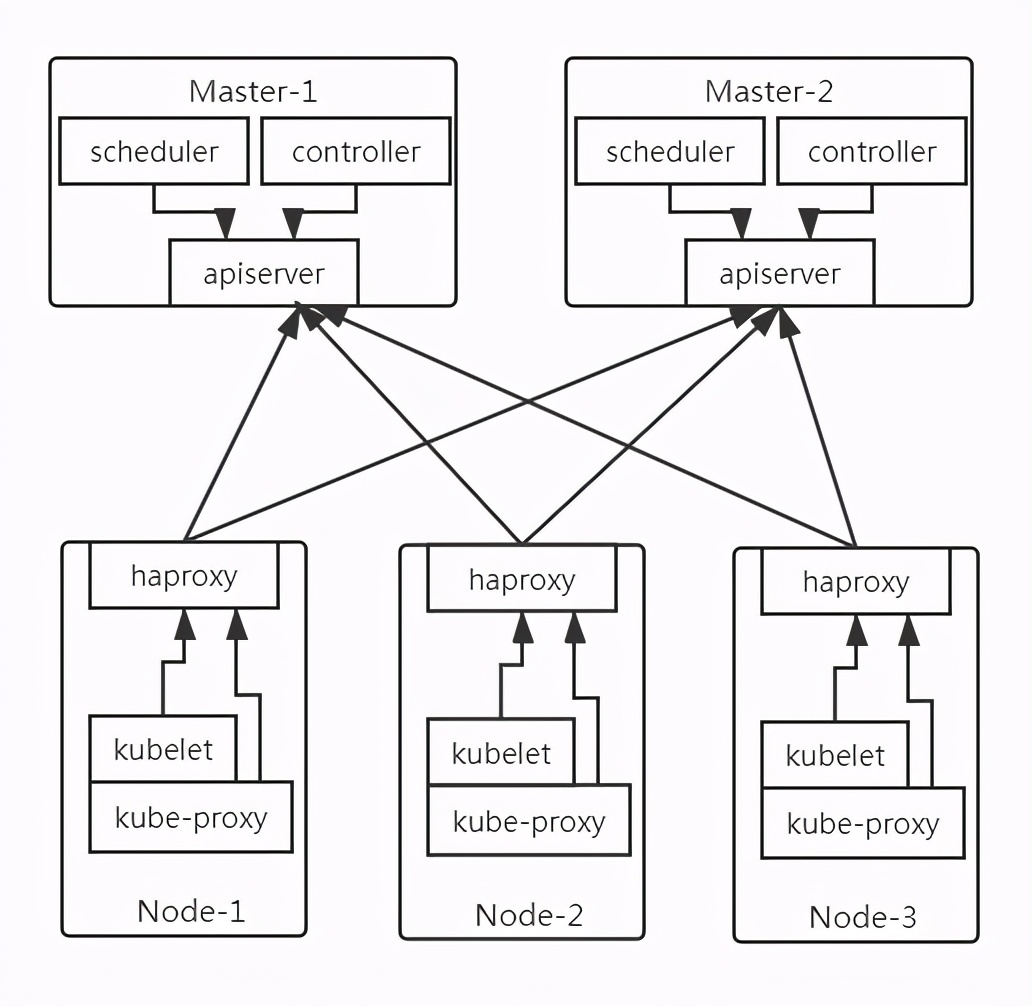

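Level 3: Highly Available Binary Installation of a Production-Grade K8s Cluster

Below are the exact versions of the OS and components used in this k8s cluster installation

- CentOS Linux release 7.9.2009 (Core)

- k8s: v1.20.2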

- docker: 19.03.14

- etcd: v3.4.13

- coredns: v1.7.1

- cni-plugins: v0.8.7

- calico: v3.15.3

Below is the IP and hostname plan for this VM cluster. Please follow the values in my tutorial for now; once your first run succeeds you can change the IPs to suit your needs, and it will go much more smoothly by then.

| IP | hostname | role |
|---|---|---|
| 10.0.1.201【100.50】 | node-1【master】 | master/worker node |
| 10.0.1.202 | node-2 | master/worker node |
| 10.0.1.203【100.60】 | node-3【node】 | worker node |
| 10.0.1.204 | node-4 | worker node |

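Clearly the gear from the previous levels isn't quite enough yet, so let's collect plenty of gear and ammo in this level and fight on to finally defeat K8s!

Here we use the open-source project https://github.com/easzlab/kubeasz to install from binaries. This method supports CentOS/RedHat 7, Debian 9/10 and Ubuntu 16.04/18.04.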

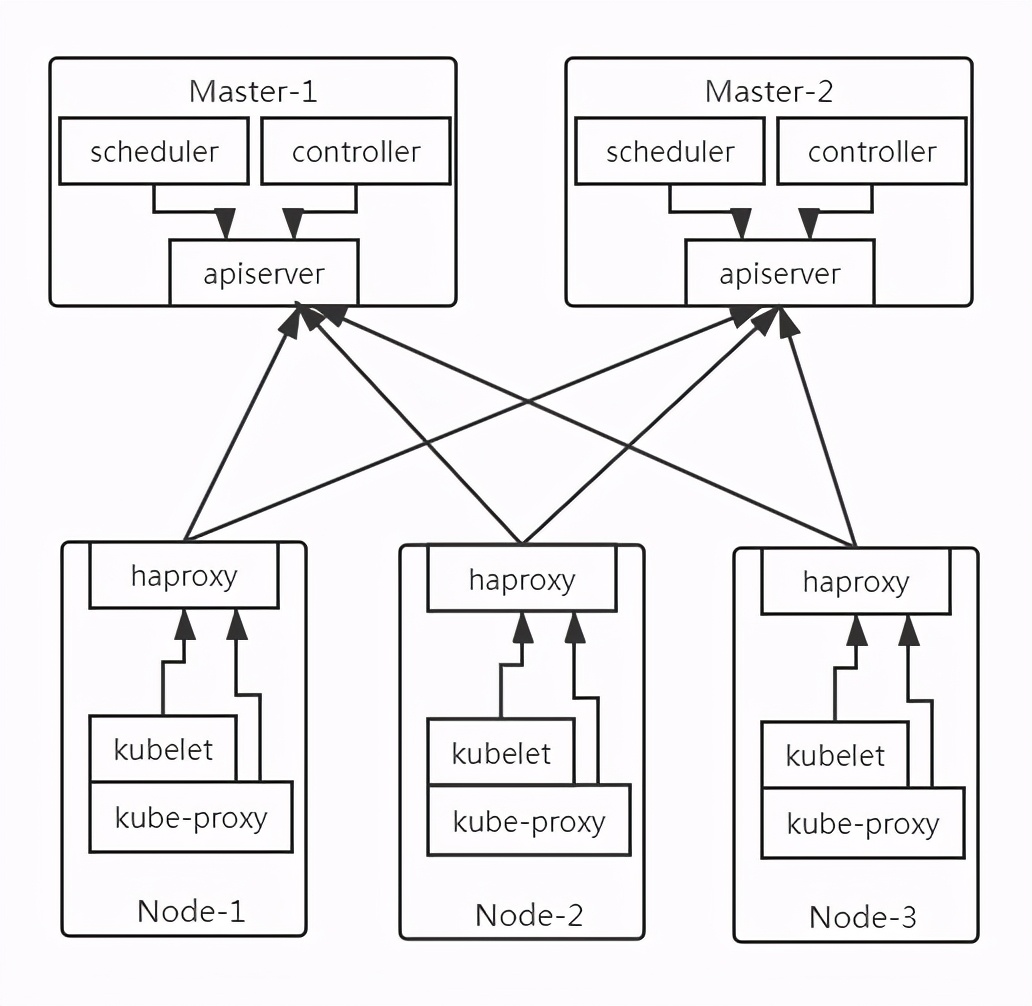

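Deployment network architecture diagram

Installation checklist:

- Set up passwordless SSH from the deploy machine to all k8s nodes

- Install python2 and pip on the deploy machine, then install ansible

- Customize the k8s cluster configuration and start the deployment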
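Before running the installer it can save time to verify the first two checklist items on the deploy machine. This is a minimal sketch with hypothetical helper names (`node_ips`, `check_ssh`, `check_tools`); `BatchMode=yes` makes ssh fail instead of prompting for a password, so a success means key-based login already works.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for the checklist above.

# Expand a network prefix plus host numbers into IPs, one per line,
# the same way the install script combines its netnum/nethosts arguments.
node_ips() {
  local netnum=$1 host
  for host in $2; do   # $2 is intentionally unquoted so it splits on spaces
    echo "${netnum}.${host}"
  done
}

# Checklist item 1: succeeds only if key-based SSH login already works.
check_ssh() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true >/dev/null 2>&1
}

# Checklist item 2: report any missing tool on the deploy machine.
check_tools() {
  local tool missing=0
  for tool in python2 pip ansible; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Example (run on the deploy machine):
# for ip in $(node_ips 10.0.1 "201 202 203 204"); do
#   check_ssh "root@${ip}" && echo "${ip}: ssh ok" || echo "${ip}: needs ssh-copy-id"
# done
# check_tools
```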

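I wrote a shell script that wraps this open-source project (simply put, to save myself some effort), and I'll use it to walk through the whole installation:

Copy the script below into k8s_install_new.sh to prepare for the installation

If copying from here is inconvenient, you can download the script directly from my GitHub repository: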

https://github.com/bogeit/LearnK8s/blob/main/k8s_install_new.sh

#!/bin/bash

# author: boge

# descriptions: this shell script uses ansible to deploy K8S from binaries, for simplicity

# Check arguments

[ $# -ne 6 ] && echo -e "Usage: $0 rootpasswd netnum nethosts cri cni k8s-cluster-name\nExample: bash $0 bogedevops 10.0.1 201\ 202\ 203\ 204 [containerd|docker] [calico|flannel] test\n" && exit 11

# Variable definitions

export release=3.0.0

export k8s_ver=v1.20.2 # v1.20.2, v1.19.7, v1.18.15, v1.17.17

rootpasswd=$1

netnum=$2

nethosts=$3

cri=$4

cni=$5

clustername=$6

if ls -1v ./kubeasz*.tar.gz &>/dev/null;then software_packet="$(ls -1v ./kubeasz*.tar.gz )";else software_packet="";fi

pwd="/etc/kubeasz"

# Upgrade packages on the deploy machine

if cat /etc/redhat-release &>/dev/null;then

yum update -y

else

apt-get update && apt-get upgrade -y && apt-get dist-upgrade -y

[ $? -ne 0 ] && apt-get -yf install

fi

# Check the python environment on the deploy machine

python2 -V &>/dev/null

if [ $? -ne 0 ];then

if cat /etc/redhat-release &>/dev/null;then

yum install -y gcc openssl-devel bzip2-devel

wget https://www.python.org/ftp/python/2.7.16/Python-2.7.16.tgz

tar xzf Python-2.7.16.tgz # tar xvf also works, GNU tar detects gzip on extraction

cd Python-2.7.16

./configure --enable-optimizations

make install

# make install uses the default /usr/local prefix, so link the binary from there

ln -s -f /usr/local/bin/python2.7 /usr/bin/python

cd -

else

apt-get install -y python2.7 && ln -s -f /usr/bin/python2.7 /usr/bin/python

fi

fi

# Configure a faster pip mirror (Aliyun) on the deploy machine

if [[ $clustername != 'aws' ]]; then

mkdir -p ~/.pip

cat > ~/.pip/pip.conf <<CB

[global]

index-url = https://mirrors.aliyun.com/pypi/simple

[install]

trusted-host=mirrors.aliyun.com

CB

fi

# Install the required packages on the deploy machine

# for get-pip.py see https://bootstrap.pypa.io

if cat /etc/redhat-release &>/dev/null;then

yum install git python-pip sshpass -y

[ -f ./get-pip.py ] && python ./get-pip.py || {

wget https://bootstrap.pypa.io/pip/2.7/get-pip.py && python get-pip.py

}

else

apt-get install git python-pip sshpass -y

[ -f ./get-pip.py ] && python ./get-pip.py || {

wget https://bootstrap.pypa.io/pip/2.7/get-pip.py && python get-pip.py

}

fi

python -m pip install --upgrade "pip < 21.0"

pip -V

pip install --no-cache-dir ansible netaddr

# Set up passwordless SSH from the deploy machine to the other nodes

for host in `echo "${nethosts}"`

do

echo "============ ${netnum}.${host} ===========";

if [[ ${USER} == 'root' ]];then

[ ! -f /${USER}/.ssh/id_rsa ] &&\

ssh-keygen -t rsa -P '' -f /${USER}/.ssh/id_rsa

else

[ ! -f /home/${USER}/.ssh/id_rsa ] &&\

ssh-keygen -t rsa -P '' -f /home/${USER}/.ssh/id_rsa

fi

sshpass -p ${rootpasswd} ssh-copy-id -o StrictHostKeyChecking=no ${USER}@${netnum}.${host}

if cat /etc/redhat-release &>/dev/null;then

ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "yum update -y"

else

ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "apt-get update && apt-get upgrade -y && apt-get dist-upgrade -y"

[ $? -ne 0 ] && ssh -o StrictHostKeyChecking=no ${USER}@${netnum}.${host} "apt-get -yf install"

fi

done

# Download the k8s binary installer script on the deploy machine

if [[ ${software_packet} == '' ]];then

curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

sed -ri "s+^(K8S_BIN_VER=).*$+\1${k8s_ver}+g" ezdown

chmod +x ./ezdown

# Download everything with the tool script

./ezdown -D && ./ezdown -P

else

tar xvf ${software_packet} -C /etc/

chmod +x ${pwd}/{ezctl,ezdown}

fi

# Initialize a new k8s cluster config named after ${CLUSTER_NAME}

CLUSTER_NAME="$clustername"

${pwd}/ezctl new ${CLUSTER_NAME}

if [[ $? -ne 0 ]];then

echo "cluster name [${CLUSTER_NAME}] already exists in ${pwd}/clusters/${CLUSTER_NAME}."

exit 1

fi

if [[ ${software_packet} != '' ]];then

# Set the parameter that enables offline installation

sed -i 's/^INSTALL_SOURCE.*$/INSTALL_SOURCE: "offline"/g' ${pwd}/clusters/${CLUSTER_NAME}/config.yml

fi

# to check ansible service

ansible all -m ping

#---------------------------------------------------------------------------------------------------

# Modify the binary installer config: config.yml

sed -ri "s+^(CLUSTER_NAME:).*$+\1 \"${CLUSTER_NAME}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

## Steps for keeping k8s logs and container data on a separate disk (modeled on Alibaba Cloud's setup)

[ ! -d /var/lib/container ] && mkdir -p /var/lib/container/{kubelet,docker}

## cat /etc/fstab

# UUID=105fa8ff-bacd-491f-a6d0-f99865afc3d6 / ext4 defaults 1 1

# /dev/vdb /var/lib/container/ ext4 defaults 0 0

# /var/lib/container/kubelet /var/lib/kubelet none defaults,bind 0 0

# /var/lib/container/docker /var/lib/docker none defaults,bind 0 0

## tree -L 1 /var/lib/container

# /var/lib/container

# ├── docker

# ├── kubelet

# └── lost+found

# docker data dir

DOCKER_STORAGE_DIR="/var/lib/container/docker"

sed -ri "s+^(STORAGE_DIR:).*$+STORAGE_DIR: \"${DOCKER_STORAGE_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

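# containerd data dir (note: this sed targets the same ^STORAGE_DIR: key as the docker one above, so whichever runs last wins)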

CONTAINERD_STORAGE_DIR="/var/lib/container/containerd"

sed -ri "s+^(STORAGE_DIR:).*$+STORAGE_DIR: \"${CONTAINERD_STORAGE_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# kubelet logs dir

KUBELET_ROOT_DIR="/var/lib/container/kubelet"

sed -ri "s+^(KUBELET_ROOT_DIR:).*$+KUBELET_ROOT_DIR: \"${KUBELET_ROOT_DIR}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

if [[ $clustername != 'aws' ]]; then

# docker aliyun repo

REG_MIRRORS="https://pqbap4ya.mirror.aliyuncs.com"

sed -ri "s+^REG_MIRRORS:.*$+REG_MIRRORS: \'[\"${REG_MIRRORS}\"]\'+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

fi

# [docker] trusted (insecure) HTTP registries

sed -ri "s+127.0.0.1/8+${netnum}.0/24+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# disable dashboard auto install

sed -ri "s+^(dashboard_install:).*$+\1 \"no\"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# Put our domain name into MASTER_CERT_HOSTS (extra names for the apiserver certificate): replace the first example entry and comment out the second

CLUSTER_WEBSITE="${CLUSTER_NAME}k8s.gtapp.xyz"

lb_num=$(grep -wn '^MASTER_CERT_HOSTS:' ${pwd}/clusters/${CLUSTER_NAME}/config.yml |awk -F: '{print $1}')

lb_num1=$(expr ${lb_num} + 1)

lb_num2=$(expr ${lb_num} + 2)

sed -ri "${lb_num1}s+.*$+ - "${CLUSTER_WEBSITE}"+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

sed -ri "${lb_num2}s+(.*)$+#\1+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# Maximum number of pods per node

MAX_PODS="120"

sed -ri "s+^(MAX_PODS:).*$+\1 ${MAX_PODS}+g" ${pwd}/clusters/${CLUSTER_NAME}/config.yml

# Modify the binary installer inventory: hosts

# clean old ip

sed -ri '/192.168.1.1/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.2/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.3/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

sed -ri '/192.168.1.4/d' ${pwd}/clusters/${CLUSTER_NAME}/hosts

# Enter the host numbers (last IP octet) for the etcd cluster

echo "enter etcd hosts here (example: 203 202 201) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[etcd/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# Enter the host numbers (last IP octet) for the kube-master group

echo "enter kube-master hosts here (example: 202 201) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[kube_master/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# Enter the host numbers (last IP octet) for the kube-node group

echo "enter kube-node hosts here (example: 204 203) ↓"

read -p "" ipnums

for ipnum in `echo ${ipnums}`

do

echo $netnum.$ipnum

sed -i "/\[kube_node/a $netnum.$ipnum" ${pwd}/clusters/${CLUSTER_NAME}/hosts

done

# Configure the CNI network plugin

case ${cni} in

flannel)

sed -ri "s+^CLUSTER_NETWORK=.*$+CLUSTER_NETWORK=\"${cni}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

;;

calico)

sed -ri "s+^CLUSTER_NETWORK=.*$+CLUSTER_NETWORK=\"${cni}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

;;

*)

echo "cni need be flannel or calico."

exit 11

esac

# Schedule a daily cron job that backs up the K8s etcd data

if cat /etc/redhat-release &>/dev/null;then

if ! grep -w '94.backup.yml' /var/spool/cron/root &>/dev/null;then echo "00 00 * * * `which ansible-playbook` ${pwd}/playbooks/94.backup.yml &> /dev/null" >> /var/spool/cron/root;else echo exists ;fi

chown root:root /var/spool/cron/root

chmod 600 /var/spool/cron/root

else

if ! grep -w '94.backup.yml' /var/spool/cron/crontabs/root &>/dev/null;then echo "00 00 * * * `which ansible-playbook` ${pwd}/playbooks/94.backup.yml &> /dev/null" >> /var/spool/cron/crontabs/root;else echo exists ;fi

chown root.crontab /var/spool/cron/crontabs/root

chmod 600 /var/spool/cron/crontabs/root

fi

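rm -f /var/run/cron.reboot

# the cron service is named crond on RedHat/CentOS but cron on Debian/Ubuntu

if cat /etc/redhat-release &>/dev/null;then service crond restart;else service cron restart;fi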

#---------------------------------------------------------------------------------------------------

# Ready to start the installation

rm -rf ${pwd}/{dockerfiles,docs,.gitignore,pics} &&\

find ${pwd}/ -name '*.md'|xargs rm -f

read -p "Enter to continue deploy k8s to all nodes >>>" YesNobbb

# now start deploy k8s cluster

cd ${pwd}/

# to prepare CA/certs & kubeconfig & other system settings

${pwd}/ezctl setup ${CLUSTER_NAME} 01

sleep 1

# to setup the etcd cluster

${pwd}/ezctl setup ${CLUSTER_NAME} 02

sleep 1

# to setup the container runtime(docker or containerd)

case ${cri} in

containerd)

sed -ri "s+^CONTAINER_RUNTIME=.*$+CONTAINER_RUNTIME=\"${cri}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

${pwd}/ezctl setup ${CLUSTER_NAME} 03

;;

docker)

sed -ri "s+^CONTAINER_RUNTIME=.*$+CONTAINER_RUNTIME=\"${cri}\"+g" ${pwd}/clusters/${CLUSTER_NAME}/hosts

${pwd}/ezctl setup ${CLUSTER_NAME} 03

;;

*)

echo "cri need be containerd or docker."

exit 11

esac

sleep 1

# to setup the master nodes

${pwd}/ezctl setup ${CLUSTER_NAME} 04

sleep 1

# to setup the worker nodes

${pwd}/ezctl setup ${CLUSTER_NAME} 05

sleep 1

# to setup the network plugin(flannel、calico...)

${pwd}/ezctl setup ${CLUSTER_NAME} 06

sleep 1

# to setup other useful plugins(metrics-server、coredns...)

${pwd}/ezctl setup ${CLUSTER_NAME} 07

sleep 1

# [Optional] OS-level security hardening for all cluster nodes https://github.com/dev-sec/ansible-os-hardening

#ansible-playbook roles/os-harden/os-harden.yml

#sleep 1

cd `dirname ${software_packet:-/tmp}`

k8s_bin_path='/opt/kube/bin'

echo "------------------------- k8s version list ---------------------------"

${k8s_bin_path}/kubectl version

echo

echo "------------------------- All Healthy status check -------------------"

${k8s_bin_path}/kubectl get componentstatus

echo

echo "------------------------- k8s cluster info list ----------------------"

${k8s_bin_path}/kubectl cluster-info

echo

echo "------------------------- k8s all nodes list -------------------------"

${k8s_bin_path}/kubectl get node -o wide

echo

echo "------------------------- k8s all-namespaces's pods list ------------"

${k8s_bin_path}/kubectl get pod --all-namespaces

echo

echo "------------------------- k8s all-namespaces's service network ------"

${k8s_bin_path}/kubectl get svc --all-namespaces

echo

echo "------------------------- k8s welcome for you -----------------------"

echo

# add the short alias k for kubectl, with command completion

echo "alias k=kubectl && complete -F __start_kubectl k" >> ~/.bashrc

# get dashboard url

${k8s_bin_path}/kubectl cluster-info|grep dashboard|awk '{print $NF}'|tee -a /root/k8s_results

# get login token

${k8s_bin_path}/kubectl -n kube-system describe secret $(${k8s_bin_path}/kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')|grep 'token:'|awk '{print $NF}'|tee -a /root/k8s_results

echo

echo "you can look again dashboard and token info at >>> /root/k8s_results <<<"

#echo ">>>>>>>>>>>>>>>>> You can execute command [ source ~/.bashrc ] <<<<<<<<<<<<<<<<<<<<"

echo ">>>>>>>>>>>>>>>>> You need to execute command [ reboot ] to restart all nodes <<<<<<<<<<<<<<<<<<<<"

rm -f $0

[ -f "${software_packet}" ] && rm -f "${software_packet}"

#rm -f ${pwd}/roles/deploy/templates/${USER_NAME}-csr.json.j2

#sed -ri "s+${USER_NAME}+admin+g" ${pwd}/roles/prepare/tasks/main.yml

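Run the installation script as follows

# Start an online installation (here the container runtime is docker, the CNI is calico and the K8s cluster name is test)

bash k8s_install_new.sh bogedevops 10.0.1 201\ 202\ 203\ 204 docker calico test

# Note: an online installation downloads files and images from GitHub and Docker Hub, and reaching these overseas sites can be very slow. I have prepared a complete offline installation package; download it from the link below, put it in the same directory as the install script above, and run the same install command

# The k8s version in this offline package is v1.20.2

https://cloud.189.cn/t/3YBV7jzQZnAb (access code: 0xde)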

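# The script is mostly automated; at the prompts below, just copy and paste as instructed and press Enter

# Enter the host numbers for the etcd cluster: copy 203 202 201, paste, and press Enter

echo "enter etcd hosts here (example: 203 202 201) ↓"

# Enter the host numbers for the kube-master group: copy 202 201, paste, and press Enter

echo "enter kube-master hosts here (example: 202 201) ↓"

# Enter the host numbers for the kube-node group: copy 204 203, paste, and press Enter

echo "enter kube-node hosts here (example: 204 203) ↓"

# You'll be asked whether to continue; if everything looks fine, just press Enter

Enter to continue deploy k8s to all nodes >>>

# After installation, reload the environment so kubectl command completion takes effect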

. ~/.bashrc
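Why does the example input for etcd read `203 202 201`? The script fills each group with `sed -i "/\[etcd/a ..."`, and the `a` command appends right below the matching group header, so each new IP lands above the ones added before it. The sketch below replays that loop on a throwaway file; it assumes GNU sed (which accepts the one-line `a text` form), and the three-line sample file is a simplified stand-in for kubeasz's real hosts file.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Simplified stand-in for the kubeasz hosts inventory (assumption).
hosts_file=$(mktemp)
printf '[etcd]\n[kube_master]\n[kube_node]\n' > "$hosts_file"

netnum=10.0.1
for ipnum in 203 202 201; do
  # "a" appends the text directly after every line matching \[etcd
  sed -i "/\[etcd/a ${netnum}.${ipnum}" "$hosts_file"
done

cat "$hosts_file"
```

The resulting [etcd] group lists 10.0.1.201, 10.0.1.202 and 10.0.1.203 in ascending order, i.e. the reverse of the input order.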

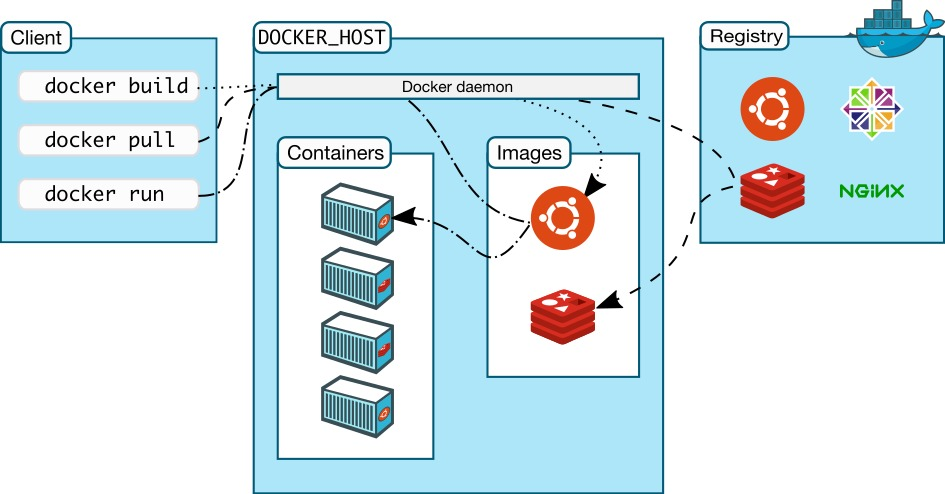

第4关 K8s最得意的小弟Docker

知己知彼方能百战百胜,无论是游戏还是技术都是同一个道理,docker只是容器化引擎中的一种,但由于它入行较早,深得K8s的青睐,所以现在大家提到容器技术就想到docker,docker俨然成为了容器技术的代名词了,那我们该如何击败这个docker呢,下面我们仔细分析下docker它的各个属性和技能吧。

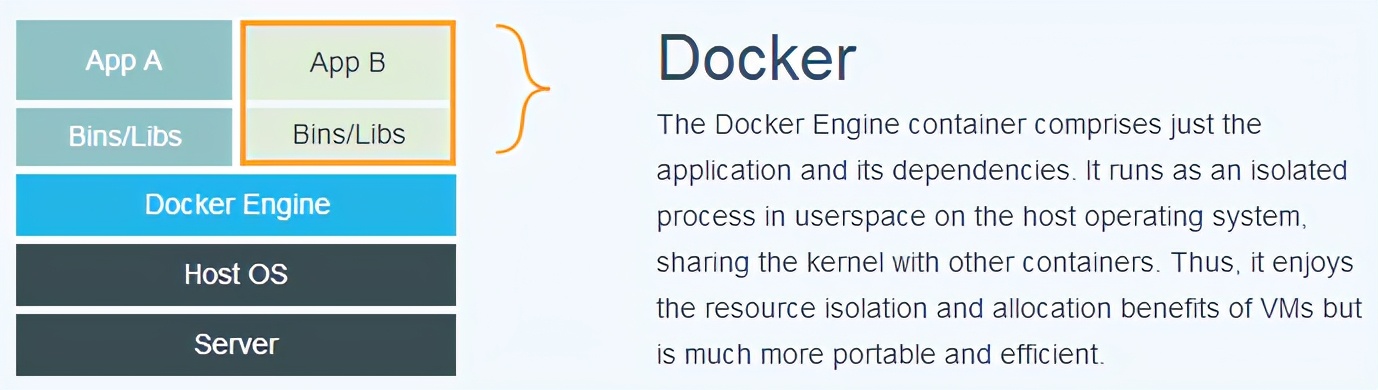

Docker是dotCloud公司用Google公司推出的Go语言开发实现,基于Linux内核的namespace、cgroup,以及AUFS类的Union FS等技术,对进程进行封装隔离,属于操作系统层面的虚拟化技术。

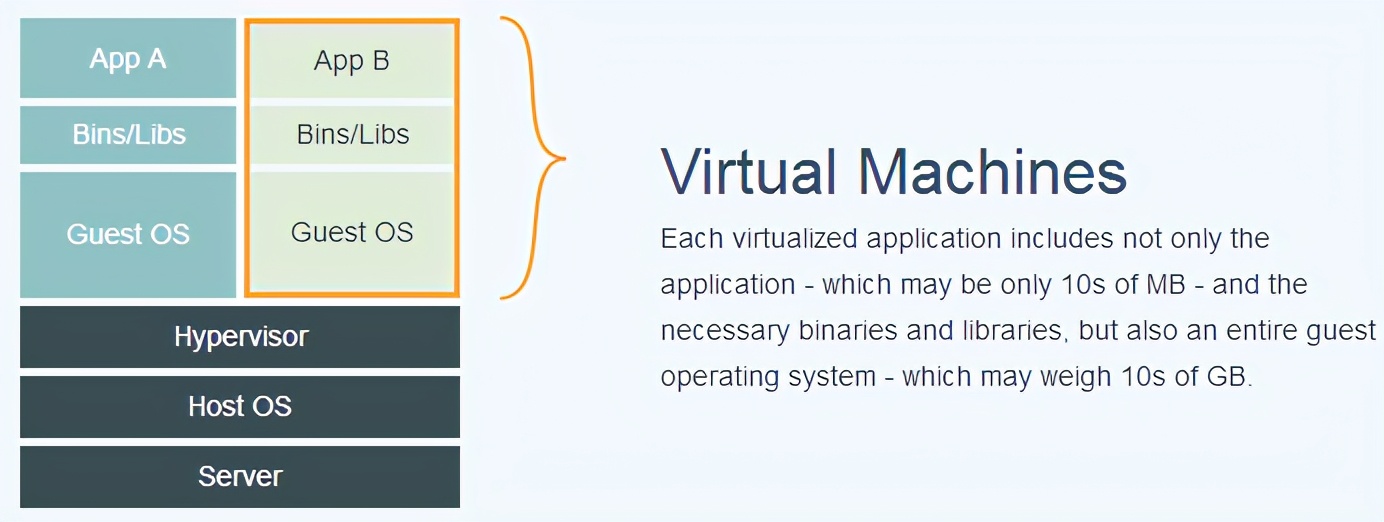

下面的图片比较了 Docker 和传统虚拟化方式的不同之处。传统虚拟机技术是虚拟出一套硬件后,在其上运行一个完整操作系统,在该系统上再运行所需应用进程;而容器内的应用进程直接运行于宿主的内核,容器内没有自己的内核,而且也没有进行硬件虚拟。因此容器要比传统虚拟机更为轻便。

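Why use Docker?

- More efficient use of system resources

- Faster startup

- Consistent runtime environments

- Continuous delivery and deployment

- Easier migration

- Easier maintenance and scaling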

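Summary: containers compared with traditional virtual machines

| Feature | Container | Virtual machine |
|---|---|---|
| Startup | Seconds | Minutes |
| Disk usage | Usually MB | Usually GB |
| Performance | Near native | Weaker than native |
| Density per host | Thousands of containers per host | Usually dozens |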

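Docker's three signature moves are:

- Image

- Container

- Repository

Docker's ultimate move is:

- Dockerfile

Below, using real production cases, let's get familiar with docker's whole lifecycle so we can defeat it in one blow.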

python

FROM python:3.7-slim-stretch

LABEL maintainer="boge"

WORKDIR /app

COPY requirements.txt .

RUN sed -i 's/deb.debian.org/ftp.cn.debian.org/g' /etc/apt/sources.list \

&& sed -i 's/security.debian.org/ftp.cn.debian.org/g' /etc/apt/sources.list \

&& apt-get update -y \

&& apt-get install -y wget gcc libsm6 libxext6 libglib2.0-0 libxrender1 git vim \

&& apt-get clean && apt-get autoremove -y && rm -rf /var/lib/apt/lists/*

RUN pip install --no-cache-dir -i https://mirrors.aliyun.com/pypi/simple -r requirements.txt \

&& rm requirements.txt

COPY . .

EXPOSE 5000

# curl is not installed in this slim image, so probe with wget (installed above)

HEALTHCHECK CMD wget -q --spider http://localhost:5000 || exit 1

ENTRYPOINT ["gunicorn", "app:app", "-c", "gunicorn_config.py"]

golang

# stage 1: build src code to binary

FROM golang:1.13-alpine3.10 as builder

LABEL maintainer="boge"

ENV GOPROXY https://goproxy.cn

# ENV GO111MODULE on

COPY *.go /app/

RUN cd /app && CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -ldflags "-s -w" -o hellogo .

# stage 2: use alpine as base image

FROM alpine:3.10

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && \

apk update && \

apk --no-cache add tzdata ca-certificates && \

cp -f /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \

# apk del tzdata && \

rm -rf /var/cache/apk/*

COPY --from=builder /app/hellogo /hellogo

CMD ["/hellogo"]

nodejs

FROM node:12.6.0-alpine

LABEL maintainer="boge"

WORKDIR /app

COPY package.json .

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && \

apk update && \

yarn config set registry https://registry.npm.taobao.org && \

yarn install

# copy the source code before building, since yarn build needs it

COPY . .

RUN yarn build

EXPOSE 6868

ENTRYPOINT ["yarn", "start"]

java

FROM maven:3.6.3-adoptopenjdk-8 as target

ENV MAVEN_HOME /usr/share/maven

ENV PATH $MAVEN_HOME/bin:$PATH

COPY settings.xml /usr/share/maven/conf/

WORKDIR /build

COPY pom.xml .

RUN mvn dependency:go-offline # use docker cache

COPY src/ /build/src/

RUN mvn clean package -Dmaven.test.skip=true

FROM java:8

WORKDIR /app

RUN rm /etc/localtime && cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

COPY --from=target /build/target/*.jar /app/app.jar

EXPOSE 8080

ENTRYPOINT ["java","-Xmx768m","-Xms256m","-Djava.security.egd=file:/dev/./urandom","-jar","/app/app.jar"]

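Walkthrough of the whole docker lifecycle

# Log in to the docker image registry

docker login "registry-address" -u "registry-username" -p "registry-password"

# Pull the image from the registry

docker pull "registry-address"/"registry-namespace"/"image-name":latest || true

# Build a local image from the Dockerfile

docker build --network host --build-arg PYPI_IP="xx.xx.xx.xx" --cache-from "registry-address"/"registry-namespace"/"image-name":latest --tag "registry-address"/"registry-namespace"/"image-name":"image-version" --tag "registry-address"/"registry-namespace"/"image-name":latest .

# Push the locally built image to the remote registry

docker push "registry-address"/"registry-namespace"/"image-name":"image-version"

docker push "registry-address"/"registry-namespace"/"image-name":latest

# Run a docker container from the redis image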

docker run --name myredis --net host -d redis:6.0.2-alpine3.11 redis-server --requirepass boGe666
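The lifecycle above can be wrapped into one script. This is a sketch, not a drop-in CI job: `REGISTRY`, `NAMESPACE`, `IMAGE` and `VERSION` are placeholder variables, and `DRY_RUN=1` (the default here) only prints the commands so you can review them first.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholder values (assumptions); override them via environment variables.
REGISTRY=${REGISTRY:-registry.example.com}
NAMESPACE=${NAMESPACE:-demo}
IMAGE=${IMAGE:-flask}
VERSION=${VERSION:-v0.0.1}
DRY_RUN=${DRY_RUN:-1}

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

ref="${REGISTRY}/${NAMESPACE}/${IMAGE}"

# Pull the previous "latest" so --cache-from can reuse its layers;
# a missing image (e.g. the very first build) is not an error.
run docker pull "${ref}:latest" || true
run docker build --cache-from "${ref}:latest" \
  --tag "${ref}:${VERSION}" --tag "${ref}:latest" .
run docker push "${ref}:${VERSION}"
run docker push "${ref}:latest"
```

For real runs, `docker login` with `--password-stdin` (reading the password from a file or pipe) keeps the password out of your shell history, unlike `-p`.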

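Time for hands-on practice: let's take on the Level 4 mini-boss and earn our experience points

I have uploaded the flask and golang projects above to a cloud drive:

https://cloud.189.cn/t/M36fYrIrEbui (access code: hy47)

After downloading and unzipping, you will get 2 directories: python and golang

# Unzip

unzip docker-file.zip

# First build the python project's image and run it as a test

cd python

docker build -t python/flask:v0.0.1 .

docker run -d -p 80:5000 python/flask:v0.0.1

# Then build the golang project's image and run it as a test (host port 80 is already taken by the flask container, so map 8080 instead)

cd ../golang

docker build -t boge/golang:v0.0.1 .

docker run -d -p 8080:3000 boge/golang:v0.0.1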

Level 5: K8s Battle Strategy, Part 1

In Level 3 we deployed a K8s cluster from binaries; now let's take on K8s itself

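K8s API objects (the full list of monsters)

- Namespace – namespaces isolate resources within the same cluster

- Pod – the smallest runnable unit in K8s

- ReplicaSet – enables smooth rolling updates and rollbacks of pods; you don't need to operate it directly

- Deployment – used to release stateless applications

- Health Check – Readiness/Liveness/maxSurge/maxUnavailable service health checks

- Service, Endpoint – load-balance traffic across multiple pods that share the same labels

- Labels – labels, the key basis on which services select what they access

- Ingress – the K8s traffic entry point

- DaemonSet – used to release daemon applications, such as the CNI plugin we deployed

- HPA – Horizontal Pod Autoscaling

- Volume – storage volumes

- PV, PVC, StorageClass – persistent volumes, persistent volume claims, and dynamically provisioned PVs

- StatefulSet – used to release stateful applications

- Job, CronJob – one-off jobs and scheduled jobs

- ConfigMap, Secret – service configuration and sensitive service configuration

- Kube-proxy – provides traffic forwarding for Services; you don't need to operate it directly

- RBAC, ServiceAccount, Role, RoleBinding, ClusterRole, ClusterRoleBinding – role-based access control

- Events – the K8s event stream, which can be used to monitor related events; you don't need to operate it directly

Feeling a bit dizzy after that pile of concepts? Don't worry: you'll meet every one of these mini-monsters in the levels ahead, and I'll show you how to beat each of them. Let's go!

OK, this level is long, so in this lesson we'll first take on two mini-monsters: Namespace and Pod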

Namespace

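Namespaces, abbreviated ns from here on. In K8s, most resources are scoped to an ns, which isolates them; a few, such as PV and ClusterRole, are not namespaced, as we'll see later.

# List the namespaces in the cluster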

# kubectl get ns

NAME STATUS AGE

default Active 5d3h

kube-node-lease Active 5d3h

kube-public Active 5d3h

kube-system Active 5d3h

# Add -n namespaceName to kubectl to view the resources in that ns

# kubectl -n kube-system get pod

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-7fdc86d8ff-2mcm9 1/1 Running 1 29h

calico-node-dlt57 1/1 Running 1 29h

calico-node-tvzqj 1/1 Running 1 29h

calico-node-vh6sk 1/1 Running 1 29h

calico-node-wpsfh 1/1 Running 1 29h

coredns-d9b6857b5-tt7j2 1/1 Running 1 29h

metrics-server-869ffc99cd-n2dc4 1/1 Running 2 29h

nfs-provisioner-01-77549d5487-dbmv5 1/1 Running 2 29h

# kubectl -n kube-system top pod # show pod resource usage

# Without -n, commands operate in the default namespace; in production, quick tests of resources are usually done here

[root@node-1 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-867c95f465-njv78 1/1 Running 0 12m

[root@node-1 ~]# kubectl -n default get pod

NAME READY STATUS RESTARTS AGE

nginx-867c95f465-njv78 1/1 Running 0 12m

# Creating one is simple too

[root@node-1 ~]# kubectl create ns test

namespace/test created

[root@node-1 ~]# kubectl get ns|grep test

test

# Delete the ns

# kubectl delete ns test

namespace "test" deleted

A production tip: handling a namespace stuck in Terminating after you delete it

# kubectl get ns

NAME STATUS AGE

default Active 5d4h

ingress-nginx Active 30h

kube-node-lease Active 5d4h

kube-public Active 5d4h

kube-system Active 5d4h

kubevirt Terminating 2d2h # <------ here

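1. (Optional) Open a new window and run kubectl proxy

> This command starts a proxy that accepts HTTP connections from your local machine and forwards them to the API server, handling authentication along the way

2. Open a new terminal window, save the shell script below as `1.sh` and run it; the $1 argument is the name of the namespace that can't be deleted. The script starts its own kubectl proxy in the background, so step 1 is not strictly required; if a proxy from step 1 is already listening on port 8001, the script's own proxy fails to start, which is why the output below ends with a harmless "kill: ... No such process" message.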

#------------------------------------------------------------------------------------

#!/bin/bash

set -eo pipefail

die() { echo "$*" 1>&2 ; exit 1; }

need() {

which "$1" &>/dev/null || die "Binary '$1' is missing but required"

}

# checking pre-reqs

need "jq"

need "curl"

need "kubectl"

PROJECT="$1"

test -n "$PROJECT" || die "Missing arguments: kill-ns <namespace>"

shift

kubectl proxy &>/dev/null &

PROXY_PID=$!

killproxy () {

kill $PROXY_PID

}

trap killproxy EXIT

sleep 1 # give the proxy a second

kubectl get namespace "$PROJECT" -o json | jq 'del(.spec.finalizers[] | select(. == "kubernetes"))' | curl -s -k -H "Content-Type: application/json" -X PUT -o /dev/null --data-binary @- http://localhost:8001/api/v1/namespaces/$PROJECT/finalize && echo "Killed namespace: $PROJECT"

#------------------------------------------------------------------------------------

3. Run the script to delete the namespace

# bash 1.sh kubevirt

Killed namespace: kubevirt

1.sh: line 23: kill: (9098) - No such process

4. Check the result

# kubectl get ns

NAME STATUS AGE

default Active 5d4h

ingress-nginx Active 30h

kube-node-lease Active 5d4h

kube-public Active 5d4h

kube-system Active 5d4h

Pod

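kubectl is the essential CLI tool for managing K8s and every ops engineer must master it, but what if you can't remember all of its subcommands? Let's take creating a pod as an example

Make good use of the -h help flag

# In newer K8s versions each command has a clear purpose and creates its corresponding resource, instead of being mixed up as in older versions (not the main point here). To create a pod, run kubectl run -h to see the help, and everything becomes clear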

# kubectl run -h

Create and run a particular image in a pod.

Examples:

# Start a nginx pod.

kubectl run nginx --image=nginx

......

# Use the first example from the help to create an nginx pod

# kubectl run nginx --image=nginx

pod/nginx created

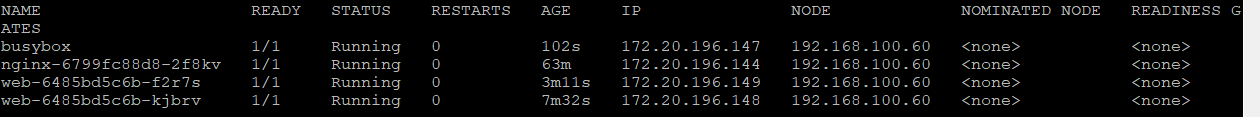

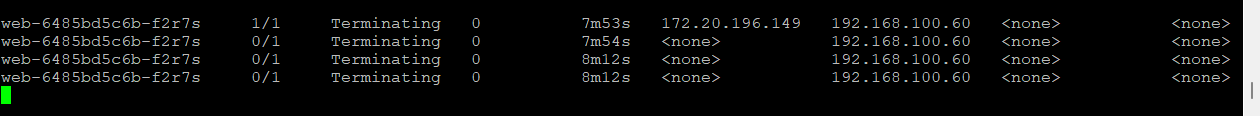

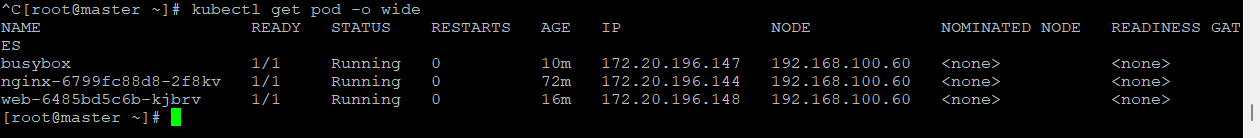

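# Once the image download finishes, the pod will be Running (two useful flags here: -w keeps watching changes to the given resources in the current namespace; -o wide lists more details, such as the node the pod runs on)

# Note: in the READY column, the second number (1) is how many containers the pod is expected to have, and the first number (1) is how many of them are running and ready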

# kubectl get pod -w -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx 1/1 Running 0 2m35s 172.20.139.67 10.0.1.203 <none> <none>
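The READY column can also be checked from a script. The sketch below relies only on the `ready/total` text format shown above; `pod_fully_ready` is a hypothetical helper that succeeds when every container in the pod is ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only when a READY value such as "1/1"
# means all of the pod's containers are ready ("1/2" or "0/0" fail).
pod_fully_ready() {
  local ready=${1%%/*} total=${1##*/}
  [ "$total" -gt 0 ] && [ "$ready" -eq "$total" ]
}

# Example usage against live output (needs a cluster):
# kubectl get pod --no-headers | while read -r name ready _; do
#   pod_fully_ready "$ready" || echo "$name is not ready ($ready)"
# done
```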

# Send a request to this pod's IP

# curl 172.20.139.67

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

......

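# Let's exec into the pod and change the page content. This introduces the exec subcommand: -i keeps stdin open and -t allocates a TTY, so the session doesn't drop right after connecting

# ************************************************************

# kubectl exec -it nginx -- sh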

# echo 'hello, world!' > /usr/share/nginx/html/index.html

# exit

# curl 172.20.139.67

hello, world!

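# Let's analyze this pod's whole startup flow in detail with the kubectl describe subcommand, which shows detailed information about the given resource. Key point: this command is especially important for troubleshooting K8s problems in production

# kubectl describe pod nginx # the output is long; only the parts relevant right now are shown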

Name: nginx

Namespace: default

Priority: 0

Node: 10.0.1.203/10.0.1.203

Start Time: Tue, 24 Nov 2020 14:23:56 +0800

Labels: run=nginx

Annotations: <none>

Status: Running

IP: 172.20.139.67

IPs:

IP: 172.20.139.67

Containers:

nginx:

Container ID: docker://2578019be269d7b1ad02ab4dd0a8b883e79fc491ae9c5db6164120f3e1dde8c7

Image: nginx

Image ID: docker-pullable://nginx@sha256:c3a1592d2b6d275bef4087573355827b200b00ffc2d9849890a4f3aa2128c4ae

Port: <none>

Host Port: <none>

State: Running

...... (middle part omitted)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 5m41s default-scheduler Successfully assigned default/nginx to 10.0.1.203

Normal Pulling 5m40s kubelet Pulling image "nginx"

Normal Pulled 5m25s kubelet Successfully pulled image "nginx"

Normal Created 5m25s kubelet Created container nginx

Normal Started 5m25s kubelet Started container nginx

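# Focus on the Events chain at the end

1. kubectl sends the request to create the pod to the API Server

2. The API Server validates the pod and stores it in etcd (a bare Pod created with kubectl run, like this one, is not managed by any controller in the Controller Manager)

3. The Scheduler schedules it: the first Events entry clearly shows the pod was assigned to node 10.0.1.203. The kubelet there then pulls the container image and creates and starts the nginx container until the service is fully up. When something goes wrong, the detailed error messages also show up here

In production, however, we don't recommend creating pods directly like this. Let's demonstrate why:

# Delete the nginx pod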

# kubectl delete pod nginx

pod "nginx" deleted

# kubectl get pod

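The pod is gone now. Suppose this were a service we were running and the node hosting the pod went down: the service would simply be gone, because a bare pod is not automatically moved to another node, so we'd lose K8s's most important feature of keeping services in their desired state.

A small tip for listing an image's tags, handy for choosing a tag version:

This script comes from the binary K8s installer project above; since it is very handy for looking up docker image versions, I'm sharing it here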

# cat /opt/kube/bin/docker-tag

#!/bin/bash

#

MTAG=$2

CONTAIN=$3

function usage() {

cat << HELP

docker-tag -- list all tags for a Docker image on a remote registry

EXAMPLE:

- list all tags for nginx:

docker-tag tags nginx

- list all nginx tags containing alpine:

docker-tag tags nginx alpine

HELP

}

if [ $# -lt 1 ]; then

usage

exit 2

fi

function tags() {

TAGS=$(curl -ksL https://registry.hub.docker.com/v1/repositories/${MTAG}/tags | sed -e 's/[][]//g' -e 's/"//g' -e 's/ //g' | tr '}' '\n' | awk -F: '{print $3}')

if [ "${CONTAIN}" != "" ]; then

echo -e $(echo "${TAGS}" | grep "${CONTAIN}") | tr ' ' '\n'

else

echo "${TAGS}"

fi

}

case $1 in

tags)

tags

;;

*)

usage

;;

esac

The output looks like this:

# docker-tag tags nginx

latest

1

1-alpine

1-alpine-perl

1-perl

1.10

1.10-alpine
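Note that the script queries Docker Hub's old v1 endpoint, which Docker Hub has since retired as far as I know, so it may no longer return anything. A rough sketch against the v2 API follows; the URL layout (official images under `library/`) and the per-tag `"name"` field are my assumptions about that API, it only reads the first page of up to 100 tags, and the grep/sed parsing is deliberately crude to avoid a jq dependency.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the assumed v2 tags URL for a repository.
hub_tags_url() {
  local repo=$1
  case "$repo" in
    */*) ;;                       # already "namespace/name"
    *) repo="library/${repo}" ;;  # official images live under library/
  esac
  echo "https://hub.docker.com/v2/repositories/${repo}/tags?page_size=100"
}

# Crude JSON parsing without jq: print the value of every "name" field.
extract_names() {
  grep -o '"name": *"[^"]*"' | sed -E 's/"name": *"([^"]*)"/\1/'
}

# Needs network access; only the first page of results is read.
list_tags() {
  curl -fsSL "$(hub_tags_url "$1")" | extract_names
}

# Usage: list_tags nginx | grep alpine
```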

Pod mini-monster battle (homework)

# Try creating a pod running redis, use exec to enter it, connect to redis-server with the redis-cli client, insert the key a with the value 666, and finally delete the redis pod

root@redis:/data# redis-cli

127.0.0.1:6379> get a

(nil)

127.0.0.1:6379> set a 666

OK

127.0.0.1:6379> get a

"666"

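One possible non-interactive answer to the homework, as a sketch: the pod name `redis` and the image tag (reused from the docker example earlier) are assumptions, and with the default `DRY_RUN=1` the script only prints the kubectl commands.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Pod name and image tag are assumptions; DRY_RUN=1 only prints commands.
DRY_RUN=${DRY_RUN:-1}
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

run kubectl run redis --image=redis:6.0.2-alpine3.11
# Wait until the container is ready before talking to redis-server.
run kubectl wait --for=condition=Ready pod/redis --timeout=120s
# Run redis-cli inside the pod without opening an interactive shell.
run kubectl exec redis -- redis-cli set a 666
run kubectl exec redis -- redis-cli get a
run kubectl delete pod redis
```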
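Level 5: K8s Battle Strategy, Part 2 - Deployment

Deployment

In this lesson, follow Boge (博哥爱运维) to take on the Deployment monster

K8s manages the lifecycle of Pods through various Controllers. To meet different business scenarios, K8s provides several Controllers such as Deployment, ReplicaSet, DaemonSet, StatefulSet, Job and CronJob. We'll start with Deployment, the controller we use most in production, which is suited to releasing stateless applications.

Let's first run a Deployment example:

# Create a deployment using the nginx image; the replica count defaults to 1 and the nginx image tag is latest

# Newer K8s versions reorganized the commands so that each has a clear responsibility, instead of mixing them up as older versions did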

# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

# Check the result

# kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 0/1 1 0 6s

# kubectl get rs # <-- view the ReplicaSet created automatically along with it

NAME DESIRED CURRENT READY AGE

nginx-f89759699 1 1 0 10s

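# kubectl get pod # <-- view the generated pod; the image download takes a while, so be patient. Notice that the pod name contains f89759699, the same as the rs above: the pod is created by that rs. Why this extra layer? We'll demonstrate with an example later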

NAME READY STATUS RESTARTS AGE

nginx-f89759699-26fzd 0/1 ContainerCreating 0 13s

# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-f89759699-26fzd 1/1 Running 0 98s

# Scale up the number of pods

# kubectl scale deployment nginx --replicas=2

deployment.apps/nginx scaled

# Check the pods after scaling

# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-f89759699-26fzd 1/1 Running 0 112s

nginx-f89759699-9s4dw 0/1 ContainerCreating 0 2s

# Check whether the pods are spread across different nodes

# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-f89759699-26fzd 1/1 Running 0 45m 172.20.0.16 10.0.1.202 <none> <none>

nginx-f89759699-9s4dw 1/1 Running 0 43m 172.20.1.14 10.0.1.201 <none> <none>

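# Next, change the nginx image version in this deployment to explain why the rs (ReplicaSet) layer is needed; this is important

# First check the current nginx version: request any nonexistent URI and the error page prints the nginx version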

curl 10.68.86.85/1

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.19.4</center>

</body>

</html>

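# The output shows nginx/1.19.4. Using the docker-tag command mentioned earlier to list other nginx versions, I picked the 1.9.9 tag

# Note the `--record` flag at the end: in production it is an important marker for rolling back resource changes, so I strongly recommend adding it to every change you make in production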

# kubectl set image deployment/nginx nginx=nginx:1.9.9 --record

deployment.apps/nginx image updated

# Watch the pods: the 2 old nginx pods are replaced by new ones, one at a time

# kubectl get pod -w

NAME READY STATUS RESTARTS AGE

nginx-89fc8d79d-4z876 1/1 Running 0 41s

nginx-89fc8d79d-jd78f 0/1 ContainerCreating 0 3s

nginx-f89759699-9cx7l 1/1 Running 0 4h53m

# Look at the nginx rs again: now there are two

# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-89fc8d79d 2 2 2 9m6s

nginx-f89759699 0 0 0 6h15m

# Look at the nginx description and analyze the process in detail

# kubectl describe deployment nginx

Name: nginx

Namespace: default

CreationTimestamp: Tue, 24 Nov 2020 09:40:54 +0800

Labels: app=nginx

......

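RollingUpdateStrategy: 25% max unavailable, 25% max surge # Note this line: it controls the pace at which pods move from the old rs to the new one. With 2 replicas: max total pods = 2 + ceil(2*25%) = 2 + ceil(0.5) = 3; min available pods = 2 - floor(2*25%) = 2 - floor(0.5) = 2 (maxSurge rounds up, maxUnavailable rounds down; both default to 25%)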

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 21m deployment-controller Scaled up replica set nginx-89fc8d79d to 1 # start 1 pod of the new version

Normal ScalingReplicaSet 20m deployment-controller Scaled down replica set nginx-f89759699 to 1 # once that is ready, release 1 old pod

Normal ScalingReplicaSet 20m deployment-controller Scaled up replica set nginx-89fc8d79d to 2 # then start another new pod

Normal ScalingReplicaSet 20m deployment-controller Scaled down replica set nginx-f89759699 to 0 # release the last old pod
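The rounding in the RollingUpdateStrategy note can be checked with a tiny calculator. As I understand the deployment controller, a percentage maxSurge rounds up, a percentage maxUnavailable rounds down, and if both end up as 0 it falls back to maxUnavailable=1; `resolve_rollout` is a hypothetical helper that mirrors those rules with integer arithmetic.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the rounding rules described above.
resolve_rollout() {
  local replicas=$1 surge_pct=$2 unavail_pct=$3
  local surge=$(( (replicas * surge_pct + 99) / 100 ))   # ceil(replicas * pct / 100)
  local unavail=$(( replicas * unavail_pct / 100 ))       # floor(replicas * pct / 100)
  # If both resolve to 0 the rollout could never progress, so allow 1 unavailable.
  if [ "$surge" -eq 0 ] && [ "$unavail" -eq 0 ]; then
    unavail=1
  fi
  echo "maxSurge=${surge} maxUnavailable=${unavail} maxTotal=$(( replicas + surge )) minAvailable=$(( replicas - unavail ))"
}

resolve_rollout 2 25 25
```

For 2 replicas at 25%/25% it prints `maxSurge=1 maxUnavailable=0 maxTotal=3 minAvailable=2`, which matches the events above: one new pod comes up before each old pod is removed.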

# Rollback

# Remember the --record flag mentioned above? This is where it plays an important role

# Using nginx again, first check the current nginx version

# curl 10.68.18.121/1

<html>

<head><title>404 Not Found</title></head>

<body bgcolor="white">

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.9.9</center>

</body>

</html>

# Upgrade nginx

# kubectl set image deployments/nginx nginx=nginx:1.19.5 --record

# The upgrade is complete

# curl 10.68.18.121/1

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.19.5</center>

</body>

</html>

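# Suppose we just released a new version of the service and production reports a problem that needs an immediate rollback. Let's see how to do that on K8s

# First check the revision history. Only operations run with `--record` get a detailed record, which is why it should always be added in production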

# kubectl rollout history deployment nginx

deployment.apps/nginx

REVISION CHANGE-CAUSE

1 <none>

2 kubectl set image deployments/nginx nginx=nginx:1.9.9 --record=true

3 kubectl set image deployments/nginx nginx=nginx:1.19.5 --record=true

# Pick the revision to roll back to by the number in front of it. Here we go back to the previous version, revision 2:

# kubectl rollout undo deployment nginx --to-revision=2

deployment.apps/nginx rolled back

# Wait a moment for the pods to finish updating, and the rollback is done. Rolling back on K8s really is this simple:

# curl 10.68.18.121/1

<html>

<head><title>404 Not Found</title></head>

<body bgcolor="white">

<center><h1>404 Not Found</h1></center>

<hr><center>nginx/1.9.9</center>

</body>

</html>

# The newest revision is now 4, and its command shows version 1.9.9. Revision 2, which we rolled back to, is gone from the list because it has become revision 4

# kubectl rollout history deployment nginx

deployment.apps/nginx

REVISION CHANGE-CAUSE

1 <none>

3 kubectl set image deployments/nginx nginx=nginx:1.19.5 --record=true

4 kubectl set image deployments/nginx nginx=nginx:1.9.9 --record=true
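To make the renumbering above concrete, here is a small POSIX shell sketch. `undo_to_revision` is a hypothetical helper, not part of kubectl; it assumes, as the two history listings show, that a rollback re-applies the target revision's Pod template as a new revision numbered one higher than the current maximum, and that the target number then drops out of the list.

```shell
#!/bin/sh
# Hypothetical model of how `kubectl rollout undo --to-revision=N`
# renumbers the revision history (not kubectl code).
# Usage: undo_to_revision TARGET REV...
undo_to_revision() {
  local target=$1 max=0 r out=""
  shift
  # Find the highest existing revision number.
  for r in "$@"; do
    if [ "$r" -gt "$max" ]; then max=$r; fi
  done
  # Keep every revision except the one we rolled back to.
  for r in "$@"; do
    if [ "$r" != "$target" ]; then out="$out $r"; fi
  done
  # The rolled-back template reappears as the newest revision.
  out="$out $((max + 1))"
  echo "${out# }"
}

undo_to_revision 2 1 2 3   # history from the session above: 1, 2, 3
```

With the history from this session it prints `1 3 4`, matching the second `kubectl rollout history` listing.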

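The Deployment is important, so let's review the whole deployment process once more to reinforce it:

[Figure: the deployment process across nodes 10.0.1.201 and 10.0.1.202]

- kubectl sends the deployment request to the API Server

- The API Server stores the Deployment, and the Controller Manager, which watches the API Server, creates the corresponding ReplicaSet and Pods (scaling up)

- The Scheduler schedules the two replica Pods onto 10.0.1.201 and 10.0.1.202

- The kubelet on 10.0.1.201 and on 10.0.1.202 creates and runs the Pod on its own node

- Upgrade the nginx image of the Deployment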

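A few additional notes:

The configuration of these applications and the current state of the services are all stored in ETCD; when you run kubectl get pod or similar commands, the API Server reads this data from ETCD

Calico assigns an IP to each pod, but note that this IP is not fixed: it changes whenever the pod is recreated

Appendix: Node management

Prevent pods from being scheduled onto a node:

kubectl cordon <node-name>

Evict all pods from a node:

kubectl drain <node-name>

This command deletes all Pods on the node except DaemonSet Pods (if DaemonSet Pods run there, add --ignore-daemonsets, otherwise drain refuses to proceed), and the controllers that manage those Pods start replacements on other nodes. It is typically used when the node needs maintenance, and it runs kubectl cordon automatically first. When maintenance is complete and kubelet is running again, run kubectl uncordon <node-name> to make the node schedulable in the Kubernetes cluster again.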
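The drain and uncordon steps above can be wrapped in two small shell functions. This is a sketch: `start_maintenance`, `end_maintenance`, `run` and the `DRY_RUN` variable are hypothetical names introduced here, while the kubectl subcommands and the `--ignore-daemonsets` flag are the real ones. Depending on the workload, drain may need further flags (for example for Pods using emptyDir volumes), so check `kubectl drain --help` on your version.

```shell
#!/bin/sh
# Hypothetical wrapper around the node-maintenance steps described above.
# Set DRY_RUN=1 to print the kubectl commands instead of running them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

start_maintenance() {
  # drain cordons the node first, then evicts its Pods;
  # --ignore-daemonsets is needed when DaemonSet Pods run on the node.
  run kubectl drain "$1" --ignore-daemonsets
}

end_maintenance() {
  # Make the node schedulable again once kubelet is back up.
  run kubectl uncordon "$1"
}

DRY_RUN=1 start_maintenance 10.0.1.203
DRY_RUN=1 end_maintenance 10.0.1.203
```

Run the two functions without `DRY_RUN` on a real cluster, with the actual maintenance work in between.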

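Above we created the Deployment from the command line, but in production we usually write a YAML configuration file first and then create the service with kubectl apply -f xxx.yaml. Let's now create the same Deployment from a YAML file.

Note that YAML, like Python, is strict about indentation: a single mistake anywhere prevents the resource from being created. Here is a handy trick for generating well-formed YAML:

# Look familiar? This is the command we used above to create the Deployment, with `--dry-run -o yaml` appended. --dry-run means the command is not actually executed on K8s, and -o yaml prints the result of the trial run as YAML, so we get the YAML configuration for free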

# kubectl create deployment nginx --image=nginx --dry-run -o yaml

apiVersion: apps/v1 # <--- apiVersion is the version of this configuration format
kind: Deployment # <--- kind is the type of resource to create, here a Deployment
metadata: # <--- metadata is the resource's metadata; name is a required field
  creationTimestamp: null
  labels:
    app: nginx
  name: nginx
spec: # <--- spec is the specification of this Deployment
  replicas: 1 # <--- replicas sets the number of replicas; the default is 1
  selector:
    matchLabels:
      app: nginx
  strategy: {}
  template: # <--- template defines the Pod template, the most important part of the file
    metadata: # <--- Pod metadata; define at least one label. Label keys and values are up to you, but they must match spec.selector
      creationTimestamp: null
      labels:
        app: nginx
    spec: # <--- spec describes the Pod and the properties of each of its containers; name and image are required
      containers:
      - image: nginx
        name: nginx
        resources: {}
status: {}

Let's use this YAML file to create the nginx Deployment. First delete the nginx we created from the command line

# To delete a resource from the command line on K8s, just use delete

# kubectl delete deployment nginx

deployment.apps "nginx" deleted

# The associated ReplicaSet has been cleaned up automatically as well

# kubectl get rs

No resources found in default namespace.

# And so have the related pods

# kubectl get pod

No resources found in default namespace.

Generate the nginx.yaml file

# kubectl create deployment nginx --image=nginx --dry-run -o yaml > nginx.yaml

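Running the command above prints a warning: "--dry-run is deprecated and can be replaced with --dry-run=client." It does not affect the generated YAML, but if it bothers you, replace --dry-run with --dry-run=client as suggested

# Next, vim nginx.yaml and change replicas: 1 to replicas: 2

# Create it. From now on we use apply for every command that creates resources from a YAML file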

# kubectl apply -f nginx.yaml

deployment.apps/nginx created

# List the created resources. A small trick: separate several resource types with commas to view them all with one command

# kubectl get deployment,rs,pod

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx 2/2 2 2 116s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-f89759699 2 2 2 116s

NAME READY STATUS RESTARTS AGE

pod/nginx-f89759699-bzwd2 1/1 Running 0 116s

pod/nginx-f89759699-qlc8q 1/1 Running 0 116s

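# Delete the resources created with kubectl apply -f nginx.yaml

kubectl delete -f nginx.yaml

A summary of the two ways to create resources:

Command-based:

1. Simple, intuitive, and quick to pick up.

2. Good for ad-hoc tests or experiments.

Configuration-file-based:

1. The configuration file describes the What, i.e. the final state the application should reach.

2. The configuration file serves as a template for creating resources, so deployments are repeatable.

3. Deployments can be managed like code.

4. Good for formal, cross-environment, large-scale deployments.

5. It requires familiarity with the configuration file syntax, which takes some effort.

Deployment mini-boss battle (homework)

Create a redis Deployment both from the command line and from a YAML configuration, and review the earlier pod homework as well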

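Level 5: K8s Battle Guide, Part 3 - Health Checks for Service Pods

Hi everyone, I'm 博哥爱运维. In this lesson I'll explain how to health-check our business services on K8s.

Health Check

Let's go a step further and talk about health checking on K8s. Strong self-healing is one of the most important features of this container orchestration engine. By default, self-healing works by automatically restarting containers that fail. Beyond that, Liveness and Readiness probes let us define finer-grained health criteria to achieve:

- Zero-downtime deployments

- Avoiding the rollout of broken service images

- Safer rolling upgrades

Let's get hands-on with the K8s Health Check feature, starting with its default health-check mechanism:

Every container runs a process at startup, specified by CMD or ENTRYPOINT in the Dockerfile. If that process exits with a non-zero status code, the container is considered to have failed, and K8s restarts it according to restartPolicy to heal itself.

Let's try it out by simulating a container failure: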

# First generate a pod YAML configuration file, then modify it accordingly

# kubectl run busybox --image=busybox --dry-run=client -o yaml > testHealthz.yaml

# vim testHealthz.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: busybox
  name: busybox
spec:
  containers:
  - image: busybox
    name: busybox
    resources: {}
    args:
    - /bin/sh
    - -c
    - sleep 10; exit 1 # added startup command: the container fails 10 seconds after starting and exits with status code 1
  dnsPolicy: ClusterFirst
  restartPolicy: OnFailure # changed from the default Always to OnFailure
status: {}

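| Restart policy | Description |
|---|---|
| Always | Whenever the container exits, kubelet restarts it automatically (the default) |
| OnFailure | When the container terminates with a non-zero exit code, kubelet restarts it automatically |
| Never | kubelet never restarts the container, whatever state it ends in |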
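The restart-policy table can be expressed as a small decision function. This is a sketch of the rule only; the name `should_restart` is made up for illustration, and the back-off delay kubelet applies between restarts is ignored.

```shell
#!/bin/sh
# Sketch of the restartPolicy rule: given the policy and the container's
# exit code, does kubelet restart the container? (Back-off not modelled.)
# Usage: should_restart POLICY EXIT_CODE
should_restart() {
  case "$1" in
    Always)    echo yes ;;
    OnFailure) if [ "$2" -ne 0 ]; then echo yes; else echo no; fi ;;
    Never)     echo no ;;
    *)         echo "unknown restartPolicy: $1" >&2; return 1 ;;
  esac
}

should_restart OnFailure 1   # our busybox exits with status 1
```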

Apply the configuration to create the pod

# kubectl apply -f testHealthz.yaml

pod/busybox created

# Watch for a few minutes; the -w flag keeps watching the pod's status changes

# kubectl get pod -w

NAME READY STATUS RESTARTS AGE

busybox 0/1 ContainerCreating 0 4s

busybox 1/1 Running 0 6s

busybox 0/1 Error 0 16s

busybox 1/1 Running 1 22s

busybox 0/1 Error 1 34s

busybox 0/1 CrashLoopBackOff 1 47s

busybox 1/1 Running 2 63s

busybox 0/1 Error 2 73s

busybox 0/1 CrashLoopBackOff 2 86s

busybox 1/1 Running 3 109s

busybox 0/1 Error 3 2m

busybox 0/1 CrashLoopBackOff 3 2m15s

busybox 1/1 Running 4 3m2s

busybox 0/1 Error 4 3m12s

busybox 0/1 CrashLoopBackOff 4 3m23s

busybox 1/1 Running 5 4m52s

busybox 0/1 Error 5 5m2s

busybox 0/1 CrashLoopBackOff 5 5m14s

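Above we can see the test pod was restarted 5 times, but the service never recovers, so it keeps cycling through CrashLoopBackOff (kubelet waiting before the next restart attempt) until an operator investigates the error

Note: kubelet restarts it with an exponential back-off delay (10s, 20s, 40s, and so on), capped at 5 minutes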
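The back-off schedule in the note above can be reproduced with a few lines of shell. This is a sketch under the stated assumptions: the delay starts at 10 s, doubles after each restart and is capped at 300 s. `backoff_delays` is a hypothetical helper, and the real kubelet also resets the back-off once a container has run successfully for a while, which this model ignores.

```shell
#!/bin/sh
# Sketch of the crash-loop back-off schedule: start at 10s, double after
# each restart, cap at 300s (5 minutes). Prints the first N delays.
# Usage: backoff_delays N
backoff_delays() {
  local n=$1 delay=10 out=""
  while [ "$n" -gt 0 ]; do
    out="$out $delay"
    delay=$((delay * 2))
    if [ "$delay" -gt 300 ]; then delay=300; fi
    n=$((n - 1))
  done
  echo "${out# }"
}

backoff_delays 7   # 10 20 40 80 160 300 300
```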

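Here we simulated the service failure on purpose for the test. In real production work, how do we make business services take advantage of this restart-to-recover mechanism? The answer is the `liveness` probe

Liveness

A Liveness probe lets us define custom conditions for deciding whether a container is healthy; if the probe fails, K8s restarts the container. Let's try an example: prepare the following YAML and save it as liveness.yaml: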

apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness
spec:
  restartPolicy: OnFailure
  containers:
  - name: liveness
    image: busybox
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 10 # start probing 10 seconds after the container starts
      periodSeconds: 5 # probe again every 5 seconds

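The startup process first creates the file /tmp/healthy and deletes it 30 seconds later. In our setup, the container is considered healthy if /tmp/healthy exists, and failed otherwise.

The livenessProbe section defines how the Liveness probe is run:

The probe checks whether /tmp/healthy exists using the cat command. If the command succeeds with return value zero, K8s considers this Liveness probe successful; if it returns non-zero, this Liveness probe has failed.

initialDelaySeconds: 10 makes the Liveness probe start 10 s after the container starts. We usually set it according to how long the application needs to start: if an application normally takes 30 seconds to start, initialDelaySeconds should be greater than 30.

periodSeconds: 5 runs the Liveness probe every 5 seconds. If the Liveness probe fails 3 times in a row (the default failureThreshold), K8s kills and restarts the container.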
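Putting initialDelaySeconds, periodSeconds and the failure threshold together, we can estimate when kubelet restarts the container in this example. The sketch below is a rough model, not kubelet code: `liveness_restart_at` is a hypothetical helper, it assumes a probe fails only if it runs strictly after the file is removed, and it ignores probe timeouts and container start-up time.

```shell
#!/bin/sh
# Rough liveness timeline: probes run at INITIAL, then every PERIOD
# seconds; the container is restarted after THRESHOLD consecutive failures.
# A probe counts as failed only if it runs after BROKEN_AT.
# Usage: liveness_restart_at INITIAL PERIOD THRESHOLD BROKEN_AT
liveness_restart_at() {
  local t=$1 period=$2 threshold=$3 broken_at=$4 failures=0
  while :; do
    if [ "$t" -gt "$broken_at" ]; then
      failures=$((failures + 1))
    else
      failures=0
    fi
    if [ "$failures" -ge "$threshold" ]; then
      echo "$t"
      return 0
    fi
    t=$((t + period))
  done
}

liveness_restart_at 10 5 3 30   # values from liveness.yaml; file removed at 30s
```

With the values from liveness.yaml the first failing probe runs at 35 s and the third consecutive failure, which triggers the restart, at 45 s.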

Now create this Pod:

# kubectl apply -f liveness.yaml

pod/liveness created

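From the configuration we know that for the first 30 seconds /tmp/healthy exists, cat returns 0, and the Liveness probe succeeds. During this time the Events section of kubectl describe pod liveness shows normal entries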

# kubectl describe pod liveness

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 53s default-scheduler Successfully assigned default/liveness to 10.0.1.203

Normal Pulling 52s kubelet Pulling image "busybox"

Normal Pulled 43s kubelet Successfully pulled image "busybox"

Normal Created 43s kubelet Created container liveness

Normal Started 42s kubelet Started container liveness

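After 35 seconds, the events show that /tmp/healthy no longer exists and the Liveness probe fails. A few dozen seconds later, after several failed probes, the container is restarted.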

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m53s default-scheduler Successfully assigned default/liveness to 10.0.1.203

Normal Pulling 73s (x3 over 3m52s) kubelet Pulling image "busybox"

Normal Pulled 62s (x3 over 3m43s) kubelet Successfully pulled image "busybox"

Normal Created 62s (x3 over 3m43s) kubelet Created container liveness

Normal Started 62s (x3 over 3m42s) kubelet Started container liveness

Warning Unhealthy 18s (x9 over 3m8s) kubelet Liveness probe failed: cat: can't open '/tmp/healthy': No such file or directory

Normal Killing 18s (x3 over 2m58s) kubelet Container liveness failed liveness probe, will be restarted

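Besides Liveness probes, the Kubernetes Health Check mechanism also includes Readiness probes.

Readiness

A Readiness probe tells K8s when a pod can be added to a Service's load-balancing pool to serve traffic. This matters a lot when releasing a new version in production: if the new version has a bug, its Readiness probe fails, so no traffic is routed into the pod and the failure stays contained. In production I recommend always adding a Readiness probe; a Liveness probe can even be left out, because sometimes we want to keep the failed container as-is so we can read its logs, locate the problem, and hand it to the developers to fix.

A Readiness probe is configured with exactly the same syntax as a Liveness probe. Here is an example: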

apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness
spec:
  restartPolicy: OnFailure
  containers:
  - name: liveness
    image: busybox
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 600
    readinessProbe: # just replace livenessProbe with readinessProbe; everything else stays the same
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 10 # start probing 10 seconds after the container starts
      periodSeconds: 5 # probe again every 5 seconds

Save the configuration above as readiness.yaml and apply it to create the pod:

# kubectl apply -f readiness.yaml

pod/liveness created

# Right after creation the file has not been deleted yet, so the probe passes

# kubectl get pod

NAME READY STATUS RESTARTS AGE

liveness 1/1 Running 0 50s

# About 35 seconds later (the file is deleted at 30 seconds), the READY status changes and K8s cuts the traffic from the Service to the pod

# kubectl describe pod liveness

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 56s default-scheduler Successfully assigned default/liveness to 10.0.1.203

Normal Pulling 56s kubelet Pulling image "busybox"

Normal Pulled 40s kubelet Successfully pulled image "busybox"

Normal Created 40s kubelet Created container liveness

Normal Started 40s kubelet Started container liveness

Warning Unhealthy 5s (x2 over 10s) kubelet Readiness probe failed: cat: can't open '/tmp/healthy': No such file or directory

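# The pod is now cut off from traffic, so even though the service is failing, the outside world does not notice, and operators can troubleshoot in the meantime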

# kubectl get pod

NAME READY STATUS RESTARTS AGE

liveness 0/1 Running 0 61s

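Let's compare Liveness and Readiness probes:

Liveness and Readiness probes are two Health Check mechanisms. If no probes are configured, Kubernetes falls back to the same default behavior for both: it only watches the container's main process, treating the container as alive and ready while that process runs, and applying restartPolicy when it exits.

Both probes are configured the same way and support the same parameters. The difference is what happens when a probe fails: a failed Liveness probe restarts the container, while a failed Readiness probe marks the container as unavailable so it stops receiving requests forwarded by the Service.

Liveness and Readiness probes run independently with no dependency on each other, so you can use either one alone or both together. Use the Liveness probe to decide whether a container needs a restart to heal itself, and the Readiness probe to decide whether a container is ready to serve.

Using Health Check for rolling updates in production

For operators, shipping new project code and making sure it runs stably is a critical and highly repetitive task. Traditionally we push code to each server with batch-management tools such as SaltStack or Ansible. On K8s this update process is much simpler. In a later advanced chapter I'll cover CI/CD automation, where a developer pushing code to the repository triggers the CI/CD pipeline with essentially no operator involvement. With such a highly automated process, how do we operators make sure services go live safely? The Readiness probe plays a key role here. We touched on it above, but let's walk through a real example to reinforce it:

Let's prepare a YAML file for a Deployment resource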

# cat myapp-v1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mytest
spec:
  replicas: 10 # run 10 pods
  selector:
    matchLabels:
      app: mytest
  template:
    metadata:
      labels:
        app: mytest
    spec:
      containers:
      - name: mytest
        image: busybox
        args:
        - /bin/sh
        - -c
        - sleep 10; touch /tmp/healthy; sleep 30000 # creates /tmp/healthy, which the health check looks for
        readinessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 10
          periodSeconds: 5

Apply this configuration

# kubectl apply -f myapp-v1.yaml --record

deployment.apps/mytest created

# After a short wait, all pods are running normally

# kubectl get pod

NAME READY STATUS RESTARTS AGE

mytest-d9f48585b-2lmh2 1/1 Running 0 3m22s

mytest-d9f48585b-5lh9l 1/1 Running 0 3m22s

mytest-d9f48585b-cwb8l 1/1 Running 0 3m22s

mytest-d9f48585b-f6tzc 1/1 Running 0 3m22s

mytest-d9f48585b-hb665 1/1 Running 0 3m22s

mytest-d9f48585b-hmqrw 1/1 Running 0 3m22s

mytest-d9f48585b-jm8bm 1/1 Running 0 3m22s

mytest-d9f48585b-kxm2m 1/1 Running 0 3m22s

mytest-d9f48585b-lqpr9 1/1 Running 0 3m22s

mytest-d9f48585b-pk75z 1/1 Running 0 3m22s

Next, let's update this service while deliberately simulating a broken release, and watch what happens. Prepare a new configuration, myapp-v2.yaml

# cat myapp-v2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mytest
spec:
  strategy:
    rollingUpdate:
      maxSurge: 35% # max total replicas during the update (with 10 replicas): 10 + ceil(10 * 35%) = 10 + 4 = 14
      maxUnavailable: 35% # min available replicas (both values default to 25%): 10 - floor(10 * 35%) = 10 - 3 = 7
  replicas: 10
  selector:
    matchLabels:
      app: mytest
  template:
    metadata:
      labels:
        app: mytest
    spec:
      containers:
      - name: mytest
        image: busybox
        args:
        - /bin/sh
        - -c
        - sleep 30000 # note that /tmp/healthy is never created here, so the probe below is bound to fail
        readinessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 10
          periodSeconds: 5

Since the v2 version we are updating to never creates /tmp/healthy, it obviously cannot pass the Readiness probe. Details:

# kubectl apply -f myapp-v2.yaml --record

deployment.apps/mytest configured

# kubectl get deployment mytest

NAME READY UP-TO-DATE AVAILABLE AGE

mytest 7/10 7 7 4m58s

# READY: only 7 pods are currently ready

# UP-TO-DATE: the number of replicas already updated, i.e. 7 new replicas

# AVAILABLE: the number of replicas currently in the READY state

# kubectl get pod

NAME READY STATUS RESTARTS AGE

mytest-7657789bc7-5hfkc 0/1 Running 0 3m2s

mytest-7657789bc7-6c5lg 0/1 Running 0 3m2s

mytest-7657789bc7-c96t6 0/1 Running 0 3m2s

mytest-7657789bc7-nbz2q 0/1 Running 0 3m2s

mytest-7657789bc7-pt86c 0/1 Running 0 3m2s

mytest-7657789bc7-q57gb 0/1 Running 0 3m2s

mytest-7657789bc7-x77cg 0/1 Running 0 3m2s

mytest-d9f48585b-2bnph 1/1 Running 0 5m4s

mytest-d9f48585b-965t4 1/1 Running 0 5m4s

mytest-d9f48585b-cvq7l 1/1 Running 0 5m4s

mytest-d9f48585b-hvpnq 1/1 Running 0 5m4s

mytest-d9f48585b-k89zs 1/1 Running 0 5m4s

mytest-d9f48585b-wkb4b 1/1 Running 0 5m4s

mytest-d9f48585b-wrkzf 1/1 Running 0 5m4s

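# As shown above, the Readiness probe never passes, so all new-version pods stay Not Ready, which guarantees that the broken code is never reached by outside requests

# kubectl describe deployment mytest

# Below are the key parts of the output needed here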

......

Replicas: 10 desired | 7 updated | 14 total | 7 available | 7 unavailable

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 5m55s deployment-controller Scaled up replica set mytest-d9f48585b to 10

Normal ScalingReplicaSet 3m53s deployment-controller Scaled up replica set mytest-7657789bc7 to 4 # start 4 new-version pods

Normal ScalingReplicaSet 3m53s deployment-controller Scaled down replica set mytest-d9f48585b to 7 # scale the old version down to 7 pods

Normal ScalingReplicaSet 3m53s deployment-controller Scaled up replica set mytest-7657789bc7 to 7 # add 3 more, bringing the new version to 7 pods

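Putting this together, we have realistically simulated a broken code release on K8s. Fortunately, the Readiness probe shielded the broken replicas so outside traffic never reached them, while most of the old-version pods were kept, so the business was not affected by this failed update.

Next, let's look at how rolling updates work: why did the new version create 7 pods while only 3 old-version pods were destroyed?

The reason is this part of the configuration:

If it is not set explicitly, both values default to 25%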

strategy:
  rollingUpdate:
    maxSurge: 35%
    maxUnavailable: 35%

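A rolling update controls how pod replicas are replaced through the parameters maxSurge and maxUnavailable.

maxSurge

This parameter sets how far the total number of pod replicas may exceed the desired replica count during a rolling update. maxSurge can be an absolute integer (e.g. 3) or a percentage, which is rounded up. maxSurge defaults to 25%

In the example above the desired replica count is 10, so the upper limit on the total number of replicas during the update is:

10 + ceil(10 * 35%) = 10 + ceil(3.5) = 10 + 4 = 14

Let's look at the description of the updated deployment:

Replicas: 10 desired | 7 updated | 14 total | 7 available | 7 unavailable

7 available old-version pods + 7 unavailable new-version pods = 14 total

maxUnavailable

This parameter sets the maximum number of pod replicas that may be unavailable during a rolling update. Like maxSurge, maxUnavailable can be an absolute integer (e.g. 3) or a percentage, which is rounded down. maxUnavailable defaults to 25%.

In the example above the desired replica count is 10, so the number of pods that must stay available is:

10 - floor(10 * 35%) = 10 - floor(3.5) = 10 - 3 = 7

That is why the description above shows 7 available pods

The larger maxSurge is, the more new replicas are created up front; the larger maxUnavailable is, the more old replicas are destroyed up front.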
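The two rounding rules can be checked with integer shell arithmetic. `rollout_bounds` is a hypothetical helper that only accepts percentages. One detail goes beyond the text above and is stated to the best of my knowledge of the Deployment controller: if both values resolve to 0, Kubernetes falls back to maxUnavailable = 1 so the rollout can still make progress; the sketch mirrors that.

```shell
#!/bin/sh
# Sketch: resolve percentage maxSurge/maxUnavailable into pod counts.
# maxSurge rounds up, maxUnavailable rounds down (integer arithmetic).
# Usage: rollout_bounds REPLICAS SURGE_PERCENT UNAVAILABLE_PERCENT
rollout_bounds() {
  local replicas=$1 surge unavail
  surge=$(( (replicas * $2 + 99) / 100 ))   # ceil(replicas * pct / 100)
  unavail=$(( replicas * $3 / 100 ))        # floor(replicas * pct / 100)
  # If both resolve to 0 the rollout could never progress,
  # so maxUnavailable falls back to 1.
  if [ "$surge" -eq 0 ] && [ "$unavail" -eq 0 ]; then unavail=1; fi
  echo "max_total=$((replicas + surge)) min_available=$((replicas - unavail))"
}

rollout_bounds 10 35 35   # myapp-v2.yaml
rollout_bounds 2 25 25    # defaults with the 2 nginx replicas
```

The first call prints `max_total=14 min_available=7`, the numbers derived above; the second prints `max_total=3 min_available=2` for the earlier nginx update.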

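Under ideal conditions, the rolling update for this release would proceed as follows:

- First create 4 new-version pods, bringing the total number of replicas to 14

- Then destroy 3 old-version pods, reducing the available replicas to 7

- Once these 3 old-version pods are destroyed, create 3 more new-version pods, keeping the total at 14

- As new-version pods pass the Readiness probe, the number of available replicas rises above 7

- More old-version pods can then be destroyed, bringing the available count back to 7

- As old-version pods are destroyed, the total drops below 14, so more new-version pods can be created

- This create-and-destroy cycle continues until every old-version pod has been replaced by a new-version pod and the rolling update is complete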
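To see why the rollout stalls at 7 new and 7 old pods, here is a simplified shell model of the loop above. It is a sketch, not the real deployment controller: `simulate_rollout` is hypothetical, old pods are assumed to be always Ready, new pods become Ready immediately when NEW_READY=1 and never when it is 0, and each round scales the new ReplicaSet up before scaling the old one down. maxSurge=4 and maxUnavailable=3 are the counts that 35% resolves to for 10 replicas.

```shell
#!/bin/sh
# Simplified rolling-update model (not the real controller).
# Usage: simulate_rollout REPLICAS MAX_SURGE MAX_UNAVAILABLE NEW_READY
simulate_rollout() {
  local replicas=$1 new_ready=$4 old=$1 new=0 new_avail=0 round=0
  local max_total=$(($1 + $2)) min_avail=$(($1 - $3)) up down
  while [ "$round" -lt 20 ]; do
    round=$((round + 1))
    # Scale up: stay within max_total and never exceed the desired count.
    up=$((max_total - old - new))
    if [ "$up" -gt $((replicas - new)) ]; then up=$((replicas - new)); fi
    if [ "$up" -gt 0 ]; then
      new=$((new + up))
      echo "scaled up new rs to $new"
    fi
    # Readiness probe outcome for the new pods.
    if [ "$new_ready" -eq 1 ]; then new_avail=$new; fi
    # Scale down: keep at least min_avail available; not-ready new pods
    # still count towards the total but not towards availability.
    down=$((old + new - min_avail - (new - new_avail)))
    if [ "$down" -gt "$old" ]; then down=$old; fi
    if [ "$down" -gt 0 ]; then
      old=$((old - down))
      echo "scaled down old rs to $old"
    fi
    if [ "$old" -eq 0 ] && [ "$new" -eq "$replicas" ]; then break; fi
  done
  echo "old=$old new=$new total=$((old + new)) available=$((old + new_avail))"
}

simulate_rollout 10 4 3 0   # myapp-v2: new pods never pass readiness
```

With NEW_READY=0 it prints the same three scaling steps as the Events section (new to 4, old to 7, new to 7) and then stays at `old=7 new=7 total=14 available=7`; with NEW_READY=1 it runs through to `old=0 new=10`.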

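In our case, however, the process got stuck at step 4: the new-version pods cannot pass the Readiness probe. The events at the end of the description above show exactly this: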

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 5m55s deployment-controller Scaled up replica set mytest-d9f48585b to 10

Normal ScalingReplicaSet 3m53s deployment-controller Scaled up replica set mytest-7657789bc7 to 4 # start 4 new-version pods

Normal ScalingReplicaSet 3m53s deployment-controller Scaled down replica set mytest-d9f48585b to 7 # scale the old version down to 7 pods

Normal ScalingReplicaSet 3m53s deployment-controller Scaled up replica set mytest-7657789bc7 to 7 # add 3 more, bringing the new version to 7 pods

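Following the normal production procedure, once enough error information about the new version has been collected and handed to the developers, we can roll back to the previous working version with kubectl rollout undo:

# First find the number in front of the revision to roll back to; here it is 1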

# kubectl rollout history deployment mytest

deployment.apps/mytest

REVISION CHANGE-CAUSE

1 kubectl apply --filename=myapp-v1.yaml --record=true

2 kubectl apply --filename=myapp-v2.yaml --record=true

# kubectl rollout undo deployment mytest --to-revision=1

deployment.apps/mytest rolled back

# kubectl get deployment mytest

NAME READY UP-TO-DATE AVAILABLE AGE

mytest 10/10 10 10 96m

# kubectl get pod

NAME READY STATUS RESTARTS AGE

mytest-d9f48585b-2bnph 1/1 Running 0 96m

mytest-d9f48585b-8nvhd 1/1 Running 0 2m13s

mytest-d9f48585b-965t4 1/1 Running 0 96m

mytest-d9f48585b-cvq7l 1/1 Running 0 96m

mytest-d9f48585b-hvpnq 1/1 Running 0 96m

mytest-d9f48585b-k89zs 1/1 Running 0 96m

mytest-d9f48585b-qs5c6 1/1 Running 0 2m13s

mytest-d9f48585b-wkb4b 1/1 Running 0 96m

mytest-d9f48585b-wprlz 1/1 Running 0 2m13s

mytest-d9f48585b-wrkzf 1/1 Running 0 96m

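OK, we have now realistically simulated a broken release and its rollback. Throughout the whole process, even though something went wrong, our business was not affected at all. That is the charm of K8s.

Pod mini-boss battle (homework)

# Run through the whole update-and-rollback example above yourself, watching how the pods change, to reinforce your understanding

Level 5: k8s Architect Course Battle Guide, Part 4 - Service

In this lesson I'll explain how to use a Service on K8s to load-balance traffic across internal service pods.

Service, Endpoint

K8s is an operator's savior. Why? Because its mechanisms keep the services you need running in their desired state. Take the nginx service above: we cannot know when the node its pod runs on will go down, and as mentioned in the pod lesson, a pod's IP changes every time the pod is recreated. So we should not expect K8s pods to be robust; instead we plan for the worst and assume that containers in service pods will fail because of code bugs, unstable nodes, and so on. If we use a Deployment, its controller dynamically creates new pods on available nodes and removes the old ones to keep the application as a whole robust. At the traffic entry point, a Service with a stable IP acts as an abstract internal load balancer that provides access to the pods. In effect, K8s becomes an operations robot that handles service pod failures 24/7.

Create a Service that provides a fixed IP for round-robin access to the 2 nginx pods created above (NodePort)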

# Create the nginx deployment

# kubectl apply -f nginx.yaml

# Create a service (svc for short) for this nginx deployment

# You can also create it from generated YAML: kubectl expose deployment nginx --port=80 --target-port=80 --dry-run=client -o yaml

# You could first save that YAML as svc.yaml for later use; here we simply create it from the command line

# kubectl expose deployment nginx --port=80 --target-port=80

service/nginx exposed

# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.68.0.1 <none> 443/TCP 4d23h

nginx ClusterIP 10.68.18.121 <none> 80/TCP 5s

# Check the automatically created endpoint

# kubectl get endpoints nginx

NAME ENDPOINTS AGE

nginx 172.20.139.72:80,172.20.217.72:80 27s

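# Now let's test the Service's load balancing. First exec into each pod and change the nginx page to the pod's own hostname (the pod names in this transcript come from several runs, so use the names from your own kubectl get pod output)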

# kubectl exec -it nginx-6799fc88d8-2kgn8 -- bash

root@nginx-f89759699-bzwd2:/# echo nginx-6799fc88d8-2kgn8 > /usr/share/nginx/html/index.html

root@nginx-f89759699-bzwd2:/# exit

# kubectl exec -it nginx-6799fc88d8-gn7r7 -- bash

root@nginx-f89759699-qlc8q:/# echo nginx-6799fc88d8-gn7r7 > /usr/share/nginx/html/index.html

root@nginx-f89759699-qlc8q:/# exit

# kubectl exec -it nginx-6799fc88d8-npm5g -- bash

root@nginx-f89759699-qlc8q:/# echo nginx-6799fc88d8-npm5g > /usr/share/nginx/html/index.html

root@nginx-f89759699-qlc8q:/# exit

# curl 10.68.18.121

nginx-f89759699-bzwd2

# curl 10.68.18.121

nginx-f89759699-qlc8q

# Change the svc type to provide external access

# kubectl patch svc nginx -p '{"spec":{"type":"NodePort"}}'

service/nginx patched

# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.68.0.1 <none> 443/TCP 3d21h

nginx NodePort 10.68.86.85 <none> 80:33184/TCP 30m

# Check whether the pods are spread across different nodes

# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-f89759699-26fzd 1/1 Running 0 45m 172.20.0.16 10.0.1.202 <none> <none>

nginx-f89759699-9s4dw 1/1 Running 0 43m 172.20.1.14 10.0.1.201 <none> <none>

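# node + port

# node is the IP of the pod's node, as shown by kubectl get pod -o wide

# port is the service's external port as shown by kubectl get svc (the requests below use 20651; the svc listing above comes from a different run, so use the port from your own output)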

[root@node-2 ~]# curl 10.0.1.201:20651

nginx-f89759699-bzwd2

[root@node-2 ~]# curl 10.0.1.201:20651

nginx-f89759699-qlc8q

Let's also analyze this svc's YAML configuration

cat svc.yaml

apiVersion: v1 # <<<<<< v1 is the apiVersion of a Service
kind: Service # <<<<<< the resource type is Service
metadata:
  creationTimestamp: null
  labels:
    app: nginx
  name: nginx # <<<<<< the Service is named nginx
spec:
  ports:
  - port: 80 # <<<<<< map port 80 of the Service to port 80 of the Pods, over TCP
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx # <<<<<< the selector picks Pods labeled app: nginx as the Service's backends
status:
  loadBalancer: {}
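The selector matching itself is simple: a pod is selected when it carries every key=value pair in the selector, and extra labels (such as the pod-template-hash label a Deployment adds) do not matter. The sketch below models only equality-based selectors, with labels passed as comma-separated key=value strings; `selector_matches` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch of equality-based label selection: succeed (exit 0) when LABELS
# contains every key=value pair listed in SELECTOR.
# Usage: selector_matches SELECTOR LABELS
selector_matches() {
  local selector=$1 labels=",$2," req
  local IFS=','
  for req in $selector; do
    case "$labels" in
      *",$req,"*) ;;        # this requirement is satisfied
      *) return 1 ;;        # a required label is missing or differs
    esac
  done
  return 0
}

selector_matches app=nginx "app=nginx,pod-template-hash=f89759699" && echo selected
```

A pod labeled only `run=nginx`, for example, would not be selected by `app=nginx`, and the Service would end up with no endpoints for it.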

Let's look at the description of this nginx svc

# kubectl describe svc nginx

Name: nginx

Namespace: default

Labels: app=nginx

Annotations: <none>

Selector: app=nginx

Type: NodePort

IP: 10.68.18.121

Port: <unset> 80/TCP

TargetPort: 80/TCP

NodePort: <unset> 20651/TCP

Endpoints: 172.20.139.72:80,172.20.217.72:80

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

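The Endpoints field lists the IPs and ports of the 2 pods. The pod IPs are assigned by the network plugin (Calico) when the pods are created, but where is the Service's cluster IP configured, and how is the cluster IP mapped to the pod IPs?

# First look at the kube-proxy configuration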

# cat /etc/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/opt/kube/bin/kube-proxy \

--bind-address=10.0.1.202 \

--cluster-cidr=172.20.0.0/16 \

--hostname-override=10.0.1.202 \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \

--logtostderr=true \

--proxy-mode=ipvs #<------- when we first deployed kube-proxy we set its forwarding mode to ipvs, because the default iptables mode performs poorly when there are many Services

Restart=always

RestartSec=5

LimitNOFILE=65536

# Look at the local network interfaces: there is a virtual interface for ipvs

# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:20:b8:39 brd ff:ff:ff:ff:ff:ff

inet 10.0.1.202/24 brd 10.0.1.255 scope global noprefixroute ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29fe:fe20:b839/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:91:ac:ce:13 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: dummy0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 22:50:98:a6:f9:e4 brd ff:ff:ff:ff:ff:ff

5: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

link/ether 96:6b:f0:25:1a:26 brd ff:ff:ff:ff:ff:ff

inet 10.68.0.2/32 brd 10.68.0.2 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.68.0.1/32 brd 10.68.0.1 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.68.120.201/32 brd 10.68.120.201 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.68.50.42/32 brd 10.68.50.42 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.68.18.121/32 brd 10.68.18.121 scope global kube-ipvs0 # <-------- the SVC IP is configured here

valid_lft forever preferred_lft forever

6: caliaeb0378f7a4@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::ecee:eefe:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

7: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 172.20.247.0/32 brd 172.20.247.0 scope global tunl0

valid_lft forever preferred_lft forever

# Look at the LVS virtual server list

# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.17.0.1:20651 rr

-> 172.20.139.72:80 Masq 1 0 0

-> 172.20.217.72:80 Masq 1 0 0

TCP 172.20.247.0:20651 rr

-> 172.20.139.72:80 Masq 1 0 0

-> 172.20.217.72:80 Masq 1 0 0

TCP 10.0.1.202:20651 rr

-> 172.20.139.72:80 Masq 1 0 0

-> 172.20.217.72:80 Masq 1 0 0

TCP 10.68.0.1:443 rr

-> 10.0.1.201:6443 Masq 1 0 0

-> 10.0.1.202:6443 Masq 1 3 0

TCP 10.68.0.2:53 rr

-> 172.20.247.2:53 Masq 1 0 0

TCP 10.68.0.2:9153 rr

-> 172.20.247.2:9153 Masq 1 0 0

TCP 10.68.18.121:80 rr #<----------- here is how the SVC forwards to the Pods

-> 172.20.139.72:80 Masq 1 0 0

-> 172.20.217.72:80 Masq 1 0 0

TCP 10.68.50.42:443 rr

-> 172.20.217.71:4443 Masq 1 0 0

TCP 10.68.120.201:80 rr

-> 10.0.1.201:80 Masq 1 0 0

-> 10.0.1.202:80 Masq 1 0 0

TCP 10.68.120.201:443 rr

-> 10.0.1.201:443 Masq 1 0 0

-> 10.0.1.202:443 Masq 1 0 0

TCP 10.68.120.201:10254 rr

-> 10.0.1.201:10254 Masq 1 0 0

-> 10.0.1.202:10254 Masq 1 0 0

TCP 127.0.0.1:20651 rr

-> 172.20.139.72:80 Masq 1 0 0

-> 172.20.217.72:80 Masq 1 0 0

UDP 10.68.0.2:53 rr

-> 172.20.247.2:53 Masq 1 0 0
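When a cluster has many Services, it helps to pull out just the real servers behind one virtual server. `ipvs_backends` below is a hypothetical awk-based helper, not an ipvsadm option; it assumes the `ipvsadm -ln` layout shown above, where each virtual server line starts with TCP or UDP and its real servers follow on lines starting with `->`. If the same address:port exists for both TCP and UDP, the backends of both are printed.

```shell
#!/bin/sh
# Hypothetical helper: print the real servers behind one IPVS virtual
# server, reading `ipvsadm -ln` output on stdin.
# Usage: ipvsadm -ln | ipvs_backends 10.68.18.121:80
ipvs_backends() {
  awk -v vip="$1" '
    $1 == "TCP" || $1 == "UDP" { in_vs = ($2 == vip); next }  # new virtual server
    in_vs && $1 == "->"        { print $2 }                    # its real servers
  '
}

# Demo on an excerpt of the listing above.
ipvs_backends 10.68.18.121:80 <<'EOF'
TCP 10.68.18.121:80 rr
  -> 172.20.139.72:80 Masq 1 0 0
  -> 172.20.217.72:80 Masq 1 0 0
EOF
```

For the nginx Service this prints the same two pod addresses that `kubectl get endpoints nginx` reported.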

Besides using the cluster IP directly or the NodePort mode mentioned above, we can also reach a Service through K8s DNS

# CoreDNS, which we installed earlier, provides DNS inside the K8s cluster

# kubectl -n kube-system get deployment,pod|grep dns

deployment.apps/coredns 1/1 1 1 5d2h

pod/coredns-d9b6857b5-tt7j2 1/1 Running 1 27h

# CoreDNS is a DNS server: whenever a new Service is created, CoreDNS adds a DNS record for it, and we can then reach the corresponding pods via serviceName.namespaceName. Let's demonstrate:

# kubectl run -it --rm busybox --image=busybox -- sh # --rm deletes this pod automatically once we exit, so it works as a temporary pod

If you don't see a command prompt, try pressing enter.

/ # ping nginx.default

PING nginx.default (10.68.18.121): 56 data bytes

64 bytes from 10.68.18.121: seq=0 ttl=64 time=0.096 ms

64 bytes from 10.68.18.121: seq=1 ttl=64 time=0.067 ms

64 bytes from 10.68.18.121: seq=2 ttl=64 time=0.065 ms

^C

--- nginx.default ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.065/0.076/0.096 ms

/ # wget nginx.default

Connecting to nginx.default (10.68.18.121:80)

saving to 'index.html'

index.html 100% |********************************************************************| 22 0:00:00 ETA

'index.html' saved

/ # cat index.html

nginx-f89759699-bzwd2
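The short name nginx.default resolves because, with the default ClusterFirst dnsPolicy, the pod's /etc/resolv.conf search list includes svc.<cluster domain>, so the resolver expands it to the full Service name. The sketch below just builds those names; it assumes the common default cluster domain cluster.local (yours may differ, so check the kubelet's clusterDomain setting), and `svc_fqdn` and `search_domains` are hypothetical helpers.

```shell
#!/bin/sh
# Sketch: DNS names for a Service, assuming cluster domain cluster.local
# unless a third argument overrides it.
# Usage: svc_fqdn SERVICE NAMESPACE [CLUSTER_DOMAIN]
svc_fqdn() {
  echo "$1.$2.svc.${3:-cluster.local}"
}

# Search list a pod in NAMESPACE typically gets with dnsPolicy ClusterFirst.
# Usage: search_domains NAMESPACE [CLUSTER_DOMAIN]
search_domains() {
  local d=${2:-cluster.local}
  echo "$1.svc.$d svc.$d $d"
}

svc_fqdn nginx default     # nginx.default.svc.cluster.local
search_domains default     # "nginx.default" + "svc.cluster.local" gives the FQDN
```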

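Service production tip: use a svc to access services outside K8s

As mentioned above, creating a Service automatically creates the matching Endpoints. The key is the selector app: nginx, which uses labels to pick the group of pods carrying that label. If a svc is created without a selector, the system does not create the Endpoints automatically. Can we create the Endpoints by hand? Yes. In production we can create a Service without a selector and then create an Endpoints object with the same name to point at a service outside the K8s cluster. What does this buy us operators? We can reuse the ingress on K8s (covered later; for now think of it as an nginx proxy) to reach services outside the cluster, and skip the trouble of setting up our own Nginx proxy in front of them

Let's try it

Pick node-2 and run a simple web server with Python

[root@node-2 mnt]# python -m SimpleHTTPServer 9999

Serving HTTP on 0.0.0.0 port 9999 ...

(SimpleHTTPServer is the Python 2 module; on Python 3 the equivalent is python3 -m http.server 9999)

Then, using the method learned earlier, generate YAML for the svc and the endpoint, modify it as follows, and save it as mysvc.yaml

Note that the Service and the Endpoints must have the same name

# Note that I put the YAML for both resources in one file. We often do this in production to manage all of a service's resources together; separate resources are divided by "---"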

apiVersion: v1
kind: Service
metadata:
  name: mysvc
  namespace: default
spec:
  type: ClusterIP
  ports:
  - port: 80
    protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  name: mysvc
  namespace: default
subsets:
- addresses:
  - ip: 10.0.1.202
    nodeName: 10.0.1.202
  ports:
  - port: 9999
    protocol: TCP

Create and test it

# kubectl apply -f mysvc.yaml

service/mysvc created

endpoints/mysvc created

# kubectl get svc,endpoints |grep mysvc

service/mysvc ClusterIP 10.68.71.166 <none> 80/TCP 14s

endpoints/mysvc 10.0.1.202:9999 14s

# curl 10.68.71.166

mysvc

# Back on node-2, the access log line for the request we just made has been printed

10.0.1.201 - - [25/Nov/2020 14:42:45] "GET / HTTP/1.1" 200 -

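How can an external network reach a Service?

We actually demonstrated this above: change the Service type to NodePort, and the Service can be reached via a node's IP plus the port. Let's analyze how this works to reinforce it

# Look at the YAML of the nginx service we created earlier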

# kubectl get svc nginx -o yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2020-11-25T03:55:05Z"
  labels:
    app: nginx
  managedFields: # newer K8s versions print all this field-management metadata for running resources; we can ignore it, and we leave it out when creating resources ourselves
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:labels:
          .: {}
          f:app: {}
      f:spec:
        f:externalTrafficPolicy: {}
        f:ports:
          .: {}
          k:{"port":80,"protocol":"TCP"}:
            .: {}
            f:port: {}
            f:protocol: {}
            f:targetPort: {}
        f:selector:
          .: {}
          f:app: {}
        f:sessionAffinity: {}
        f:type: {}
    manager: kubectl
    operation: Update
    time: "2020-11-25T04:00:28Z"
  name: nginx
  namespace: default
  resourceVersion: "591029"
  selfLink: /api/v1/namespaces/default/services/nginx
  uid: 84fea557-e19d-486d-b879-13743c603091
spec:
  clusterIP: 10.68.18.121
  externalTrafficPolicy: Cluster
  ports:
  - nodePort: 20651 # look here: a nodePort was assigned (20651); since we did not specify one at creation time, it was picked at random
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}

# Look at the apiserver configuration

# cat /etc/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

ExecStart=/opt/kube/bin/kube-apiserver \

--advertise-address=10.0.1.201 \

--allow-privileged=true \

--anonymous-auth=false \

--authorization-mode=Node,RBAC \

--token-auth-file=/etc/kubernetes/ssl/basic-auth.csv \

--bind-address=10.0.1.201 \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--endpoint-reconciler-type=lease \

--etcd-cafile=/etc/kubernetes/ssl/ca.pem \

--etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem \

--etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem \

--etcd-servers=https://10.0.1.201:2379,https://10.0.1.202:2379,https://10.0.1.203:2379 \

--kubelet-certificate-authority=/etc/kubernetes/ssl/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/ssl/myk8s.pem \

--kubelet-client-key=/etc/kubernetes/ssl/myk8s-key.pem \

--kubelet-https=true \

--service-account-key-file=/etc/kubernetes/ssl/ca.pem \

--service-cluster-ip-range=10.68.0.0/16 \

--service-node-port-range=20000-40000 \ # this is the range NodePort ports are randomly picked from; we set it at deployment time

--tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \

--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \

--requestheader-allowed-names= \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--proxy-client-cert-file=/etc/kubernetes/ssl/aggregator-proxy.pem \

--proxy-client-key-file=/etc/kubernetes/ssl/aggregator-proxy-key.pem \

--enable-aggregator-routing=true \

--v=2

Restart=always

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

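# A NodePort opens the same port on every node of the K8s cluster, so the Service can be reached conveniently via any node's IP plus the port. In production, however, NodePort is not recommended for routine use, because it makes port management on the nodes messy. Once you use ingress, which is covered next, you won't want NodePort anymore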

[root@node-1 ~]# ipvsadm -ln|grep -C6 20651

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

......

TCP 10.0.1.201:20651 rr # here

-> 172.20.139.72:80 Masq 1 0 0

-> 172.20.217.72:80 Masq 1 0 0

[root@node-2 mnt]# ipvsadm -ln|grep -C6 20651

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

......

TCP 10.0.1.202:20651 rr # here

-> 172.20.139.72:80 Masq 1 0 0

-> 172.20.217.72:80 Masq 1 0 0

Tuning Services in production

# First scale nginx down to 1 pod so it is easier to observe

# kubectl scale deployment nginx --replicas=1

deployment.apps/nginx scaled

# Check where this nginx pod runs; -o wide shows more detail. The pod is running on node 203

# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-f89759699-qlc8q 1/1 Running 0 3h27m 172.20.139.72 10.0.1.203 <none> <none>

# First access it directly via the IP of the node the pod runs on

[root@node-1 ~]# curl 10.0.1.203:20651

nginx-f89759699-qlc8q

# The log shows the request's source IP is 203, not node-1's IP 10.0.1.201

# Note: kubectl logs --tail=1 shows only the last line of this pod's log

[root@node-1 ~]# kubectl logs --tail=1 nginx-f89759699-qlc8q

10.0.1.203 - - [25/Nov/2020:07:22:54 +0000] "GET / HTTP/1.1" 200 22 "-" "curl/7.29.0" "-"

# Now access it via 201

[root@node-1 ~]# curl 10.0.1.201:20651

nginx-f89759699-qlc8q

# This time the source IP shown is not a node IP at all

[root@node-1 ~]# kubectl logs --tail=1 nginx-f89759699-qlc8q

172.20.84.128 - - [25/Nov/2020:07:23:18 +0000] "GET / HTTP/1.1" 200 22 "-" "curl/7.29.0" "-"

# This address belongs to a virtual network interface (tunl0) on node-1, which forwarded the request

[root@node-1 ~]# ip a|grep -wC2 172.20.84.128

9: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 172.20.84.128/32 brd 172.20.84.128 scope global tunl0

valid_lft forever preferred_lft forever

# The LVS (IPVS) virtual server list shows that this address forwards to exactly the pod we are accessing

[root@node-1 ~]# ipvsadm -ln|grep -A1 172.20.84.128

TCP 172.20.84.128:20651 rr

-> 172.20.139.72:80 Masq 1 0 0

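The detailed packet flow is as follows:

* The client sends a packet to 10.0.1.201:20651

* 10.0.1.201 replaces the source IP in the packet with its own address (SNAT); here that is the tunl0 address 172.20.84.128, as the log above shows

* 10.0.1.201 replaces the destination IP in the packet with the pod IP

* The packet is routed to 10.0.1.203 and then to the endpoint

* The pod's reply is routed back to 10.0.1.201

* The reply is sent back to the client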

               client
                 \ ^
                  \ \
                   v \
 10.0.1.203 <--- 10.0.1.201
   | ^   SNAT
   | |   --->
   v |
 endpoint

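To avoid this, Kubernetes can preserve the client IP. Setting service.spec.externalTrafficPolicy to Local proxies requests only to endpoints on the local node and never forwards traffic to other nodes, so the original source IP is preserved. If a node has no local endpoint, packets sent to it are dropped, so you can rely on the correct client IP in any packet-processing rules.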

# Set the service.spec.externalTrafficPolicy field as follows:

# kubectl patch svc nginx -p '{"spec":{"externalTrafficPolicy":"Local"}}'

service/nginx patched

# Requests through the IP of a node that does not run the pod now fail

[root@node-1 ~]# curl 10.0.1.201:20651

curl: (7) Failed connect to 10.0.1.201:20651; Connection refused

# Requests through the IP of the node running the pod work normally

[root@node-1 ~]# curl 10.0.1.203:20651

nginx-f89759699-qlc8q

# The log now shows the source IP as 10.0.1.201, which is exactly the result we want

[root@node-1 ~]# kubectl logs --tail=1 nginx-f89759699-qlc8q

10.0.1.201 - - [25/Nov/2020:07:33:42 +0000] "GET / HTTP/1.1" 200 22 "-" "curl/7.29.0" "-"

# Reverting this optimization is just as simple: set the policy back to the default, Cluster

# kubectl patch svc nginx -p '{"spec":{"externalTrafficPolicy":"Cluster"}}'
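The kubectl patch commands above can also be expressed declaratively in the Service manifest. The sketch below assumes the same nginx Service as in this section (selector app: nginx, NodePort 20651 as seen in the ipvsadm output). Only the externalTrafficPolicy line differs from a default NodePort Service.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: NodePort
  # Local: only nodes running an nginx pod serve the NodePort, and the client
  # source IP is preserved (no SNAT).
  # Cluster (the default): every node serves it and may forward to pods on
  # other nodes, with the source IP SNATed.
  externalTrafficPolicy: Local
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
      nodePort: 20651   # assumption: inside this cluster's node port range
```

With Local, an external load balancer in front of the nodes should only target nodes that actually run a pod. Otherwise some requests hit nodes with no local endpoint and fail, as the curl to 10.0.1.201 showed.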

Level 5: k8s Architect Course Strategy Guide, Part 5 - labels

Hello everyone, today let's talk about labels in k8s.

Labels

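Labels appear everywhere in Kubernetes, and their role is critical: they act as a pod's identity card. A Deployment can watch over the N pods it starts and keep them at the desired count. A Service can forward traffic precisely to the intended pods. In both cases, it all comes down to labels. Let's walk through it hands-on; once you have followed along, the role of labels should be clear.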

# First create an nginx Deployment

kubectl create deployment nginx --image=nginx --replicas=3

# Wait until the pods are running; as expected, there are 3 pods

kubectl get pod -w

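# Now change the label of one of the pods (for example: kubectl label pod <pod-name> app=nginx-test --overwrite). The Deployment abandons this pod: without the matching label, the Deployment (through its ReplicaSet) no longer recognizes it and starts a new pod to get back to 3 replicas. The relabeled pod becomes an orphan. If we delete it, it simply disappears and is not recreated, just like a standalone pod created with kubectl run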
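The manifest below shows where the labels in this experiment live. It is roughly what kubectl create deployment nginx --image=nginx --replicas=3 produces, simplified; the real object also has many defaulted fields. The Deployment's ReplicaSet only counts pods whose labels match its selector. That is why relabeling a pod removes it from the count and triggers a replacement.

```yaml
# Simplified equivalent of: kubectl create deployment nginx --image=nginx --replicas=3
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx          # label on the Deployment object itself
spec:
  replicas: 3           # desired number of pods
  selector:
    matchLabels:
      app: nginx        # pods with this label are counted as owned replicas
  template:
    metadata:
      labels:
        app: nginx      # must match spec.selector.matchLabels (apps/v1 enforces this)
    spec:
      containers:
        - name: nginx
          image: nginx
```

The ReplicaSet created from this Deployment also adds a pod-template-hash label to its pods and selector. Changing the app label is still enough to take a pod out of the selection.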

# Next, create a Service based on this nginx Deployment

kubectl expose deployment nginx --port=80 --target-port=80 --name=nginx

# Send a request straight to the Service's cluster IP; it works as expected

kubectl get svc nginx

# Now let's change the Service's label selector and see what happens

kubectl patch services nginx -p '{"spec":{"selector":{"app": "nginxaaa"}}}'

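# Request the Service IP again and it no longer responds, which shows that the Service can no longer find pods with the matching label

# After changing it back, everything returns to normal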

kubectl patch services nginx -p '{"spec":{"selector":{"app": "nginx"}}}'

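Labels are scoped to a namespace. If services in the same namespace each rely on a single label, make sure that label is unique. Duplicates send traffic to the wrong service and cause strange behavior. A resource can carry several labels that are combined in a selector, which greatly reduces the chance of such collisions.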
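Here is a minimal sketch of the multi-label approach. The tier and env labels are hypothetical examples, not labels used elsewhere in this course. A Service selector is the AND of all its key/value pairs, so a pod is selected only if it carries every one of them. The pod template of the matching Deployment must therefore set all three labels.

```yaml
# Hypothetical labels: tier and env are illustrative only.
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  selector:             # a pod must carry ALL of these labels to be selected
    app: nginx
    tier: frontend
    env: prod
  ports:
    - port: 80
      targetPort: 80
```

Another service in the same namespace that also uses app: nginx but a different tier or env value will no longer pick up these pods by accident.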

Level 6: k8s Architect Course, Ingress, the Traffic Entry Point (Part 1)

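Hello everyone. This lesson covers Ingress, the traffic entry point of k8s. As the public entry point for business services, its importance is obvious, so read carefully and follow Boge's tutorial step by step to understand it.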

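This lesson uses quite a lot of YAML configuration, and I found that the formatting breaks when it is published here on Toutiao. I also saved the text of these notes on my GitHub, and you can copy the YAML from there to create the resources: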

https://github.com/bogeit/LearnK8s/blob/main/%E7%AC%AC6%E5%85%B3%20k8s%E6%9E%B6%E6%9E%84%E5%B8%88%E8%AF%BE%E7%A8%8B%E4%B9%8B%E6%B5%81%E9%87%8F%E5%85%A5%E5%8F%A3Ingress%E4%B8%8A%E9%83%A8.md

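Earlier we accessed pods through a Service. We then provided access from outside the K8s cluster by changing the Service type to NodePort and mapping public IP ports by some means. That is not a very elegant approach, though.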

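Typically, Services and pods have IPs that are only routable within the cluster network. All traffic that ends up at an edge router is either dropped or forwarded elsewhere.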

Conceptually, this might look like:

    internet
        |
  ------------
  [ Services ]

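Alternatively, we can expose services to external traffic through a LoadBalancer. In real production this mode is not very convenient, and it means every service needs its own load balancer and its own public IP.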
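For comparison, a LoadBalancer Service is declared as shown below; the name nginx-lb is hypothetical. Each Service of this type asks the cloud provider for its own external load balancer and IP, which is the cost mentioned above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-lb        # hypothetical name
spec:
  type: LoadBalancer    # the cloud provider provisions a dedicated external LB and IP
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
```

On a self-built cluster without a cloud provider integration or an add-on such as MetalLB, the EXTERNAL-IP of such a Service typically stays <pending>.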

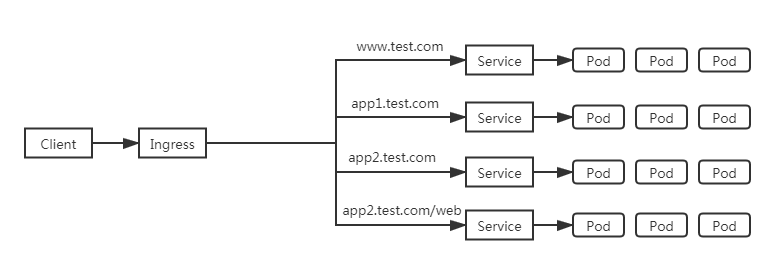

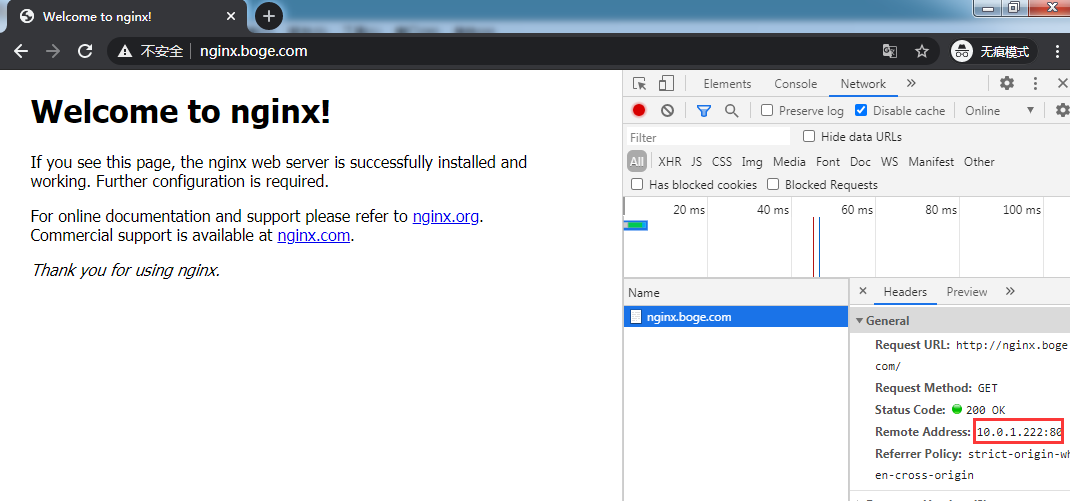

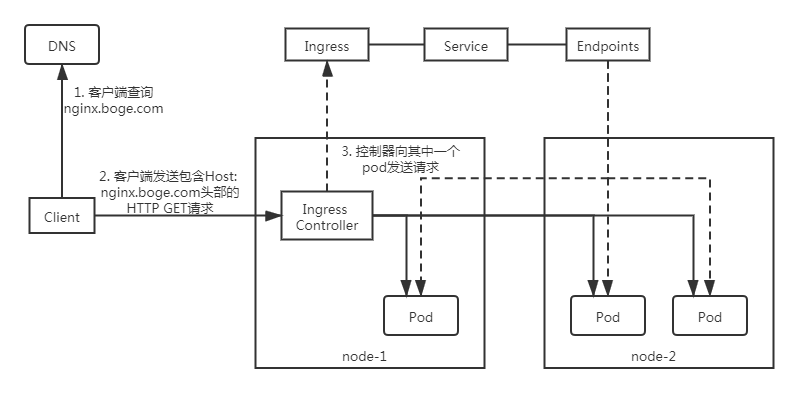

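Here we use Ingress, because with a single public IP it can provide access to all services on K8s. Ingress works at layer 7 (HTTP) and decides which service receives a request based on the request's hostname and path, as shown in the figure: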

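An Ingress is a set of rules that allow inbound connections to reach cluster services: a set of forwarding rules that sits between the physical network and the cluster's Services.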

In essence, it implements L4/L7 load balancing:

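Note: Ingress does not forward external traffic through the Service to the pods. It only uses the Service to find the corresponding Endpoints, discovers the pods from them, and forwards traffic to the pods directly.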

    internet
        |
   [ Ingress ] ---> [ Services ] ---> [ Endpoint ]
   --|-----|--                              |
   [ Pod,pod,...... ]<----------------------|
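The manifest below is a minimal Ingress for the nginx Service used earlier, illustrating the host- and path-based rules described above. Assumptions: the host nginx.example.com is hypothetical, and the networking.k8s.io/v1 API requires Kubernetes 1.19 or later. Older clusters use networking.k8s.io/v1beta1 with serviceName/servicePort instead.

```yaml
# Assumptions: Kubernetes 1.19+, hypothetical host nginx.example.com,
# and the nginx Service (port 80) from the previous sections.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
spec:
  rules:
    - host: nginx.example.com     # matched against the request's Host header
      http:
        paths:
          - path: /               # matched against the URL path
            pathType: Prefix
            backend:
              service:
                name: nginx       # the controller looks up this Service's Endpoints
                port:
                  number: 80
```

An Ingress object does nothing by itself. It only takes effect once an Ingress controller is running in the cluster.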

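To use Ingress on K8s, we need to deploy an Ingress controller in the cluster; Ingress rules only work once such a controller is running. There are many Ingress controllers, such as traefik, but here we use ingress-nginx. Under the hood it is built on OpenResty, which combines nginx with Lua rules and more.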

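Here is the key point. As I keep stressing in this course, everything I teach is based on real production experience, so the ingress-nginx we use here is not the plain community version. Alibaba Cloud, the largest cloud platform in China, branched it from the community version and heavily modified it. It has been proven under very large production traffic, is better suited to production, and works essentially out of the box. Below is an introduction to aliyun-ingress-controller: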

The introduction below only excerpts the latest part; see the official documentation for more:

https://developer.aliyun.com/article/598075

Service overview

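In a Kubernetes cluster, an Ingress is a collection of rules that authorize inbound connections to reach cluster services, and it provides layer-7 load balancing. Through Ingress you can configure externally reachable URLs, load balancing, SSL, and name-based virtual hosting. The Alibaba Cloud Container Service K8S Ingress Controller is fully compatible with the community version and adds more features and optimizations.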

Release notes

v0.30.0.2-9597b3685-aliyun:

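- Added FastCGI backend support

- Dynamic SSL Cert Update mode enabled by default

- Added traffic mirroring configuration support

- Upgraded NGINX to 1.17.8 and OpenResty to 1.15.8, and switched the base image to Alpine

- Added Ingress Validating Webhook support

- Fixed vulnerabilities CVE-2018-16843, CVE-2018-16844, CVE-2019-9511, CVE-2019-9513 and CVE-2019-9516

- [Breaking Change] Options such as lua-resty-waf, session-cookie-hash and force-namespace-isolation are deprecated; x-forwarded-prefix changes from boolean to string; the the_real_ip variable in log-format will be removed in the next release, use remote_addr instead

- Synced with community version 0.30.0; see the community Changelog for the detailed change history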

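One important change in aliyun-ingress-controller is that it supports dynamic updates of the routing configuration. Anyone who has used Nginx knows that after changing its configuration you must run nginx -s reload for the change to take effect. On K8s the behavior is the same. Because so many services run on K8s, configuration changes are very frequent. Without dynamic updates, frequent Nginx reloads in such high-churn scenarios cause noticeable request problems: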

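- QPS jitter and some failed requests

- Long-lived connections being dropped frequently

- Large numbers of Nginx worker processes left in the shutting down state, which bloats memory usage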

For a detailed analysis of how this works, see this article: https://developer.aliyun.com/article/692732

Now let's deploy aliyun-ingress-controller. Below is the YAML configuration we actually use in production; save it as aliyun-ingress-nginx.yaml before deploying:

Each part of the YAML configuration below is explained in detail:

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nginx-ingress-controller
  labels:
    app: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
      - namespaces
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""