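FFmpeg provides audio and video filters in the libavfilter module. buffersrc and buffersink are the bridges between application code and an AVFilter filter graph: buffersrc is the input buffer and buffersink is the output buffer. Calling av_buffersrc_add_frame_flags() pushes an audio or video frame that is to be filtered into the input buffer, and calling av_buffersink_get_frame_flags() takes a filtered frame out of the output buffer.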

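I. buffersrc

buffersrc is the input buffer of a filter graph. Its two main parts are the BufferSourceContext structure and the av_buffersrc_add_frame_flags() function.

1. BufferSourceContext

The buffer source context mainly holds the video width, height and pixel format, and the audio sample rate, channel count, channel layout and sample format, together with the time base and frame rate set on the output link. The structure is: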

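```c
typedef struct BufferSourceContext {
    const AVClass *class;
    AVRational time_base;          // time base set on the output link
    AVRational frame_rate;         // frame rate set on the output link
    unsigned nb_failed_requests;   // requests made while no frame was queued

    /* video only */
    int w, h;
    AVRational pixel_aspect;
    AVBufferRef *hw_frames_ctx;    // hardware frames context, if any
    enum AVPixelFormat pix_fmt;

    /* audio only */
    int channels;
    int sample_rate;
    uint64_t channel_layout;
    char *channel_layout_str;      // channel layout given as a string option
    enum AVSampleFormat sample_fmt;

    int eof;                       // set once EOF has been signaled
} BufferSourceContext;
```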
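The w/h/pix_fmt and audio fields above are what the format check in av_buffersrc_add_frame_flags() compares each incoming frame against. As far as I can tell from the FFmpeg 4.x sources, the two checks are asymmetric: CHECK_VIDEO_PARAM_CHANGE only logs a warning and lets the frame through, while CHECK_AUDIO_PARAM_CHANGE logs an error and returns AVERROR(EINVAL). The sketch below models that behavior with a hypothetical ToySrcParams struct and plain int codes in place of the FFmpeg enums; -EINVAL stands in for AVERROR(EINVAL), which has the same value on POSIX systems. It is an illustration, not FFmpeg code.

```c
#include <assert.h>
#include <errno.h>
#include <stdint.h>
#include <stdio.h>

/* Hypothetical stand-in for the BufferSourceContext fields read by the
 * format check; this is NOT the real FFmpeg structure. */
typedef struct ToySrcParams {
    int w, h, pix_fmt;                         /* video */
    int sample_rate, channels, sample_fmt;     /* audio */
    uint64_t channel_layout;
} ToySrcParams;

/* Video: a mismatch is only reported; the frame is still accepted. */
static int toy_check_video(const ToySrcParams *c, int w, int h, int fmt)
{
    assert(c != NULL);
    if (c->w != w || c->h != h || c->pix_fmt != fmt)
        fprintf(stderr, "warning: video frame properties changed on the fly\n");
    return 0;
}

/* Audio: any mismatch rejects the frame, like AVERROR(EINVAL). */
static int toy_check_audio(const ToySrcParams *c, int rate, uint64_t layout,
                           int channels, int fmt)
{
    assert(c != NULL);
    if (c->sample_fmt != fmt || c->sample_rate != rate ||
        c->channel_layout != layout || c->channels != channels) {
        fprintf(stderr, "error: audio frame properties changed on the fly\n");
        return -EINVAL;
    }
    return 0;
}
```

Passing AV_BUFFERSRC_FLAG_NO_CHECK_FORMAT skips both checks entirely.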

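2. av_buffersrc_add_frame_flags

av_buffersrc_add_frame_flags() adds an audio or video frame to the input buffer, with its behavior controlled by a flags argument. The flags skip the check for format changes, push the frame to the output immediately, and keep a reference to the frame. They are defined as: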

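```c
enum {
    // Do not check whether the frame format has changed
    AV_BUFFERSRC_FLAG_NO_CHECK_FORMAT = 1,
    // Immediately push the frame to the output
    AV_BUFFERSRC_FLAG_PUSH = 4,
    // Keep a reference to the frame (the caller's frame stays valid)
    AV_BUFFERSRC_FLAG_KEEP_REF = 8
};
```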
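To make AV_BUFFERSRC_FLAG_KEEP_REF concrete, here is a minimal model of the ownership decision that av_buffersrc_add_frame_flags() makes before queuing the frame. ToyBuf, ToyFrame and toy_take_frame() are hypothetical stand-ins for AVBufferRef, AVFrame and the av_frame_move_ref()/av_frame_ref() branch; the flag values match the enum above. Only reference-counted frames are modeled; for a frame without buffers the real function always takes the av_frame_ref() path, which copies the data.

```c
#include <assert.h>
#include <stddef.h>

/* Same numeric values as the AV_BUFFERSRC_FLAG_* constants. */
enum {
    TOY_FLAG_NO_CHECK_FORMAT = 1,
    TOY_FLAG_PUSH            = 4,
    TOY_FLAG_KEEP_REF        = 8
};

/* Hypothetical refcounted buffer and frame (not AVBufferRef / AVFrame). */
typedef struct ToyBuf { int refcount; } ToyBuf;
typedef struct ToyFrame { ToyBuf *buf; } ToyFrame;

/* Without KEEP_REF the caller's reference is moved and the caller's frame is
 * left empty; with KEEP_REF a new reference is added and the caller keeps its own. */
static void toy_take_frame(ToyFrame *dst, ToyFrame *src, int flags)
{
    assert(src->buf != NULL);          /* only the refcounted case is modeled */
    if (!(flags & TOY_FLAG_KEEP_REF)) {
        dst->buf = src->buf;           /* like av_frame_move_ref() */
        src->buf = NULL;
    } else {
        dst->buf = src->buf;           /* like av_frame_ref() */
        dst->buf->refcount++;
    }
}
```

Because the flags are distinct bits they combine with `|`; for example AV_BUFFERSRC_FLAG_KEEP_REF | AV_BUFFERSRC_FLAG_PUSH keeps the caller's frame valid and also runs the graph right away.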

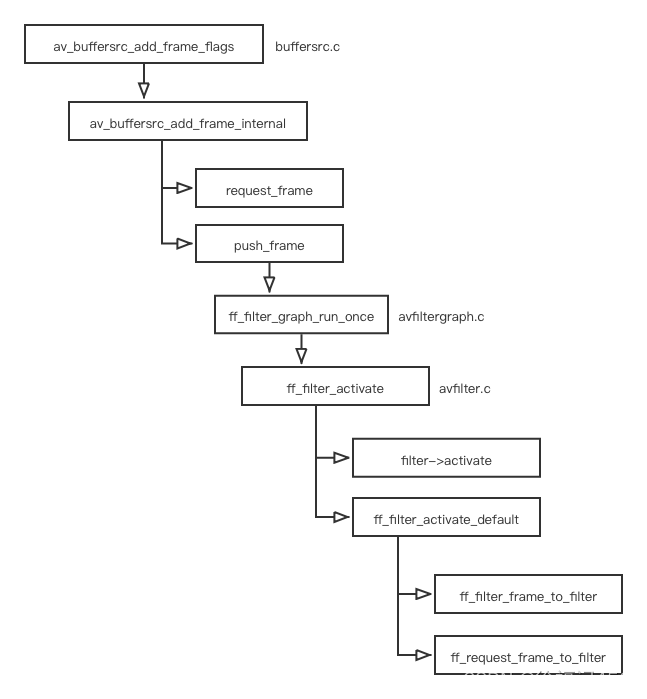

与之相似函数是av_buffersrc_add_frame(),默认flag为AV_BUFFERSRC_FLAG_KEEP_REF。调用函数流程如下图所示:

av_buffersrc_add_frame_flags() is defined in libavfilter/buffersrc.c. Its source is:

```c
int av_buffersrc_add_frame_flags(AVFilterContext *ctx, AVFrame *frame, int flags)
{
    BufferSourceContext *s = ctx->priv;
    AVFrame *copy;
    int refcounted, ret;

    // Reject frames whose channel layout and channel count disagree
    if (frame && frame->channel_layout &&
        av_get_channel_layout_nb_channels(frame->channel_layout) != frame->channels) {
        return AVERROR(EINVAL);
    }

    s->nb_failed_requests = 0;

    // A NULL frame signals EOF and closes the source
    if (!frame)
        return av_buffersrc_close(ctx, AV_NOPTS_VALUE, flags);
    // No more frames are accepted after EOF
    if (s->eof)
        return AVERROR(EINVAL);

    refcounted = !!frame->buf[0];

    if (!(flags & AV_BUFFERSRC_FLAG_NO_CHECK_FORMAT)) {
        switch (ctx->outputs[0]->type) {
        case AVMEDIA_TYPE_VIDEO:
            // Check video parameters: width, height, pixel format
            CHECK_VIDEO_PARAM_CHANGE(ctx, s, frame->width, frame->height,
                                     frame->format, frame->pts);
            break;
        case AVMEDIA_TYPE_AUDIO:
            // Fill in a missing channel layout from the source's configured one
            if (!frame->channel_layout)
                frame->channel_layout = s->channel_layout;
            // Check audio parameters: sample format, sample rate, channel layout, channel count
            CHECK_AUDIO_PARAM_CHANGE(ctx, s, frame->sample_rate, frame->channel_layout,
                                     frame->channels, frame->format, frame->pts);
            break;
        default:
            return AVERROR(EINVAL);
        }
    }

    if (!(copy = av_frame_alloc()))
        return AVERROR(ENOMEM);

    // Without KEEP_REF, take over the caller's reference; otherwise add a new
    // one (av_frame_ref() copies the data if the frame is not refcounted)
    if (refcounted && !(flags & AV_BUFFERSRC_FLAG_KEEP_REF)) {
        av_frame_move_ref(copy, frame);
    } else {
        ret = av_frame_ref(copy, frame);
        if (ret < 0) {
            av_frame_free(&copy);
            return ret;
        }
    }

    // Send the frame to the output link: it is queued there and the
    // downstream filter is marked ready; no filtering happens yet
    ret = ff_filter_frame(ctx->outputs[0], copy);
    if (ret < 0)
        return ret;

    // With AV_BUFFERSRC_FLAG_PUSH, run the graph immediately
    if ((flags & AV_BUFFERSRC_FLAG_PUSH)) {
        ret = push_frame(ctx->graph);
        if (ret < 0)
            return ret;
    }

    return 0;
}
```

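push_frame() does only one thing: it calls ff_filter_graph_run_once() in a loop until that returns AVERROR(EAGAIN), meaning no filter in the graph is ready, and returns any other error to the caller. The code is: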

```c
static int push_frame(AVFilterGraph *graph)
{
    int ret;

    while (1) {
        // Activate the most-ready filter; stop once no filter is ready
        ret = ff_filter_graph_run_once(graph);
        if (ret == AVERROR(EAGAIN))
            break;
        if (ret < 0)
            return ret;
    }
    return 0;
}
```

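ff_filter_graph_run_once(), in libavfilter/avfiltergraph.c, uses a for loop over all filters in the graph to find the one with the highest ready value and activates it with ff_filter_activate(); if no filter is ready, it returns AVERROR(EAGAIN):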

```c
int ff_filter_graph_run_once(AVFilterGraph *graph)
{
    AVFilterContext *filter;
    unsigned i;

    av_assert0(graph->nb_filters);
    // Find the filter with the highest ready priority
    filter = graph->filters[0];
    for (i = 1; i < graph->nb_filters; i++)
        if (graph->filters[i]->ready > filter->ready)
            filter = graph->filters[i];
    // No filter has work to do
    if (!filter->ready)
        return AVERROR(EAGAIN);
    return ff_filter_activate(filter);
}
```
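The selection rule is a linear scan for the maximum ready value, with ties going to the filter that appears first in graph->filters because the comparison is strict. Here is a minimal model using a hypothetical ToyFilter type and -EAGAIN in place of AVERROR(EAGAIN); the real function would then call ff_filter_activate() on the chosen filter.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical filter: only the field the scheduler looks at. */
typedef struct ToyFilter {
    const char *name;
    unsigned ready;     /* 0 = nothing to do; higher = more urgent */
} ToyFilter;

/* Returns the index of the first filter with the highest ready value,
 * or -EAGAIN if no filter is ready. */
static int toy_pick_filter(const ToyFilter *filters, size_t n)
{
    size_t i, best = 0;
    assert(n > 0);                        /* like av_assert0(graph->nb_filters) */
    for (i = 1; i < n; i++)
        if (filters[i].ready > filters[best].ready)
            best = i;
    if (!filters[best].ready)
        return -EAGAIN;
    return (int)best;
}
```

The scan is O(number of filters) per step, which is cheap for typical graphs of a handful of filters.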

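II. buffersink

buffersink is the output buffer of a filter graph. Its two main parts are the BufferSinkContext structure and the av_buffersink_get_frame_flags() function.

1. BufferSinkContext

The buffer sink context mainly holds the lists of video pixel formats and of audio sample formats, channel layouts, channel counts and sample rates that the sink accepts, plus a frame kept back by a peek request. The structure is: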

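```c
typedef struct BufferSinkContext {
    const AVClass *class;
    unsigned warning_limit;

    /* only used for video */
    enum AVPixelFormat *pixel_fmts;     // accepted pixel formats ("pix_fmts" option)
    int pixel_fmts_size;

    /* only used for audio */
    enum AVSampleFormat *sample_fmts;   // accepted sample formats ("sample_fmts")
    int sample_fmts_size;
    int64_t *channel_layouts;           // accepted channel layouts ("channel_layouts")
    int channel_layouts_size;
    int *channel_counts;                // accepted channel counts ("channel_counts")
    int channel_counts_size;
    int all_channel_counts;             // accept any channel count / layout
    int *sample_rates;                  // accepted sample rates ("sample_rates")
    int sample_rates_size;

    AVFrame *peeked_frame;              // frame kept by AV_BUFFERSINK_FLAG_PEEK
} BufferSinkContext;
```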
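The format-list fields are constraints used during format negotiation rather than the format of the current frame. As far as I can tell from the 4.x buffersink query_formats callbacks, a list that is set restricts the sink's input to exactly those values, while an empty list leaves the format open (the default formats are used). Applications normally fill these lists through the AVOptions named in the comments, for example with av_opt_set_int_list(). The sketch below models only the membership rule; toy_format_accepted() is hypothetical and uses plain ints in place of the enum types.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model: an empty list places no constraint; otherwise the
 * format must appear in the list. */
static int toy_format_accepted(const int *list, size_t count, int fmt)
{
    size_t i;
    assert(list != NULL || count == 0);
    if (count == 0)
        return 1;                  /* no list set: any format is acceptable */
    for (i = 0; i < count; i++)
        if (list[i] == fmt)
            return 1;
    return 0;
}
```

In a real graph, a mismatch is not an error at this point: negotiation inserts a conversion (for example an automatic scale filter) so that the sink receives one of the listed formats.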

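2. av_buffersink_get_frame_flags

This function takes one frame out of the output buffer. It forwards to get_frame_internal(), passing the input link's min_samples as the sample count. min_samples is normally 0, which means whole frames are returned; if a fixed audio frame size was set with av_buffersink_set_frame_size(), audio frames with that many samples are returned instead (the last one may be shorter). The code is: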

```c
static int get_frame_internal(AVFilterContext *ctx, AVFrame *frame,
                              int flags, int samples)
{
    BufferSinkContext *buf = ctx->priv;
    AVFilterLink *inlink = ctx->inputs[0];
    int status, ret;
    AVFrame *cur_frame;
    int64_t pts;

    // A frame kept by an earlier AV_BUFFERSINK_FLAG_PEEK call is returned first
    if (buf->peeked_frame)
        return return_or_keep_frame(buf, frame, buf->peeked_frame, flags);

    while (1) {
        // Take a chunk of `samples` audio samples if a fixed frame size was set,
        // otherwise take one whole frame from the input link
        ret = samples ? ff_inlink_consume_samples(inlink, samples, samples, &cur_frame) :
                        ff_inlink_consume_frame(inlink, &cur_frame);
        if (ret < 0) {
            return ret;
        } else if (ret) {
            // Got a frame
            return return_or_keep_frame(buf, frame, cur_frame, flags);
        } else if (ff_inlink_acknowledge_status(inlink, &status, &pts)) {
            // Upstream reached EOF or failed: report its status
            return status;
        } else if ((flags & AV_BUFFERSINK_FLAG_NO_REQUEST)) {
            // Caller asked not to trigger upstream processing
            return AVERROR(EAGAIN);
        } else if (inlink->frame_wanted_out) {
            // A frame is already requested: run the graph one step
            ret = ff_filter_graph_run_once(ctx->graph);
            if (ret < 0)
                return ret;
        } else {
            // Request a frame from upstream
            ff_inlink_request_frame(inlink);
        }
    }
}
```
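The loop in get_frame_internal() tries its options in a fixed order: take a queued frame, report an upstream status such as EOF, give up with EAGAIN if AV_BUFFERSINK_FLAG_NO_REQUEST is set, run one scheduling step if a frame has already been requested, and otherwise request one. The sketch below replays that order against a hypothetical single-link ToySinkLink whose upstream can produce a fixed number of frames. TOY_EOF is an arbitrary stand-in for AVERROR_EOF, -EAGAIN stands in for AVERROR(EAGAIN), TOY_FLAG_NO_REQUEST uses the same value (2) as AV_BUFFERSINK_FLAG_NO_REQUEST, and the peek path and sample-based consumption are left out.

```c
#include <assert.h>
#include <errno.h>

#define TOY_FLAG_NO_REQUEST 2      /* same value as AV_BUFFERSINK_FLAG_NO_REQUEST */
#define TOY_EOF (-1000)            /* hypothetical stand-in for AVERROR_EOF */

/* Hypothetical link into the sink, with a trivially simple upstream. */
typedef struct ToySinkLink {
    int queued;                    /* frames waiting on the link */
    int status;                    /* 0, or TOY_EOF once upstream is done */
    int frame_wanted_out;          /* a frame has been requested */
    int upstream_left;             /* frames upstream can still produce */
} ToySinkLink;

/* One scheduling step: serve a pending request or signal EOF. */
static int toy_run_once(ToySinkLink *l)
{
    if (!l->frame_wanted_out)
        return -EAGAIN;            /* nothing ready */
    if (l->upstream_left > 0) {
        l->upstream_left--;
        l->queued++;
    } else {
        l->status = TOY_EOF;
    }
    l->frame_wanted_out = 0;
    return 0;
}

/* Same decision order as get_frame_internal(); 0 means a frame was taken. */
static int toy_get_frame(ToySinkLink *l, int flags)
{
    int ret;
    while (1) {
        if (l->queued > 0) {                /* consume a frame */
            l->queued--;
            return 0;
        } else if (l->status) {             /* acknowledge upstream status */
            return l->status;
        } else if (flags & TOY_FLAG_NO_REQUEST) {
            return -EAGAIN;
        } else if (l->frame_wanted_out) {   /* already requested: run graph */
            ret = toy_run_once(l);
            if (ret < 0)
                return ret;
        } else {                            /* request a frame */
            l->frame_wanted_out = 1;
        }
    }
}
```

In practice this means a plain av_buffersink_get_frame_flags(ctx, frame, 0) call drives the whole graph on demand, while passing AV_BUFFERSINK_FLAG_NO_REQUEST only drains frames that are already queued.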

```c
int av_buffersink_get_frame_flags(AVFilterContext *ctx, AVFrame *frame, int flags)
{
    return get_frame_internal(ctx, frame, flags, ctx->inputs[0]->min_samples);
}
```
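When min_samples is non-zero, each call consumes a chunk via ff_inlink_consume_samples(inlink, samples, samples, ...). My reading of the 4.x implementation is that a chunk is only produced once at least that many samples are queued, except that after the link reaches EOF the remaining samples are returned as a shorter final chunk. The sketch below models only that counting rule; toy_consume_samples() is hypothetical and tracks a sample count instead of real frames.

```c
#include <assert.h>

/* Hypothetical model of fixed-size chunking: returns the number of samples
 * handed out (0 if not enough are buffered yet) and updates *buffered. */
static int toy_consume_samples(int *buffered, int eof, int want)
{
    int n;
    assert(want > 0);
    if (*buffered >= want)
        n = want;                  /* a full chunk is available */
    else if (eof && *buffered > 0)
        n = *buffered;             /* final, shorter chunk after EOF */
    else
        return 0;                  /* wait for more data */
    *buffered -= n;
    return n;
}
```

For example, with 2500 buffered samples and a frame size of 1024, two full chunks come out, then nothing until either more audio arrives or EOF releases the remaining 452 samples.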