An .h264 file cannot be played directly in a web page. For example, entering http://10.0.0.2/2022-01-08T22-32-58.h264 in the browser just downloads the file.

Entering http://10.0.0.2/2022-01-08T22-32-58.mp4 instead plays the video.

This article explains how to convert an H.264 file to MP4 with FFmpeg.

First, prepare an H.264 file. You can use ffmpeg to extract the video track of an MP4 file into a raw H.264 stream with this command:

```shell
ffmpeg -i 2022-01-08T22-32-58.mp4 -an -vcodec copy 2022-01-08T22-32-58.h264
```

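Note that the video in my MP4 is already H.264-encoded, so I use `-vcodec copy`. Otherwise, use `-vcodec libx264` to re-encode it. The `-an` option discards the audio.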

Next, 2022-01-08T22-32-58.h264 is remuxed into an MP4 container. No decoding or encoding takes place, so the conversion is very fast.

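The overall process is as follows:

1. Start two threads.
2. The first thread reads packets with av_read_frame, copies each one with av_packet_clone, and pushes the copy onto a queue.
3. The second thread takes packets from the queue, sets their pts, dts and duration, and then writes them with av_interleaved_write_frame.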
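The reader/writer split in the three steps above is a bounded producer-consumer queue. The article's code builds it from a Win32 CRITICAL_SECTION and Sleep() polling.

As an alternative, here is a minimal portable sketch that uses std::mutex and std::condition_variable and requires C++17 for std::optional. The class name BoundedQueue and its close() method are hypothetical. They are not part of FFmpeg or of the original code.

With T = AVPacket *, the workflow would be:

- The reader calls push() for each clone and calls close() at the end of the input.
- The writer loops on pop() until it returns std::nullopt, then writes the trailer.
- WaitFinish() would then only need to join the two threads.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <optional>
#include <utility>

// Bounded blocking queue for one producer thread and one consumer thread.
// push() blocks while the queue is full; pop() blocks while it is empty and
// returns std::nullopt once close() has been called and the queue is drained.
template <typename T>
class BoundedQueue {
public:
    explicit BoundedQueue(std::size_t capacity) : capacity_(capacity) {
        assert(capacity_ > 0);  // a zero capacity would block push() forever
    }

    // Returns false if the queue has been closed; the item is then not stored,
    // so a caller pushing raw AVPacket pointers must free the packet itself.
    bool push(T item) {
        std::unique_lock<std::mutex> lock(mutex_);
        notFull_.wait(lock, [this] { return closed_ || items_.size() < capacity_; });
        if (closed_) {
            return false;
        }
        items_.push_back(std::move(item));
        notEmpty_.notify_one();
        return true;
    }

    std::optional<T> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        notEmpty_.wait(lock, [this] { return closed_ || !items_.empty(); });
        if (items_.empty()) {
            return std::nullopt;  // closed and fully drained
        }
        std::optional<T> item(std::move(items_.front()));
        items_.pop_front();
        notFull_.notify_one();
        return item;
    }

    // Called by the producer at end of input; wakes up any waiting thread.
    void close() {
        std::lock_guard<std::mutex> lock(mutex_);
        closed_ = true;
        notFull_.notify_all();
        notEmpty_.notify_all();
    }

private:
    const std::size_t capacity_;
    std::deque<T> items_;
    bool closed_ = false;
    std::mutex mutex_;
    std::condition_variable notFull_;
    std::condition_variable notEmpty_;
};
```

Any packets still queued after an early stop would still have to be released with av_packet_free.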

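The part that needs care is setting pts and dts. Judging from the FFmpeg source, timestamp handling is intricate: in many places the code checks whether pts and dts still need to be filled in.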

My earlier approach was the following. The input and output contexts use different time bases (time_base), so each timestamp has to be converted to the output stream's time base:

```cpp
pPacketVideoA->dts = av_rescale_q_rnd(pPacketVideoA->dts, m_pFormatCtx_File->streams[m_iVideoIndex]->time_base, m_pFormatCtx_Out->streams[m_iVideoIndex]->time_base, AVRounding(1));
```
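To make this conversion concrete: av_rescale_q_rnd(a, bq, cq, rnd) computes a * bq / cq using the given rounding mode. AVRounding(1) is AV_ROUND_INF, which rounds away from zero.

The sketch below re-implements that formula in plain C++ for non-negative values, without FFmpeg, purely to show the arithmetic. The Rational struct and RescaleRoundUp function are hypothetical stand-ins.

The example values are assumptions:

- The input time base 1/1200000 is what FFmpeg's raw H.264 demuxer typically reports.
- The output time base 1/12800 is only an illustrative assumption, because the MP4 muxer picks its own time base in avformat_write_header.

With those values, one 25 fps frame (48000 input ticks) becomes 512 output ticks. Rescaling cannot make a missing timestamp (AV_NOPTS_VALUE) meaningful.

```cpp
#include <cassert>
#include <cstdint>

// Minimal stand-in for FFmpeg's AVRational (hypothetical, for illustration).
struct Rational {
    int64_t num;
    int64_t den;
};

// Re-implements the arithmetic of av_rescale_q_rnd(value, from, to, AV_ROUND_INF)
// for non-negative values: value * from.num * to.den / (from.den * to.num),
// rounded away from zero (for non-negative values that means rounding up).
// No overflow protection, so it is only meant for small example values.
int64_t RescaleRoundUp(int64_t value, Rational from, Rational to) {
    const int64_t numerator = value * from.num * to.den;
    const int64_t denominator = from.den * to.num;
    assert(value >= 0 && denominator > 0);
    return (numerator + denominator - 1) / denominator;
}
```

For example, RescaleRoundUp(48000, {1, 1200000}, {1, 12800}) yields 512. A single input tick yields 1 rather than 0 because of the round-up.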

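In practice, however, this failed. The packets read from the file had both pts and dts set to AV_NOPTS_VALUE. Rescaling a missing timestamp does not produce a valid one, so av_interleaved_write_frame then returned an error.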

After stepping through the FFmpeg source in a debugger, I ended up handling pts and dts as follows:

```cpp
// Convert the duration to the output time base
pPacketVideoA->duration = av_rescale_q_rnd(pPacketVideoA->duration, m_pFormatCtx_File->streams[m_iVideoIndex]->time_base, m_pFormatCtx_Out->streams[m_iVideoIndex]->time_base, AVRounding(1));
if (pPacketVideoA->dts != AV_NOPTS_VALUE)
{
    // The demuxer supplied a dts: rescale it and use it as pts as well
    pPacketVideoA->dts = av_rescale_q_rnd(pPacketVideoA->dts, m_pFormatCtx_File->streams[m_iVideoIndex]->time_base, m_pFormatCtx_Out->streams[m_iVideoIndex]->time_base, AVRounding(1));
    pPacketVideoA->pts = pPacketVideoA->dts;
}
else
{
    // No timestamp: use the sum of the durations written so far
    pPacketVideoA->pts = pPacketVideoA->dts = m_iTotalDuration;
}
m_iTotalDuration += pPacketVideoA->duration;
```

I initialize m_iTotalDuration to 0, so the first frame gets pts = dts = 0. Each later frame's dts is the sum of the durations of all preceding frames, which mirrors FFmpeg's own logic.
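The fallback rule can be checked in isolation. The sketch below strips the FFmpeg types down to a hypothetical PacketTimes struct and TimestampFiller class, both invented for illustration. It uses INT64_MIN, the value of AV_NOPTS_VALUE, as the "missing" marker.

It assumes two things: a dts, when present, has already been rescaled to the output time base, and the stream has no B-frames.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

// AV_NOPTS_VALUE in FFmpeg is INT64_MIN; reuse the same sentinel here.
constexpr int64_t kNoPts = std::numeric_limits<int64_t>::min();

// Hypothetical stand-in for the timestamp fields of an AVPacket.
struct PacketTimes {
    int64_t pts;
    int64_t dts;
    int64_t duration;  // already rescaled to the output time base
};

// Applies the same rule as FileWrite(): a packet with a dts keeps it (and pts
// is set equal to it); a packet without one gets pts = dts = the sum of the
// durations of all packets filled before it. Assumes no B-frames.
class TimestampFiller {
public:
    void Fill(PacketTimes &pkt) {
        if (pkt.dts != kNoPts) {
            pkt.pts = pkt.dts;  // dts assumed to be in the output time base already
        } else {
            // With a zero duration every packet would get the same dts,
            // which the muxer rejects as non-monotonic.
            assert(pkt.duration > 0);
            pkt.pts = pkt.dts = totalDuration_;
        }
        totalDuration_ += pkt.duration;
    }

private:
    int64_t totalDuration_ = 0;  // plays the role of m_iTotalDuration
};
```

Three timestamp-less packets of 512 ticks each receive pts = dts = 0, 512 and 1024.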

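Of course, FFmpeg's checks are far more involved; for example, it takes B-frames into account. My code ignores B-frames and only handles files that contain I-frames and P-frames.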
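Why pts = dts is only safe without B-frames can be seen from two timestamp rules that libavformat enforces before a packet reaches the MP4 muxer:

- dts must strictly increase (FFmpeg reports "non monotonically increasing dts").
- pts must not be smaller than dts (FFmpeg reports "pts < dts").

The sketch below is only a rough approximation of those two checks; the real code has further special cases and format flags. FirstInvalidPacket and PacketTs are hypothetical helpers.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

struct PacketTs {
    int64_t pts;
    int64_t dts;
};

// Rough approximation of two checks libavformat applies before muxing:
// dts must strictly increase and pts must not be smaller than dts.
// Returns the index of the first offending packet, or -1 if all pass.
long FirstInvalidPacket(const std::vector<PacketTs> &packets) {
    for (std::size_t i = 0; i < packets.size(); ++i) {
        if (packets[i].pts < packets[i].dts) {
            return static_cast<long>(i);
        }
        if (i > 0 && packets[i].dts <= packets[i - 1].dts) {
            return static_cast<long>(i);
        }
    }
    return -1;
}
```

A B-frame stream in decode order, such as (pts, dts) = (1, 0), (4, 1), (2, 2), (3, 3), passes these checks. Forcing pts = dts on it would also pass, but the frames would then be presented in decode order instead of display order.

A run of packets with equal dts fails at the second packet. That is what zero durations in the fallback path would produce.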

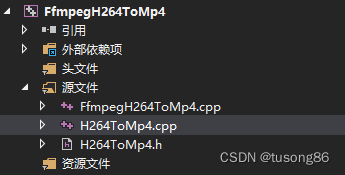

The code is organized as follows.

FfmpegH264ToMp4.cpp contains the following:

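```cpp
// FfmpegH264ToMp4.cpp : This file contains the "main" function. Program execution begins and ends here.
#include <iostream>
#include <string>

#include "H264ToMp4.h"

int main()
{
    CVideoConvert cVideoConvert;
    std::string strVideoFile = "2022-01-08T22-32-58.h264";
    std::string strOutFile = "out.mp4";

    // Opens input and output, then starts the reader and writer threads
    if (cVideoConvert.StartConvert(strVideoFile, strOutFile) != 0)
    {
        std::cerr << "Failed to start the conversion." << std::endl;
        return 1;
    }

    // Blocks until every packet has been written and the file is finalized
    cVideoConvert.WaitFinish();
    return 0;
}
```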

H264ToMp4.h contains the following:

```cpp
#pragma once

#include <Windows.h>

#include <atomic>
#include <deque>
#include <string>

// Maximum number of packets buffered between the reader and writer threads
#define MAX_VIDEOPACKET_NUM 100

// FFmpeg is a C library, so its headers need C linkage when included from C++
extern "C"
{
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libavutil/avutil.h"
}

class CVideoConvert
{
public:
    CVideoConvert();
    ~CVideoConvert();

public:
    // Opens input and output, then starts the reader and writer threads
    int StartConvert(std::string strFileName, std::string strFileOut);
    void StopConvert();

public:
    int OpenFile(const char *pFile);
    int OpenOutPut(const char *pFileOut);

private:
    static DWORD WINAPI FileReadProc(LPVOID lpParam);
    void FileRead();   // producer: av_read_frame -> queue
    static DWORD WINAPI FileWriteProc(LPVOID lpParam);
    void FileWrite();  // consumer: queue -> timestamps -> av_interleaved_write_frame

public:
    // Blocks until all queued packets are written and the trailer is written
    void WaitFinish();

public:
    AVFormatContext *m_pFormatCtx_File = NULL;  // input (demuxer) context
    AVFormatContext *m_pFormatCtx_Out = NULL;   // output (muxer) context
    std::deque<AVPacket *> m_vecReadVideo;      // guarded by m_csVideoReadSection
    int m_iVideoIndex = -1;                     // video stream index in the input
    std::atomic<bool> m_bStartConvert{false};   // shared by both worker threads
    int64_t m_iTotalDuration = 0;               // sum of written durations (output time base)

private:
    CRITICAL_SECTION m_csVideoReadSection;
    HANDLE m_hFileReadThread = NULL;
    HANDLE m_hFileWriteThread = NULL;
};
```

H264ToMp4.cpp contains the following:

```cpp
#include "H264ToMp4.h"

#include <cstdio>

// Link the FFmpeg import libraries (MSVC-specific); remuxing only needs these three
#pragma comment(lib, "avcodec.lib")
#pragma comment(lib, "avformat.lib")
#pragma comment(lib, "avutil.lib")

CVideoConvert::CVideoConvert()
{
    InitializeCriticalSection(&m_csVideoReadSection);
}

CVideoConvert::~CVideoConvert()
{
    // Free any packets that were never written
    for (AVPacket *pPacket : m_vecReadVideo)
    {
        av_packet_free(&pPacket);
    }
    m_vecReadVideo.clear();

    if (m_pFormatCtx_Out != NULL)
    {
        if (!(m_pFormatCtx_Out->oformat->flags & AVFMT_NOFILE))
        {
            avio_closep(&m_pFormatCtx_Out->pb);  // no-op if already closed
        }
        avformat_free_context(m_pFormatCtx_Out);
        m_pFormatCtx_Out = NULL;
    }
    avformat_close_input(&m_pFormatCtx_File);  // safe when NULL
    DeleteCriticalSection(&m_csVideoReadSection);
}

int CVideoConvert::StartConvert(std::string strFileName, std::string strFileOut)
{
    int ret = -1;
    do
    {
        ret = OpenFile(strFileName.c_str());
        if (ret != 0)
        {
            break;
        }
        ret = OpenOutPut(strFileOut.c_str());
        if (ret != 0)
        {
            break;
        }
        m_bStartConvert = true;
        m_hFileReadThread = CreateThread(NULL, 0, FileReadProc, this, 0, NULL);
        m_hFileWriteThread = CreateThread(NULL, 0, FileWriteProc, this, 0, NULL);
    } while (0);
    return ret;
}

void CVideoConvert::StopConvert()
{
    m_bStartConvert = false;
}

int CVideoConvert::OpenFile(const char *pFile)
{
    int ret = -1;
    do
    {
        if ((ret = avformat_open_input(&m_pFormatCtx_File, pFile, NULL, NULL)) < 0)
        {
            printf("Could not open input file.\n");
            break;
        }
        if ((ret = avformat_find_stream_info(m_pFormatCtx_File, NULL)) < 0)
        {
            printf("Failed to retrieve input stream information.\n");
            break;
        }
        // avformat_find_stream_info() may return 0, so reset ret before the
        // checks below; otherwise a failed check would be reported as success
        ret = -1;
        if (m_pFormatCtx_File->nb_streams > 2)
        {
            break;  // only simple inputs are supported
        }
        for (unsigned int i = 0; i < m_pFormatCtx_File->nb_streams; i++)
        {
            if (m_pFormatCtx_File->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO)
            {
                m_iVideoIndex = (int)i;
            }
        }
        if (m_iVideoIndex == -1)
        {
            printf("No video stream found.\n");
            break;
        }
        ret = 0;
    } while (0);
    return ret;
}

int CVideoConvert::OpenOutPut(const char *pFileOut)
{
    int iRet = -1;
    AVStream *pVideoStream = NULL;
    do
    {
        // The container format (MP4) is guessed from the file extension
        if (avformat_alloc_output_context2(&m_pFormatCtx_Out, NULL, NULL, pFileOut) < 0 || m_pFormatCtx_Out == NULL)
        {
            break;
        }
        if (m_iVideoIndex != -1)
        {
            // Remuxing only: no encoder is needed, so no codec is passed
            pVideoStream = avformat_new_stream(m_pFormatCtx_Out, NULL);
            if (!pVideoStream)
            {
                break;
            }
            // Copy codec parameters (resolution, extradata, ...) from the input
            if (avcodec_parameters_copy(pVideoStream->codecpar, m_pFormatCtx_File->streams[m_iVideoIndex]->codecpar) < 0)
            {
                break;
            }
            // Let the MP4 muxer choose a suitable codec tag
            pVideoStream->codecpar->codec_tag = 0;
        }
        if (!(m_pFormatCtx_Out->oformat->flags & AVFMT_NOFILE))
        {
            if (avio_open(&m_pFormatCtx_Out->pb, pFileOut, AVIO_FLAG_WRITE) < 0)
            {
                break;
            }
        }
        // The muxer may change the stream time_base here
        if (avformat_write_header(m_pFormatCtx_Out, NULL) < 0)
        {
            break;
        }
        iRet = 0;
    } while (0);

    if (iRet != 0 && m_pFormatCtx_Out != NULL)
    {
        // avformat_free_context() does not close the AVIOContext
        if (!(m_pFormatCtx_Out->oformat->flags & AVFMT_NOFILE))
        {
            avio_closep(&m_pFormatCtx_Out->pb);
        }
        avformat_free_context(m_pFormatCtx_Out);
        m_pFormatCtx_Out = NULL;
    }
    return iRet;
}

DWORD WINAPI CVideoConvert::FileReadProc(LPVOID lpParam)
{
    CVideoConvert *pVideoConvert = (CVideoConvert *)lpParam;
    if (pVideoConvert != NULL)
    {
        pVideoConvert->FileRead();
    }
    return 0;
}

void CVideoConvert::FileRead()
{
    AVPacket *pPacket = av_packet_alloc();
    if (pPacket == NULL)
    {
        return;
    }
    while (m_bStartConvert)
    {
        int ret = av_read_frame(m_pFormatCtx_File, pPacket);
        if (ret == AVERROR(EAGAIN))
        {
            continue;
        }
        else if (ret < 0)
        {
            break;  // AVERROR_EOF or a read error
        }
        if (pPacket->stream_index == m_iVideoIndex)
        {
            // Wait for room in the queue (the size is read under the lock)
            while (m_bStartConvert)
            {
                EnterCriticalSection(&m_csVideoReadSection);
                size_t iPacketNum = m_vecReadVideo.size();
                LeaveCriticalSection(&m_csVideoReadSection);
                if (iPacketNum < MAX_VIDEOPACKET_NUM)
                {
                    break;
                }
                Sleep(10);
            }
            // The writer thread takes ownership of the clone and frees it
            AVPacket *pVideoPacket = av_packet_clone(pPacket);
            if (pVideoPacket != NULL)
            {
                EnterCriticalSection(&m_csVideoReadSection);
                m_vecReadVideo.push_back(pVideoPacket);
                LeaveCriticalSection(&m_csVideoReadSection);
            }
        }
        av_packet_unref(pPacket);
    }
    av_packet_free(&pPacket);
}

DWORD WINAPI CVideoConvert::FileWriteProc(LPVOID lpParam)
{
    CVideoConvert *pVideoConvert = (CVideoConvert *)lpParam;
    if (pVideoConvert != NULL)
    {
        pVideoConvert->FileWrite();
    }
    return 0;
}

void CVideoConvert::FileWrite()
{
    // OpenOutPut() creates exactly one output stream, so its index is 0
    AVRational inTimeBase = m_pFormatCtx_File->streams[m_iVideoIndex]->time_base;
    AVRational outTimeBase = m_pFormatCtx_Out->streams[0]->time_base;
    int iRealPicCount = 0;

    while (m_bStartConvert)
    {
        AVPacket *pPacketVideoA = NULL;
        EnterCriticalSection(&m_csVideoReadSection);
        if (!m_vecReadVideo.empty())
        {
            pPacketVideoA = m_vecReadVideo.front();
            m_vecReadVideo.pop_front();
        }
        LeaveCriticalSection(&m_csVideoReadSection);

        if (pPacketVideoA == NULL)
        {
            Sleep(10);  // queue empty, wait for the reader
            continue;
        }

        pPacketVideoA->stream_index = 0;
        pPacketVideoA->duration = av_rescale_q_rnd(pPacketVideoA->duration, inTimeBase, outTimeBase, AV_ROUND_INF);
        if (pPacketVideoA->dts != AV_NOPTS_VALUE)
        {
            pPacketVideoA->dts = av_rescale_q_rnd(pPacketVideoA->dts, inTimeBase, outTimeBase, AV_ROUND_INF);
            pPacketVideoA->pts = pPacketVideoA->dts;  // assumes no B-frames
        }
        else
        {
            // No timestamp from the demuxer: use the sum of previous durations
            pPacketVideoA->pts = pPacketVideoA->dts = m_iTotalDuration;
        }
        m_iTotalDuration += pPacketVideoA->duration;

        int ret = av_interleaved_write_frame(m_pFormatCtx_Out, pPacketVideoA);
        av_packet_free(&pPacketVideoA);
        if (ret < 0)
        {
            char szErr[AV_ERROR_MAX_STRING_SIZE] = {0};
            av_strerror(ret, szErr, sizeof(szErr));
            printf("av_interleaved_write_frame failed: %s\n", szErr);
            continue;
        }
        iRealPicCount++;
    }
    printf("Wrote %d video packets.\n", iRealPicCount);

    // Flush the interleaving queue and finalize the MP4 file
    av_write_trailer(m_pFormatCtx_Out);
    if (!(m_pFormatCtx_Out->oformat->flags & AVFMT_NOFILE))
    {
        avio_closep(&m_pFormatCtx_Out->pb);
    }
}

void CVideoConvert::WaitFinish()
{
    do
    {
        if (NULL == m_hFileReadThread)
        {
            break;
        }
        // 1. Wait until the reader reaches the end of the input
        WaitForSingleObject(m_hFileReadThread, INFINITE);
        CloseHandle(m_hFileReadThread);
        m_hFileReadThread = NULL;

        // 2. Wait until the writer has drained the queue
        while (true)
        {
            EnterCriticalSection(&m_csVideoReadSection);
            bool bEmpty = m_vecReadVideo.empty();
            LeaveCriticalSection(&m_csVideoReadSection);
            if (bEmpty)
            {
                break;
            }
            Sleep(100);
        }

        // 3. Stop the writer; it writes the trailer before exiting
        m_bStartConvert = false;
        if (m_hFileWriteThread != NULL)
        {
            WaitForSingleObject(m_hFileWriteThread, INFINITE);
            CloseHandle(m_hFileWriteThread);
            m_hFileWriteThread = NULL;
        }
    } while (0);
}
```