先在idea中导入相应的依赖(这里我的scala是2.11 flink是1.9.1版本 可自行修改)

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<flink.version>1.9.1</flink.version>

</properties>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-streaming-scala -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-clients -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.20</version>

</dependency>

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

<version>2.9.0</version>

</dependency>

</dependencies>Flink Job在提交执行计算时,需要首先建立和Flink框架之间的联系,也就指的是当前的flink运行环境,只有获取了环境信息,才能将task调度到不同的taskManager执行。而这个环境对象的获取方式相对比较简单

// 批处理环境

val env = ExecutionEnvironment.getExecutionEnvironment

// 流式数据处理环境

val env = StreamExecutionEnvironment.getExecutionEnvironment我们先看Source端:

1.从集合读数据

import org.apache.flink.streaming.api.scala._

object SourceList {

def main(args: Array[String]): Unit = {

//1.创建执行的环境

val env: StreamExecutionEnvironment =

StreamExecutionEnvironment.getExecutionEnvironment

//2.从集合中读取数据

val ds = env.fromCollection(Seq(

WaterSensor("ws_001",1577844001,45.0),

WaterSensor("ws_002",1577853001,42.0),

WaterSensor("ws_003",1577844444,41.0)

)

)

//3.打印

ds.print()

//4.执行

env.execute("sensor")

}

/**

* 定义样例类:水位传感器:用于接收空高数据

*

* @param id 传感器编号

* @param ts 时间戳

* @param vc 空高

*/

case class WaterSensor(id: String, ts: Long, vc: Double)

}输出结果:

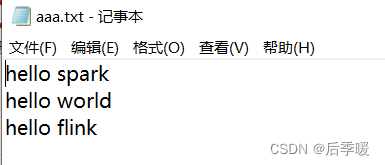

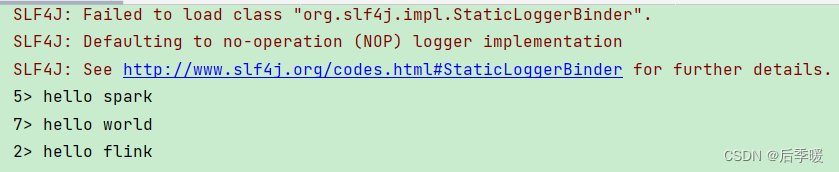

2.从文件中读取数据

// *******读取文件创建DataStream*******

val ds = env.readTextFile("d:/aaa.txt")

ds.print()

3.读取socket端口创建DataStream

打开虚拟机 输入:nc -lp 9999

扫描二维码关注公众号,回复:

14643764 查看本文章

val ds = env.socketTextStream("192.168.189.20",9999)

ds.print()然后执行程序 我们就可以读到端口输入的内容啦

4.读取kafka数据(要加依赖 上面我们已经加过了!)

先在kafka中创建主题,打开生产端生产数据,然后我们就可以

//*******读取kafka*******

// val prop = new Properties()

// prop.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.189.20:9092")

// prop.setProperty(ConsumerConfig.GROUP_ID_CONFIG,"cm")

// prop.setProperty(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG,classOf[StringDeserializer].getName)

// prop.setProperty(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,classOf[StringDeserializer].getName)

//

// val ds = env.addSource(

// new FlinkKafkaConsumer[String](

// "mydemo02",

// new SimpleStringSchema(),

// prop

// ).setStartFromEarliest()

// )

// ds.print()5.自定义数据源

读取mysql数据源

// *********读取mysql数据库(自定义源)*********

var ds =env.addSource(new RichSourceFunction[WaterSensor] {

var flag = false

var conn:Connection = _

var pstat:PreparedStatement = _

//在构建对象时候只会执行1次

override def open(parameters: Configuration): Unit = {

Class.forName("com.mysql.cj.jdbc.Driver")

conn = DriverManager.getConnection("jdbc:mysql://192.168.189.20:3306/mydemo","root","ok")

pstat = conn.prepareStatement("select * from watersensor")

}

//每次读取数据库都会执行 每行数据就执行一次

override def run(sourceContext: SourceFunction.SourceContext[WaterSensor]): Unit = {

if(!flag){

val rs = pstat.executeQuery()

while (rs.next()) {

val id = rs.getString("id")

val ts = rs.getLong("ts")

val vc = rs.getDouble("vc")

//将数据库中获取的数据转为WaterSensor 然后再输出

sourceContext.collect(WaterSensor(id, ts, vc))

}

}

}

//退出时候执行一次

override def cancel(): Unit = {

flag = true

pstat.close()

conn.close()

}

})

ds.print()

env.execute("first001")读取redis数据源

//读取redis

env.addSource(new RichSourceFunction[WaterSensor] {

var flag = false

var jedis:Jedis = _

override def open(parameters: Configuration): Unit = {

jedis = new Jedis("192.168.189.20")

}

override def run(sourceContext: SourceFunction.SourceContext[WaterSensor]): Unit = {

import scala.collection.JavaConversions._

if (!flag) {

val infos = jedis.lrange("temps", 0, 3)

infos.foreach(line => {

val params = line.split(",", -1)

sourceContext.collect(WaterSensor(params(0), params(1).toLong, params(2).toDouble))

})

}

}

override def cancel(): Unit = {

flag = true

jedis.close()

}

}).print()

env.execute("first001")读取hbase数据源数据

//读取hbase

val ds=env.addSource(new RichSourceFunction[WaterSensor] {

var flag= false

var conn:Connection= _

var table:Table= _

var scan:Scan= _

var results:ResultScanner= _

override def open(parameters: Configuration): Unit = {

val conf =HBaseConfiguration.create()

conf.set("hbase.zookeeper.quorum","192.168.189.20:2181")

conn=ConnectionFactory.createConnection(conf)

table=conn.getTable(TableName.valueOf("mydemo:WaterSensor"))

scan=new Scan()

}

override def run(sourceContext: SourceFunction.SourceContext[WaterSensor]): Unit = {

results=table.getScanner(scan)

val iter = results.iterator()

if (!flag){

while (iter.hasNext){

val result = iter.next()

//获取rowkey

val rowkey = Bytes.toString(result.getRow)

//获取列簇下面的修饰符

val ts = Bytes.toString(result.getValue("base".getBytes(), "ts".getBytes()))

val vc=Bytes.toString(result.getValue("base".getBytes(),"vc".getBytes()))

sourceContext.collect(WaterSensor(rowkey,ts.toLong,vc.toDouble))}

}

}

override def cancel(): Unit ={

flag=true

table.close()

conn.close()}

})

ds.print()

env.execute("first")读取mongodb数据源数据

val ds= env.addSource(new RichSourceFunction[WaterSensor] {

var flag=false

var client:MongoClient= _

var database:MongoDatabase= _

override def open(parameters: Configuration): Unit = {

client=new MongoClient("192.168.30.181",27017)

database=client.getDatabase("mydemo")}

override def run(sourceContext: SourceFunction.SourceContext[WaterSensor]): Unit = {

val coll = database.getCollection("watersensor")

val iter = coll.find().iterator()

if (!flag){

while (iter.hasNext){

val infos = iter.next()

sourceContext.collect(WaterSensor(infos.get("id").toString,

infos.get("ts").toString.toDouble.toLong,infos.get("vc").toString.toDouble))}}}

override def cancel(): Unit = {

flag=true

client.close()}})

ds.print()

env.execute("one")}}