Integrating Heketi into K8s to Use GlusterFS

This article shows how to use GlusterFS from K8s through Heketi. Heketi is a management service for GlusterFS that exposes a REST API.

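I. Preparing GlusterFS

Omitted here. Prepare an installed GlusterFS cluster by following the article "Centos7下glusterfs分布式存储集群安装与使用测试" (installing and testing a GlusterFS distributed storage cluster on CentOS 7). Follow it through installation, startup, joining the nodes into a cluster, and formatting and mounting the disks. Creating volumes can wait.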

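II. Deploying and installing Heketi

Heketi can be deployed in one of two ways: directly on a virtual machine, or as a container on K8s. Either one is enough. The Heketi service is only needed when a PVC is requested; once the request has succeeded, K8s keeps working normally even if Heketi goes down.

1. Deployment option 1: on a regular virtual machine

In this example Heketi is deployed on 192.168.56.101. It is installed on the VM directly with yum.

(1) Install the Heketi server and heketi-cli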

yum list heketi --showduplicates | sort -r

yum install -y heketi

yum install -y heketi-client

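(2) Passwordless SSH

Configure the VM running Heketi so that it can log in to the three GlusterFS servers without a password. Because my Heketi is also deployed on 192.168.56.101, ssh-copy-id 192.168.56.101 amounts to passwordless login to the local machine.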

ssh-keygen -t rsa -P ''

ssh-copy-id 192.168.56.101

ssh-copy-id 192.168.56.102

ssh-copy-id 192.168.56.103

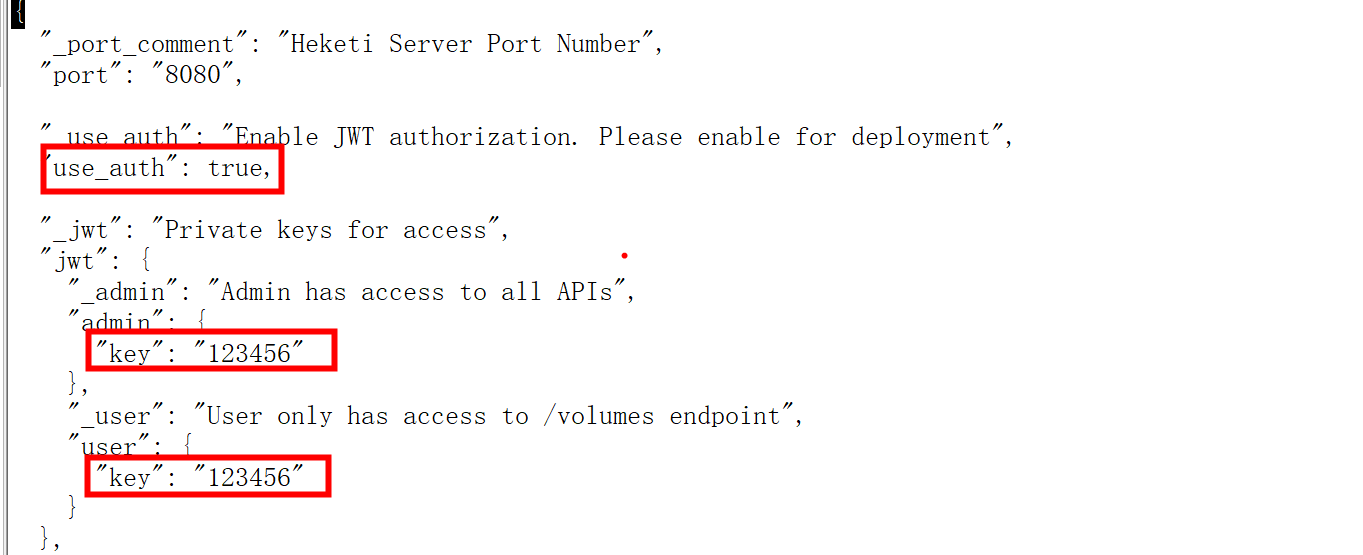

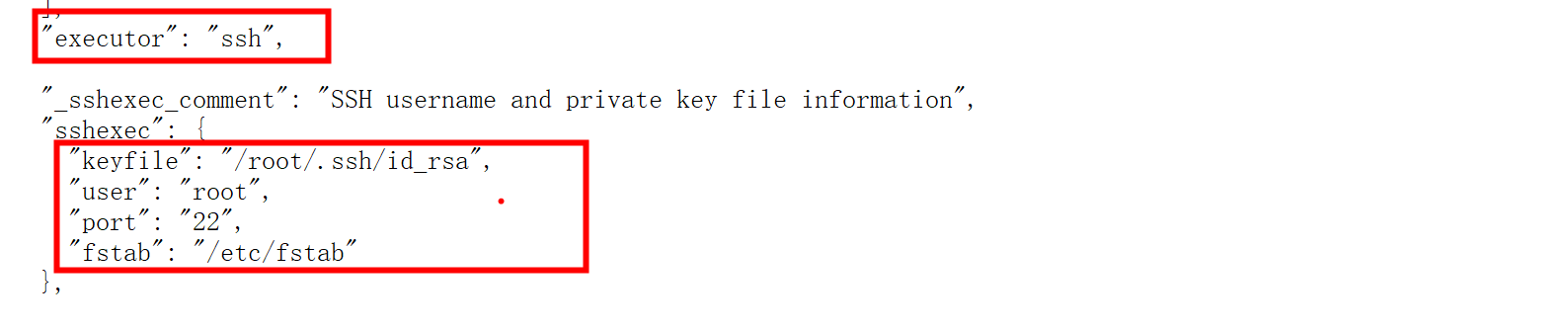
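Passwordless login can be verified for all three servers in one pass. The sketch below assumes the key was copied for the current user; the function name check_passwordless is made up for this example. BatchMode=yes makes ssh fail instead of prompting, so any host that still needs a password is reported.

```shell
# Hosts from this article; adjust to your own GlusterFS nodes.
GLUSTER_HOSTS="192.168.56.101 192.168.56.102 192.168.56.103"

# Print every host that cannot be reached without a password.
# BatchMode=yes disables password prompts, so ssh exits non-zero instead.
check_passwordless() {
    failed=""
    for host in "$@"; do
        if ! ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            failed="$failed $host"
        fi
    done
    echo "$failed" | sed 's/^ //'
}

# Usage (run on the Heketi host); empty output means every host is fine:
#   check_passwordless $GLUSTER_HOSTS
```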

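(3) Heketi configuration

cd /etc/heketi/

## Back up the original configuration

cp heketi.json heketi.json.default

## Edit the configuration: set "use_auth": true, change the key to 123456 (used below as the admin secret), set executor to "ssh", and fill in the matching ssh settings.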

vi /etc/heketi/heketi.json

...

(4) Start the Heketi service

## Enable the service at boot

systemctl enable heketi

## Edit the unit file (vi /usr/lib/systemd/system/heketi.service) and change the service user to root

[root@localhost ~]# more /usr/lib/systemd/system/heketi.service

[Service]

User=root

## Reload the unit file and start the service

systemctl daemon-reload

systemctl start heketi && systemctl status heketi

(5) Test the Heketi service

If the request returns Hello from Heketi, the service is working.

[root@localhost ~]# curl http://192.168.56.101:8080/hello

Hello from Heketi[root@localhost ~]#
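Right after systemctl start, the service may need a moment before it answers. A minimal sketch of a polling helper built on the same /hello check follows; the function name and the default of 30 attempts at 2-second intervals are arbitrary choices for this example.

```shell
# Poll the /hello endpoint until Heketi answers or the attempts run out.
# Arguments: base URL, number of attempts (default 30), delay in seconds (default 2).
wait_for_heketi() {
    url="$1"; attempts="${2:-30}"; delay="${3:-2}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        if [ "$(curl -fsS "$url/hello" 2>/dev/null)" = "Hello from Heketi" ]; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Usage:
#   wait_for_heketi http://192.168.56.101:8080 && echo "heketi is up"
```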

(6) Create the cluster with heketi-cli

## List clusters

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 cluster list

## Create a cluster

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 --json cluster create

[root@localhost ~]# heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 --json cluster create

{"id":"2bfe06768a30c3e464065a86494f663a","nodes":[],"volumes":[],"block":true,"file":true,"blockvolumes":[]}

## Add nodes

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 --json node add --cluster "2bfe06768a30c3e464065a86494f663a" --management-host-name 192.168.56.101 --storage-host-name 192.168.56.101 --zone 1

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 --json node add --cluster "2bfe06768a30c3e464065a86494f663a" --management-host-name 192.168.56.102 --storage-host-name 192.168.56.102 --zone 1

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 --json node add --cluster "2bfe06768a30c3e464065a86494f663a" --management-host-name 192.168.56.103 --storage-host-name 192.168.56.103 --zone 1

## List the nodes after adding them

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 node list

[root@localhost ~]# heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 node list

Id:3128158ceb40e97d51a23fbe3e652bcf Cluster:2bfe06768a30c3e464065a86494f663a

Id:3e6c3bc3c079015e239bfdf2f3b03726 Cluster:2bfe06768a30c3e464065a86494f663a

Id:6df7f798e29c049b40e03f9c20d4eb19 Cluster:2bfe06768a30c3e464065a86494f663a

## Add disk devices

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 device add --name "/dev/sdb" --node 3128158ceb40e97d51a23fbe3e652bcf --destroy-existing-data

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 device add --name "/dev/sdb" --node 3e6c3bc3c079015e239bfdf2f3b03726 --destroy-existing-data

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 device add --name "/dev/sdb" --node 6df7f798e29c049b40e03f9c20d4eb19 --destroy-existing-data
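Instead of copying each node ID by hand, the IDs can be read from the node list output shown above. This is a sketch only: the helper names hcli, node_ids and add_device_to_all_nodes and the HEKETI_BIN variable are invented for this example, and the parsing assumes the "Id:<node-id> Cluster:<cluster-id>" line format printed above. The --destroy-existing-data flag is left out on purpose; add it only if the disks may be wiped.

```shell
# Path or name of the CLI binary; overridable so the helpers can be tried against a stub.
HEKETI_BIN="${HEKETI_BIN:-heketi-cli}"

# Wrapper that carries the connection flags used throughout this article.
hcli() {
    "$HEKETI_BIN" --user admin --secret 123456 --server http://192.168.56.101:8080 "$@"
}

# Extract the node IDs from `node list` output read on stdin.
node_ids() {
    sed -n 's/^Id:\([0-9a-f]*\)[[:space:]].*/\1/p'
}

# Register the same device path on every node known to Heketi.
add_device_to_all_nodes() {
    device="$1"
    hcli node list | node_ids | while read -r id; do
        # </dev/null keeps the CLI from consuming the loop's input
        hcli device add --name "$device" --node "$id" </dev/null
    done
}

# Usage:
#   add_device_to_all_nodes /dev/sdb
```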

## Show cluster information

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 topology info
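The --server, --user and --secret flags repeated in every command can also be supplied through the environment variables that heketi-cli reads. The values below are the ones used in this article.

```shell
# Connection settings picked up by heketi-cli when the flags are omitted.
export HEKETI_CLI_SERVER=http://192.168.56.101:8080
export HEKETI_CLI_USER=admin
export HEKETI_CLI_KEY=123456

# With these exported, the commands shrink to, for example:
#   heketi-cli cluster list
#   heketi-cli topology info
```

Keeping the key in a shell profile exposes it to anyone who can read that file, so exporting it only in the current session is the safer choice.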

## Delete a disk device

Run device disable first, then device remove, then device delete.
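The three steps can be wrapped so that a failure stops the sequence. A sketch under the same connection settings as above; hcli, retire_device and HEKETI_BIN are names invented for this example, and the device ID has to be taken from the topology info output.

```shell
# Path or name of the CLI binary; overridable for dry runs against a stub.
HEKETI_BIN="${HEKETI_BIN:-heketi-cli}"

hcli() {
    "$HEKETI_BIN" --user admin --secret 123456 --server http://192.168.56.101:8080 "$@"
}

# Retire one device: stop new allocations on it, remove it from use,
# then delete it from Heketi. Each step runs only if the previous one succeeded.
retire_device() {
    device_id="$1"
    hcli device disable "$device_id" &&
    hcli device remove "$device_id" &&
    hcli device delete "$device_id"
}

# Usage (replace the placeholder with a real device ID):
#   retire_device <device-id>
```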

## Delete the cluster (its disk devices must be deleted first)

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 --json cluster delete 2bfe06768a30c3e464065a86494f663a

The cluster can also be created from a configuration file, for example:

cat > /etc/heketi/topology.json << EOF
{
  "clusters": [
    {
      "nodes": [
        {
          "node": {
            "hostnames": {
              "manage": ["192.168.56.101"],
              "storage": ["192.168.56.101"]
            },
            "zone": 1
          },
          "devices": [
            { "name": "/dev/sdb", "destroydata": false }
          ]
        },
        {
          "node": {
            "hostnames": {
              "manage": ["192.168.56.102"],
              "storage": ["192.168.56.102"]
            },
            "zone": 1
          },
          "devices": [
            { "name": "/dev/sdb", "destroydata": false }
          ]
        },
        {
          "node": {
            "hostnames": {
              "manage": ["192.168.56.103"],
              "storage": ["192.168.56.103"]
            },
            "zone": 1
          },
          "devices": [
            { "name": "/dev/sdb", "destroydata": false }
          ]
        }
      ]
    }
  ]
}
EOF

heketi-cli --user admin --secret 123456 --server http://192.168.56.101:8080 topology load --json=/etc/heketi/topology.json

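(7) Extra steps for a non-root account

If you have root access, skip this step.

If Heketi does not run as root and uses ssh, extra setup is needed, such as granting sudo rights.

Remote root login may be disabled, so a different user such as heketi is sometimes used to run the service. That user then needs sudo rights.

# Grant sudo rights to the heketi user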

[root@k8s-master heketi]# echo "heketi ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers

The start command then becomes:

sudo systemctl start heketi

The Heketi configuration and data directories also need to be owned by that user, for example:

chown heketi:heketi /etc/heketi/ -R

chown heketi:heketi /var/lib/heketi -R
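Appending to /etc/sudoers directly, as above, leaves no safety net if the line contains a typo. A more cautious variant, sketched below, writes the same rule to a drop-in file and lets visudo check it first. It assumes /etc/sudoers includes /etc/sudoers.d (the CentOS 7 default); the file name heketi and the function name are arbitrary.

```shell
# Write the sudo rule to a temporary file, syntax-check it when visudo is
# available, then install it with the restrictive mode sudo expects.
install_heketi_sudoers() {
    target="${1:-/etc/sudoers.d/heketi}"
    tmp="$(mktemp)" || return 1
    echo 'heketi ALL=(ALL) NOPASSWD: ALL' > "$tmp"
    if command -v visudo >/dev/null 2>&1 && ! visudo -cf "$tmp" >/dev/null; then
        echo "sudoers syntax check failed" >&2
        rm -f "$tmp"
        return 1
    fi
    install -m 0440 "$tmp" "$target" && rm -f "$tmp"
}

# Usage (as root):
#   install_heketi_sudoers
```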

Reference: https://www.cnblogs.com/zhaojiedi1992/p/zhaojiedi_liunx_54_kubernates_glusterfs-heketi.html

2. Deployment option 2: as a container on K8s

(1) Build the Heketi container

Pull the CentOS 7.6 image

docker pull centos:7.6.1810

Start a container

## Start the container (the image is referenced through the registry at 192.168.56.1:6000)

docker run -itd --name centos7.6 --privileged=true 192.168.56.1:6000/centos:7.6.1810 /usr/sbin/init

## Enter the container

## 2741ea603980 is the ID of the container started above

docker exec -it 2741ea603980 /bin/bash

Install Heketi inside the container

yum install -y heketi

yum install -y heketi-client

yum install -y openssh openssh-clients

## Then set up passwordless login

ssh-keygen -t rsa -P ''

ssh-copy-id 192.168.56.101

ssh-copy-id 192.168.56.102

ssh-copy-id 192.168.56.103

## Heketi configuration

Follow the Heketi configuration step from deployment option 1 (regular virtual machine)

and prepare /etc/heketi/heketi.json

## Test that Heketi starts

/usr/bin/heketi --config=/etc/heketi/heketi.json

## Stop the container

docker stop 2741ea603980

Commit the container with Heketi installed as an image:

docker commit -a "liu" -m "sendi_heketi" 2741ea603980 sendi_heketi:latest

(2) Write a Dockerfile based on sendi_heketi:latest

cat > Dockerfile_heketi <<EOF

FROM sendi_heketi:latest

ENV LANG="en_US.UTF-8"

EXPOSE 8080

ENTRYPOINT /usr/bin/heketi --config=/etc/heketi/heketi.json

EOF

## Build the myheketi:1.0 image

docker build -t myheketi:1.0 -f Dockerfile_heketi .

(3) Test the myheketi:1.0 image

docker run -itd --name myheketi --privileged=true myheketi:1.0 /usr/sbin/init

docker exec -it b147a435bbb0 /bin/bash

## Check that heketi-cli works inside the container

heketi-cli --user admin --secret 123456 --server http://127.0.0.1:8080 --json cluster list

(4) Deploy Heketi on K8s

With the myheketi:1.0 image built above, Heketi can now be deployed on K8s.

Prepare the yaml file:

###### Prepare the yaml deployment file ######

cat > heketi-deployment.yaml <<EOF
# ------------------- heketi Deployment ------------------- #
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: heketi
  name: heketi
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: heketi
  template:
    metadata:
      labels:
        app: heketi
    spec:
      containers:
      - image: myheketi:1.0
        imagePullPolicy: IfNotPresent
        name: heketi
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
---
# ------------------- heketi Service ------------------- #
kind: Service
apiVersion: v1
metadata:
  labels:
    app: heketi
  name: heketi
  namespace: default
spec:
  ports:
  - port: 8080
    targetPort: 8080
  selector:
    app: heketi
  externalIPs:
  - 192.168.56.101
EOF

Deploy:

## Deploy

kubectl apply -f heketi-deployment.yaml

III. Integrating Heketi-managed GlusterFS into K8s

Reference: https://kubernetes.io/docs/concepts/storage/storage-classes/#glusterfs

Note: the K8s worker nodes need the Gluster client installed: yum install -y glusterfs-fuse

1. Create the secret in K8s

##1.

cat > glusterfs_secret.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: heketi-secret
  namespace: default
data:
  # base64 encoded password. E.g.: echo -n "123456" | base64
  key: MTIzNDU2
type: kubernetes.io/glusterfs
EOF

##2.

kubectl apply -f glusterfs_secret.yaml

##3.

kubectl get secret -n default

[root@localhost ~]# kubectl get secret -n default
NAME                  TYPE                                  DATA   AGE
default-token-mg9nr   kubernetes.io/service-account-token   3      6m18s
heketi-secret         kubernetes.io/glusterfs               1      30s
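The key value in the Secret is just the Heketi admin key in base64. A small sketch for producing and checking it; encode_key and decode_key are helper names made up for this example.

```shell
# Encode a Heketi key for the Secret's data.key field.
# printf '%s' avoids the trailing newline that a plain echo would add.
encode_key() { printf '%s' "$1" | base64; }

# Decode a value copied from a Secret back to plain text.
decode_key() { printf '%s' "$1" | base64 -d; }

encode_key 123456      # prints MTIzNDU2
decode_key MTIzNDU2    # prints 123456
```

A stray newline in the encoded value is an easy mistake to make and leads to authentication failures against Heketi, so decoding the stored value is a quick sanity check.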

2. Create the storageclass in K8s

allowVolumeExpansion: true allows volumes created from this storage class to be expanded dynamically.

##1.

cat > glusterfs_storageclass.yaml <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: glusterfs
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "http://192.168.56.101:8080"
  clusterid: "2bfe06768a30c3e464065a86494f663a"
  restauthenabled: "true"
  restuser: "admin"
  secretNamespace: "default"
  secretName: "heketi-secret"
  gidMin: "40000"
  gidMax: "50000"
  volumetype: "none"
allowVolumeExpansion: true
EOF

##2.

kubectl apply -f glusterfs_storageclass.yaml

[root@localhost ~]# kubectl get StorageClass --all-namespaces
NAME        PROVISIONER               RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
glusterfs   kubernetes.io/glusterfs   Delete          Immediate           false                  4m56s
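The listing above reports false in the ALLOWVOLUMEEXPANSION column, so it is worth confirming what the cluster actually stored. A minimal sketch; the function name is invented for this example.

```shell
# Print the allowVolumeExpansion field of a StorageClass.
# Prints "true" when expansion is enabled and nothing when the field is unset.
sc_allows_expansion() {
    kubectl get storageclass "$1" -o jsonpath='{.allowVolumeExpansion}'
}

# Usage:
#   sc_allows_expansion glusterfs
#
# Once expansion is enabled and a bound claim exists, the claim can be grown
# by raising its storage request, for example:
#   kubectl patch pvc mysql-claim -n liutest \
#     -p '{"spec":{"resources":{"requests":{"storage":"2Gi"}}}}'
```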

3. Test the storageclass with a pod

MySQL is used as the example:

(1). Create a namespace

kubectl create namespace liutest

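(2). Create a PersistentVolumeClaim

Set volume.beta.kubernetes.io/storage-class to the name of the storageclass created earlier, glusterfs. A namespace can be specified as well. This annotation is the older form; current Kubernetes versions use the spec.storageClassName field for the same purpose.

## Prepare the yaml file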

cat > mysql-claim.yaml <<EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: mysql-claim
  annotations:
    volume.beta.kubernetes.io/storage-class: "glusterfs"
  namespace: liutest
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
EOF

## Apply

kubectl apply -f mysql-claim.yaml

## Check

kubectl get PersistentVolumeClaim -n liutest
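Dynamic provisioning through Heketi is not instantaneous, so the claim may show Pending for a short while before it becomes Bound. A sketch of a wait helper; the function name and the default timing values are arbitrary choices for this example.

```shell
# Wait until a PVC reports phase "Bound".
# Arguments: claim name, namespace, attempts (default 30), delay in seconds (default 2).
wait_for_pvc_bound() {
    name="$1"; ns="$2"; attempts="${3:-30}"; delay="${4:-2}"
    i=0
    phase=""
    while [ "$i" -lt "$attempts" ]; do
        phase="$(kubectl get pvc "$name" -n "$ns" -o jsonpath='{.status.phase}' 2>/dev/null)"
        [ "$phase" = "Bound" ] && return 0
        i=$((i + 1))
        sleep "$delay"
    done
    echo "PVC $ns/$name is still '$phase'; try: kubectl describe pvc $name -n $ns" >&2
    return 1
}

# Usage:
#   wait_for_pvc_bound mysql-claim liutest
```

If the claim never binds, the events shown by kubectl describe usually point to the cause, such as Heketi being unreachable or the secret being wrong.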

(3). Create a Pod that uses the PersistentVolumeClaim created above

Note: a Deployment is used here for testing. For production, deploying MySQL as a StatefulSet is recommended.

cat > mysql-deployment.yaml <<EOF
# ------------------- mysql Deployment ------------------- #
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: mysql
  name: mysql
  namespace: liutest
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      volumes:
      - name: mysql-data
        persistentVolumeClaim:
          claimName: mysql-claim
      containers:
      - env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
        - name: MYSQL_USER
          value: "sendinmuser"
        - name: MYSQL_PASSWORD
          value: "sendinmpw"
        image: "192.168.56.1:6000/mysql:5.6"
        imagePullPolicy: IfNotPresent
        name: mysql
        ports:
        - containerPort: 3306
          protocol: TCP
          name: http
        volumeMounts:
        - name: mysql-data
          mountPath: /var/lib/mysql
---
# ------------------- mysql Service ------------------- #
kind: Service
apiVersion: v1
metadata:
  labels:
    app: mysql
  name: mysql
  namespace: liutest
spec:
  type: NodePort
  ports:
  - port: 3306
    targetPort: 3306
    nodePort: 31306
  selector:
    app: mysql
EOF

## Apply

kubectl apply -f mysql-deployment.yaml

## Check status

[root@localhost ~]# kubectl get pods -n liutest -o wide
NAME                    READY   STATUS    RESTARTS   AGE     IP               NODE        NOMINATED NODE   READINESS GATES
mysql-948f99cd7-mzxtp   1/1     Running   0          3m30s   10.244.169.131   k8s-node2   <none>           <none>

[root@localhost ~]# kubectl get svc -n liutest -o wide
NAME                                                     TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE     SELECTOR
glusterfs-dynamic-32209f26-b267-4dc9-83f6-bead01a56c3a   ClusterIP   10.108.40.125   <none>        1/TCP            7m11s   <none>
mysql                                                    NodePort    10.103.68.3     <none>        3306:31306/TCP   3m31s   app=mysql

IV. Miscellaneous

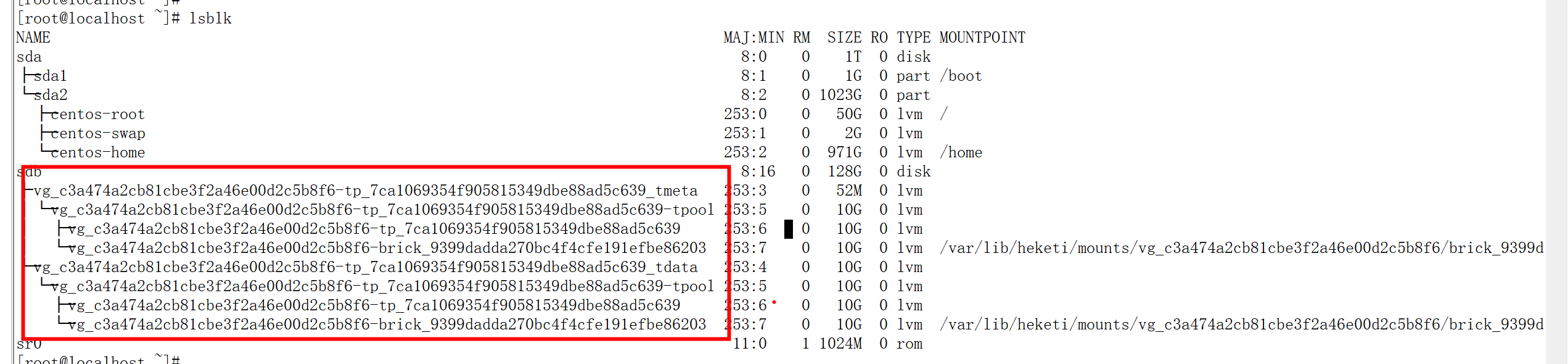

1.mkfs.xfs: cannot open /dev/sdb: Device or resource busy

Reference: https://www.cnblogs.com/30go/p/15233966.html

When formatting a disk for GlusterFS, the error above may appear. The fix is:

lsblk

dmsetup remove ***

For example: dmsetup remove vg_c3a474a2cb81cbe3f2a46e00d2c5b8f6-tp_7ca1069354f905815349dbe88ad5c639_tmeta
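When several leftover mappings exist, picking them out by hand gets tedious. The sketch below filters the device-mapper names that start with vg_, the prefix seen in the example above, and prints the removal commands as a dry run. The function name is invented for this example, and the filter assumes that no other volume group on the host uses the same prefix.

```shell
# Read `dmsetup ls` output on stdin and print the mapping names that
# belong to volume groups named vg_<id>.
stale_heketi_dm_names() {
    awk '$1 ~ /^vg_/ { print $1 }'
}

# Dry run: print the commands instead of executing them.
#   dmsetup ls | stale_heketi_dm_names | sed 's/^/dmsetup remove /'
```

Review the printed list before running anything, since dmsetup remove on a mapping that is still in use destroys access to that data.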