近两年,越来越多的企业在生产环境中,基于Docker、Kubernetes构建容器云平台,例如国内阿里巴巴、腾讯、京东、奇虎360等公司。互联网公司使用容器技术份额在持续上升,企业容器化部署已成为趋势。

一、Kubernetes概述

1、Kubernetes简介

Kubernetes是一个轻便的和可扩展的开源平台,用于管理容器化应用和服务。通过Kubernetes能够进行应用的自动化部署和扩缩容。在Kubernetes中,会将组成应用的容器组合成一个逻辑单元以更易管理和发现。Kubernetes积累了作为Google生产环境运行工作负载15年的经验,并吸收了来自于社区的最佳想法和实践。Kubernetes经过这几年的快速发展,形成了一个大的生态环境,Google在2014年将Kubernetes作为开源项目。Kubernetes的关键特性包括:

- 自动化装箱:在不牺牲可用性的条件下,基于容器对资源的要求和约束自动部署容器。同时,为了提高利用率和节省更多资源,将关键和最佳工作量结合在一起。

- 自愈能力:当容器失败时,会对容器进行重启;当所部署的Node节点有问题时,会对容器进行重新部署和重新调度;当容器未通过监控检查时,会关闭此容器;直到容器正常运行时,才会对外提供服务。

- 水平扩容:通过简单的命令、用户界面或基于CPU的使用情况,能够对应用进行扩容和缩容。

- 服务发现和负载均衡:开发者不需要使用额外的服务发现机制,就能够基于Kubernetes进行服务发现和负载均衡。

- 自动发布和回滚:Kubernetes能够程序化的发布应用和相关的配置。如果发布有问题,Kubernetes将能够回归发生的变更。

- 保密和配置管理:在不需要重新构建镜像的情况下,可以部署和更新保密和应用配置。

- 存储编排:自动挂接存储系统,这些存储系统可以来自于本地、公共云提供商(例如:GCP和AWS)、网络存储(例如:NFS、iSCSI、Gluster、Ceph、Cinder和Floker等)。

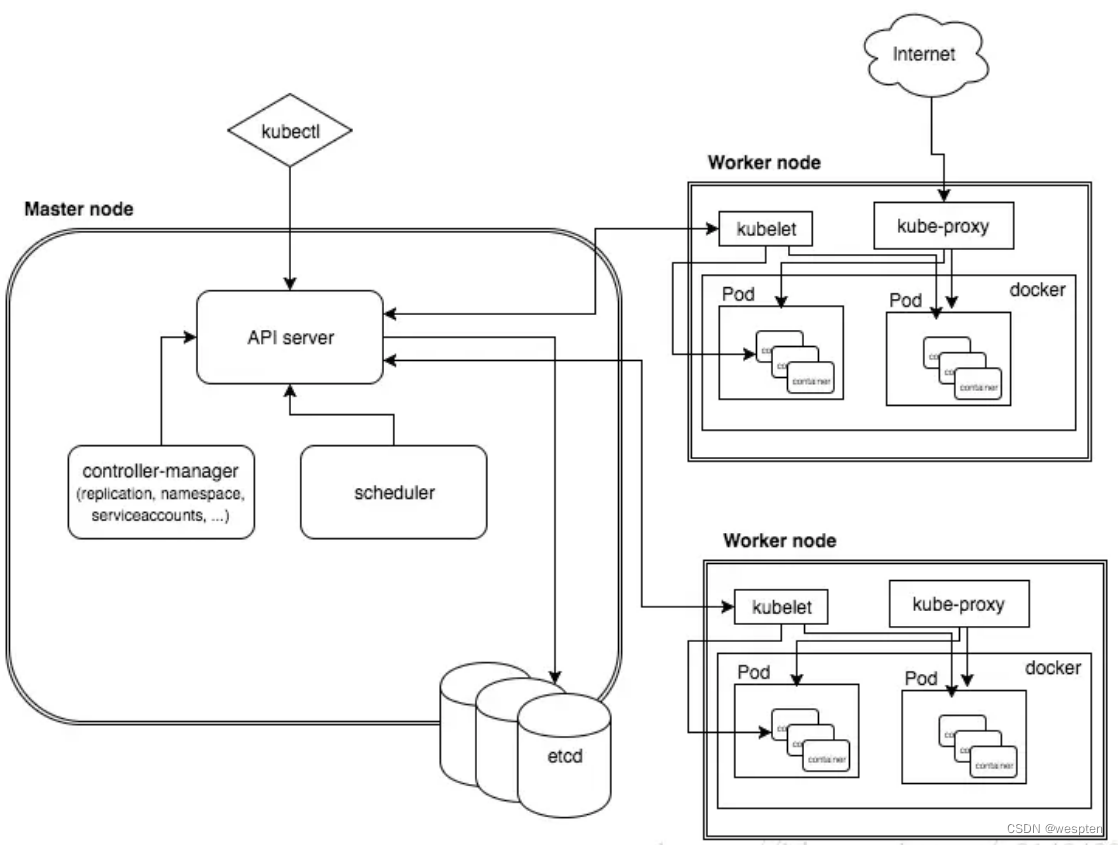

2、Kubernetes整体架构

- Master节点: 作为控制节点,对集群进行调度管理;Master由kube-apiserver、kube-scheduler和kube-controller-manager所组成。

- Node节点: 作为真正的工作节点,运行业务应用的容器;Node包含kubelet、kube-proxy和Container Runtime;kubectl用于通过命令行与API Server进行交互,而对Kubernetes进行操作,实现在集群中进行各种资源的增删改查等操作。

- ETCD:是Kubernetes用来备份所有集群数据的数据库。它存储集群的整个配置和状态。主节点查询etcd以检索节点,容器和容器的状态参数。

- Add-on:是对Kubernetes核心功能的扩展,例如增加网络和网络策略等能力。

1. Master节点

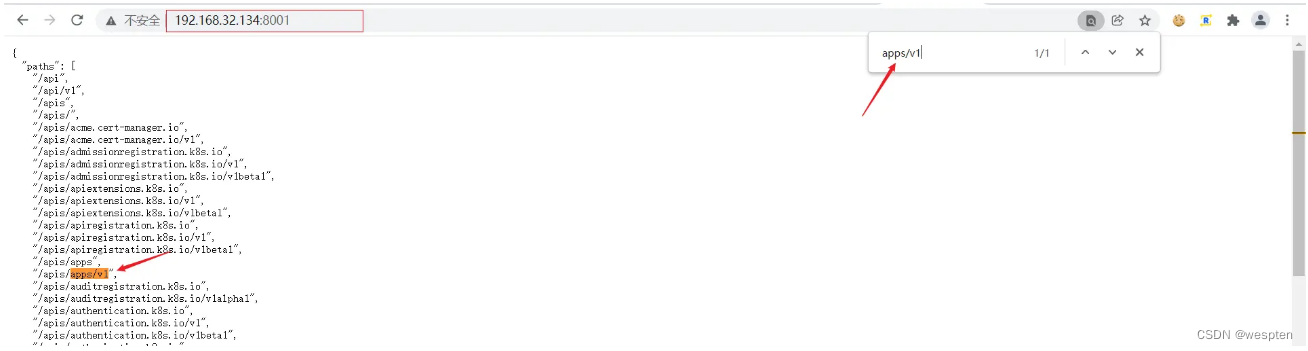

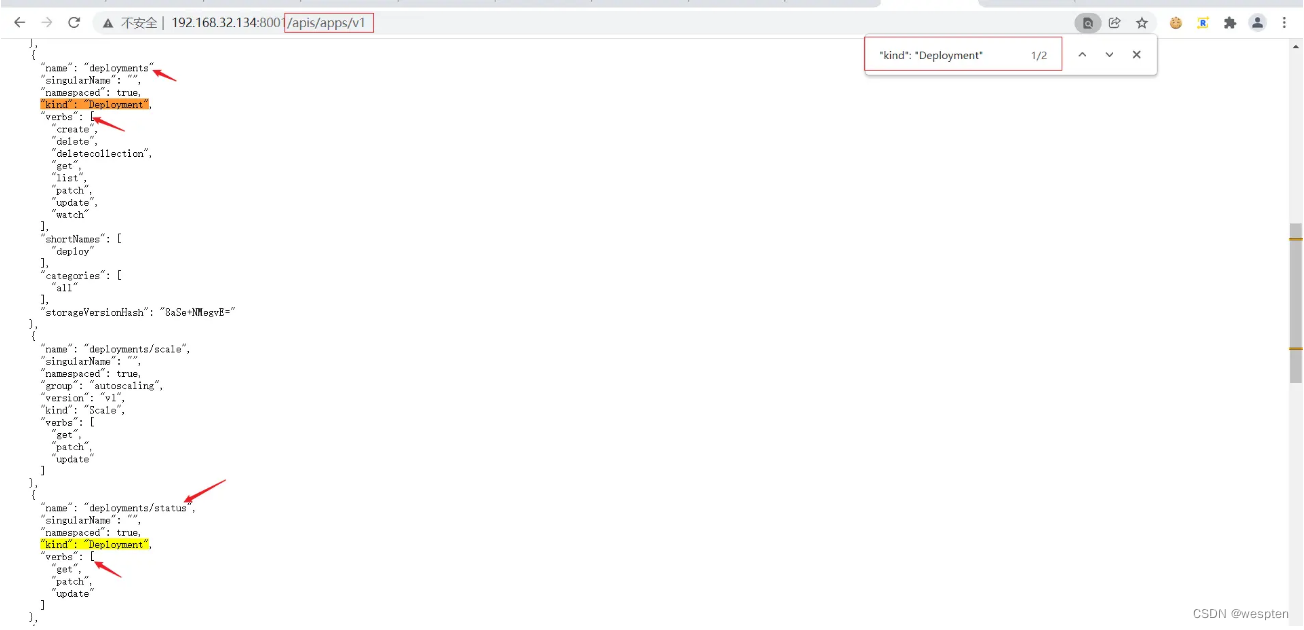

kube-apiserver:主要用来处理REST的操作,确保它们生效,并执行相关业务逻辑,以及更新etcd(或者其他存储)中的相关对象。API Server是所有REST命令的入口,它的相关结果状态将被保存在etcd(或其他存储)中。API Server的基本功能包括:

- REST语义,监控,持久化和一致性保证,API 版本控制,放弃和生效

- 内置准入控制语义,同步准入控制钩子,以及异步资源初始化

- API注册和发现

另外,API Server也作为集群的网关。默认情况,客户端通过API Server对集群进行访问,客户端需要通过认证,并使用API Server作为访问Node和Pod(以及service)的堡垒和代理/通道。

kube-controller-manager:用于执行大部分的集群层次的功能,它既执行生命周期功能(例如:命名空间创建和生命周期、事件垃圾收集、已终止垃圾收集、级联删除垃圾收集、node垃圾收集),也执行API业务逻辑(例如:pod的弹性扩容)。控制管理提供自愈能力、扩容、应用生命周期管理、服务发现、路由、服务绑定和提供。Kubernetes默认提供Replication Controller、Node Controller、Namespace Controller、Service Controller、Endpoints Controller、Persistent Controller、DaemonSet Controller等控制器。

kube-scheduler:scheduler组件为容器自动选择运行的主机。依据请求资源的可用性,服务请求的质量等约束条件,scheduler监控未绑定的pod,并将其绑定至特定的node节点。Kubernetes也支持用户自己提供的调度器,Scheduler负责根据调度策略自动将Pod部署到合适Node中,调度策略分为预选策略和优选策略,Pod的整个调度过程分为两步:

- 预选Node:遍历集群中所有的Node,按照具体的预选策略筛选出符合要求的Node列表。如没有Node符合预选策略规则,该Pod就会被挂起,直到集群中出现符合要求的Node。

- 优选Node:预选Node列表的基础上,按照优选策略为待选的Node进行打分和排序,从中获取最优Node。

2. Node节点

kubelet:Kubelet是Kubernetes中最主要的控制器,它是Pod和Node API的主要实现者,Kubelet负责驱动容器执行层。在Kubernetes中,应用容器彼此是隔离的,并且与运行其的主机也是隔离的,这是对应用进行独立解耦管理的关键点。

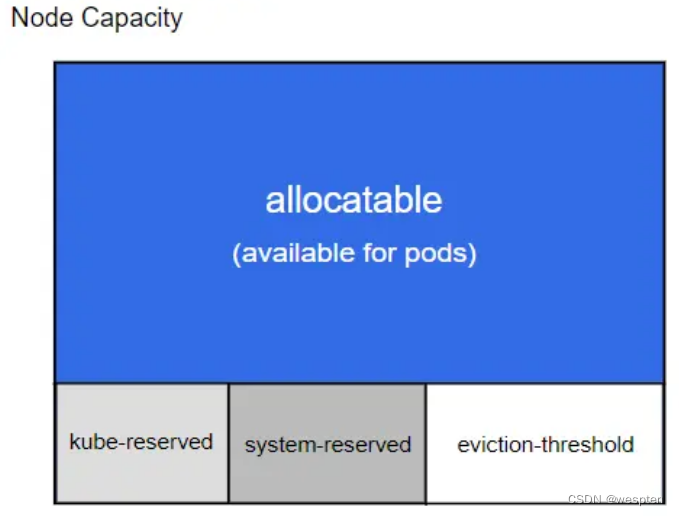

在Kubernets中,Pod作为基本的执行单元,它可以拥有多个容器和存储数据卷,能够方便在每个容器中打包一个单一的应用,从而解耦了应用构建时和部署时的所关心的事项,已经能够方便在物理机/虚拟机之间进行迁移。API准入控制可以拒绝或者Pod,或者为Pod添加额外的调度约束,但是Kubelet才是Pod是否能够运行在特定Node上的最终裁决者,而不是scheduler或者DaemonSet。kubelet默认情况使用cAdvisor进行资源监控。负责管理Pod、容器、镜像、数据卷等,实现集群对节点的管理,并将容器的运行状态汇报给Kubernetes API Server。

Container Runtime:每一个Node都会运行一个Container Runtime,其负责下载镜像和运行容器。Kubernetes本身并不停容器运行时环境,但提供了接口,可以插入所选择的容器运行时环境。kubelet使用Unix socket之上的gRPC框架与容器运行时进行通信,kubelet作为客户端,而CRI shim作为服务器。常用于docker。不过1.24版本已经不支持docker-shim。

protocol buffers API提供两个gRPC服务,ImageService和RuntimeService。ImageService提供拉取、查看、和移除镜像的RPC。RuntimeSerivce则提供管理Pods和容器生命周期管理的RPC,以及与容器进行交互(exec/attach/port-forward)。容器运行时能够同时管理镜像和容器(例如:Docker和Rkt),并且可以通过同一个套接字提供这两种服务。在Kubelet中,这个套接字通过–container-runtime-endpoint和–image-service-endpoint字段进行设置。Kubernetes CRI支持的容器运行时包括docker、rkt、cri-o、frankti、kata-containers和clear-containers等。

kube-proxy:基于一种公共访问策略(例如:负载均衡),服务提供了一种访问一群pod的途径。此方式通过创建一个虚拟的IP来实现,客户端能够访问此IP,并能够将服务透明的代理至Pod。每一个Node都会运行一个kube-proxy,kube proxy通过iptables规则引导访问至服务IP,并将重定向至正确的后端应用,通过这种方式kube-proxy提供了一个高可用的负载均衡解决方案。服务发现主要通过DNS实现。

在Kubernetes中,kube proxy负责为Pod创建代理服务;引到访问至服务;并实现服务到Pod的路由和转发,以及通过应用的负载均衡。

二、Kubernetes高可用集群部署

1、kubeadm部署

sudo hostnamectl set-hostname <HOSTNAME>注:<HOSTNAME> 根据实际的填写。

sudo systemctl stop firewalld && sudo systemctl disable firewalld

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/configcat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# 设置所需的 sysctl 参数,参数在重新启动后保持不变

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# 应用 sysctl 参数而不重新启动

sudo sysctl --systemcat <<-EOF | sudo tee /etc/yum.repos.d/kubernetes.repo > /dev/null

[kubernetes]

name=Aliyun-kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

EOF

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes2、二进制安装基础组件

1. 环境变量

规划端口:

| - | 端口范围 |

|---|---|

| etcd数据库 | 2379、2380、2381; |

| k8s组件端口 | 6443、10257、10257、10250、10249、10256 |

| k8s插件端口 | Calico: 179、9099; |

| k8s NodePort端口 | 30000 - 32767 |

| ip_local_port_range | 32768 - 65535 |

下面对上面的各端口类型进行解释:

- etcd端口:所需端口

- k8s组件端口:基础组件

- k8s插件端口:calico端口、nginx-ingress-controller端口

- k8s NodePort端口:跑在容器里面的应用,可以通过这个范围内的端口向外暴露服务,所以应用的对外端口要在这个范围内

- ip_local_port_range:主机上一个进程访问外部应用时,需要与外部应用建立TCP连接,TCP连接需要本机的一个端口,主机会从这个范围内选择一个没有使用的端口建立TCP连接;

设置主机名:

$ hostnamectl set-hostname k8s-master01

$ hostnamectl set-hostname k8s-node01

$ hostnamectl set-hostname k8s-node02注意:主机名不要用 _ 。不然启动 kubelet 有问题。识别不到主机名。

设置主机名映射:

$ cat >> /etc/hosts <<-EOF

192.168.31.103 k8s-master01

192.168.31.95 k8s-node01

192.168.31.78 k8s-node02

192.168.31.253 k8s-node03

EOF关闭防火墙:

$ sudo systemctl stop firewalld

$ sudo systemctl disable firewalld关闭selinux:

#临时生效

$ sudo setenforce 0

sed -ri 's/(SELINUX=).*/\1disabled/g' /etc/selinux/config关闭交换分区:

#临时生效

$ swapoff -a

#永久生效,需要重启

$ sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab加载ipvs模块:

$ cat > /etc/sysconfig/modules/ipvs.modules <<-EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

modprobe -- br_netfilter

modprobe -- ipip

EOF

# 生效ipvs模块

$ chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules

# 验证

$ lsmod | grep -e ip_vs -e nf_conntrack_ipv4 -e br_netfilter注意:在 /etc/sysconfig/modules/ 目录下的modules文件,重启会自动加载。

安装ipset依赖包:

$ yum install ipvsadm wget vim -y # 确保安装ipset包优化内核参数:

$ cat > /etc/sysctl.d/kubernetes.conf << EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

net.ipv4.conf.all.rp_filter=1

kernel.sem=250 32000 100 128

net.core.netdev_max_backlog = 32768

net.core.rmem_default = 8388608

net.core.rmem_max = 16777216

net.core.somaxconn = 32768

net.core.wmem_default = 8388608

net.core.wmem_max = 16777216

net.ipv4.ip_local_port_range = 32768 65535

net.ipv4.ip_forward = 1

net.ipv4.tcp_fin_timeout = 30

net.ipv4.tcp_keepalive_time = 1200

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.tcp_max_syn_backlog = 65536

net.ipv4.tcp_max_tw_buckets = 6000

net.ipv4.tcp_mem = 94500000 91500000 92700000

net.ipv4.tcp_rmem = 32768 436600 873200

net.ipv4.tcp_syn_retries = 2

net.ipv4.tcp_synack_retries = 2

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_wmem = 8192 436600 873200

EOF

# 生效 kubernetes.conf 文件

$ sysctl -p /etc/sysctl.d/kubernetes.conf

# 设置资源限制

cat >> /etc/security/limits.conf <<-EOF

* - nofile 65535

* - core 65535

* - nproc 65535

* - stack 65535

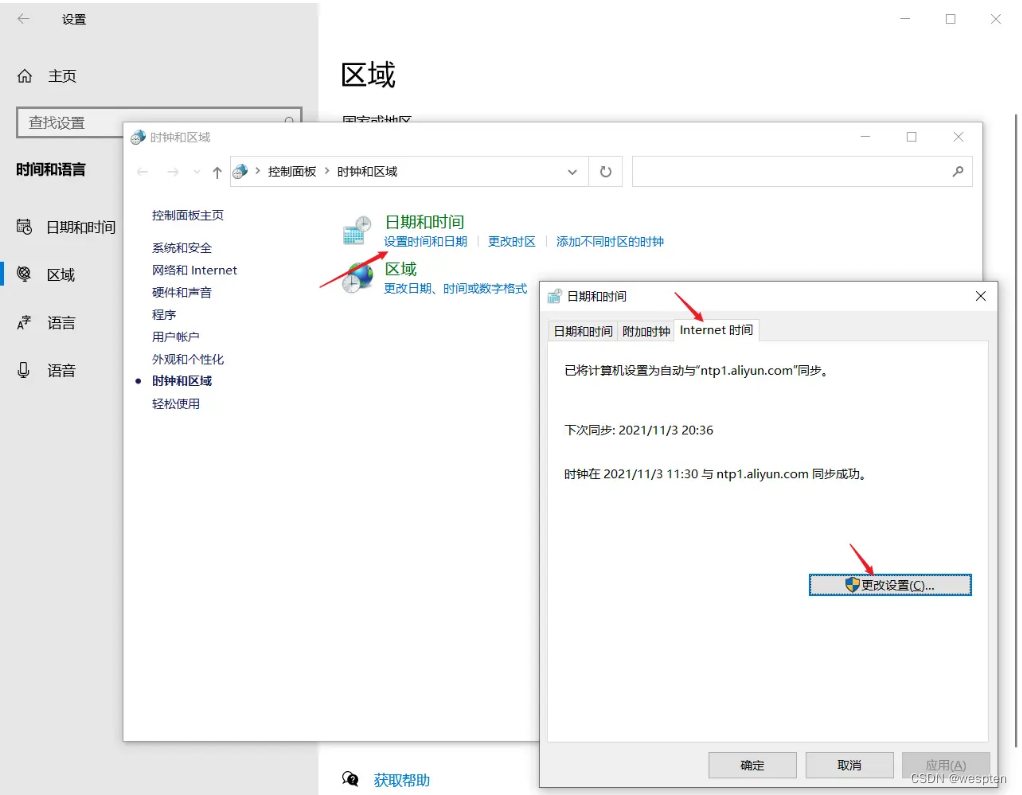

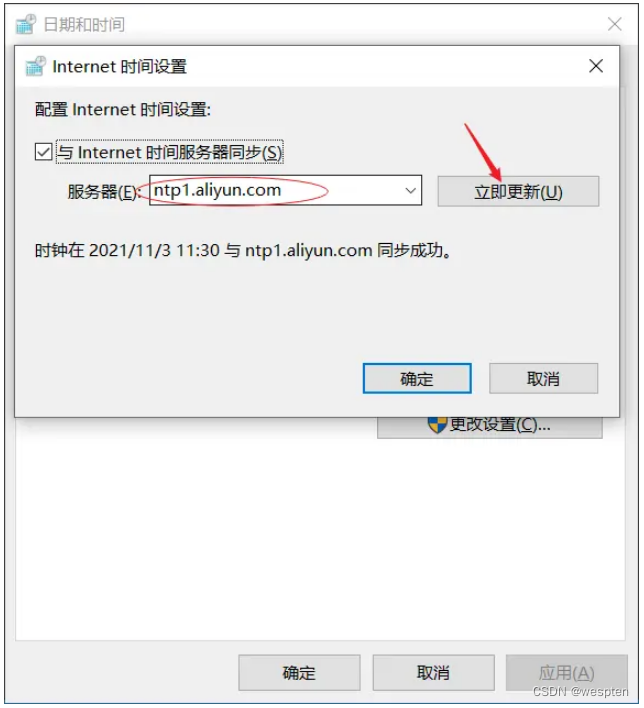

EOF设置时间同步:

$ yum install ntp -y

$ vim /etc/ntp.conf

#server 0.centos.pool.ntp.org iburst 注释以下四行

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server ntp1.aliyun.com iburst #添加同步 ntp.aliyun.com

#启动并加入开机自启

$ systemctl start ntpd.service

$ systemctl enable ntpd.service2. 安装etcd

创建etcd目录及加入环境变量:

$ mkdir -p /data/etcd/{bin,conf,certs,data}

$ chmod 700 /data/etcd/data

$ echo 'PATH=/data/etcd/bin:$PATH' > /etc/profile.d/etcd.sh && source /etc/profile.d/etcd.sh下载生成证书工具:

$ mkdir ~/cfssl && cd ~/cfssl/

$ wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

$ wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

$ wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

$ cp cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

$ cp cfssljson_linux-amd64 /usr/local/bin/cfssljson

$ cp cfssl_linux-amd64 /usr/local/bin/cfssl

$ chmod u+x /usr/local/bin/cfssl*创建根证书(CA):

$ cat > /data/etcd/certs/ca-config.json <<-EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF创建证书签名请求文件:

$ cat > /data/etcd/certs/ca-csr.json <<-EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GuangDong",

"L": "Guangzhou",

"O": "Personal",

"OU": "Personal"

}

]

}

EOF生成CA证书和私钥:

$ cd /data/etcd/certs/ && cfssl gencert -initca ca-csr.json | cfssljson -bare ca -分发CA证书和私钥到etcd节点:

$ scp /data/etcd/certs/ca*pem root@k8s-node01:/data/etcd/certs/

$ scp /data/etcd/certs/ca*pem root@k8s-node02:/data/etcd/certs/

$ scp /data/etcd/certs/ca*pem root@k8s-node03:/data/etcd/certs/创建etcd证书签名请求:

$ cat > /data/etcd/certs/etcd-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"192.168.31.95",

"192.168.31.78",

"192.168.31.253"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GuangDong",

"L": "Guangzhou",

"O": "Personal",

"OU": "Personal"

}

]

}

EOF说明:需要修改上面的 IP 地址。上述文件 hosts 字段中IP为所有 etcd 节点的集群内部通信IP,一个都不能少!为了方便后期扩容可以多写几个预留的IP。

生成证书与私钥:

$ cd /data/etcd/certs/ && cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd -

说明:-profile对应根(CA)证书的profile。

分发etcd证书和私钥到各个节点:

$ scp /data/etcd/certs/etcd*pem root@k8s-node01:/data/etcd/certs/

$ scp /data/etcd/certs/etcd*pem root@k8s-node02:/data/etcd/certs/

$ scp /data/etcd/certs/etcd*pem root@k8s-node03:/data/etcd/certs/载etcd包:

$ mkdir ~/etcd && cd ~/etcd

$ wget https://mirrors.huaweicloud.com/etcd/v3.4.18/etcd-v3.4.18-linux-amd64.tar.gz

$ tar xf etcd-v3.4.18-linux-amd64.tar.gz

$ cd etcd-v3.4.18-linux-amd64

$ cp -r etcd* /data/etcd/bin/分发etcd程序到各个etcd节点:

$ scp -r /data/etcd/bin/etcd* root@k8s-node01:/data/etcd/bin/

$ scp -r /data/etcd/bin/etcd* root@k8s-node02:/data/etcd/bin/

$ scp -r /data/etcd/bin/etcd* root@k8s-node03:/data/etcd/bin/创建etcd配置文件:

$ cat > /data/etcd/conf/etcd.conf << EOF

#[Member]

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/data/etcd/data/"

ETCD_LISTEN_PEER_URLS="https://192.168.31.95:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.31.95:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.31.95:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.31.95:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.31.95:2380,etcd02=https://192.168.31.78:2380,etcd03=https://192.168.31.253:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF说明:需要修改上面的IP地址。

分发etcd配置文件:

$ scp /data/etcd/conf/etcd.conf root@k8s-node01:/data/etcd/conf/

$ scp /data/etcd/conf/etcd.conf root@k8s-node02:/data/etcd/conf/

$ scp /data/etcd/conf/etcd.conf root@k8s-node03:/data/etcd/conf/说明:需要在各个节点修改上面的IP地址和ETCD_NAME 。

创建etcd的systemd模板:

$ cat > /usr/lib/systemd/system/etcd.service <<EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

EnvironmentFile=/data/etcd/conf/etcd.conf

ExecStart=/data/etcd/bin/etcd \\

--cert-file=/data/etcd/certs/etcd.pem \\

--key-file=/data/etcd/certs/etcd-key.pem \\

--peer-cert-file=/data/etcd/certs/etcd.pem \\

--peer-key-file=/data/etcd/certs/etcd-key.pem \\

--trusted-ca-file=/data/etcd/certs/ca.pem \\

--peer-trusted-ca-file=/data/etcd/certs/ca.pem

LimitNOFILE=65536

Restart=always

RestartSec=30

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF**注意:**确认ExecStart启动参数是否正确。

分发etcd 的systemd 模板:

$ scp /usr/lib/systemd/system/etcd.service k8s-node01:/usr/lib/systemd/system/

$ scp /usr/lib/systemd/system/etcd.service k8s-node02:/usr/lib/systemd/system/

$ scp /usr/lib/systemd/system/etcd.service k8s-node03:/usr/lib/systemd/system/启动etcd:

$ systemctl daemon-reload

$ systemctl start etcd.service

$ systemctl enable etcd.service验证etcd:

$ ETCDCTL_API=3 /data/etcd/bin/etcdctl --cacert=/data/etcd/certs/ca.pem --cert=/data/etcd/certs/etcd.pem --key=/data/etcd/certs/etcd-key.pem --endpoints="https://192.168.31.95:2379,https://192.168.31.78:2379,https://192.168.31.253:2379" endpoint health -w table

说明:需要修改上面的IP地址。

下载docker二进制包:

$ mkdir ~/docker && cd ~/docker

$ wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.15.tgz创建docker安装目录及环境变量:

$ mkdir -p /data/docker/{bin,conf,data}

$ echo 'PATH=/data/docker/bin:$PATH' > /etc/profile.d/docker.sh && source /etc/profile.d/docker.sh解压二进制包:

$ tar xf docker-19.03.15.tgz

$ cd docker/

$ cp * /usr/local/bin/分发docker命令:

$ scp /data/docker/bin/* k8s-node01:/usr/local/bin/

$ scp /data/docker/bin/* k8s-node02:/usr/local/bin/

$ scp /data/docker/bin/* k8s-node03:/usr/local/bin/创建docker的systemd模板:

$ cat > /usr/lib/systemd/system/docker.service <<EOF

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/local/bin/dockerd --config-file=/data/docker/conf/daemon.json

ExecReload=/bin/kill -s HUP

LimitNOFILE=infinity

LimitNPROC=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF创建daemon.json文件:

$ cat > /data/docker/conf/daemon.json << EOF

{

"data-root": "/data/docker/data/",

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": [

"https://1nj0zren.mirror.aliyuncs.com",

"https://docker.mirrors.ustc.edu.cn",

"http://f1361db2.m.daocloud.io",

"https://registry.docker-cn.com"

],

"log-driver": "json-file",

"log-level": "info"

}

}

EOF分发docker配置文件:

$ scp /usr/lib/systemd/system/docker.service k8s_node01:/usr/lib/systemd/system/

$ scp /usr/lib/systemd/system/docker.service k8s_node02:/usr/lib/systemd/system/

$ scp /usr/lib/systemd/system/docker.service k8s_node03:/usr/lib/systemd/system/

$ scp /data/docker/conf/daemon.json k8s_node01:/data/docker/conf/

$ scp /data/docker/conf/daemon.json k8s_node02:/data/docker/conf/

$ scp /data/docker/conf/daemon.json k8s_node03:/data/docker/conf/启动docker:

$ systemctl daemon-reload

$ systemctl start docker.service

$ systemctl enable docker.service安装docker-compose:

curl -L https://get.daocloud.io/docker/compose/releases/download/1.28.6/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose4. 部署master节点

1)master节点环境配置

创建k8s目录及环境变量:

$ mkdir -p /data/k8s/{bin,conf,certs,logs,data}

$ echo 'PATH=/data/k8s/bin:$PATH' > /etc/profile.d/k8s.sh && source /etc/profile.d/k8s.sh创建CA签名请求文件:

$ cp /data/etcd/certs/ca-config.json /data/k8s/certs/

$ cp /data/etcd/certs/ca-csr.json /data/k8s/certs/

$ sed -i 's/etcd CA/kubernetes CA/g' /data/k8s/certs/ca-csr.json说明:需要使用同一个CA根证书。

生成证书与私钥:

$ cd /data/k8s/certs && cfssl gencert -initca ca-csr.json | cfssljson -bare ca -下载kubernetes二进制包:

kubernetes官方地址,需要 科 学 上 网。

$ mkdir ~/kubernetes && cd ~/kubernetes

$ wget https://github.com/kubernetes/kubernetes/releases/download/v1.18.18/kubernetes.tar.gz

$ tar xf kubernetes.tar.gz

$ cd kubernetes/

$ ./cluster/get-kube-binaries.sh说明:./cluster/get-kube-binaries.sh 这一步需要上外网。亲测没有外网可以下载。但是可以会出现超时,或者连接错误。可以重试几次。下载到 kubernetes-server-linux-amd64.tar.gz压缩包就可以了。后面还会下载 kubernetes-manifests.tar.gz 的压缩可以。可以直接 CTRL + C退出下载。

解压kubernetes的安装包:

$ cd ~/kubernetes/kubernetes/server && tar xf kubernetes-server-linux-amd64.tar.gz说明:进入到server目录下,要是上面操作下载成功的话,会有 kubernetes-server-linux-amd64.tar.gz 压缩包。

2)安装kube-apiserver

拷贝命令:

$ cd ~/kubernetes/kubernetes/server/kubernetes/server/bin

$ cp kube-apiserver kubectl /data/k8s/bin/创建日志目录:

$ mkdir /data/k8s/logs/kube-apiserver生成apiserver证书与私钥:

$ cat > /data/k8s/certs/apiserver-csr.json <<EOF

{

"CN": "system:kube-apiserver",

"hosts": [

"10.183.0.1",

"127.0.0.1",

"192.168.31.103",

"192.168.31.79",

"192.168.31.95",

"192.168.31.78",

"192.168.31.253",

"192.168.31.100",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"name": [

{

"C": "CN",

"ST": "GuangDong",

"L": "Guangzhou",

"O": "Personal",

"OU": "Personal"

}

]

}

EOF

$ cd /data/k8s/certs && cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes apiserver-csr.json | cfssljson -bare apiserver -说明:需要改 IP地址,不可以使用IP地址段。hosts 里面需要写上 service IP地址的x.x.x.1的地址。

创建kube-apiserver的启动参数:

$ cat > /data/k8s/conf/kube-apiserver.conf << EOF

KUBE_APISERVER_OPTS="--alsologtostderr=true \\

--logtostderr=false \\

--v=4 \\

--log-dir=/data/k8s/logs/kube-apiserver \\

--audit-log-maxage=7 \\

--audit-log-maxsize=100 \\

--audit-log-path=/data/k8s/logs/kube-apiserver/kubernetes.audit \\

--audit-policy-file=/data/k8s/conf/kube-apiserver-audit.yml \\

--etcd-servers=https://192.168.31.95:2379,https://192.168.31.78:2379,https://192.168.31.253:2379 \\

--bind-address=0.0.0.0 \\

--insecure-port=0 \\

--secure-port=6443 \\

--allow-privileged=true \\

--service-cluster-ip-range=10.183.0.0/24 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction,PodPreset \\

--runtime-config=settings.k8s.io/v1alpha1=true \\

--authorization-mode=RBAC,Node \\

--enable-bootstrap-token-auth=true \\

--token-auth-file=/data/k8s/conf/token.csv \\

--service-node-port-range=30000-32767 \\

--kubelet-client-certificate=/data/k8s/certs/apiserver.pem \\

--kubelet-client-key=/data/k8s/certs/apiserver-key.pem \\

--tls-cert-file=/data/k8s/certs/apiserver.pem \\

--tls-private-key-file=/data/k8s/certs/apiserver-key.pem \\

--client-ca-file=/data/k8s/certs/ca.pem \\

--service-account-key-file=/data/k8s/certs/ca-key.pem \\

--etcd-cafile=/data/etcd/certs/ca.pem \\

--etcd-certfile=/data/etcd/certs/etcd.pem \\

--etcd-keyfile=/data/etcd/certs/etcd-key.pem"

EOF说明:需要修改 IP地址 和 service-cluster-ip-range(service IP段) 。

创建审计策略配置文件:

cat > /data/k8s/conf/kube-apiserver-audit.yml <<-EOF

apiVersion: audit.k8s.io/v1beta1

kind: Policy

rules:

# 所有资源都记录请求的元数据(请求的用户、时间戳、资源、动词等等), 但是不记录请求或者响应的消息体。

- level: Metadata

EOF创建上述配置文件中token文件:

$ cat > /data/k8s/conf/token.csv <<EOF

0fb61c46f8991b718eb38d27b605b008,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

#可以用使用下面命令生成token

$ head -c 16 /dev/urandom | od -An -t x | tr -d ' '创建kube-apiserver的systemd模板:

$ cat > /usr/lib/systemd/system/kube-apiserver.service <<EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/data/k8s/conf/kube-apiserver.conf

ExecStart=/data/k8s/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF启动kube-apiserver:

$ systemctl daemon-reload

$ systemctl start kube-apiserver.service

$ systemctl enable kube-apiserver.service3)安装kube-controller-manager

拷贝命令:

$ cd ~/kubernetes/kubernetes/server/kubernetes/server/bin/

$ cp kube-controller-manager /data/k8s/bin/创建日志目录:

$ mkdir /data/k8s/logs/kube-controller-manager生成证书与私钥:

$ cat > /data/k8s/certs/controller-manager.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GuangDong",

"L": "Guangzhou",

"O": "Personal",

"OU": "Personal"

}

]

}

EOF

$ cd /data/k8s/certs && cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes controller-manager.json | cfssljson -bare controller-manager -生成连接集群的kubeconfig文件:

$ KUBE_APISERVER="https://192.168.31.103:6443"

$ kubectl config set-cluster kubernetes \

--certificate-authority=/data/k8s/certs/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=/data/k8s/certs/controller-manager.kubeconfig

$ kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/data/k8s/certs/controller-manager.pem \

--client-key=/data/k8s/certs/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/data/k8s/certs/controller-manager.kubeconfig

$ kubectl config set-context default \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=/data/k8s/certs/controller-manager.kubeconfig

$ kubectl config use-context default \

--kubeconfig=/data/k8s/certs/controller-manager.kubeconfig启动kube-controller-manager参数:

$ cat > /data/k8s/conf/kube-controller-manager.conf <<EOF

KUBE_CONTROLLER_MANAGER_OPTS="--alsologtostderr=true \\

--logtostderr=false \\

--v=4 \\

--log-dir=/data/k8s/logs/kube-controller-manager \\

--master=https://192.168.31.103:6443 \\

--bind-address=0.0.0.0 \\

--port=0 \\

--secure-port=10257 \\

--leader-elect=true \\

--allocate-node-cidrs=true \\

--cluster-cidr=20.0.0.0/16 \\

--service-cluster-ip-range=10.183.0.0/24 \\

--authentication-kubeconfig=/data/k8s/certs/controller-manager.kubeconfig \\

--authorization-kubeconfig=/data/k8s/certs/controller-manager.kubeconfig \\

--client-ca-file=/data/k8s/certs/ca.pem \\

--cluster-signing-cert-file=/data/k8s/certs/ca.pem \\

--cluster-signing-key-file=/data/k8s/certs/ca-key.pem \\

--root-ca-file=/data/k8s/certs/ca.pem \\

--service-account-private-key-file=/data/k8s/certs/ca-key.pem \\

--kubeconfig=/data/k8s/certs/controller-manager.kubeconfig \\

--controllers=*,bootstrapsigner,tokencleaner \\

--node-cidr-mask-size=26 \\

--requestheader-client-ca-file=/data/k8s/certs/controller-manager.pem \\

--use-service-account-credentials=true \\

--experimental-cluster-signing-duration=87600h0m0s"

EOF说明:需要修改 service-cluster-ip-range(service IP段)、cluster-cidr(pod IP段) 和 master 的值。

kube-controller-manager的systemd模板:

$ cat > /usr/lib/systemd/system/kube-controller-manager.service <<EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/data/k8s/conf/kube-controller-manager.conf

ExecStart=/data/k8s/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF启动kube-controller-manager:

$ systemctl daemon-reload

$ systemctl start kube-controller-manager.service

$ systemctl enable kube-controller-manager.service4)安装kube-scheduler

拷贝命令:

$ cd ~/kubernetes/kubernetes/server/kubernetes/server/bin/

$ cp kube-scheduler /data/k8s/bin/创建日志目录:

$ mkdir /data/k8s/logs/kube-scheduler生成证书与私钥:

$ cat > /data/k8s/certs/scheduler.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GuangDong",

"L": "Guangzhou",

"O": "Personal",

"OU": "Personal"

}

]

}

EOF

$ cd /data/k8s/certs && cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes scheduler.json | cfssljson -bare scheduler -生成连接集群的kubeconfig文件:

$ KUBE_APISERVER="https://192.168.31.103:6443"

$ kubectl config set-cluster kubernetes \

--certificate-authority=/data/k8s/certs/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=/data/k8s/certs/scheduler.kubeconfig

$ kubectl config set-credentials system:kube-scheduler \

--client-certificate=/data/k8s/certs/scheduler.pem \

--client-key=/data/k8s/certs/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/data/k8s/certs/scheduler.kubeconfig

$ kubectl config set-context default \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=/data/k8s/certs/scheduler.kubeconfig

$ kubectl config use-context default \

--kubeconfig=/data/k8s/certs/scheduler.kubeconfig创建启动kube-scheduler参数:

$ cat > /data/k8s/conf/kube-scheduler.conf <<EOF

KUBE_SCHEDULER_OPTS="--alsologtostderr=true \\

--logtostderr=false \\

--v=4 \\

--log-dir=/data/k8s/logs/kube-scheduler \\

--master=https://192.168.31.103:6443 \\

--authentication-kubeconfig=/data/k8s/certs/scheduler.kubeconfig \\

--authorization-kubeconfig=/data/k8s/certs/scheduler.kubeconfig \\

--bind-address=0.0.0.0 \\

--port=0 \\

--secure-port=10259 \\

--kubeconfig=/data/k8s/certs/scheduler.kubeconfig \\

--client-ca-file=/data/k8s/certs/ca.pem \\

--requestheader-client-ca-file=/data/k8s/certs/scheduler.pem \\

--leader-elect=true"

EOF说明:需要修改 master 的值。

创建kube-scheduler的systemd模板:

$ cat > /usr/lib/systemd/system/kube-scheduler.service <<EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/data/k8s/conf/kube-scheduler.conf

ExecStart=/data/k8s/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF启动kube-scheduler:

$ systemctl daemon-reload

$ systemctl start kube-scheduler.service

$ systemctl enable kube-scheduler.service5)客户端设置及验证

客户端设置:

$ cat > /data/k8s/certs/admin-csr.json << EOF

{

"CN": "system:admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GuangDong",

"L": "Guangzhou",

"O": "Personal",

"OU": "Personal"

}

]

}

EOF

$ cd /data/k8s/certs && cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin -

$ KUBE_APISERVER="https://192.168.31.103:6443"

$ kubectl config set-cluster kubernetes \

--certificate-authority=/data/k8s/certs/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=/data/k8s/certs/admin.kubeconfig

$ kubectl config set-credentials system:admin \

--client-certificate=/data/k8s/certs/admin.pem \

--client-key=/data/k8s/certs/admin-key.pem \

--embed-certs=true \

--kubeconfig=/data/k8s/certs/admin.kubeconfig

$ kubectl config set-context default \

--cluster=kubernetes \

--user=system:admin \

--kubeconfig=/data/k8s/certs/admin.kubeconfig

$ kubectl config use-context default \

--kubeconfig=/data/k8s/certs/admin.kubeconfig

$ sed -ri "s/(--insecure-port=0)/#\1/g" /data/k8s/conf/kube-apiserver.conf

$ systemctl restart kube-apiserver

$ kubectl create clusterrolebinding system:admin --clusterrole=cluster-admin --user=system:admin

$ kubectl create clusterrolebinding system:kube-apiserver --clusterrole=cluster-admin --user=system:kube-apiserver

$ sed -ri "s/#(--insecure-port=0)/\1/g" /data/k8s/conf/kube-apiserver.conf

$ systemctl restart kube-apiserver

$ cp /data/k8s/certs/admin.kubeconfig ~/.kube/config验证:

# http方式验证

$ kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

# https方式验证

$ curl -sk --cacert /data/k8s/certs/ca.pem --cert /data/k8s/certs/admin.pem --key /data/k8s/certs/admin-key.pem https://192.168.31.103:10257/healthz && echo

$ curl -sk --cacert /data/k8s/certs/ca.pem --cert /data/k8s/certs/admin.pem --key /data/k8s/certs/admin-key.pem https://192.168.31.103:10259/healthz && echo5. 部署节点(master)

1)安装kubelet

授权kubelet-bootstrap用户允许请求证书:

$ kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap创建日志目录:

$ mkdir /data/k8s/logs/kubelet拷贝命令:

$ cd ~/kubernetes/kubernetes/server/kubernetes/server/bin

$ cp kubelet /data/k8s/bin/创建kubelet启动参数:

$ cat > /data/k8s/conf/kubelet.conf <<EOF

KUBELET_OPTS="--alsologtostderr=true \\

--logtostderr=false \\

--v=4 \\

--log-dir=/data/k8s/logs/kubelet \\

--hostname-override=192.168.31.103 \\

--network-plugin=cni \\

--cni-conf-dir=/etc/cni/net.d \\

--cni-bin-dir=/opt/cni/bin \\

--kubeconfig=/data/k8s/certs/kubelet.kubeconfig \\

--bootstrap-kubeconfig=/data/k8s/certs/bootstrap.kubeconfig \\

--config=/data/k8s/conf/kubelet-config.yaml \\

--cert-dir=/data/k8s/certs/ \\

--root-dir=/data/k8s/data/kubelet/ \\

--pod-infra-container-image=ecloudedu/pause-amd64:3.0"

EOF说明:修改 hostname-override 为当前的 IP地址。cni-conf-dir 默认是 /etc/cni/net.d,cni-bin-dir 默认是/opt/cni/bin。指定 cgroupdriver 为systemd,默认也是systemd,root-dir 默认是/var/lib/kubelet目录。

创建kubelet配置参数文件:

$ cat > /data/k8s/conf/kubelet-config.yaml <<EOF

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 0.0.0.0

port: 10250

readOnlyPort: 0

cgroupDriver: systemd

clusterDNS:

- 10.183.0.2

clusterDomain: cluster.local

failSwapOn: false

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 2m0s

enabled: true

x509:

clientCAFile: /data/k8s/certs/ca.pem

anthorization:

mode: Webhook

Webhook:

cacheAuthorizedTTL: 5m0s

cacheUnauthorizedTTL: 30s

evictionHard:

imagefs.available: 15%

memory.available: 100Mi

nodefs.available: 10%

nodefs.inodesFree: 5%

maxOpenFiles: 1000000

maxPods: 100

EOF说明:需要修改 clusterDNS 的IP地址为 server IP段。

参考地址:

GitHub - kubernetes/kubelet: kubelet component configs

Kubelet 配置 (v1beta1) | Kubernetes

v1beta1 package - k8s.io/kubelet/config/v1beta1 - Go Packages

生成bootstrap.kubeconfig文件:

$ KUBE_APISERVER="https://192.168.31.103:6443" #master IP

$ TOKEN="0fb61c46f8991b718eb38d27b605b008" #跟token.csv文件的token一致

# 设置集群参数

$ kubectl config set-cluster kubernetes \

--certificate-authority=/data/k8s/certs/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=/data/k8s/certs/bootstrap.kubeconfig

# 设置客户端认证参数

$ kubectl config set-credentials "kubelet-bootstrap" \

--token=${TOKEN} \

--kubeconfig=/data/k8s/certs/bootstrap.kubeconfig

# 设置上下文参数

$ kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=/data/k8s/certs/bootstrap.kubeconfig

# 设置默认上下文

$ kubectl config use-context default \

--kubeconfig=/data/k8s/certs/bootstrap.kubeconfig创建kubelet的systemd模板:

$ cat > /usr/lib/systemd/system/kubelet.service <<EOF

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/data/k8s/conf/kubelet.conf

ExecStart=/data/k8s/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

EOF启动kubelet:

$ systemctl daemon-reload

$ systemctl start kubelet.service

$ systemctl enable kubelet.service批准kubelet加入集群:

$ kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-C0QE1O0aWVJc-H5AObkjBJ4iqhQY2BiUqIyUVe9UBUM 6m22s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

$ kubectl certificate approve node-csr-DaJ36jEFJwOPQwFGY3uWmsyfS-4_LFYuTsYA71yCOZY

certificatesigningrequest.certificates.k8s.io/node-csr-DaJ36jEFJwOPQwFGY3uWmsyfS-4_LFYuTsYA71yCOZY approved说明:node-csr-C0QE1O0aWVJc-H5AObkjBJ4iqhQY2BiUqIyUVe9UBUM是kubectl get csr获取的name的值。

验证:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.31.103 NotReady <none> 27s v1.18.182)安装kube-proxy

创建日志目录:

$ mkdir /data/k8s/logs/kube-proxy拷贝命令:

$ cd ~/kubernetes/kubernetes/server/kubernetes/server/bin/

$ cp kube-proxy /data/k8s/bin/创建启动kube-proxy的参数:

$ cat > /data/k8s/conf/kube-proxy.conf << EOF

KUBE_PROXY_OPTS="--alsologtostderr=true \\

--logtostderr=false \\

--v=4 \\

--log-dir=/data/k8s/logs/kube-proxy \\

--config=/data/k8s/conf/kube-proxy-config.yml"

EOF创建配置参数文件:

$ cat > /data/k8s/conf/kube-proxy-config.yml << EOF

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

metricsBindAddress: 0.0.0.0:10249

clientConnection:

kubeconfig: /data/k8s/certs/proxy.kubeconfig

hostnameOverride: 192.168.31.103

clusterCIDR: 20.0.0.0/16

mode: ipvs

ipvs:

minSyncPeriod: 5s

syncPeriod: 5s

scheduler: "rr"

EOF说明:修改hostnameOverride的值为IP地址。clusterCIDR的值为pod IP段。

参考地址:

GitHub - kubernetes/kube-proxy: kube-proxy component configs

v1alpha1 package - k8s.io/kube-proxy/config/v1alpha1 - Go Packages

kube-proxy 配置 (v1alpha1) | Kubernetes

生成证书与私钥:

$ cat > /data/k8s/certs/proxy.json << EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GuangDong",

"L": "Guangzhou",

"O": "Personal",

"OU": "Personal"

}

]

}

EOF

$ cd /data/k8s/certs && cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes proxy.json | cfssljson -bare proxy -生成kube-proxy.kubeconfig文件:

$ KUBE_APISERVER="https://192.168.31.103:6443"

# 设置集群参数

$ kubectl config set-cluster kubernetes \

--certificate-authority=/data/k8s/certs/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=/data/k8s/certs/proxy.kubeconfig

# 设置客户端认证参数

$ kubectl config set-credentials system:kube-proxy \

--client-certificate=/data/k8s/certs/proxy.pem \

--client-key=/data/k8s/certs/proxy-key.pem \

--embed-certs=true \

--kubeconfig=/data/k8s/certs/proxy.kubeconfig

# 设置上下文参数

$ kubectl config set-context default \

--cluster=kubernetes \

--user=system:kube-proxy \

--kubeconfig=/data/k8s/certs/proxy.kubeconfig

# 设置默认上下文

$ kubectl config use-context default \

--kubeconfig=/data/k8s/certs/proxy.kubeconfig创建kube-proxy的systemd模板:

$ cat > /usr/lib/systemd/system/kube-proxy.service << EOF

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/data/k8s/conf/kube-proxy.conf

ExecStart=/data/k8s/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF启动kube-proxy:

$ systemctl daemon-reload

$ systemctl start kube-proxy.service

$ systemctl enable kube-proxy.service解决ROLES不显示:

kubectl label node 192.168.31.103 node-role.kubernetes.io/master=如果标签打错了,使用kubectl label node 192.168.31.103 node-role.kubernetes.io/node-取消标签。

6. 新增node节点

创建k8s目录及环境变量:

$ mkdir -p /data/k8s/{bin,conf,certs,logs} && mkdir /data/k8s/logs/kubelet

$ echo 'PATH=/data/k8s/bin:$PATH' > /etc/profile.d/k8s.sh && source /etc/profile.d/k8s.sh获取kubelet文件:

scp root@k8s-master:/data/k8s/bin/kubelet /data/k8s/bin/kubelet启动参数:

$ scp k8s-master01:/data/k8s/conf/kubelet.conf /data/k8s/conf/kubelet.conf

$ scp k8s-master:/data/k8s/conf/kubelet-config.yaml /data/k8s/conf/注意:修改 kubelet.conf 配置文件中的hostname-override的值。

获取相关证书:

$ scp root@k8s-master01:/data/k8s/certs/{ca*pem,bootstrap.kubeconfig} /data/k8s/certs/

创建kubelet的systemd模板:

$ scp k8s-master01:/usr/lib/systemd/system/kubelet.service /usr/lib/systemd/system/kubelet.service

启动kubelet:

$ systemctl daemon-reload

$ systemctl start kubelet.service

$ systemctl enable kubelet.service

批准kubelet加入集群:

$ kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-i8aN5Ua8282QMSOERSZFCr26dzmSmXod-kv5fCm5Kf8 26s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-sePBDxehlZbf8B4DwMvObQpRp-a5fOKNbx3NpDYcKeA 12m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

$ kubectl certificate approve node-csr-i8aN5Ua8282QMSOERSZFCr26dzmSmXod-kv5fCm5Kf8

certificatesigningrequest.certificates.k8s.io/node-csr-i8aN5Ua8282QMSOERSZFCr26dzmSmXod-kv5fCm5Kf8 approved

说明:node-csr-i8aN5Ua8282QMSOERSZFCr26dzmSmXod-kv5fCm5Kf8是kubectl get csr 获取的name的值。

验证:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.31.103 NotReady master 15h v1.18.18

192.168.31.253 NotReady <none> 15h v1.18.18

192.168.31.78 NotReady <none> 4s v1.18.18

192.168.31.95 NotReady <none> 4s v1.18.182)安装kube-proxy服务

创建日志目录:

mkdir /data/k8s/logs/kube-proxy

拷贝kube-proxy文件:

scp root@k8s-master:/data/k8s/bin/kube-proxy /data/k8s/bin/

拷贝启动服务参数:

scp k8s-master:/data/k8s/conf/kube-proxy.conf /data/k8s/conf/kube-proxy.conf

scp root@k8s-master:/data/k8s/conf/kube-proxy-config.yml /data/k8s/conf/

注意:修改 kube-proxy-config.yml 文件中 hostnameOverride与kubelet的hostnameOverride 一致。

拷贝相关证书:

scp root@k8s-master:/data/k8s/certs/{ca*.pem,proxy.kubeconfig} /data/k8s/certs/

创建kube-proxy的systemd模板:

scp k8s-master:/usr/lib/systemd/system/kube-proxy.service /usr/lib/systemd/system/kube-proxy.service

启动kube-proxy服务:

systemctl daemon-reload

systemctl start kube-proxy.service

systemctl enable kube-proxy.service

验证:

journalctl -xeu kube-proxy.service

注意:日志如果出现这个 can't set sysctl net/ipv4/vs/conn_reuse_mode, kernel version must be at least 4.1 需要升级内核。

7. 补充

k8s命令补全:

$ yum install -y bash-completion

$ source /usr/share/bash-completion/bash_completion

$ source <(kubectl completion bash)

$ echo "source <(kubectl completion bash)" >> ~/.bashrc附加iptables规则:

# ssh 服务

iptables -t filter -A INPUT -p icmp --icmp-type 8 -j ACCEPT

iptables -t filter -A INPUT -p tcp --dport 22 -m comment --comment "sshd service" -j ACCEPT

iptables -t filter -A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT

iptables -t filter -A INPUT -i lo -j ACCEPT

iptables -t filter -P INPUT DROP

# etcd数据库

iptables -t filter -I INPUT -p tcp --dport 2379:2381 -m comment --comment "etcd Component ports" -j ACCEPT

# matster服务

iptables -t filter -I INPUT -p tcp -m multiport --dport 6443,10257,10259 -m comment --comment "k8s master Component ports" -j ACCEPT

# node服务

iptables -t filter -I INPUT -p tcp -m multiport --dport 10249,10250,10256 -m comment --comment "k8s node Component ports" -j ACCEPT

# k8s使用到的端口

iptables -t filter -I INPUT -p tcp --dport 32768:65535 -m comment --comment "ip_local_port_range ports" -j ACCEPT

iptables -t filter -I INPUT -p tcp --dport 30000:32767 -m comment --comment "k8s service nodeports" -j ACCEPT

# calico服务端口

iptables -t filter -I INPUT -p tcp -m multiport --dport 179,9099 -m comment --comment "k8s calico Component ports" -j ACCEPT

iptables -t filter -I INPUT -p tcp --dport 9091 -m comment --comment "k8s calico metrics ports" -j ACCEPT

# coredns服务端口

iptables -t filter -I INPUT -p udp -m udp --dport 53 -m comment --comment "k8s coredns ports" -j ACCEPT

# pod 到 service 网络。没有设置的话,启动coredns失败。

iptables -t filter -I INPUT -p tcp -s 20.0.0.0/16 -d 10.183.0.0/24 -m comment --comment "pod to service" -j ACCEPT

# 记录别drop的数据包,日志在 /var/log/messages,过滤关键字"iptables-drop: "

iptables -t filter -A INPUT -j LOG --log-prefix='iptables-drop: '3、插件部署

1. 安装calico

详细的参数信息,请查看calico官网

下载calico部署yaml文件:

mkdir ~/calico && cd ~/calico

curl https://docs.projectcalico.org/archive/v3.18/manifests/calico-etcd.yaml -o calico.yaml

修改calico yaml文件:

1.修改 Secret 类型,calico-etcd-secrets 的 `etcd-key` 、 `etcd-cert` 、 `etcd-ca`

将 cat /data/etcd/certs/ca.pem | base64 -w 0 && echo 输出的所有内容复制到 `etcd-ca`

将 cat /data/etcd/certs/etcd.pem | base64 -w 0 && echo 输出的所有内容复制到 `etcd-cert`

将 cat /data/etcd/certs/etcd-key.pem | base64 -w 0 && echo 输出的所有内容复制到 `etcd-key`

2.修改 ConfigMap 类型,calico-config 的 `etcd_endpoints`、`etcd_ca`、`etcd_cert`、`etcd_key`

`etcd_endpoints`:"https://192.168.31.95:2379,https://192.168.31.78:2379,https://192.168.31.253:2379"

`etcd_ca`: "/calico-secrets/etcd-ca" # "/calico-secrets/etcd-ca"

`etcd_cert`: "/calico-secrets/etcd-cert" # "/calico-secrets/etcd-cert"

`etcd_key`: "/calico-secrets/etcd-key" # "/calico-secrets/etcd-key"

根据后面注释的内容填写。

3.修改 DaemonSet 类型,calico-node 的 `CALICO_IPV4POOL_CIDR`、`calico-etcd-secrets`

将注释打开,填上你预计的pod IP段

- name: CALICO_IPV4POOL_CIDR

value: "20.0.0.0/16"

4.修改 DaemonSet 类型,calico-node 的 spec.template.spec.containers.env 下添加一段下面的内容

# 是指定使用那个网卡,可以使用 | 分隔开,表示或者的关系。

- name: IP_AUTODETECTION_METHOD

value: "interface=eth.*|em.*|enp.*"

5.修改 Deployment 类型,calico-kube-controllers 的 spec.template.spec.volumes

将默认权限400,修改成644。

- name: etcd-certs

secret:

secretName: calico-etcd-secrets

defaultMode: 0644

6.修改 DaemonSet 类型,calico-node 的 spec.template.spec.volumes

将默认权限400,修改成644。

- name: etcd-certs

secret:

secretName: calico-etcd-secrets

defaultMode: 0644

7.暴露metrics接口,calico-node 的 spec.template.spec.containers.env 下添加一段下面的内容

- name: FELIX_PROMETHEUSMETRICSENABLED

value: "True"

- name: FELIX_PROMETHEUSMETRICSPORT

value: "9091"

8. calico-node 的 spec.template.spec.containers 下添加一段下面的内容

ports:

- containerPort: 9091

name: http-metrics

protocol: TCP

需要监控calico才设置 7、8 步骤,metric接口需要暴露 9091 端口。

部署calico:

kubectl apply -f calico.yaml

验证calico:

$ kubectl -n kube-system get pod

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-f4c6dbf-tkq77 1/1 Running 1 42h

calico-node-c4ccj 1/1 Running 1 42h

calico-node-crs9k 1/1 Running 1 42h

calico-node-fm697 1/1 Running 1 42h

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.31.103 Ready master 5d23h v1.18.18

192.168.31.253 Ready <none> 5d23h v1.18.18

192.168.31.78 Ready <none> 5d23h v1.18.18

192.168.31.95 Ready <none> 5d23h v1.18.18

**注意**:status不是为ready的话,稍等一段时间再看看。一直都没有变成ready,请检查 kubelet 配置文件是否设置cni-bin-dir参数。默认是 `/opt/cni/bin`、`/etc/cni/net.d/`

$ kubectl run busybox --image=jiaxzeng/busybox:1.24.1 sleep 3600

$ kubectl run nginx --image=nginx

$ kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

busybox 1/1 Running 6 42h 20.0.58.194 192.168.31.78 <none> <none>

nginx 1/1 Running 1 42h 20.0.85.194 192.168.31.95 <none> <none>

$ kubectl exec busybox -- ping 20.0.85.194 -c4

PING 20.0.85.194 (20.0.85.194): 56 data bytes

64 bytes from 20.0.85.194: seq=0 ttl=62 time=0.820 ms

64 bytes from 20.0.85.194: seq=1 ttl=62 time=0.825 ms

64 bytes from 20.0.85.194: seq=2 ttl=62 time=0.886 ms

64 bytes from 20.0.85.194: seq=3 ttl=62 time=0.840 ms

--- 20.0.85.194 ping statistics ---

4 packets transmitted, 4 packets received, 0% packet loss

round-trip min/avg/max = 0.820/0.842/0.886 ms

除ping不通跨节点容器外,其他都没有问题的话。

可能是IP隧道的原因。可以手动测试一下两台主机IP隧道是否可以通信。

modprobe ipip

ip tunnel add ipip-tunnel mode ipip remote 对端外面IP local 本机外网IP

ifconfig ipip-tunnel 虚IP netmask 255.255.255.0

如上述不通,请核查主机IP隧道通信问题。如果是openstack创建的虚机出现这种情况,可以禁用安全端口功能。

openstack server show 主机名称

openstack server remove security group 主机名称 安全组名称

openstack port set --disable-port-security `openstack port list | grep '主机IP地址' | awk '{print $2}'`

安装calicoctl客户端:

curl -L https://github.com/projectcalico/calicoctl/releases/download/v3.18.6/calicoctl -o /usr/local/bin/calicoctl

chmod +x /usr/local/bin/calicoctl

配置calicoctl:

mkdir -p /etc/calico

cat <<EOF | sudo tee /etc/calico/calicoctl.cfg > /dev/null

apiVersion: projectcalico.org/v3

kind: CalicoAPIConfig

metadata:

spec:

etcdEndpoints: https://192.168.31.95:2379,https://192.168.31.78:2379,https://192.168.31.253:2379

etcdKeyFile: /data/etcd/certs/etcd-key.pem

etcdCertFile: /data/etcd/certs/etcd.pem

etcdCACertFile: /data/etcd/certs/ca.pem

EOF2. 部署coreDNS

下载coredns部署yaml文件:

$ mkdir ~/coredns && cd ~/coredns

$ wget https://raw.githubusercontent.com/kubernetes/kubernetes/v1.18.18/cluster/addons/dns/coredns/coredns.yaml.sed -O coredns.yaml

修改参数:

$ vim coredns.yaml

...

kubernetes $DNS_DOMAIN in-addr.arpa ip6.arpa {

...

memory: $DNS_MEMORY_LIMIT

...

clusterIP: $DNS_SERVER_IP

...

image: k8s.gcr.io/coredns:1.6.5

# 添加 pod 反亲和,在 deploy.spec.template.spec 添加以下内容

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 80

podAffinityTerm:

topologyKey: kubernetes.io/hostname

labelSelector:

matchLabels:

k8s-app: kube-dns

- 将

$DNS_DOMAIN替换成cluster.local.。默认 DNS_DOMAIN 就是 cluster.local. 。 - 将

$DNS_MEMORY_LIMIT替换成合适的资源。 - 将

$DNS_SERVER_IP替换成和 kubelet-config.yaml 的clusterDNS字段保持一致 - 如果不能上外网的话,将 image 的镜像设置为

coredns/coredns:x.x.x。 - 生产环境只有一个副本数不合适,所以在

deployment控制器的spec字段下,添加一行replicas: 3参数。

部署coredns:

$ kubectl apply -f coredns.yaml

验证:

$ kubectl get pod -n kube-system -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

coredns-75d9bd4f59-df94b 1/1 Running 0 7m55s

coredns-75d9bd4f59-kh4rp 1/1 Running 0 7m55s

coredns-75d9bd4f59-vjkpb 1/1 Running 0 7m55s

$ kubectl run dig --rm -it --image=jiaxzeng/dig:latest /bin/sh

If you don't see a command prompt, try pressing enter.

/ # nslookup kubernetes.default.svc.cluster.local.

Server: 10.211.0.2

Address: 10.211.0.2#53

Name: kubernetes.default.svc.cluster.local

Address: 10.211.0.1

/ # nslookup kube-dns.kube-system.svc.cluster.local.

Server: 10.211.0.2

Address: 10.211.0.2#53

Name: kube-dns.kube-system.svc.cluster.local

Address: 10.211.0.23. 安装metrics-server

创建证书签名请求文件:

cat > /data/k8s/certs/proxy-client-csr.json <<-EOF

{

"CN": "aggregator",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GuangDong",

"L": "GuangDong",

"O": "k8s"

}

]

}

EOF

生成proxy-client证书和私钥:

cd /data/k8s/certs/ && cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes proxy-client-csr.json | cfssljson -bare proxy-client -

下载yaml文件:

mkdir ~/metrics-server && cd ~/metrics-server

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.5.2/components.yaml -O metrics-server.yaml

修改配置文件,修改metrics-server容器中的 deployment.spec.template.spec.containers.args 的参数:

- args:

- --cert-dir=/tmp

- --secure-port=4443

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --kubelet-use-node-status-port

- --kubelet-insecure-tls # 添加的

kube-apiserver 服务开启API聚合功能:

# /data/k8s/conf/kube-apiserver.conf 添加以下内容

--runtime-config=api/all=true \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-client-ca-file=/data/k8s/certs/ca.pem \

--proxy-client-cert-file=/data/k8s/certs/proxy-client.pem \

--proxy-client-key-file=/data/k8s/certs/proxy-client-key.pem \

--enable-aggregator-routing=true"

- –requestheader-allowed-names: 允许访问的客户端 common names 列表,通过 header 中 –requestheader-username-headers 参数指定的字段获取。客户端 common names 的名称需要在 client-ca-file 中进行设置,将其设置为空值时,表示任意客户端都可访问。

- –requestheader-username-headers: 参数指定的字段获取。

- –requestheader-group-headers 请求头中需要检查的组名。

- –requestheader-extra-headers-prefix: 请求头中需要检查的前缀名。

- –requestheader-username-headers 请求头中需要检查的用户名。

- –requestheader-client-ca-file: 客户端CA证书。

- –proxy-client-cert-file: 在请求期间验证Aggregator的客户端CA证书。

- –proxy-client-key-file: 在请求期间验证Aggregator的客户端私钥。

- --enable-aggregator-routing=true 如果 kube-apiserver 所在的主机上没有运行 kube-proxy,即无法通过服务的 ClusterIP 进行访问,那么还需要设置以下启动参数。

重启kube-apiserver服务:

systemctl daemon-reload && systemctl restart kube-apiserver

部署metrics-server:

cd ~/metrics-server

kubectl apply -f metrics-server.yaml

如果出现拉取镜像失败的话,可以更换仓库地址。修改 metrics-server.yaml, 将 k8s.gcr.io/metrics-server/metrics-server:v0.5.2 修改成 bitnami/metrics-server:0.5.2。

4. 部署dashboard

下载dashboard.yaml文件:

$ mkdir ~/dashboard && cd ~/dashboard

$ wget https://raw.githubusercontent.com/kubernetes/dashboard/master/aio/deploy/recommended.yaml -O dashboard.yaml

修改dashboard.yml:

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

nodePort: 30088 #添加

type: NodePort #添加

selector:

k8s-app: kubernetes-dashboard

添加两个参数nodePort、type 。请仔细看配置文件,有两个Service配置文件。

部署dashboard:

$ kubectl apply -f dashboard.yaml

创建sa并绑定cluster-admin:

$ kubectl create serviceaccount dashboard-admin -n kube-system

$ kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

验证:

$ kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-78f5d9f487-8gn6n 1/1 Running 0 5m47s

kubernetes-dashboard-7d8574ffd9-cgwvq 1/1 Running 0 5m47s

获取token:

$ kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep dashboard-admin | awk '{print $1}')

Name: dashboard-admin-token-dw4zw

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 50d8dc6a-d75c-41e3-b9a6-82006d0970f9

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1314 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InlPZEgtUlJLQ3lReG4zMlEtSm53UFNsc09nMmQ0YWVOWFhPbEUwUF85aEUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tZHc0enciLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNTBkOGRjNmEtZDc1Yy00MWUzLWI5YTYtODIwMDZkMDk3MGY5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.sgEroj26ANWX1PzzEMZlCIa1ZxcPkYuP5xolT1L6DDdlaJFteaZZffOqv3hIGQBSUW02n6-nZz4VvRZAitrcA9BCW2VPlqHiQDE37UueU8UE1frQ4VtUkLXAKtMc7CUgHa1stod51LW2ndIKiwq-qWdNC1CQA0KsiBi0t2mGgjNQSII9-7FBTFruDwHUp6RRRqtl_NUl1WQanhHOPXia5wScfB37K8MVB0A4jxXIxNCwpd7zEVp-oQPw8XB500Ut94xwUJY6ppxJpnzXHTcoNt6ClapldTtzTY-HXzy0nXv8QVDozTXC7rTX7dChc1yDjMLWqf-KwT1ZYrKzk-2RHg4、高可用master

环境配置与上面的一样。

1. 安装master节点

创建k8s目录及环境变量:

$ mkdir -p /data/k8s/{bin,conf,certs,logs,data}

$ mkdir -p /data/etcd/certs

$ echo 'PATH=/data/k8s/bin:$PATH' > /etc/profile.d/k8s.sh && source /etc/profile.d/k8s.sh

拷贝命令:

$ scp k8s-master01:/data/k8s/bin/{kube-apiserver,kubectl} /data/k8s/bin/

创建日志目录:

$ mkdir /data/k8s/logs/kube-api-server

获取证书:

$ scp k8s-master01:/data/k8s/certs/{apiserver*.pem,ca*.pem} /data/k8s/certs/

$ scp k8s-node01:/data/etcd/certs/{ca*.pem,etcd*.pem} /data/etcd/certs/

获取审计配置文件:

$ scp k8s-master01:/data/k8s/conf/kube-apiserver-audit.yml /data/k8s/conf/

获取kube-apiserver的启动参数:

$ scp k8s-master01:/data/k8s/conf/kube-apiserver.conf /data/k8s/conf/

说明:需要修改advertise-address为IP地址 。

获取token文件:

$ scp k8s-master01:/data/k8s/conf/token.csv /data/k8s/conf/

创建kube-apiserver的systemd模板:

$ scp k8s-master01:/usr/lib/systemd/system/kube-apiserver.service /usr/lib/systemd/system/kube-apiserver.service

启动kube-apiserver:

$ systemctl daemon-reload

$ systemctl start kube-apiserver.service

$ systemctl enable kube-apiserver.service

拷贝命令:

$ scp k8s-master01:/data/k8s/bin/kube-controller-manager /data/k8s/bin/

创建日志目录:

$ mkdir /data/k8s/logs/kube-controller-manager

获取证书:

$ scp k8s-master01:/data/k8s/certs/controller-manager*.pem /data/k8s/certs/

生成连接集群的kubeconfig文件:

scp k8s-master01:/data/k8s/certs/controller-manager.kubeconfig /data/k8s/certs/

sed -ri 's/192.168.31.103/192.168.31.79/g' /data/k8s/certs/controller-manager.kubeconfig

获取kube-controller-manager参数:

$ scp k8s-master01:/data/k8s/conf/kube-controller-manager.conf /data/k8s/conf/

说明:需要修改master的值。

kube-controller-manager的systemd模板:

$ scp k8s-master01:/usr/lib/systemd/system/kube-controller-manager.service /usr/lib/systemd/system/kube-controller-manager.service

启动kube-controller-manager:

$ systemctl daemon-reload

$ systemctl start kube-controller-manager.service

$ systemctl enable kube-controller-manager.service

拷贝命令:

$ scp k8s-master01:/data/k8s/bin/kube-scheduler /data/k8s/bin

创建日志目录:

$ mkdir /data/k8s/logs/kube-scheduler

获取证书:

$ scp k8s-master01:/data/k8s/certs/scheduler*.pem /data/k8s/certs

生成连接集群的kubeconfig文件:

scp k8s-master01:/data/k8s/certs/scheduler.kubeconfig /data/k8s/certs/

sed -ri 's/192.168.31.103/192.168.31.79/g' /data/k8s/certs/scheduler.kubeconfig

获取启动kube-scheduler参数:

$ scp k8s-master01:/data/k8s/conf/kube-scheduler.conf /data/k8s/conf/

说明:需要修改master的值。

创建kube-scheduler的systemd模板:

$ scp k8s-master01:/usr/lib/systemd/system/kube-scheduler.service /usr/lib/systemd/system/kube-scheduler.service

启动kube-scheduler:

$ systemctl daemon-reload

$ systemctl start kube-scheduler.service

$ systemctl enable kube-scheduler.service

mkdir -p ~/.kube/

scp k8s-master01:~/.kube/config ~/.kube/config2. 负载均衡服务器

非集群节点上安装以下的服务。

curl -L https://get.daocloud.io/docker/compose/releases/download/1.29.2/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

在尝试部署 haproxy 容器之前,主机必须允许 ipv4 地址的非本地绑定。为此,请配置 sysctl 可调参数net.ipv4.ip_nonlocal_bind=1。

# 持久化系统参数

$ cat <<-EOF | sudo tee /etc/sysctl.d/kubernetes.conf > /dev/null

net.ipv4.ip_nonlocal_bind = 1

EOF

# 生效配置文件

$ sysctl -p /etc/sysctl.d/kubernetes.conf

# 验证

$ cat /proc/sys/net/ipv4/ip_nonlocal_bind

1

haproxy配置:

$ cat /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local7 info

defaults

log global

mode tcp

option tcplog

maxconn 4096

balance roundrobin

timeout connect 5000ms

timeout client 50000ms

timeout server 50000ms

listen stats

bind *:10086

mode http

stats enable

stats uri /stats

stats auth admin:admin

stats admin if TRUE

listen kubernetes

bind 192.168.31.100:6443

mode tcp

balance roundrobin

server master01 192.168.31.103:6443 weight 1 check inter 1000 rise 3 fall 5

server master02 192.168.31.79:6443 weight 1 check inter 1000 rise 3 fall 5

server:修改主机IP和端口。

其他配置可以保持不变,其中haproxy统计页面默认账号密码为admin:admin。

docker-compose配置:

$ cat /etc/haproxy/docker-compose.yaml

version: "3"

services:

haproxy:

container_name: haproxy

image: haproxy:2.3-alpine

volumes:

- "./haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg"

network_mode: "host"

restart: always

启动haproxy:

docker-compose -f /etc/haproxy/docker-compose.yaml up -d

配置keepalived:

$ cat /etc/keepalived/keepalived.conf

include /etc/keepalived/keepalived_apiserver.conf

$ cat /etc/keepalived/keepalived_apiserver.conf

! Configuration File for keepalived

global_defs {

# 标识机器的字符串(默认:本地主机名)

router_id lb01

}

vrrp_script apiserver {

# 检测脚本路径

script "/etc/keepalived/chk_apiserver.sh"

# 执行检测脚本的用户

user root

# 脚本调用之间的秒数

interval 1

# 转换失败所需的次数

fall 5

# 转换成功所需的次数

rise 3

# 按此权重调整优先级

weight -50

}

# 如果多个 vrrp_instance,切记名称不可以重复。包含上面的 include 其他子路径

vrrp_instance apiserver {

# 状态是主节点还是从节点

state MASTER

# inside_network 的接口,由 vrrp 绑定。

interface eth0

# 虚拟路由id,根据该id进行组成主从架构

virtual_router_id 100

# 初始优先级

# 最后优先级权重计算方法

# (1) weight 为正数,priority - weight

# (2) weight 为负数,priority + weight

priority 200

# 加入集群的认证

authentication {

auth_type PASS

auth_pass pwd100

}

# vip 地址

virtual_ipaddress {

192.168.31.100

}

# 健康检查脚本

track_script {

apiserver

}

}

keepalived检测脚本:

$ cat /etc/keepalived/chk_apiserver.sh

#!/bin/bash

count=$(ss -lntup | egrep '6443' | wc -l)

if [ "$count" -ge 1 ];then

# 退出状态为0,代表检查成功

exit 0

else

# 退出状态为1,代表检查不成功

exit 1

fi

$ chmod +x /etc/keepalived/chk_apiserver.sh

docker-compose文件:

$ cat /etc/keepalived/docker-compose.yaml

version: "3"

services:

keepalived:

container_name: keepalived

image: arcts/keepalived:1.2.2

environment:

KEEPALIVED_AUTOCONF: "false"

KEEPALIVED_DEBUG: "true"

volumes:

- "/usr/share/zoneinfo/Asia/Shanghai:/etc/localtime"

- ".:/etc/keepalived"

cap_add:

- NET_ADMIN

network_mode: "host"

restart: always

4)启动keepalived

$ docker-compose -f /etc/keepalived/docker-compose.yaml up -d3. 修改服务连接地址

k8s所有的master节点:

sed -ri 's#(server: https://).*#\1192.168.31.100:6443#g' /data/k8s/certs/bootstrap.kubeconfig

sed -ri 's#(server: https://).*#\1192.168.31.100:6443#g' /data/k8s/certs/admin.kubeconfig

sed -ri 's#(server: https://).*#\1192.168.31.100:6443#g' /data/k8s/certs/kubelet.kubeconfig

sed -ri 's#(server: https://).*#\1192.168.31.100:6443#g' /data/k8s/certs/proxy.kubeconfig

sed -ri 's#(server: https://).*#\1192.168.31.100:6443#g' ~/.kube/config

systemctl restart kubelet kube-proxy

k8s所有的node节点:

sed -ri 's#(server: https://).*#\1192.168.31.100:6443#g' /data/k8s/certs/bootstrap.kubeconfig

sed -ri 's#(server: https://).*#\1192.168.31.100:6443#g' /data/k8s/certs/kubelet.kubeconfig

sed -ri 's#(server: https://).*#\1192.168.31.100:6443#g' /data/k8s/certs/proxy.kubeconfig

systemctl restart kubelet kube-proxy4. 附加iptables规则

# haproxy

iptables -t filter -I INPUT -p tcp --dport 6443 -m comment --comment "k8s vip ports" -j ACCEPT

iptables -t filter -I INPUT -p tcp --source 192.168.31.1 --dport 10086 -m comment --comment "haproxy stats ports" -j ACCEPT

# keepalived心跳

iptables -t filter -I INPUT -p vrrp -s 192.168.31.0/24 -d 224.0.0.18 -m comment --comment "keepalived Heartbeat" -j ACCEPT三、ingress使用

1、ingress安装

环境说明:

| kubernetes版本 | nginx-ingress-controller版本 | 使用端口情况 |

|---|---|---|

| 1.18.18 | 0.45.0 | 80、443、8443 |

官方说明:

下载所需的 yaml 文件:

mkdir ~/ingress && cd ~/ingress

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.45.0/deploy/static/provider/baremetal/deploy.yaml 修改配置文件,将原本的 nodeport 修改成 clusterIP:

# 在 ingress-nginx-controller service的 svc.spec 注释掉 type: NodePort

spec:

# type: NodePort

type: ClusterIP

将容器端口映射到宿主机:

# 在 ingress-nginx-controller 容器的 deploy.spec.template.spec 添加 hostNetwork: true

spec:

hostNetwork: true

修改DNS的策略:

# 在 ingress-nginx-controller 容器的 deploy.spec.template.spec 修改 dnsPolicy

spec:

dnsPolicy: ClusterFirstWithHostNet

修改下载镜像路径:

# 在 ingress-nginx-controller 容器的 deploy.spec.template.spec.containers 修改 image 字段

containers:

- name: controller

image: jiaxzeng/nginx-ingress-controller:v0.45.0

指定 pod 调度特定节点:

# 节点添加标签

kubectl label node k8s-node02 kubernetes.io/ingress=nginx

kubectl label node k8s-node03 kubernetes.io/ingress=nginx

# 在 ingress-nginx-controller 容器的 deploy.spec.template.spec 修改 nodeSelector

nodeSelector:

kubernetes.io/ingress: nginx

$ kubectl apply -f deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

configmap/ingress-nginx-controller created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

service/ingress-nginx-controller-admission created

service/ingress-nginx-controller created

deployment.apps/ingress-nginx-controller created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

serviceaccount/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

$ kubectl -n ingress-nginx get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-tm6hb 0/1 Completed 0 21s 20.0.85.198 192.168.31.95 <none> <none>

ingress-nginx-admission-patch-64bgc 0/1 Completed 1 21s 20.0.32.136 192.168.31.103 <none> <none>

ingress-nginx-controller-656cf6c7fd-lw9dx 1/1 Running 0 21s 192.168.31.253 192.168.31.253 <none> <none>

iptables -t filter -I INPUT -p tcp -m multiport --dport 80,443,8443 -m comment --comment "nginx ingress controller ports" -j ACCEPT

iptables -t filter -I INPUT -p tcp --source 192.168.31.0/24 --dport 10254 -m comment --comment "nginx ingress metrics ports" -j ACCEPT2、ingress高可用

将原基础的 ingress-nginx 一个副本提升到多个副本。然后再提供VIP进行访问。

以下三种方式都可以实现高可用

- LoadBalancer

- nodeport + VIP

- hostpath + VIP

- 其中

LoadBalancer是在公有云上使用,不过自管集群也可以安装Metallb也可以实现LoadBalancer的方式。 Metallb的官网为 MetalLB, bare metal load-balancer for Kubernetes

这里演示 hostpath + keepalived + haproxy 的组合方式。实现高可用和高并发。

haproxy的配置文件:

$ cat /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local7 info

defaults

log global

mode tcp

option tcplog

maxconn 4096

balance roundrobin

timeout connect 5000ms

timeout client 50000ms

timeout server 50000ms

listen stats

bind *:10086

mode http

stats enable

stats uri /stats

stats auth admin:admin

stats admin if TRUE

listen nginx_igress_http

bind 192.168.31.188:80

mode tcp

server master01 192.168.31.103:80 weight 1 check inter 1000 rise 3 fall 5 send-proxy

server master02 192.168.31.79:80 weight 1 check inter 1000 rise 3 fall 5 send-proxy

listen nginx_igress_https

bind 192.168.31.188:443

mode tcp

server master01 192.168.31.103:443 weight 1 check inter 1000 rise 3 fall 5 send-proxy

server master02 192.168.31.79:443 weight 1 check inter 1000 rise 3 fall 5 send-proxy

- server: 修改主机IP和端口

- 其他配置可以保持不变,其中haproxy统计页面默认账号密码为

admin:admin send-proxy是开启 use-proxy 功能,ingress获取真实IP地址

haproxy的docker-compose:

$ cat /etc/haproxy/docker-compose.yaml

version: "3"

services:

haproxy:

container_name: haproxy

image: haproxy:2.3-alpine

volumes:

- "./haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg"

network_mode: "host"

restart: always

注意:经测试 2.4.9、2.5.0 镜像启动绑定不了低于1024端口。

启动haproxy:

docker-compose -f /etc/haproxy/docker-compose.yaml up -d

keepalived配置:

$ cat /etc/keepalived/keepalived.conf

include /etc/keepalived/keepalived_ingress.conf

$ cat /etc/keepalived/keepalived_ingress.conf

! Configuration File for keepalived

global_defs {

# 标识机器的字符串(默认:本地主机名)

router_id lb02

}

vrrp_script ingress {

# 检测脚本路径

script "/etc/keepalived/chk_ingress.sh"

# 执行检测脚本的用户

user root

# 脚本调用之间的秒数

interval 1

# 转换失败所需的次数

fall 5

# 转换成功所需的次数

rise 3

# 按此权重调整优先级

weight -50

}

vrrp_instance ingress {

# 状态是主节点还是从节点

state MASTER

# inside_network 的接口,由 vrrp 绑定。

interface eth0

# 虚拟路由id,根据该id进行组成主从架构

virtual_router_id 200

# 初始优先级

# 最后优先级权重计算方法

# (1) weight 为正数,priority - weight

# (2) weight 为负数,priority + weight

priority 200

# 加入集群的认证

authentication {

auth_type PASS

auth_pass pwd200

}

# vip 地址

virtual_ipaddress {

192.168.31.188

}

# 健康检查脚本

track_script {

ingress

}

}

keepalived检测脚本:

$ cat /etc/keepalived/chk_ingress.sh

#!/bin/bash

count=$(ss -lntup | egrep ":443|:80" | wc -l)

if [ "$count" -ge 2 ];then

# 退出状态为0,代表检查成功

exit 0

else

# 退出状态为1,代表检查不成功

exit 1

fi

$ chmod +x /etc/keepalived/chk_ingress.sh

keepalived的docker-compose:

$ cat /etc/keepalived/docker-compose.yaml

version: "3"

services:

keepalived:

container_name: keepalived

image: arcts/keepalived:1.2.2

environment:

KEEPALIVED_AUTOCONF: "false"

KEEPALIVED_DEBUG: "true"

volumes:

- "/usr/share/zoneinfo/Asia/Shanghai:/etc/localtime"

- ".:/etc/keepalived"

cap_add:

- NET_ADMIN

network_mode: "host"

restart: always

启动keepalived:

docker-compose -f /etc/keepalived/docker-compose.yaml up -d

# 在 deploy 添加或修改replicas

replicas: 2

# 在 deploy.spec.template.spec 下面添加affinity

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

topologyKey: kubernetes.io/hostname

需要重启ingress-nginx-controller容器。

iptables -I INPUT -p tcp -m multiport --dports 80,443,8443 -m comment --comment "nginx ingress controller external ports" -j ACCEPT

iptables -I INPUT -p tcp --dport 10086 -m comment --comment "haproxy stats ports" -j ACCEPT3、ingress基本使用

创建测试应用:

cat > nginx.yaml <<-EOF

apiVersion: apps/v1

kind: Deployment

metadata:

name: my-nginx

spec:

selector:

matchLabels:

app: my-nginx

template:

metadata:

labels:

app: my-nginx

spec:

containers:

- name: my-nginx

image: nginx

resources:

limits:

memory: "200Mi"

cpu: "500m"

requests:

memory: "100Mi"

cpu: "100m"

ports:

- name: web

containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: nginx-service

spec:

selector:

app: my-nginx

ports:

- port: 80

targetPort: web

EOF

$ kubectl apply -f nginx.yaml

deployment.apps/my-nginx created

service/nginx-service created

$ kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx-759cf4d696-vkj4q 1/1 Running 0 4m10s 20.0.85.199 k8s-node01 <none> <none>

$ cat > nginx-ingress.yaml <<-EOF

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: nginx-ingress

labels:

name: nginx-ingress

spec:

ingressClassName: nginx

rules:

- host: www.ecloud.com

http:

paths:

- path: /

backend:

serviceName: nginx-service

servicePort: 80

EOF

$ kubectl apply -f nginx-ingress.yaml

ingress.extensions/nginx-ingress created

$ kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

nginx-ingress <none> www.ecloud.com 192.168.31.103,192.168.31.79 80 21s

$ echo '192.168.31.103 www.ecloud.com' >> /etc/hosts

$ curl www.ecloud.com

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

可以通过 keepalived + LVS 高可用,使用 VIP 做域名解析。这里就不实现了。

4、Rewrite配置

由于域名和公网费用昂贵。通常是只有一个域名,但是有多个应用需要上线。通常都会域名+应用名称(www.ecloud.com/app)。原本应用已经开发好的了,访问是在 / 。那就需要改写上下文来实现。

原应用演示:

$ kubectl get svc app demo

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

app ClusterIP 10.183.0.36 <none> 8001/TCP 6m13s

demo ClusterIP 10.183.0.37 <none> 8002/TCP 2m47s

$ curl 10.183.0.36:8001

app

$ curl 10.183.0.37:8002/test/demo/

demo

现在有两个应用分别是 app 、demo。分别的访问路径为:/、/test/demo。现在只有一个域名是 www.ecloud.com 且需要把两个网页都放在同一个域名访问。

1)添加上下文路径

现在的目标是把 app 应用,可以通过 www.ecloud.com/app/ 来展示。

创建ingress:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: app

namespace: default

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /$2 # 真实到服务的上下文

spec:

ingressClassName: nginx

rules:

- host: www.ecloud.com

http:

paths:

- path: /app(/|)(.*) # 浏览器访问上下文

backend:

serviceName: app

servicePort: 8001

$ curl www.ecloud.com/app/

app

$ curl www.ecloud.com/app/index.html

app

现在的目标是把 demo 应用,可以通过 www.ecloud.com/demo/ 来展示。

创建ingress:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: demo

namespace: default

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /test/demo/$2 # 真实到服务的上下文

spec:

ingressClassName: nginx

rules:

- host: www.ecloud.com

http:

paths:

- path: /demo(/|)(.*) # 浏览器访问上下文

backend:

serviceName: demo

servicePort: 8002

$ curl www.ecloud.com/demo

demo

$ curl www.ecloud.com/demo/

demo

$ curl www.ecloud.com/demo/index.html

demo

应该给应用设置一个 app-root 的注解,这样当我们访问主域名的时候会自动跳转到我们指定的 app-root 目录下面。如下所示:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: demo

namespace: default

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /test/demo/$2 # 真实到服务的上下文

nginx.ingress.kubernetes.io/app-root: /demo/ # 这里写浏览器访问的路径

spec:

ingressClassName: nginx

rules:

- host: www.ecloud.com

http:

paths:

- path: /demo(/|)(.*) # 浏览器访问上下文

backend:

serviceName: demo

servicePort: 8002

验证:

$ curl www.ecloud.com

<html>

<head><title>302 Found</title></head>

<body>

<center><h1>302 Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

# nginx-ingress-controller 的日志

192.168.31.103 - - [16/Sep/2021:08:22:39 +0000] "GET / HTTP/1.1" 302 138 "-" "curl/7.29.0" 78 0.000 [-] [] - - - - 5ba35f028edbd48ff316bd544ae60746

$ curl www.ecloud.com -L

demo

# nginx-ingress-controller 的日志

192.168.31.103 - - [16/Sep/2021:08:22:56 +0000] "GET / HTTP/1.1" 302 138 "-" "curl/7.29.0" 78 0.000 [-] [] - - - - 4ffa0129b9fab80b9e904ad9716bd8ca

192.168.31.103 - - [16/Sep/2021:08:22:56 +0000] "GET /demo/ HTTP/1.1" 200 5 "-" "curl/7.29.0" 83 0.003 [default-demo-8002] [] 20.0.32.159:8002 5 0.002 200 3d17d7cb25f3eacc7eb848955a28675f

不能定义默认的 ingress.spec.backend 字段。否则会发生不符合预期的跳转。

模拟定义 ingress.spec.backend 字段:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: app

namespace: default

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /$2

spec:

ingressClassName: nginx

backend: # 设置默认的backend

serviceName: app

servicePort: 8001

rules:

- host: www.ecloud.com

http:

paths:

- path: /app(/|$)(.*)

backend:

serviceName: app

servicePort: 8001

查看ingress资源情况:

$ kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

app nginx www.ecloud.com 192.168.31.79 80 20m

$ kubectl describe ingress app

Name: app

Namespace: default

Address: 192.168.31.79

Default backend: app:8001 (20.0.32.157:8001)

Rules:

Host Path Backends

---- ---- --------

www.ecloud.com

/app(/|$)(.*) app:8001 (20.0.32.157:8001)

Annotations: nginx.ingress.kubernetes.io/rewrite-target: /$2

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 7m52s (x5 over 21m) nginx-ingress-controller Scheduled for sync

测试访问:

$ curl www.ecloud.com

app

$ curl www.ecloud.com/fskl/fskf/ajfk

app

发现不符合 /app 的上下文也可以匹配到 / 的页面,这个是不符合我们的预期的。

查看nginx的配置文件:

$ kubectl -n ingress-nginx exec -it ingress-nginx-controller-6c979c5b47-bpwf6 -- bash

$ vi /etc/nginx/nginx.conf

# 找到 `server_name` 为设置的域名,找到为 `location ~* "^/"`

# 没有匹配到 `/app` 的上下文,则进入该location。

# 该location读取app应用的 `/` 。所以访问 `/fskl/fskf/ajfk` 都可以访问到 `/` 的页面

# 原本我们的预期是访问错了上下文,应该是报 `404` 的,而不是访问主域名页面

location ~* "^/" {

set $namespace "default";

set $ingress_name "app";

set $service_name "app";

set $service_port "8001";

set $location_path "/"

...

}

虽然没有定义默认的 ingress.spec.backend 字段。在 kubectl describe ingress 查看ingress详情时,会有 Default backend: default-http-backend:80 (<error: endpoints "default-http-backend" not found>) 提示,但是影响正常使用。

5、tls安全路由

互联网越来越严格,很多网站都配置了https的协议了。这里聊一下ingress的tls安全路由,分为以下两种方式:

- 配置安全的路由服务

- 配置HTTPS双向认证

生成一个证书文件tls.crt和一个私钥文件tls.key:

$ openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=foo.ecloud.com"

创建密钥:

$ kubectl create secret tls app-v1-tls --key tls.key --cert tls.crt

创建一个安全的Nginx Ingress服务:

$ cat <<EOF | kubectl create -f -

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: app-v1-tls

spec:

ingressClassName: nginx

tls:

- hosts:

- foo.ecloud.com

secretName: app-v1-tls

rules:

- host: foo.ecloud.com

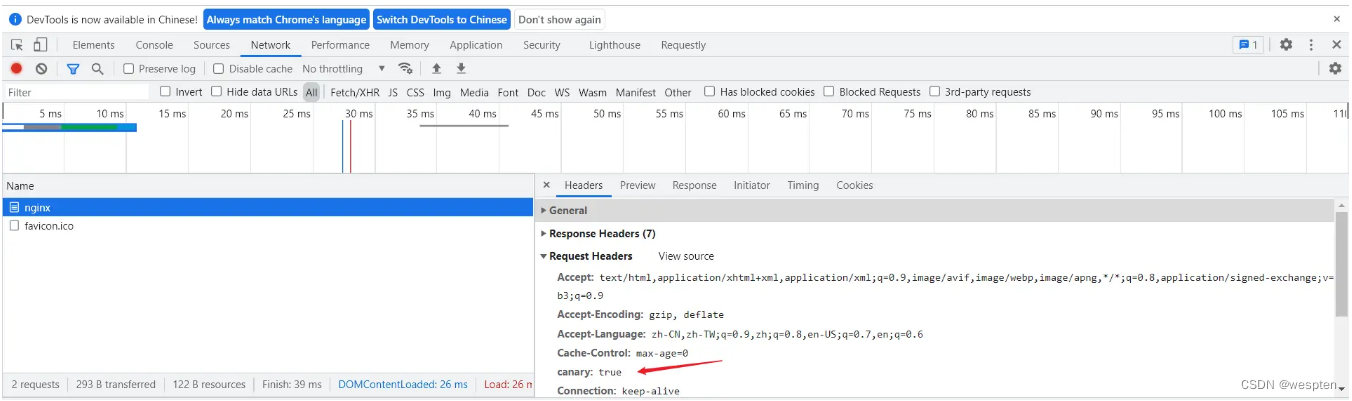

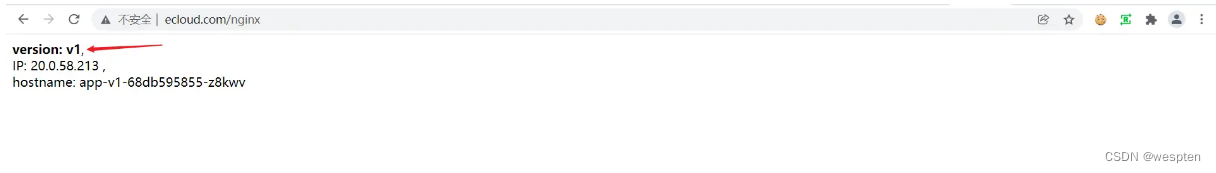

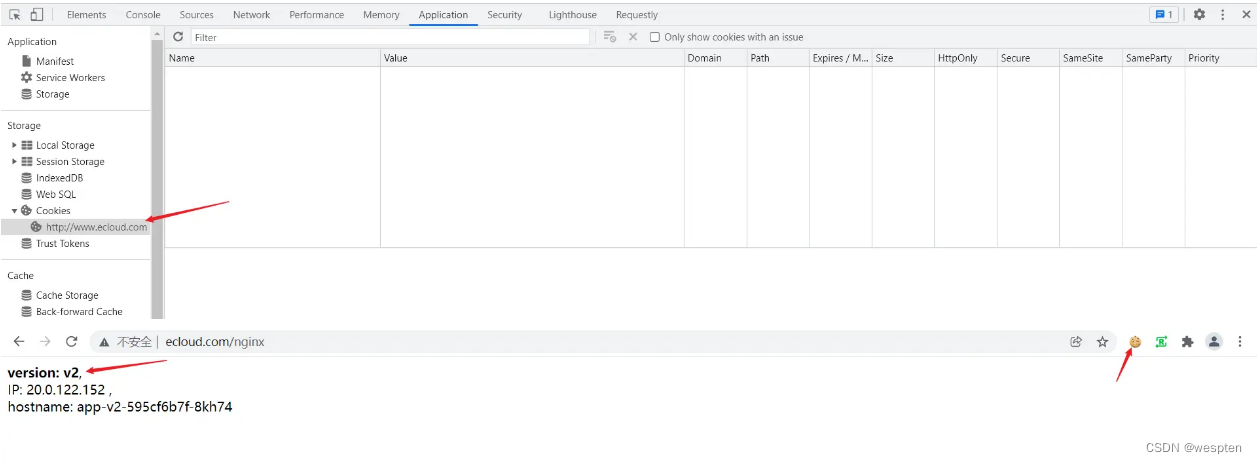

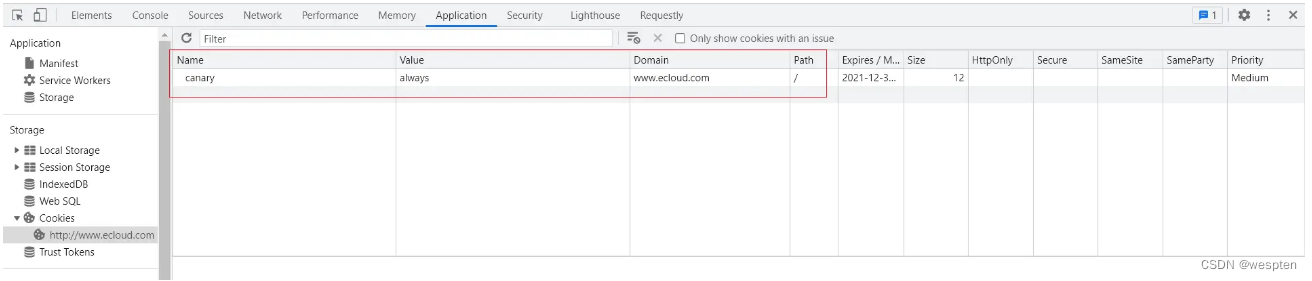

http: