1. Overview

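The video_loopback demo showed how to create streams starting from the call layer. Next, let's look at the video capture, encoding, and sending flow. This article only traces the flow roughly; specific details will be analyzed in later posts.

WebRTC is updated very quickly. Modules get reorganized and new processing steps are added, so the flow has to be re-read every so often. It is also because my memory is not great: I forget things right after reading them.

The code excerpts keep only the main functions; a lot has been omitted.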

2. Tracing the code

2.1 Video capture stage

Data flow

Main call flow

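2.1.1 Unify the input format to YUV420

VideoCaptureImpl::IncomingFrame converts every captured frame (rotating it if needed) to I420, i.e. planar YUV420, via libyuv, regardless of the camera's native format.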

```cpp
int32_t VideoCaptureImpl::IncomingFrame(uint8_t* videoFrame,
                                        size_t videoFrameLength,
                                        const VideoCaptureCapability& frameInfo,
                                        int64_t captureTime /*=0*/) {
  // ......

  // Rotate and convert the frame to the unified I420 (YUV420) format
  // by calling libyuv.
  const int conversionResult = libyuv::ConvertToI420(
      videoFrame, videoFrameLength, buffer.get()->MutableDataY(),
      buffer.get()->StrideY(), buffer.get()->MutableDataU(),
      buffer.get()->StrideU(), buffer.get()->MutableDataV(),
      buffer.get()->StrideV(), 0, 0,  // No Cropping
      width, height, target_width, target_height, rotation_mode,
      ConvertVideoType(frameInfo.videoType));

  // ......

  // Build the VideoFrame and set its timestamps. If libyuv already rotated
  // the pixels (apply_rotation), no rotation is left pending on the frame.
  VideoFrame captureFrame =
      VideoFrame::Builder()
          .set_video_frame_buffer(buffer)
          .set_timestamp_rtp(0)
          .set_timestamp_ms(rtc::TimeMillis())
          .set_rotation(!apply_rotation ? _rotateFrame : kVideoRotation_0)
          .build();
  captureFrame.set_ntp_time_ms(captureTime);

  // Deliver the frame downstream.
  DeliverCapturedFrame(captureFrame);

  return 0;
}
```
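To make "unify to I420" concrete, here is a minimal sketch of what such a conversion does for one common camera format, NV12 (a full Y plane followed by interleaved U/V samples). This is not libyuv: `I420Frame`, `ChromaDim` and `Nv12ToI420` are hypothetical names, and the sketch assumes tightly packed planes with no row padding (stride equal to width), whereas libyuv::ConvertToI420 also handles strides, cropping and rotation.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical container (not a WebRTC/libyuv type): an I420 frame with
// tightly packed planes, i.e. stride == plane width.
struct I420Frame {
  int width = 0;
  int height = 0;
  std::vector<uint8_t> y;  // width * height luma samples
  std::vector<uint8_t> u;  // chroma, subsampled 2x in both directions
  std::vector<uint8_t> v;
};

// 4:2:0 chroma size, rounded up so odd dimensions keep their last column/row.
int ChromaDim(int luma_dim) { return (luma_dim + 1) / 2; }

// Hypothetical NV12 -> I420 conversion. Assumes the NV12 buffer is tightly
// packed: a width*height Y plane followed by ChromaDim(width) *
// ChromaDim(height) interleaved UV pairs.
I420Frame Nv12ToI420(const uint8_t* src, int width, int height) {
  assert(src != nullptr && width > 0 && height > 0);
  I420Frame out;
  out.width = width;
  out.height = height;
  const size_t y_size = static_cast<size_t>(width) * height;
  const size_t c_size =
      static_cast<size_t>(ChromaDim(width)) * ChromaDim(height);
  // The Y plane is identical in both formats: copy it as is.
  out.y.assign(src, src + y_size);
  // De-interleave the UV pairs into separate U and V planes.
  const uint8_t* uv = src + y_size;
  out.u.resize(c_size);
  out.v.resize(c_size);
  for (size_t i = 0; i < c_size; ++i) {
    out.u[i] = uv[2 * i];
    out.v[i] = uv[2 * i + 1];
  }
  return out;
}
```

For a 2x2 frame, NV12 is 4 luma bytes plus one UV pair; the I420 result has a 4-byte Y plane and 1-byte U and V planes.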


2.1.2 Video scaling

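TestVideoCapturer::OnFrame checks whether the captured YUV frame has the configured resolution. The camera may not support the resolution set for encoding, so the frame may need to be scaled or cropped.

```cpp
void TestVideoCapturer::OnFrame(const VideoFrame& original_frame) {
  // ......

  // Ask the video adapter for the cropped and output (scaled) sizes.
  if (!video_adapter_.AdaptFrameResolution(
          frame.width(), frame.height(), frame.timestamp_us() * 1000,
          &cropped_width, &cropped_height, &out_width, &out_height)) {
    // Drop the frame in order to respect the frame-rate constraint.
    return;
  }

  // ......

  if (out_height != frame.height() || out_width != frame.width()) {
    // ...... (the scaled buffer and new_frame_builder are created here)

    if (frame.has_update_rect()) {
      // Scale the update rect (the changed region) to the output size.
      VideoFrame::UpdateRect new_rect = frame.update_rect().ScaleWithFrame(
          frame.width(), frame.height(), 0, 0, frame.width(), frame.height(),
          out_width, out_height);
      new_frame_builder.set_update_rect(new_rect);
    }

    broadcaster_.OnFrame(new_frame_builder.build());
  } else {
    // No adaptations needed, just return the frame as is.
    broadcaster_.OnFrame(frame);
  }
}
```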
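The adapter's job is to pick an output size the rest of the pipeline can handle. The sketch below is a deliberately simplified, hypothetical stand-in: `AdaptResolution` and `NeedsScaling` are not WebRTC functions, and the real VideoAdapter uses finer scale steps than halving and also enforces frame rate. It halves the resolution until the frame fits a pixel budget and keeps both dimensions even so the 4:2:0 chroma planes stay aligned.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <utility>

// Hypothetical, simplified resolution adapter; NOT cricket::VideoAdapter.
// Halves width and height until width*height fits into max_pixels, then
// rounds both down to even numbers (minimum 2) for 4:2:0 chroma.
std::pair<int, int> AdaptResolution(int in_width, int in_height,
                                    int64_t max_pixels) {
  assert(in_width > 0 && in_height > 0 && max_pixels > 0);
  int w = in_width;
  int h = in_height;
  while (static_cast<int64_t>(w) * h > max_pixels && w > 2 && h > 2) {
    w /= 2;
    h /= 2;
  }
  w = std::max(2, w & ~1);
  h = std::max(2, h & ~1);
  return {w, h};
}

// Mirrors the check in TestVideoCapturer::OnFrame: scale only if the output
// size differs from the input size.
bool NeedsScaling(int in_width, int in_height, std::pair<int, int> out) {
  return out.first != in_width || out.second != in_height;
}
```

With a 1280x720 budget, a 1920x1080 input comes out as 960x540 and needs scaling, while a 1280x720 input passes through untouched.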

2.1.3 YUV distribution

A YUV frame may be distributed to several sinks, for example local rendering and encoding.

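```cpp
void VideoBroadcaster::OnFrame(const webrtc::VideoFrame& frame) {
  // ......
  bool current_frame_was_discarded = false;
  // Deliver the frame to every registered sink.
  for (auto& sink_pair : sink_pairs()) {
    // The sink expects rotation to be applied already, but this frame still
    // has a pending rotation: discard it for this sink.
    if (sink_pair.wants.rotation_applied &&
        frame.rotation() != webrtc::kVideoRotation_0) {
      // ...... (sets current_frame_was_discarded = true)
      continue;
    }

    // The sink wants black frames, e.g. when the track is disabled.
    if (sink_pair.wants.black_frames) {
      webrtc::VideoFrame black_frame =
          webrtc::VideoFrame::Builder()
              .set_video_frame_buffer(
                  GetBlackFrameBuffer(frame.width(), frame.height()))
              .set_rotation(frame.rotation())
              .set_timestamp_us(frame.timestamp_us())
              .set_id(frame.id())
              .build();
      sink_pair.sink->OnFrame(black_frame);
    } else if (!previous_frame_sent_to_all_sinks_ && frame.has_update_rect()) {
      // The previous frame did not reach every sink, so the update rect is
      // not reliable for all of them: clear it before delivering.
      webrtc::VideoFrame copy = frame;
      copy.clear_update_rect();
      sink_pair.sink->OnFrame(copy);
    } else {
      // Normal delivery.
      sink_pair.sink->OnFrame(frame);
    }
  }
  previous_frame_sent_to_all_sinks_ = !current_frame_was_discarded;
}
```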
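A stripped-down model of this fan-out may help. The sketch uses hypothetical `Frame`, `SinkWants` and `SinkEntry` types (not the real webrtc::VideoFrame or rtc::VideoSinkWants API) and keeps only two of the wants seen above: skip a sink that requires applied rotation when the frame still has a pending rotation, and send a black frame to a sink that asks for one. The return value plays the role of previous_frame_sent_to_all_sinks_.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical simplified types, not the real webrtc::VideoFrame /
// rtc::VideoSinkWants.
struct Frame {
  int width;
  int height;
  int rotation;  // degrees of pending rotation; 0 means none
  bool is_black;
};

struct SinkWants {
  bool rotation_applied = false;  // sink cannot handle pending rotation
  bool black_frames = false;      // sink wants black frames (track disabled)
};

struct SinkEntry {
  SinkWants wants;
  std::function<void(const Frame&)> on_frame;
};

// Fans one frame out to every sink according to its wants. Returns true if
// no sink had to skip the frame.
bool Broadcast(const Frame& frame, const std::vector<SinkEntry>& sinks) {
  bool discarded = false;
  for (const SinkEntry& sink : sinks) {
    assert(sink.on_frame);  // every registered sink needs a callback
    if (sink.wants.rotation_applied && frame.rotation != 0) {
      discarded = true;  // this sink would show an unrotated frame: skip it
      continue;
    }
    if (sink.wants.black_frames) {
      // Same size and rotation as the input, but black content.
      sink.on_frame(Frame{frame.width, frame.height, frame.rotation, true});
    } else {
      sink.on_frame(frame);
    }
  }
  return !discarded;
}
```

A renderer and an encoder sink can thus receive different versions of the same captured frame.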

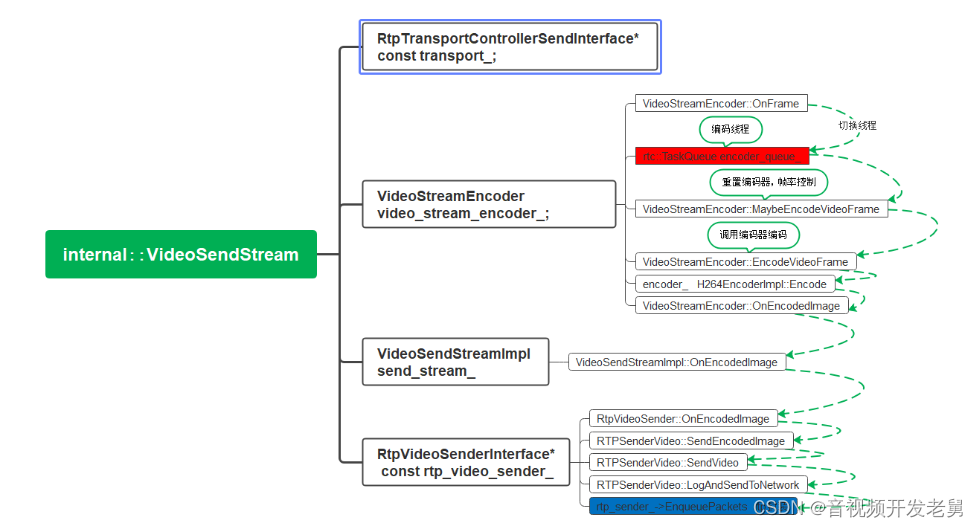

2.2 Video encoding flow

Data flow

```
VideoStreamEncoder::OnFrame(const VideoFrame& video_frame)
   |
   |   switch to the encoding thread
VideoStreamEncoder::encoder_queue_.PostTask()
   |
   |   the encoder is initialized in this function
VideoStreamEncoder::MaybeEncodeVideoFrame()
   |-- VideoStreamEncoder::ReconfigureEncoder()   (only when reconfiguration is pending)
   |-- VideoStreamEncoder::EncodeVideoFrame()
   |
H264EncoderImpl::Encode()
   |
VideoStreamEncoder::OnEncodedImage()
   |
VideoSendStreamImpl::OnEncodedImage()
   |
RtpVideoSender::OnEncodedImage()
   |
RTPSenderVideo::SendEncodedImage()
   |
RTPSenderVideo::LogAndSendToNetwork()
   |
   |   enter the RTP packet sending flow
RTPSender::EnqueuePackets()
   |
PacedSender::EnqueuePackets()
```

2.2.1 The video stream receives the YUV frame and switches to the encoding thread

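```cpp
void VideoStreamEncoder::OnFrame(const VideoFrame& video_frame) {
  VideoFrame incoming_frame = video_frame;
  // ...... (now = current time from clock_)

  // Set the NTP capture time.
  int64_t capture_ntp_time_ms;
  if (video_frame.ntp_time_ms() > 0) {
    capture_ntp_time_ms = video_frame.ntp_time_ms();
  } else if (video_frame.render_time_ms() != 0) {
    capture_ntp_time_ms = video_frame.render_time_ms() + delta_ntp_internal_ms_;
  } else {
    capture_ntp_time_ms = now.ms() + delta_ntp_internal_ms_;
  }
  incoming_frame.set_ntp_time_ms(capture_ntp_time_ms);

  // Set the RTP timestamp.
  // Convert NTP time, in ms, to RTP timestamp.
  const int kMsToRtpTimestamp = 90;
  incoming_frame.set_timestamp(
      kMsToRtpTimestamp * static_cast<uint32_t>(incoming_frame.ntp_time_ms()));

  // Drop the frame if its capture time is not later than the previous one.
  if (incoming_frame.ntp_time_ms() <= last_captured_timestamp_) {
    // We don't allow the same capture time for two frames, drop this one.
    return;
  }

  // ......
  // Remember this capture time for the next comparison.
  last_captured_timestamp_ = incoming_frame.ntp_time_ms();
  // ......

  // Switch to the encoding thread.
  ++posted_frames_waiting_for_encode_;
  encoder_queue_.PostTask(
      [this, incoming_frame, post_time_us, log_stats]() {
        // fetch_sub returns the count before decrementing.
        const int posted_frames_waiting_for_encode =
            posted_frames_waiting_for_encode_.fetch_sub(1);
        // ...... (cwnd_frame_drop: drop requested by congestion-window pushback)

        // Encode only if this is the only frame waiting; otherwise a newer
        // frame is already queued and this one is dropped. When the CPU
        // cannot keep up, older YUV frames are dropped and only the latest
        // one is encoded.
        if (posted_frames_waiting_for_encode == 1 && !cwnd_frame_drop) {
          MaybeEncodeVideoFrame(incoming_frame, post_time_us);
        }
      });
}
```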
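The RTP timestamp conversion is plain 32-bit arithmetic: video RTP uses a 90 kHz clock, so 1 ms is 90 ticks, and the product wraps modulo 2^32 just like the RTP timestamp field. The sketch below reproduces the expression from the excerpt as a standalone function, together with the strictly-increasing capture-time check; `NtpMsToRtpTimestamp` and `IsNewerCaptureTime` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Video RTP timestamps use a 90 kHz clock: 90 ticks per millisecond.
constexpr int kMsToRtpTimestamp = 90;

// Same expression as in VideoStreamEncoder::OnFrame. The int constant is
// converted to uint32_t, so the multiplication wraps modulo 2^32, which is
// exactly how the 32-bit RTP timestamp field wraps.
uint32_t NtpMsToRtpTimestamp(int64_t ntp_time_ms) {
  assert(ntp_time_ms >= 0);
  return kMsToRtpTimestamp * static_cast<uint32_t>(ntp_time_ms);
}

// Capture times must be strictly increasing; equal or older frames are dropped.
bool IsNewerCaptureTime(int64_t ntp_time_ms, int64_t last_captured_ms) {
  return ntp_time_ms > last_captured_ms;
}
```

For example, 1000 ms maps to 90000 ticks. Around the wrap point (47721859 ms maps to 14), consecutive milliseconds still differ by 90 modulo 2^32, which is what receivers rely on.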

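- 1. Set the NTP capture time

- 2. Set the RTP timestamp (90 kHz clock, i.e. 90 ticks per millisecond)

- 3. Drop the frame if its capture time is not later than the previous frame's

- 4. Switch to the encoding thread (post a task to encoder_queue_)

- 5. Check how many frames are waiting to be encoded: if more than one, drop the older ones and encode only the latest, which avoids a backlog when CPU usage is too high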
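The "encode only the newest frame" logic in step 5 hinges on a counter that is incremented on the capture thread and decremented with fetch_sub on the encoder queue. The sketch below models it single-threaded: the hypothetical `FakeTaskQueue` (a std::deque of tasks) stands in for encoder_queue_, and the hypothetical `LatestFrameEncoder` records which frame ids would be encoded. In WebRTC the two sides run on different threads, which is why the counter is a std::atomic.

```cpp
#include <atomic>
#include <cassert>
#include <deque>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical stand-in for the encoder task queue: tasks run later, in order.
class FakeTaskQueue {
 public:
  void PostTask(std::function<void()> task) { tasks_.push_back(std::move(task)); }
  void RunAll() {
    while (!tasks_.empty()) {
      std::function<void()> task = std::move(tasks_.front());
      tasks_.pop_front();
      task();
    }
  }

 private:
  std::deque<std::function<void()>> tasks_;
};

class LatestFrameEncoder {
 public:
  explicit LatestFrameEncoder(FakeTaskQueue* queue) : queue_(queue) {
    assert(queue_ != nullptr);
  }

  // "Capture thread": count the frame, then hop to the encoder queue.
  void OnFrame(int frame_id) {
    ++posted_frames_waiting_for_encode_;
    queue_->PostTask([this, frame_id] {
      // fetch_sub returns the value before decrementing; 1 means no newer
      // frame has been posted since this one, so encode it.
      if (posted_frames_waiting_for_encode_.fetch_sub(1) == 1) {
        encoded_.push_back(frame_id);
      } else {
        ++dropped_;  // a newer frame is already queued
      }
    });
  }

  const std::vector<int>& encoded() const { return encoded_; }
  int dropped() const { return dropped_; }

 private:
  FakeTaskQueue* queue_;
  std::atomic<int> posted_frames_waiting_for_encode_{0};
  std::vector<int> encoded_;
  int dropped_ = 0;
};
```

If three frames are posted before the queue gets a chance to run, only the third is encoded and the first two are dropped.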

2.2.2 Encoder configuration and frame-rate control

```cpp
void VideoStreamEncoder::MaybeEncodeVideoFrame(const VideoFrame& video_frame,
                                               int64_t time_when_posted_us) {
  // ...... (now_ms: current time; framerate_fps: measured input frame rate)

  // Encoder configuration: reconfigure when needed, otherwise refresh the
  // rate settings every kParameterUpdateIntervalMs.
  if (pending_encoder_reconfiguration_) {
    ReconfigureEncoder();
    last_parameters_update_ms_.emplace(now_ms);
  } else if (!last_parameters_update_ms_ ||
             now_ms - *last_parameters_update_ms_ >=
                 kParameterUpdateIntervalMs) {
    if (last_encoder_rate_settings_) {
      EncoderRateSettings new_rate_settings = *last_encoder_rate_settings_;
      new_rate_settings.rate_control.framerate_fps =
          static_cast<double>(framerate_fps);
      SetEncoderRates(UpdateBitrateAllocation(new_rate_settings));
    }
    last_parameters_update_ms_.emplace(now_ms);
  }

  // Encoder paused: keep only the latest non-native frame as pending_frame_;
  // native frames are not held and are dropped.
  if (EncoderPaused()) {
    // Storing references to a native buffer risks blocking frame capture.
    if (video_frame.video_frame_buffer()->type() !=
        VideoFrameBuffer::Type::kNative) {
      if (pending_frame_)
        TraceFrameDropStart();
      pending_frame_ = video_frame;
      pending_frame_post_time_us_ = time_when_posted_us;
    } else {
      // Ensure that any previously stored frame is dropped.
      pending_frame_.reset();
      TraceFrameDropStart();
      accumulated_update_rect_.Union(video_frame.update_rect());
      accumulated_update_rect_is_valid_ &= video_frame.has_update_rect();
    }
    return;
  }

  pending_frame_.reset();

  // Frame-rate control: the frame dropper may drop this frame to stay
  // within the target bitrate.
  frame_dropper_.Leak(framerate_fps);
  const bool frame_dropping_enabled =
      !force_disable_frame_dropper_ &&
      !encoder_info_.has_trusted_rate_controller;
  frame_dropper_.Enable(frame_dropping_enabled);
  if (frame_dropping_enabled && frame_dropper_.DropFrame()) {
    OnDroppedFrame(
        EncodedImageCallback::DropReason::kDroppedByMediaOptimizations);
    accumulated_update_rect_.Union(video_frame.update_rect());
    accumulated_update_rect_is_valid_ &= video_frame.has_update_rect();
    return;
  }

  // Encode.
  EncodeVideoFrame(video_frame, time_when_posted_us);
}
```

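- 1. Reconfigure the encoder if needed (otherwise refresh the rate settings periodically)

- 2. Drop frames while the encoder is paused, i.e. when the target bitrate has dropped to zero (for example, the network is down or the pacer queue has grown too long)

- 3. Frame-rate control: unless the encoder reports a trusted rate controller, the frame dropper drops frames when the encoded output exceeds the target bitrate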
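The idea behind this kind of frame dropper is a leaky bucket: encoded bits fill the bucket, the target bitrate drains it once per input frame, and frames are dropped while it is over a limit. The sketch below is a hypothetical, much simplified `SimpleFrameDropper`, not webrtc::FrameDropper (which has more state, e.g. for key frames); only the method names Leak and DropFrame echo the excerpt, and the threshold parameter is an assumption.

```cpp
#include <cassert>

// Hypothetical, simplified leaky-bucket frame dropper (NOT webrtc::FrameDropper).
class SimpleFrameDropper {
 public:
  SimpleFrameDropper(double target_bps, double max_bucket_bits)
      : target_bps_(target_bps), max_bucket_bits_(max_bucket_bits) {
    assert(target_bps_ > 0 && max_bucket_bits_ > 0);
  }

  // Once per input frame: drain one frame interval's worth of budget.
  void Leak(double input_fps) {
    assert(input_fps > 0);
    bucket_bits_ -= target_bps_ / input_fps;
    if (bucket_bits_ < 0) bucket_bits_ = 0;
  }

  // After encoding: add the encoded frame's actual size.
  void Fill(double frame_bits) { bucket_bits_ += frame_bits; }

  // Drop the next frame while the bucket is over its limit.
  bool DropFrame() const { return bucket_bits_ > max_bucket_bits_; }

  double bucket_bits() const { return bucket_bits_; }

 private:
  double target_bps_;
  double max_bucket_bits_;
  double bucket_bits_ = 0;
};
```

At 300 kbps and 30 fps each Leak drains 10000 bits, so after one oversized 50000-bit frame the next few frames are dropped until the bucket is back under the limit. As in MaybeEncodeVideoFrame, the order per frame is Leak first, then DropFrame.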

2.2.3 Calling the encoder

```cpp
void VideoStreamEncoder::EncodeVideoFrame(const VideoFrame& video_frame,
                                          int64_t time_when_posted_us) {
  // ......

  // Crop or scale the frame (this branch runs only when
  // crop_width_ > 0 || crop_height_ > 0).
  if (crop_width_ < 4 && crop_height_ < 4) {
    // The difference is small: crop evenly from both sides, without scaling.
    cropped_buffer = video_frame.video_frame_buffer()->CropAndScale(
        crop_width_ / 2, crop_height_ / 2, cropped_width, cropped_height,
        cropped_width, cropped_height);
    update_rect.offset_x -= crop_width_ / 2;
    update_rect.offset_y -= crop_height_ / 2;
    update_rect.Intersect(
        VideoFrame::UpdateRect{0, 0, cropped_width, cropped_height});
  } else {
    // The difference is large: scale instead.
    cropped_buffer = video_frame.video_frame_buffer()->Scale(cropped_width,
                                                             cropped_height);
  }

  // ......

  // Call the encoder.
  const int32_t encode_status = encoder_->Encode(out_frame, &next_frame_types_);
  was_encode_called_since_last_initialization_ = true;
}
```

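- 1. Crop or scale the image: if the difference is under 4 pixels in both dimensions, crop it around the center; otherwise scale it

- 2. Call the encoder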
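The crop-or-scale decision and the update-rect bookkeeping can be sketched on their own. Here crop_w/crop_h stand for crop_width_/crop_height_, which, as far as I can tell, hold how many pixels the input frame exceeds the encoder resolution. `Adjust`, `ChooseAdjustment`, `Rect` and `CropUpdateRect` are hypothetical names, not WebRTC API.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical rectangle type: top-left corner plus size.
struct Rect {
  int x, y, w, h;
};

enum class Adjust { kNone, kCenterCrop, kScale };

// Mirrors the branch in EncodeVideoFrame: nothing to do if the sizes match,
// crop when the excess is under 4 pixels in both dimensions, else scale.
Adjust ChooseAdjustment(int crop_w, int crop_h) {
  assert(crop_w >= 0 && crop_h >= 0);
  if (crop_w == 0 && crop_h == 0) return Adjust::kNone;
  if (crop_w < 4 && crop_h < 4) return Adjust::kCenterCrop;
  return Adjust::kScale;
}

// Shift the update rect by the crop offset (half the excess on each axis)
// and clip it to the cropped frame, like the offset/Intersect code above.
Rect CropUpdateRect(Rect r, int crop_w, int crop_h, int cropped_w,
                    int cropped_h) {
  r.x -= crop_w / 2;
  r.y -= crop_h / 2;
  const int x0 = std::max(r.x, 0);
  const int y0 = std::max(r.y, 0);
  const int x1 = std::min(r.x + r.w, cropped_w);
  const int y1 = std::min(r.y + r.h, cropped_h);
  if (x1 <= x0 || y1 <= y0) return Rect{0, 0, 0, 0};  // no overlap left
  return Rect{x0, y0, x1 - x0, y1 - y0};
}
```

For example, a 1282x722 input for a 1280x720 encoder gives crop_w = crop_h = 2, so the frame is cropped by one pixel on each side instead of being rescaled.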

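2.2.4 Encode-complete callback and QP analysis

When encoding finishes, the encoder calls back VideoStreamEncoder::OnEncodedImage.

- 1. QP analysis: if the encoder did not report a QP (qp_ < 0), it is parsed from the encoded bitstream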

```cpp
EncodedImageCallback::Result VideoStreamEncoder::OnEncodedImage(
    const EncodedImage& encoded_image,
    const CodecSpecificInfo* codec_specific_info) {
  // ......
  frame_encode_metadata_writer_.FillTimingInfo(spatial_idx, &image_copy);
  frame_encode_metadata_writer_.UpdateBitstream(codec_specific_info,
                                                &image_copy);

  VideoCodecType codec_type = codec_specific_info
                                  ? codec_specific_info->codecType
                                  : VideoCodecType::kVideoCodecGeneric;

  // QP analysis.
  if (image_copy.qp_ < 0 && qp_parsing_allowed_) {
    // Parse encoded frame QP if that was not provided by encoder.
    image_copy.qp_ = qp_parser_
                         .Parse(codec_type, spatial_idx, image_copy.data(),
                                image_copy.size())
                         .value_or(-1);
  }

  // Report encoding statistics.
  encoder_stats_observer_->OnSendEncodedImage(image_copy, codec_specific_info);

  // Hand the encoded image on for sending.
  EncodedImageCallback::Result result =
      sink_->OnEncodedImage(image_copy, codec_specific_info);
  // ......
}
```
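Parsing the QP means reading the slice header. For H.264, the slice QP is 26 + pic_init_qp_minus26 (from the PPS) + slice_qp_delta (from the slice header), and both fields are signed Exp-Golomb coded. The sketch below shows only these two building blocks: an Exp-Golomb bit reader and the QP formula. `BitReader` and `SliceQp` are hypothetical helpers; a real parser must also locate NAL units, remove emulation-prevention bytes and walk all the slice-header fields that precede slice_qp_delta.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical MSB-first bit reader (not a WebRTC class).
class BitReader {
 public:
  explicit BitReader(std::vector<uint8_t> data) : data_(std::move(data)) {}

  bool ReadBit(uint32_t* bit) {
    if (pos_ >= data_.size() * 8) return false;  // out of data
    *bit = (data_[pos_ / 8] >> (7 - pos_ % 8)) & 1u;
    ++pos_;
    return true;
  }

  // ue(v): n leading zero bits, a 1, then n info bits; value = 2^n - 1 + info.
  bool ReadUe(uint32_t* value) {
    int leading_zeros = 0;
    uint32_t bit = 0;
    for (;;) {
      if (!ReadBit(&bit)) return false;
      if (bit == 1) break;
      if (++leading_zeros > 31) return false;  // would overflow 32 bits
    }
    uint32_t info = 0;
    for (int i = 0; i < leading_zeros; ++i) {
      if (!ReadBit(&bit)) return false;
      info = (info << 1) | bit;
    }
    *value = ((1u << leading_zeros) - 1) + info;
    return true;
  }

  // se(v): code numbers 0, 1, 2, 3, 4, ... map to 0, 1, -1, 2, -2, ...
  bool ReadSe(int32_t* value) {
    uint32_t k = 0;
    if (!ReadUe(&k)) return false;
    *value = (k & 1) ? static_cast<int32_t>((k + 1) / 2)
                     : -static_cast<int32_t>(k / 2);
    return true;
  }

 private:
  std::vector<uint8_t> data_;
  size_t pos_ = 0;
};

// H.264 slice QP: SliceQPY = 26 + pic_init_qp_minus26 + slice_qp_delta.
int SliceQp(int pic_init_qp_minus26, int slice_qp_delta) {
  return 26 + pic_init_qp_minus26 + slice_qp_delta;
}
```

For instance, the byte 0xA6 (bits 1 010 011 0) decodes as ue values 0, 1, 2, or as se values 0, 1, -1. With pic_init_qp_minus26 = 0 and slice_qp_delta = -2, the slice QP is 24.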
