
Constructing the data

The code was run in Jupyter. First, construct a set of inputs x and their corresponding outputs y:

import numpy as np
import torch
import torch.nn as nn

x_values = [i for i in range(11)]
x_train = np.array(x_values, dtype=np.float32)
x_train = x_train.reshape(-1, 1)

y_values = [9*i + 9 for i in x_values]
y_train = np.array(y_values, dtype=np.float32)
y_train = y_train.reshape(-1, 1)

print(x_values)
print(y_values)

Output:

[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

[9, 18, 27, 36, 45, 54, 63, 72, 81, 90, 99]
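The `reshape(-1, 1)` calls matter because `nn.Linear` expects a 2-D input of shape (samples, features). A minimal sketch of the shapes involved, using only NumPy (the name `x_flat` is introduced here for illustration and does not appear in the original code):

```python
import numpy as np

x_values = list(range(11))
x_flat = np.array(x_values, dtype=np.float32)   # shape (11,): a flat vector
x_train = x_flat.reshape(-1, 1)                 # shape (11, 1): 11 samples, 1 feature

# Same targets as the list comprehension y = 9*x + 9, computed on the array
y_train = (9 * x_train + 9).astype(np.float32)

print(x_flat.shape, x_train.shape, y_train.shape)
```

The `-1` tells NumPy to infer that dimension from the array length, so the same line works for any number of samples.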

CPU

Linear regression is really just a fully connected layer with no activation function. First, define a class for it, LinearRegressionModel:

class LinearRegressionModel(nn.Module):
    # Constructor
    def __init__(self, input_dim, output_dim):
        super(LinearRegressionModel, self).__init__()
        self.linear = nn.Linear(input_dim, output_dim)

    # Override the forward method inherited from nn.Module
    def forward(self, x):
        out = self.linear(x)
        return out
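To illustrate the claim that linear regression is a fully connected layer without an activation, the following sketch checks that `nn.Linear` computes `x @ W^T + b` and nothing more. The seed and the three sample inputs are arbitrary choices made for this example:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # arbitrary seed, only to make the run repeatable

linear = nn.Linear(1, 1)                   # one input feature, one output
x = torch.tensor([[0.0], [1.0], [2.0]])    # three samples

# The layer computes y = x @ W^T + b; no activation is applied afterwards.
manual = x @ linear.weight.T + linear.bias
layer_out = linear(x)

print(torch.allclose(manual, layer_out))
```

With one input and one output, `W` and `b` each hold a single number, which is exactly the slope and intercept of a straight line.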

Initialize the model:

input_dim = 1
output_dim = 1
model = LinearRegressionModel(input_dim, output_dim)
model

Output (bias=True, i.e. the layer includes a bias term):

LinearRegressionModel(
  (linear): Linear(in_features=1, out_features=1, bias=True)
)

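Specify the hyperparameters, the optimizer, and the loss function:

epochs = 1000
learning_rate = 0.01

# Optimizer: stochastic gradient descent
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# Loss function: mean squared error
criterion = nn.MSELoss()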
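As a rough illustration of what these two objects do, the sketch below reproduces `nn.MSELoss()` by hand and performs a single plain SGD step on a toy parameter. The tensors and the toy objective `w ** 2` are made up for this example and are not part of the original post:

```python
import torch
import torch.nn as nn

outputs = torch.tensor([[1.0], [2.0], [4.0]])
labels = torch.tensor([[1.0], [3.0], [2.0]])

# nn.MSELoss() with its default reduction='mean' averages the squared errors.
criterion = nn.MSELoss()
loss = criterion(outputs, labels)
manual_loss = ((outputs - labels) ** 2).mean()

# Plain SGD (no momentum, no weight decay) updates each parameter as
# p <- p - lr * grad.
w = torch.tensor([2.0], requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.01)
(w ** 2).sum().backward()   # gradient is 2 * w = 4.0
optimizer.step()            # w becomes 2.0 - 0.01 * 4.0 = 1.96

print(loss.item(), manual_loss.item(), w.item())
```

Because the whole data set of 11 points is fed in on every iteration in this post, each step is effectively full-batch gradient descent, even though the optimizer class is named SGD.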

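Train the model:

A note on outputs = model(inputs):

This line is equivalent to outputs = model.__call__(inputs). The __call__ method lets an instance of a class be called like a function, and nn.Module.__call__ in turn invokes the forward method (running any registered hooks around it). For more detail, see:

"In Python, why can you write outputs = model(inputs) directly? What language feature does this use?"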

for epoch in range(epochs):
    epoch += 1  # count epochs from 1 so the log prints 50, 100, ..., 1000

    # Convert the NumPy arrays to tensors
    inputs = torch.from_numpy(x_train)
    labels = torch.from_numpy(y_train)

    # Zero the gradients on every iteration
    optimizer.zero_grad()

    # Forward pass
    outputs = model(inputs)

    # Compute the loss
    loss = criterion(outputs, labels)

    # Backward pass
    loss.backward()

    # Update the weights
    optimizer.step()

    if epoch % 50 == 0:
        print('epoch {}, loss {}'.format(epoch, loss.item()))

Output:

epoch 50, loss 7.823598861694336

epoch 100, loss 4.462289333343506

epoch 150, loss 2.545119524002075

epoch 200, loss 1.451640009880066

epoch 250, loss 0.8279607892036438

epoch 300, loss 0.47223785519599915

epoch 350, loss 0.269347220659256

epoch 400, loss 0.1536264419555664

epoch 450, loss 0.08762145042419434

epoch 500, loss 0.04997712001204491

epoch 550, loss 0.028505485504865646

epoch 600, loss 0.016258591786026955

epoch 650, loss 0.009272975847125053

epoch 700, loss 0.005288919433951378

epoch 750, loss 0.003016551025211811

epoch 800, loss 0.0017205380136147141

epoch 850, loss 0.0009813279611989856

epoch 900, loss 0.0005597395356744528

epoch 950, loss 0.0003192391886841506

epoch 1000, loss 0.00018208501569461077
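The `outputs = model(inputs)` call in the training loop relies on Python's `__call__` protocol. The sketch below imitates that dispatch in plain Python. `TinyModule` and `Line` are hypothetical classes written for this illustration; the real `nn.Module.__call__` does more work, such as running hooks:

```python
class TinyModule:
    """Hypothetical stand-in for nn.Module, for illustration only."""

    def __call__(self, *args, **kwargs):
        # nn.Module.__call__ also runs registered hooks around this call;
        # that part is omitted here.
        return self.forward(*args, **kwargs)

    def forward(self, x):
        raise NotImplementedError


class Line(TinyModule):
    def __init__(self, w, b):
        self.w = w
        self.b = b

    def forward(self, x):
        return self.w * x + self.b


model = Line(9, 9)
print(model(2))  # same as model.__call__(2), which runs model.forward(2)
```

This is also why calling `model(inputs)` is generally preferred over `model.forward(inputs)` in PyTorch: only the former goes through `__call__` and therefore triggers any hooks.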

Test the model's predictions:

predicted = model(torch.from_numpy(x_train)).detach().numpy()
predicted

Output:

array([[ 8.974897],
       [17.978512],
       [26.982128],
       [35.98574 ],
       [44.989357],
       [53.992973],
       [62.99659 ],
       [72.000206],
       [81.00382 ],
       [90.00744 ],
       [99.011055]], dtype=float32)
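The predictions are close to y = 9x + 9 because the learned weight and bias are close to 9. A self-contained sketch that retrains a bare `nn.Linear` (standing in for `LinearRegressionModel`) and inspects both, assuming the same learning rate and epoch count as above; the seed is arbitrary and exact values will vary with initialization:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # arbitrary seed so the run is repeatable

x = torch.arange(11, dtype=torch.float32).reshape(-1, 1)
y = 9 * x + 9

model = nn.Linear(1, 1)  # stands in for LinearRegressionModel(1, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
criterion = nn.MSELoss()

for _ in range(1000):
    optimizer.zero_grad()
    criterion(model(x), y).backward()
    optimizer.step()

# For inference, no_grad() skips building the autograd graph,
# so the result can be converted to NumPy directly.
with torch.no_grad():
    predicted = model(x).numpy()

print(model.weight.item(), model.bias.item())
```

Wrapping inference in `torch.no_grad()` is one common alternative to calling `.detach()` on the output.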

Saving and loading the model:

torch.save(model.state_dict(), 'model.pkl')
model.load_state_dict(torch.load('model.pkl'))

Output:

<All keys matched successfully>
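A `state_dict` holds only the parameter tensors, not the class definition, so loading into a fresh process means rebuilding the model first. A minimal sketch of that round trip, using a temporary directory and a bare `nn.Linear` in place of `LinearRegressionModel` (both are choices made for this example):

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(1, 1)  # stands in for LinearRegressionModel(1, 1)

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "model.pkl")
    torch.save(model.state_dict(), path)

    # Rebuild the architecture, then copy the saved parameters into it.
    restored = nn.Linear(1, 1)
    # map_location="cpu" lets a file saved on a GPU machine load without one.
    result = restored.load_state_dict(torch.load(path, map_location="cpu"))

print(result)
print(torch.equal(model.weight, restored.weight))
```

The `map_location` argument is optional here, but it becomes relevant once the model has been trained on a GPU as in the next section.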

GPU

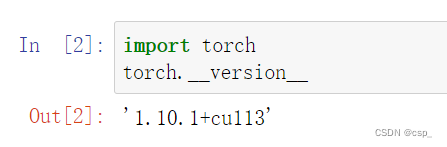

首先确认自己有GPU环境:

之后对变量device初始化:device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

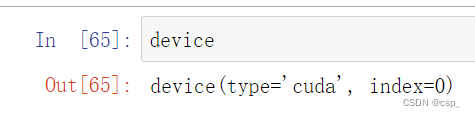

看一下device的值:

之后把数据(inputs,labels)和模型model传入到cuda(device)里面就可以了
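One detail worth sketching is that `.to(device)` behaves differently for modules and tensors: a module is moved in place, while a tensor call returns a result that must be assigned. The example below falls back to the CPU when no GPU is present; the names `x_cpu` and `x_dev` are introduced here for illustration:

```python
import torch
import torch.nn as nn

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model = nn.Linear(1, 1)
model.to(device)            # nn.Module.to moves the parameters in place

x_cpu = torch.zeros(3, 1)
x_dev = x_cpu.to(device)    # Tensor.to returns a tensor; x_cpu itself stays on the CPU

print(device)
print(next(model.parameters()).device, x_dev.device, x_cpu.device)
```

If the model and its inputs end up on different devices, the forward pass raises a runtime error, so both moves are needed.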

Code:

import torch
import torch.nn as nn
import numpy as np

class LinearRegressionModel(nn.Module):
    def __init__(self, input_dim, output_dim):
        super(LinearRegressionModel, self).__init__()
        self.linear = nn.Linear(input_dim, output_dim)

    def forward(self, x):
        out = self.linear(x)
        return out

input_dim = 1
output_dim = 1

model = LinearRegressionModel(input_dim, output_dim)

# Use the first GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model.to(device)

criterion = nn.MSELoss()
learning_rate = 0.01
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

epochs = 1000
for epoch in range(epochs):
    epoch += 1
    # x_train and y_train are the arrays built in the data section above
    inputs = torch.from_numpy(x_train).to(device)
    labels = torch.from_numpy(y_train).to(device)

    optimizer.zero_grad()
    outputs = model(inputs)
    loss = criterion(outputs, labels)
    loss.backward()
    optimizer.step()

    if epoch % 50 == 0:
        print('epoch {}, loss {}'.format(epoch, loss.item()))

Output:

epoch 50, loss 8.292747497558594

epoch 100, loss 4.729876518249512

epoch 150, loss 2.6977455615997314

epoch 200, loss 1.5386940240859985

epoch 250, loss 0.8776141405105591

epoch 300, loss 0.5005567669868469

epoch 350, loss 0.28549838066101074

epoch 400, loss 0.16283737123012543

epoch 450, loss 0.09287738054990768

epoch 500, loss 0.052974116057157516

epoch 550, loss 0.0302141010761261

epoch 600, loss 0.017233209684491158

epoch 650, loss 0.009829038754105568

epoch 700, loss 0.0056058201007544994

epoch 750, loss 0.0031971274875104427

epoch 800, loss 0.0018234187737107277

epoch 850, loss 0.0010400479659438133

epoch 900, loss 0.0005932282656431198

epoch 950, loss 0.0003383254224900156

epoch 1000, loss 0.000192900508409366
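Once the model lives on the GPU, its outputs do too, and a CUDA tensor cannot be converted to NumPy directly. A minimal sketch of getting predictions back, assuming an untrained `nn.Linear` as a stand-in for the trained model:

```python
import torch
import torch.nn as nn

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model = nn.Linear(1, 1).to(device)  # stands in for the trained model

x = torch.arange(11, dtype=torch.float32).reshape(-1, 1).to(device)

with torch.no_grad():
    # .cpu() copies the result to host memory; it changes nothing
    # if the tensor is already on the CPU.
    predicted = model(x).cpu().numpy()

print(type(predicted), predicted.shape)
```

Writing `.cpu()` unconditionally keeps the same code working on machines with and without a GPU.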

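Definitions of GPU and CPU:

Adapted from the article "A detailed look at what a GPU is and how it differs from a CPU"

- CPU (Central Processing Unit): a very-large-scale integrated circuit that serves as the computing core and control unit of a computer. Its main job is to interpret machine instructions and process the data used by software.

- GPU (Graphics Processing Unit): a microprocessor dedicated to image-related computation on personal computers, workstations, game consoles, and some mobile devices (such as tablets and smartphones).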

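Differences between GPU and CPU:

1. Cache

- A CPU has an extensive cache hierarchy. Mainstream CPU chips typically have three levels of cache (L1, L2, L3), and a few designs add a fourth. These caches consume a large share of the transistors and draw a lot of power at run time.

- A GPU's cache is much simpler. Mainstream GPU chips typically have no more than two levels of cache, and the transistor area and power this frees up can go to ALUs instead, so a GPU delivers more arithmetic throughput than a CPU on parallel workloads.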

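2. Response model

- A CPU is built for real-time response and needs each individual task to run fast, which is why it uses many levels of cache to keep single-task latency low.

- A GPU queues up all its tasks and then processes them in batches, so its demands on cache are comparatively low.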

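3. Floating-point computation

- Besides floating-point and integer arithmetic, a CPU carries the load of many other instruction sets, such as multimedia decoding and hardware decoding, so it is a generalist. A CPU emphasizes single-thread performance and must keep the instruction stream from stalling, which costs more transistors and power in the control logic and leaves less of the power budget for floating-point computation.

- A GPU does essentially nothing but floating-point computation. Its design is simpler and can therefore be made faster. A GPU emphasizes throughput: a single instruction drives many computations, relatively little power goes to control logic, and the power saved can be spent on floating-point computation.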

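4. Application areas

- A CPU excels at applications such as operating systems, which must respond quickly to real-time events and are optimized for latency. Its transistors and power budget therefore go to control features such as branch prediction, out-of-order execution, and low-latency caches.

- A GPU suits workloads that are highly predictable and made up of large numbers of similar operations, where high latency is acceptable in exchange for high throughput.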
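The throughput point can be probed with a rough timing sketch: one large matrix multiply is exactly the kind of "many similar operations" workload described above. The helper `time_matmul`, the matrix size, and the repeat count are all hypothetical choices for this example, and the numbers it prints depend entirely on the hardware:

```python
import time

import torch


def time_matmul(device, size=512, repeats=10):
    """Average seconds per (size x size) matrix multiply on `device`."""
    a = torch.rand(size, size, device=device)
    b = torch.rand(size, size, device=device)
    a @ b  # warm-up run, excluded from the timing
    if device.type == "cuda":
        torch.cuda.synchronize()  # CUDA kernels launch asynchronously
    start = time.perf_counter()
    for _ in range(repeats):
        a @ b
    if device.type == "cuda":
        torch.cuda.synchronize()  # wait for the GPU to finish before stopping the clock
    return (time.perf_counter() - start) / repeats


devices = [torch.device("cpu")]
if torch.cuda.is_available():
    devices.append(torch.device("cuda:0"))

timings = {str(d): time_matmul(d) for d in devices}
print(timings)
```

For a problem as small as the 11-sample regression in this post, transfer and kernel-launch overhead will likely dominate, so a GPU speedup should not be expected there; the gap usually only shows up on much larger tensors.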