Preface

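This article analyses FFmpeg's software decoding flow, using try_decode_frame inside find_stream_info as the example. The functions involved are listed below; the decoding functions all live in libavcodec.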

The basic call sequence is:

const AVCodec *codec = find_probe_decoder(s, st, st->codecpar->codec_id);

/*

* Force thread count to 1 since the H.264 decoder will not extract

* SPS and PPS to extradata during multi-threaded decoding.

*

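* This setting will be covered in a separate article. It relates to the threading mode FFmpeg decodes with (frame threading or slice threading) and is well worth a closer look.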

*/

av_dict_set(options ? options : &thread_opt, "threads", "1", 0);

avcodec_open2(avctx, codec, options ? options : &thread_opt);

AVPacket pkt = *avpkt; // the packet obtained from av_read_frame

avcodec_send_packet(avctx, &pkt);

AVFrame *frame = av_frame_alloc();

avcodec_receive_frame(avctx, frame); // decode and fetch one frame
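The sequence above issues a single send followed by a single receive. A decoder may hold pictures back before it outputs them, so callers usually receive in a loop after every send and finish with an empty (flush) packet. The following is a self-contained toy model of that calling pattern, not FFmpeg code: ToyDecoder, toy_send, toy_receive, toy_decode_all and the TOY_EAGAIN/TOY_EOF values are hypothetical names invented for this sketch, and the one-picture delay is an assumption chosen to keep it short.

```c
#include <assert.h>
#include <stddef.h>

/* Toy stand-ins for AVERROR(EAGAIN) and AVERROR_EOF; the values are arbitrary. */
enum { TOY_EAGAIN = -1, TOY_EOF = -2 };

/* A toy decoder that holds back one picture, loosely imitating a codec
 * with a delay. Packets and frames are plain integer ids. */
typedef struct ToyDecoder {
    int held;       /* id of the picture being held back, valid if has_held */
    int has_held;
    int ready;      /* id of a picture ready for output, valid if has_ready */
    int has_ready;
    int draining;   /* set once the caller has sent the flush (NULL) packet */
} ToyDecoder;

/* pkt == NULL means "no more input, start draining". */
static int toy_send(ToyDecoder *d, const int *pkt)
{
    if (d->draining)
        return TOY_EOF;
    if (d->has_ready)
        return TOY_EAGAIN;          /* caller must receive first */
    if (!pkt) {
        d->draining = 1;
        return 0;
    }
    if (d->has_held) {              /* new input pushes the held picture out */
        d->ready = d->held;
        d->has_ready = 1;
    }
    d->held = *pkt;
    d->has_held = 1;
    return 0;
}

static int toy_receive(ToyDecoder *d, int *frame)
{
    if (d->has_ready) {
        *frame = d->ready;
        d->has_ready = 0;
        return 0;
    }
    if (d->draining) {
        if (d->has_held) {          /* flush the delayed picture */
            *frame = d->held;
            d->has_held = 0;
            return 0;
        }
        return TOY_EOF;
    }
    return TOY_EAGAIN;              /* needs more input */
}

/* Feed n packets, then drain. Stores decoded ids in out, returns their count. */
static size_t toy_decode_all(const int *pkts, size_t n, int *out, size_t out_cap)
{
    ToyDecoder d = {0};
    size_t produced = 0;
    int frame;

    for (size_t i = 0; i <= n; i++) {
        const int *pkt = i < n ? &pkts[i] : NULL;   /* final NULL = drain */
        int ret = toy_send(&d, pkt);
        assert(ret == 0);
        (void)ret;
        while (toy_receive(&d, &frame) == 0) {
            assert(produced < out_cap);
            out[produced++] = frame;
        }
    }
    return produced;
}
```

In this model the first send yields no output; the held-back picture only appears after the next packet or after the flush packet, which is why the receive call sits in a loop.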

Software decoder structures

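The decoder is located in avformat_find_stream_info#find_probe_decoder; see the separate article analysing avformat_find_stream_info for details. The decoder for each codec_id is made available through avcodec_register_all. Note that from FFmpeg 4.0 onward avcodec_register_all is deprecated and the codec list is built in statically.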

Video

h264dec.c#ff_h264_decoder in libavcodec

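In find_stream_info#read_frame_internal, codec_id is assigned when the first video packet (SPS/PPS) and the first audio packet (sequence header) are read. Printing codecContext->codec_id gives 27, and avcodec_get_name(codecContext->codec_id) returns the codec name h264, so the decoder in use is h264dec.c in libavcodec. H.264 is the most widely used encoding today, so this article uses it to walk through FFmpeg's decoding flow.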

// h264dec.c

AVCodec ff_h264_decoder = {

.name = "h264",

.long_name = NULL_IF_CONFIG_SMALL("H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10"),

.type = AVMEDIA_TYPE_VIDEO,

.id = AV_CODEC_ID_H264,

.priv_data_size = sizeof(H264Context),

.init = h264_decode_init,

.close = h264_decode_end,

.decode = h264_decode_frame,

.capabilities = /*AV_CODEC_CAP_DRAW_HORIZ_BAND |*/ AV_CODEC_CAP_DR1 |

AV_CODEC_CAP_DELAY | AV_CODEC_CAP_SLICE_THREADS |

AV_CODEC_CAP_FRAME_THREADS,

.hw_configs = (const AVCodecHWConfigInternal*[]) {

NULL

},

.caps_internal = FF_CODEC_CAP_INIT_THREADSAFE | FF_CODEC_CAP_EXPORTS_CROPPING |

FF_CODEC_CAP_ALLOCATE_PROGRESS | FF_CODEC_CAP_INIT_CLEANUP,

.flush = h264_decode_flush,

.update_thread_context = ONLY_IF_THREADS_ENABLED(ff_h264_update_thread_context),

.profiles = NULL_IF_CONFIG_SMALL(ff_h264_profiles),

.priv_class = &h264_class,

};

Audio

aacdec.c#ff_aac_decoder in libavcodec

AVCodec ff_aac_decoder = {

.name = "aac",

.long_name = NULL_IF_CONFIG_SMALL("AAC (Advanced Audio Coding)"),

.type = AVMEDIA_TYPE_AUDIO,

.id = AV_CODEC_ID_AAC,

.priv_data_size = sizeof(AACContext),

.init = aac_decode_init,

.close = aac_decode_close,

.decode = aac_decode_frame,

.sample_fmts = (const enum AVSampleFormat[]) {

AV_SAMPLE_FMT_FLTP, AV_SAMPLE_FMT_NONE

},

.capabilities = AV_CODEC_CAP_CHANNEL_CONF | AV_CODEC_CAP_DR1,

.caps_internal = FF_CODEC_CAP_INIT_THREADSAFE,

.channel_layouts = aac_channel_layout,

.flush = flush,

.priv_class = &aac_decoder_class,

.profiles = NULL_IF_CONFIG_SMALL(ff_aac_profiles),

};
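Both structures above are essentially tables of function pointers: generic code looks an entry up by id and then calls its init and decode callbacks without knowing which codec is behind them. A minimal sketch of that dispatch idea follows. It is a standalone illustration, not FFmpeg code: ToyCodec, toy_find_decoder, toy_open_and_decode and the TOY_ID_* values are hypothetical, and the real AVCodec has many more fields.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, heavily reduced stand-in for AVCodec: a name, an id and
 * two callbacks. */
typedef struct ToyCodec {
    const char *name;
    int id;
    int (*init)(void *priv);
    int (*decode)(void *priv, const unsigned char *buf, int size);
} ToyCodec;

enum { TOY_ID_H264 = 1, TOY_ID_AAC = 2 };   /* illustrative ids, not FFmpeg's */

static int toy_h264_init(void *priv) { *(int *)priv = 0; return 0; }
static int toy_h264_decode(void *priv, const unsigned char *buf, int size)
{
    (void)buf;
    *(int *)priv += 1;      /* count decoded packets in the private context */
    return size;            /* pretend the whole packet was consumed */
}

static int toy_aac_init(void *priv) { *(int *)priv = 0; return 0; }
static int toy_aac_decode(void *priv, const unsigned char *buf, int size)
{
    (void)priv;
    (void)buf;
    return size;
}

static const ToyCodec toy_codecs[] = {
    { "h264", TOY_ID_H264, toy_h264_init, toy_h264_decode },
    { "aac",  TOY_ID_AAC,  toy_aac_init,  toy_aac_decode  },
};

/* Linear search by id, similar in spirit to looking a decoder up by codec_id. */
static const ToyCodec *toy_find_decoder(int id)
{
    for (size_t i = 0; i < sizeof(toy_codecs) / sizeof(toy_codecs[0]); i++)
        if (toy_codecs[i].id == id)
            return &toy_codecs[i];
    return NULL;
}

/* Generic caller: knows nothing about H.264, only the table entry. */
static int toy_open_and_decode(const ToyCodec *c, void *priv,
                               const unsigned char *buf, int size)
{
    int ret;
    assert(c && c->init && c->decode);
    ret = c->init(priv);
    if (ret < 0)
        return ret;
    return c->decode(priv, buf, size);
}
```

The same shape explains why the listings that follow call avctx->codec->init and avctx->codec->decode rather than any H.264 function by name.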

avcodec_open2

// utils.c

int attribute_align_arg avcodec_open2(AVCodecContext *avctx, const AVCodec *codec, AVDictionary **options) {

// a large amount of field setup and validation, omitted

// ...

if (HAVE_THREADS

&& !(avctx->internal->frame_thread_encoder && (avctx->active_thread_type&FF_THREAD_FRAME))) {

ret = ff_thread_init(avctx); // sets up frame threading or slice threading; to be covered in a later article

if (ret < 0) {

goto free_and_end;

}

}

// initialise the codec

if (avctx->codec->init && (!(avctx->active_thread_type&FF_THREAD_FRAME)

|| avci->frame_thread_encoder)) {

ret = avctx->codec->init(avctx);

if (ret < 0) {

goto free_and_end;

}

codec_init_ok = 1;

}

// ...

}

avctx->codec->init

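This lands in h264_decode_init in h264dec.c, which initialises the decoder and calls ff_h264_decode_extradata to parse the SPS and PPS into an H264ParamSets for later use. In practice, however, important parameters such as width, profile and level are only assigned when the first real video packet is decoded; this part needs further study.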

static av_cold int h264_decode_init(AVCodecContext *avctx)

{

av_log(avctx, AV_LOG_DEBUG, "h264_decode_init");

H264Context *h = avctx->priv_data;

int ret;

ret = h264_init_context(avctx, h);

if (ret < 0)

return ret;

ret = ff_thread_once(&h264_vlc_init, ff_h264_decode_init_vlc);

if (ret != 0) {

av_log(avctx, AV_LOG_ERROR, "pthread_once has failed.");

return AVERROR_UNKNOWN;

}

if (avctx->ticks_per_frame == 1) {

if(h->avctx->time_base.den < INT_MAX/2) {

h->avctx->time_base.den *= 2;

} else

h->avctx->time_base.num /= 2;

}

avctx->ticks_per_frame = 2;

if (avctx->extradata_size > 0 && avctx->extradata) {

ret = ff_h264_decode_extradata(avctx->extradata, avctx->extradata_size,

&h->ps, &h->is_avc, &h->nal_length_size,

avctx->err_recognition, avctx);

av_log(avctx, AV_LOG_DEBUG, "ff_h264_decode_extradata: %d", ret);

if (ret < 0) {

h264_decode_end(avctx);

return ret;

}

}

if (h->ps.sps && h->ps.sps->bitstream_restriction_flag &&

h->avctx->has_b_frames < h->ps.sps->num_reorder_frames) {

h->avctx->has_b_frames = h->ps.sps->num_reorder_frames;

}

avctx->internal->allocate_progress = 1;

ff_h264_flush_change(h);

if (h->enable_er < 0 && (avctx->active_thread_type & FF_THREAD_SLICE))

h->enable_er = 0;

if (h->enable_er && (avctx->active_thread_type & FF_THREAD_SLICE)) {

av_log(avctx, AV_LOG_WARNING,

"Error resilience with slice threads is enabled. It is unsafe and unsupported and may crash. "

"Use it at your own risk\n");

}

return 0;

}
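ff_h264_decode_extradata receives the raw extradata bytes. When they are in the MP4-style avcC layout (the AVCDecoderConfigurationRecord of ISO/IEC 14496-15), a short fixed header precedes the SPS and PPS. The sketch below reads only that header, to show where values such as nal_length_size come from. It is a standalone illustration under that layout assumption, not FFmpeg's implementation; ToyAvcC and toy_parse_avcc are hypothetical names, and parsing the SPS itself (where width and height live) is left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical result type for this sketch. */
typedef struct ToyAvcC {
    int profile_idc;
    int level_idc;
    int nal_length_size;   /* bytes in front of every NAL unit, normally 1, 2 or 4 */
    int sps_count;
    int first_sps_size;    /* 0 when there is no SPS */
} ToyAvcC;

/* Returns 0 on success, -1 if the buffer does not look like avcC data. */
static int toy_parse_avcc(const uint8_t *buf, size_t size, ToyAvcC *out)
{
    if (!buf || !out || size < 7)
        return -1;
    if (buf[0] != 1)                              /* configurationVersion */
        return -1;

    out->profile_idc     = buf[1];
    out->level_idc       = buf[3];
    out->nal_length_size = (buf[4] & 0x03) + 1;   /* lengthSizeMinusOne */
    out->sps_count       = buf[5] & 0x1F;         /* numOfSequenceParameterSets */
    out->first_sps_size  = 0;
    assert(out->nal_length_size >= 1 && out->nal_length_size <= 4);

    if (out->sps_count > 0) {
        size_t sps_size;
        if (size < 8)
            return -1;
        sps_size = ((size_t)buf[6] << 8) | buf[7];   /* 16-bit big-endian length */
        if (sps_size > size - 8)
            return -1;                               /* SPS would overrun the buffer */
        out->first_sps_size = (int)sps_size;
    }
    return 0;
}
```

An Annex B buffer starts with a 00 00 00 01 or 00 00 01 start code, so its first byte is 0 and this sketch rejects it.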

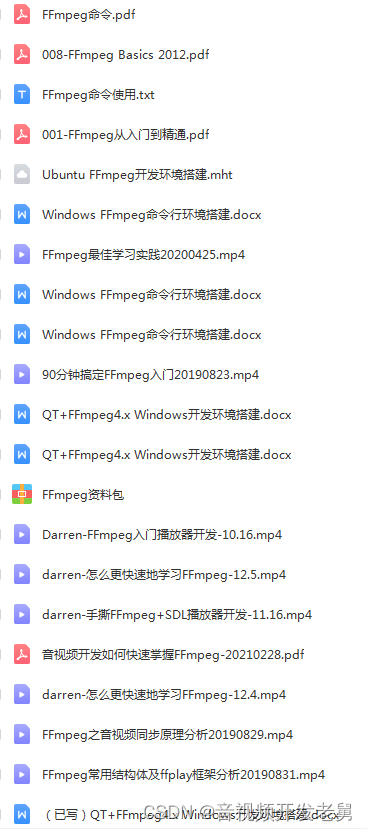


avcodec_send_packet

Feeds one packet to the decoder. If no decoded frame is buffered at that point, the decoding flow is triggered immediately.

// decode.c

int attribute_align_arg avcodec_send_packet(AVCodecContext *avctx, const AVPacket *avpkt)

{

AVCodecInternal *avci = avctx->internal;

int ret;

if (!avcodec_is_open(avctx) || !av_codec_is_decoder(avctx->codec))

return AVERROR(EINVAL);

if (avctx->internal->draining)

return AVERROR_EOF;

if (avpkt && !avpkt->size && avpkt->data)

return AVERROR(EINVAL);

ret = bsfs_init(avctx);

if (ret < 0)

return ret;

av_packet_unref(avci->buffer_pkt);

if (avpkt && (avpkt->data || avpkt->side_data_elems)) {

ret = av_packet_ref(avci->buffer_pkt, avpkt);

if (ret < 0)

return ret;

}

ret = av_bsf_send_packet(avci->bsf, avci->buffer_pkt);

if (ret < 0) {

av_packet_unref(avci->buffer_pkt);

return ret;

}

// if no decoded frame is buffered yet, decode ahead of time and store the result in buffer_frame

if (!avci->buffer_frame->buf[0]) {

ret = decode_receive_frame_internal(avctx, avci->buffer_frame);

if (ret < 0 && ret != AVERROR(EAGAIN) && ret != AVERROR_EOF)

return ret;

}

return 0;

}

// utils.c

int avcodec_is_open(AVCodecContext *s) {

return !!s->internal;

}

// utils.c

int av_codec_is_decoder(const AVCodec *codec) {

return codec && (codec->decode || codec->receive_frame);

}

avcodec_receive_frame

If a decoded frame is already available it is returned directly; otherwise the decoding flow is triggered.

// decode.c

int attribute_align_arg avcodec_receive_frame(AVCodecContext *avctx, AVFrame *frame)

{

AVCodecInternal *avci = avctx->internal;

int ret, changed;

av_frame_unref(frame);

if (!avcodec_is_open(avctx) || !av_codec_is_decoder(avctx->codec))

return AVERROR(EINVAL);

if (avci->buffer_frame->buf[0]) {

// a decoded frame is already buffered, hand it over directly

av_frame_move_ref(frame, avci->buffer_frame);

} else {

// nothing buffered, so decode now

ret = decode_receive_frame_internal(avctx, frame);

if (ret < 0)

return ret;

}

if (avctx->codec_type == AVMEDIA_TYPE_VIDEO) {

ret = apply_cropping(avctx, frame);

if (ret < 0) {

av_frame_unref(frame);

return ret;

}

}

avctx->frame_number++;

// ...

return 0;

}
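Taken together, the two listings share one output slot, avci->buffer_frame: avcodec_send_packet decodes eagerly into it when it is empty, and avcodec_receive_frame empties it before decoding anything new. A toy model of that interplay is sketched below. It is not FFmpeg code: ToyCtx and the toy_* functions are hypothetical, packets and frames are integers, and the stand-in decoder produces exactly one frame per packet with no delay, which is a simplification.

```c
#include <assert.h>

enum { TOY_EAGAIN = -11 };   /* stand-in for AVERROR(EAGAIN); value is arbitrary */

typedef struct ToyCtx {
    int pending_pkt;    /* packet waiting in the input queue */
    int has_pkt;
    int buffer_frame;   /* the single output slot, like avci->buffer_frame */
    int has_buffer;
    int decode_calls;   /* how many times the codec callback ran */
} ToyCtx;

/* Stand-in for decode_receive_frame_internal: turns the pending packet
 * into a frame, or reports EAGAIN when there is no input. */
static int toy_decode_internal(ToyCtx *c, int *frame)
{
    assert(c && frame);
    if (!c->has_pkt)
        return TOY_EAGAIN;
    *frame = c->pending_pkt * 100;   /* arbitrary "decoding" */
    c->has_pkt = 0;
    c->decode_calls++;
    return 0;
}

static int toy_send_packet(ToyCtx *c, int pkt)
{
    if (c->has_pkt)
        return TOY_EAGAIN;           /* input queue full, receive first */
    c->pending_pkt = pkt;
    c->has_pkt = 1;
    if (!c->has_buffer) {            /* slot empty: decode right away */
        int ret = toy_decode_internal(c, &c->buffer_frame);
        if (ret == 0)
            c->has_buffer = 1;
        else if (ret != TOY_EAGAIN)
            return ret;
    }
    return 0;
}

static int toy_receive_frame(ToyCtx *c, int *frame)
{
    if (c->has_buffer) {             /* frame already decoded during send */
        *frame = c->buffer_frame;
        c->has_buffer = 0;
        return 0;
    }
    return toy_decode_internal(c, frame);
}
```

In this model a second send succeeds without decoding because the slot is still occupied; the decode for that packet happens later, inside the receive call.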

decode_receive_frame_internal

static int decode_receive_frame_internal(AVCodecContext *avctx, AVFrame *frame)

{

AVCodecInternal *avci = avctx->internal;

int ret;

if (avctx->codec->receive_frame) {

ret = avctx->codec->receive_frame(avctx, frame);

if (ret != AVERROR(EAGAIN))

av_packet_unref(avci->last_pkt_props);

} else {

// H.264 takes this branch

ret = decode_simple_receive_frame(avctx, frame);

}

if (ret == AVERROR_EOF)

avci->draining_done = 1;

if (!ret) {

/* the only case where decode data is not set should be decoders

* that do not call ff_get_buffer() */

if (frame->private_ref) {

FrameDecodeData *fdd = (FrameDecodeData*)frame->private_ref->data;

if (fdd->post_process) {

ret = fdd->post_process(avctx, frame);

if (ret < 0) {

av_frame_unref(frame);

return ret;

}

}

}

}

/* free the per-frame decode data */

av_buffer_unref(&frame->private_ref);

return ret;

}

decode_simple_receive_frame

static int decode_simple_receive_frame(AVCodecContext *avctx, AVFrame *frame)

{

int ret;

while (!frame->buf[0]) {

// fills frame->buf[0]

ret = decode_simple_internal(avctx, frame);

if (ret < 0)

return ret;

}

return 0;

}

decode_simple_internal

Fetches a packet, then has h264dec.c decode it to produce a frame.

static inline int decode_simple_internal(AVCodecContext *avctx, AVFrame *frame)

{

AVCodecInternal *avci = avctx->internal;

DecodeSimpleContext *ds = &avci->ds;

AVPacket *pkt = ds->in_pkt;

// copy to ensure we do not change pkt

int got_frame, actual_got_frame;

int ret;

// the packet holds no data

if (!pkt->data && !avci->draining) {

av_packet_unref(pkt);

// fetch the next packet

ret = ff_decode_get_packet(avctx, pkt);

if (ret < 0 && ret != AVERROR_EOF)

return ret;

}

// Some codecs (at least wma lossless) will crash when feeding drain packets after EOF was signaled.

if (avci->draining_done)

return AVERROR_EOF;

if (!pkt->data &&

!(avctx->codec->capabilities & AV_CODEC_CAP_DELAY ||

avctx->active_thread_type & FF_THREAD_FRAME))

return AVERROR_EOF;

got_frame = 0;

// H.264 decoding happens here

ret = avctx->codec->decode(avctx, frame, &got_frame, pkt);

if (!(avctx->codec->caps_internal & FF_CODEC_CAP_SETS_PKT_DTS))

frame->pkt_dts = pkt->dts;

if (avctx->codec->type == AVMEDIA_TYPE_VIDEO) {

if(!avctx->has_b_frames)

frame->pkt_pos = pkt->pos;

}

actual_got_frame = got_frame;

if (avctx->codec->type == AVMEDIA_TYPE_VIDEO) {

if (frame->flags & AV_FRAME_FLAG_DISCARD)

got_frame = 0;

if (got_frame)

frame->best_effort_timestamp = guess_correct_pts(avctx,

frame->pts,

frame->pkt_dts);

} else if (avctx->codec->type == AVMEDIA_TYPE_AUDIO) {

// ...

}

if (!got_frame)

av_frame_unref(frame);

if (ret >= 0 && avctx->codec->type == AVMEDIA_TYPE_VIDEO && !(avctx->flags & AV_CODEC_FLAG_TRUNCATED))

ret = pkt->size;

if (avci->draining && !actual_got_frame) {

if (ret < 0) {

int nb_errors_max = 20 + (HAVE_THREADS && avctx->active_thread_type & FF_THREAD_FRAME ? avctx->thread_count : 1);

if (avci->nb_draining_errors++ >= nb_errors_max) {

avci->draining_done = 1;

ret = AVERROR_BUG;

}

} else {

avci->draining_done = 1;

}

}

avci->compat_decode_consumed += ret;

if (ret >= pkt->size || ret < 0) {

av_packet_unref(pkt);

av_packet_unref(avci->last_pkt_props);

} else {

int consumed = ret;

pkt->data += consumed;

pkt->size -= consumed;

avci->last_pkt_props->size -= consumed; // See extract_packet_props() comment.

pkt->pts = AV_NOPTS_VALUE;

pkt->dts = AV_NOPTS_VALUE;

avci->last_pkt_props->pts = AV_NOPTS_VALUE;

avci->last_pkt_props->dts = AV_NOPTS_VALUE;

}

return ret < 0 ? ret : 0;

}
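The tail of decode_simple_internal handles a decoder that consumed only part of the packet: the data pointer is advanced, the size reduced, and the remainder is offered again on the next call. Per the listing, video without AV_CODEC_FLAG_TRUNCATED has ret forced to pkt->size, so that branch matters mainly for other cases such as audio. The bookkeeping can be sketched as follows; ToyPacket, toy_apply_consumed, toy_decode_chunk and toy_calls_needed are hypothetical names, and the 4-byte chunk size is an arbitrary assumption.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct ToyPacket {
    const uint8_t *data;
    int size;
} ToyPacket;

/* Apply the result of one decode call. consumed is what the codec callback
 * returned. Returns 1 if the packet is finished, 0 if bytes remain. */
static int toy_apply_consumed(ToyPacket *pkt, int consumed)
{
    if (consumed < 0 || consumed >= pkt->size) {
        /* error, or everything was used: drop the packet */
        pkt->data = NULL;
        pkt->size = 0;
        return 1;
    }
    pkt->data += consumed;   /* keep the unread tail for the next call */
    pkt->size -= consumed;
    return 0;
}

/* Hypothetical codec callback that eats at most 4 bytes per call. */
static int toy_decode_chunk(const ToyPacket *pkt)
{
    return pkt->size < 4 ? pkt->size : 4;
}

/* Number of decode calls needed to use up a packet of the given size. */
static int toy_calls_needed(const uint8_t *buf, int size)
{
    ToyPacket pkt = { buf, size };
    int calls = 0;
    assert(size > 0);
    do {
        calls++;
    } while (!toy_apply_consumed(&pkt, toy_decode_chunk(&pkt)));
    return calls;
}
```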

h264_decode_frame

// h264dec.c

static int h264_decode_frame(AVCodecContext *avctx, void *data,

int *got_frame, AVPacket *avpkt)

{

const uint8_t *buf = avpkt->data;

int buf_size = avpkt->size;

H264Context *h = avctx->priv_data;

AVFrame *pict = data;

int buf_index;

int ret;

h->flags = avctx->flags;

h->setup_finished = 0;

h->nb_slice_ctx_queued = 0;

ff_h264_unref_picture(h, &h->last_pic_for_ec);

/* end of stream, output what is still in the buffers */

if (buf_size == 0)

return send_next_delayed_frame(h, pict, got_frame, 0);

if (av_packet_get_side_data(avpkt, AV_PKT_DATA_NEW_EXTRADATA, NULL)) {

int side_size;

uint8_t *side = av_packet_get_side_data(avpkt, AV_PKT_DATA_NEW_EXTRADATA, &side_size);

ff_h264_decode_extradata(side, side_size,

&h->ps, &h->is_avc, &h->nal_length_size,

avctx->err_recognition, avctx);

}

if (h->is_avc && buf_size >= 9 && buf[0]==1 && buf[2]==0 && (buf[4]&0xFC)==0xFC) {

if (is_avcc_extradata(buf, buf_size))

return ff_h264_decode_extradata(buf, buf_size,

&h->ps, &h->is_avc, &h->nal_length_size,

avctx->err_recognition, avctx);

}

buf_index = decode_nal_units(h, buf, buf_size);

if (buf_index < 0)

return AVERROR_INVALIDDATA;

if (!h->cur_pic_ptr && h->nal_unit_type == H264_NAL_END_SEQUENCE) {

av_assert0(buf_index <= buf_size);

return send_next_delayed_frame(h, pict, got_frame, buf_index);

}

if (!(avctx->flags2 & AV_CODEC_FLAG2_CHUNKS) && (!h->cur_pic_ptr || !h->has_slice)) {

if (avctx->skip_frame >= AVDISCARD_NONREF ||

buf_size >= 4 && !memcmp("Q264", buf, 4))

return buf_size;

av_log(avctx, AV_LOG_ERROR, "no frame!\n");

return AVERROR_INVALIDDATA;

}

if (!(avctx->flags2 & AV_CODEC_FLAG2_CHUNKS) ||

(h->mb_y >= h->mb_height && h->mb_height)) {

if ((ret = ff_h264_field_end(h, &h->slice_ctx[0], 0)) < 0)

return ret;

/* Wait for second field. */

if (h->next_output_pic) {

ret = finalize_frame(h, pict, h->next_output_pic, got_frame);

if (ret < 0)

return ret;

}

}

av_assert0(pict->buf[0] || !*got_frame);

ff_h264_unref_picture(h, &h->last_pic_for_ec);

return get_consumed_bytes(buf_index, buf_size);

}

In addition:

h264_decode_frame

–> h264dec.c#decode_nal_units

–> h264_slice.c#ff_h264_queue_decode_slice

–> h264_slice.c#h264_field_start

–> h264_slice.c#h264_init_ps, which assigns the decoding parameters; the video parameters reported by find_stream_info are set here.