MongoDB is a distributed NoSQL (Not Only SQL) database based on a document model, where data objects are stored as separate documents inside a collection. Each MongoDB instance can have multiple databases, and each database can have multiple collections. A document is the basic unit of data in MongoDB and is roughly equivalent to a row in an RDBMS.

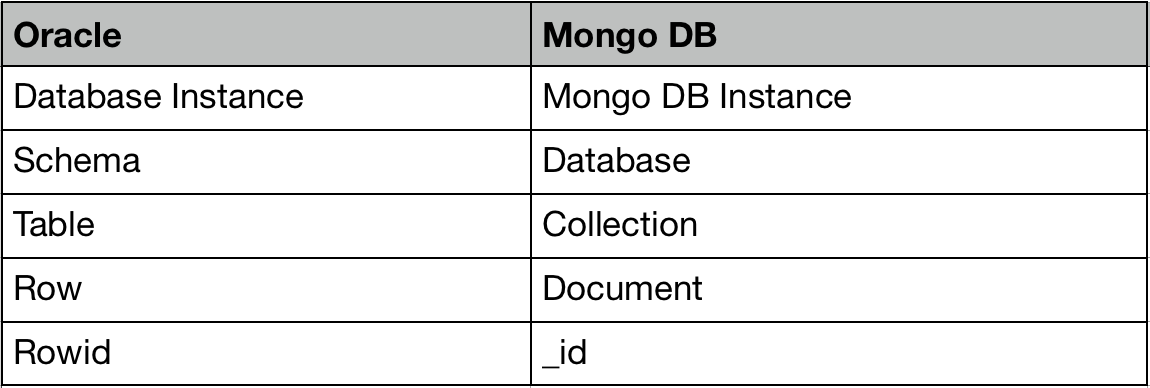

Let’s see a comparison of Oracle (RDBMS) and MongoDB (NoSQL).

The data in each document is represented as JSON, and MongoDB stores data records as BSON documents. BSON is a binary representation of JSON documents. The maximum BSON document size is 16 megabytes.

To get an idea of what a JSON document looks like, check out the following figure.

A Mongo document printed as JSON
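As a rough stand-in for the figure, here is a minimal sketch that parses a JSON string into an `org.bson.Document` and prints it back as JSON. The field names and values are invented for illustration and are not taken from the H-1B data set. It assumes the MongoDB Java driver jar is on the classpath.

```scala
import org.bson.Document

object JsonDocumentExample {
  // Hypothetical record: every field name and value here is made up for illustration
  val json: String =
    """{
      |  "name": "Jane Doe",
      |  "title": "Data Engineer",
      |  "skills": ["Scala", "Spark"],
      |  "address": { "city": "Austin", "state": "TX" }
      |}""".stripMargin

  // Document.parse turns the JSON text into an org.bson.Document
  val doc: Document = Document.parse(json)

  def main(args: Array[String]): Unit = {
    println(doc.getString("name")) // read a top-level string field
    println(doc.toJson())          // print the whole document back as JSON
  }
}
```

Note that a document can hold arrays and nested documents, which is the main structural difference from a flat RDBMS row.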

In this post, we will explore the steps required to load data into a MongoDB database using Apache Spark. In this example, I’m using the H-1B Visa Petitions data (3 million records), downloaded from kaggle.com, as my source data. The example code uses Spark 3.0.0-preview, Scala 2.12.10, and MongoDB 4.2.1.

Step 1: Download the dependency jars and add them to the Eclipse classpath.

a) mongo-java-driver-3.11.2.jar

c) mongo-spark-connector_2.12-2.4.1.jar

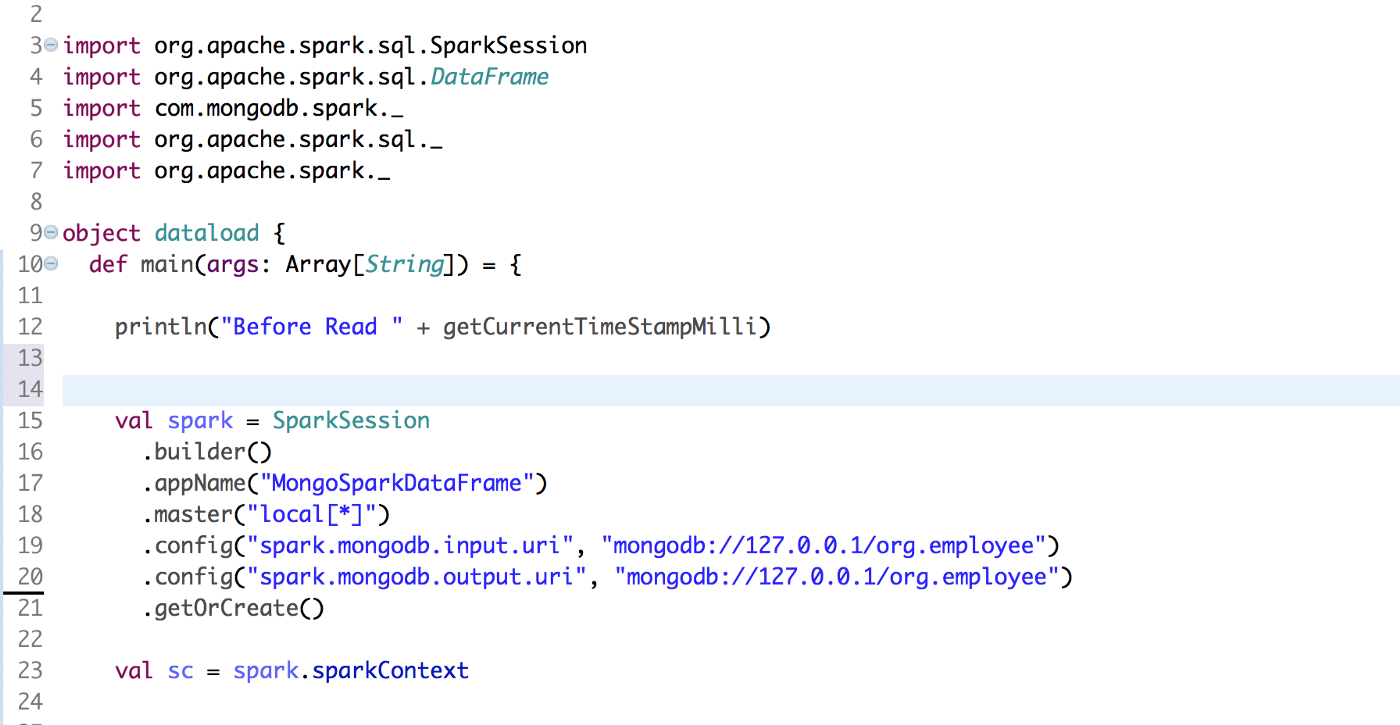
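If you build with sbt instead of adding jars to Eclipse by hand, the same dependencies could be declared roughly as follows. This is only a sketch: the sbt setup is an assumption on my part (the post itself uses manually downloaded jars), and the versions are the ones listed above.

```scala
// build.sbt (sketch): versions come from the post, the sbt setup itself is an assumption
scalaVersion := "2.12.10"

libraryDependencies ++= Seq(
  "org.apache.spark"  %% "spark-sql"             % "3.0.0-preview",
  "org.mongodb.spark" %% "mongo-spark-connector" % "2.4.1",
  "org.mongodb"       %  "mongo-java-driver"     % "3.11.2"
)
```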

Step 2: Let’s create a Scala object named “dataload” and create a Spark session.

We can set all input/output configuration through the uri settings, as seen below. The MongoDB Spark Connector documentation has the full list of configuration options.

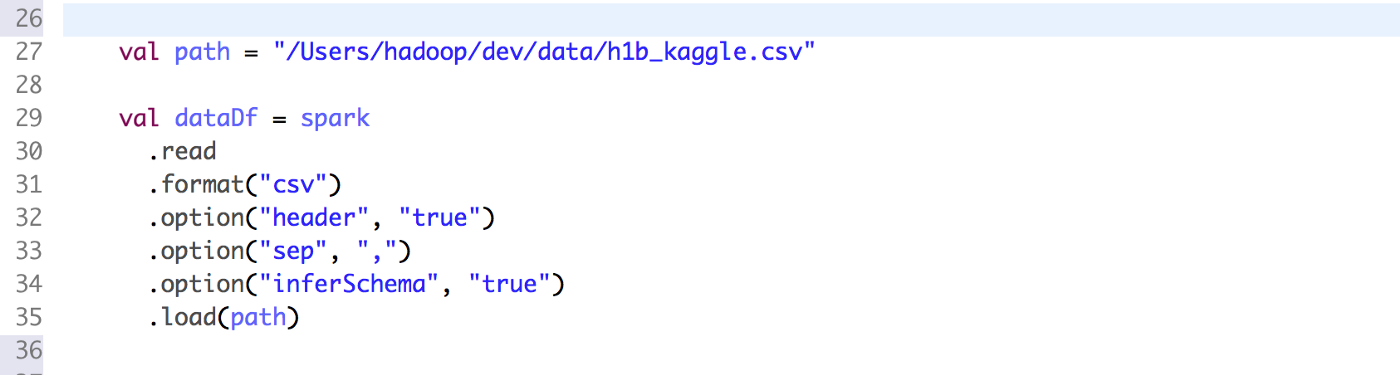
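A minimal sketch of this step might look like the following. The connection string is an assumption: it points at a local MongoDB instance and a database called `test`, neither of which is named in the post. The `createSession` helper is also just an illustrative name.

```scala
import org.apache.spark.sql.SparkSession

object dataload {
  // Assumed connection string: local MongoDB, database "test", collection "employee"
  val mongoUri = "mongodb://127.0.0.1/test.employee"

  // Build a local Spark session with the connector's input and output uri set
  def createSession(): SparkSession =
    SparkSession.builder()
      .master("local[*]")
      .appName("dataload")
      .config("spark.mongodb.input.uri", mongoUri)
      .config("spark.mongodb.output.uri", mongoUri)
      .getOrCreate()

  def main(args: Array[String]): Unit = {
    val spark = createSession()
    // Confirm that the setting reached the session configuration
    println(spark.conf.get("spark.mongodb.output.uri"))
    spark.stop()
  }
}
```

Setting both uris on the session means later reads and writes can rely on these defaults and override only what differs, such as the collection name.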

Step 3: Set the directory path for the input data file and use the Spark DataFrame reader to read the input data (.csv) file as a Spark DataFrame.

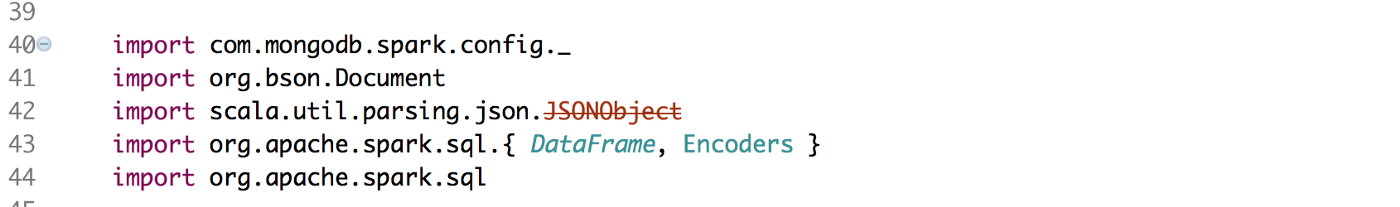
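Reading the CSV file could be sketched like this. The file path is hypothetical, and the `header` and `inferSchema` options are assumptions about how the file should be parsed, since the post does not state them.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object ReadInput {
  // Hypothetical location of the downloaded Kaggle CSV file
  val inputPath = "/data/h1b_kaggle.csv"

  def readCsv(spark: SparkSession, path: String): DataFrame =
    spark.read
      .option("header", "true")      // assume the first line holds the column names
      .option("inferSchema", "true") // let Spark infer column types (costs an extra pass)
      .csv(path)
}
```

With a session in hand, `ReadInput.readCsv(spark, ReadInput.inputPath).printSchema()` is a quick way to check that the columns were picked up as expected.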

Step 4: Next let’s import the necessary dependencies.

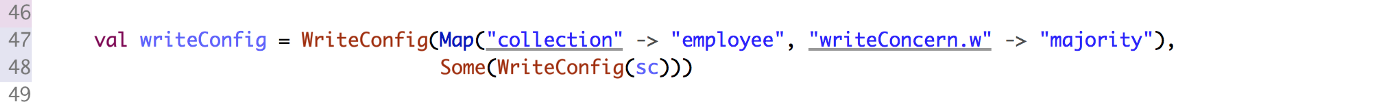
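For the remaining steps, a plausible set of imports, assuming version 2.4.1 of the connector, is shown below. This is my guess at what the later steps need rather than a copy of the original list.

```scala
// Entry point for saving to and loading from MongoDB
import com.mongodb.spark.MongoSpark
// Per-operation read and write settings
import com.mongodb.spark.config.{ReadConfig, WriteConfig}
// BSON document type from the MongoDB Java driver
import org.bson.Document
```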

Step 5: Let’s set the database write configuration. A “WriteConfig” object can specify various write settings, such as the MongoDB collection or the write concern.

In this configuration, we are using the MongoDB collection “employee” and writeConcern.w "majority".

“majority” means that the write operation returns an acknowledgment only after it has propagated to a majority of the data-bearing voting members (primary and secondaries).

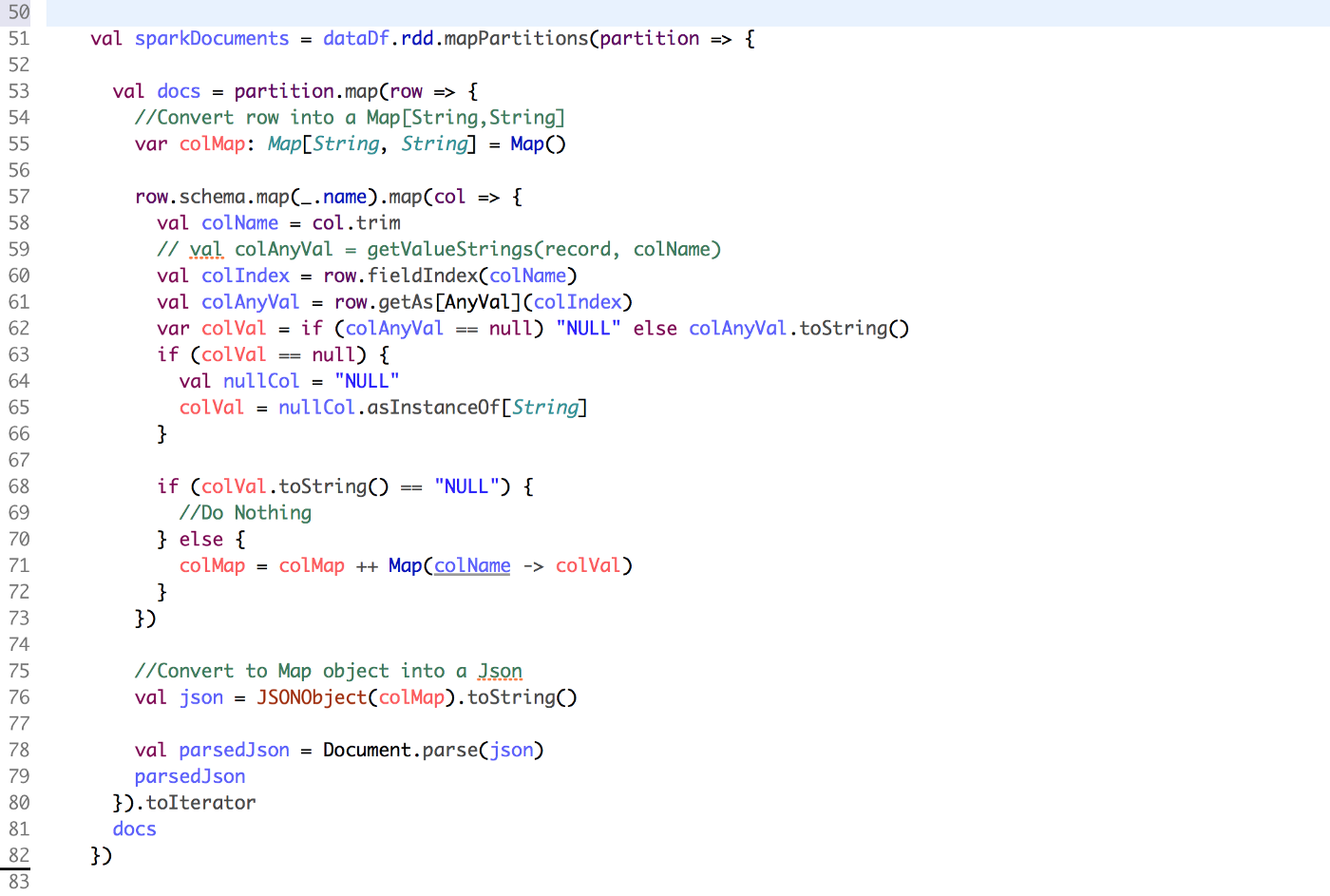
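A sketch of such a configuration, assuming the connector's `WriteConfig(options, default)` factory, could look like this. The object and method names are illustrative only.

```scala
import com.mongodb.spark.config.WriteConfig
import org.apache.spark.sql.SparkSession

object EmployeeWriteConfig {
  def build(spark: SparkSession): WriteConfig =
    WriteConfig(
      Map(
        "collection"     -> "employee", // target collection named in the post
        "writeConcern.w" -> "majority"  // wait for a majority of voting members
      ),
      // Fall back to the session-level settings (for example the output uri)
      Some(WriteConfig(spark.sparkContext))
    )
}
```

Passing the session-level config as the default means only the collection and write concern are overridden; everything else still comes from the uri set on the Spark session.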

Step 6: Iterate through each Spark partition and parse each JSON string into a MongoDB Document.

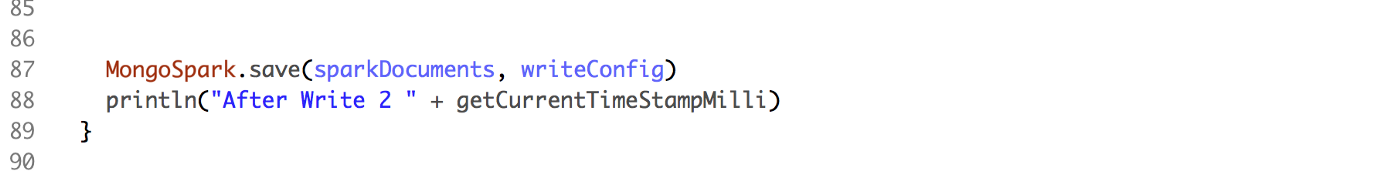
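One way to sketch this step is to serialize each row to a JSON string with `toJSON` and then parse the strings partition by partition. The helper name `toDocuments` is hypothetical.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.DataFrame
import org.bson.Document

object RowsToDocuments {
  def toDocuments(df: DataFrame): RDD[Document] =
    df.toJSON.rdd                      // one JSON string per row
      .mapPartitions { rows =>         // process a whole partition at a time
        rows.map(json => Document.parse(json))
      }
}
```

Because `mapPartitions` hands over an iterator, the rows are parsed lazily and a partition never has to be materialized in memory all at once.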

Step 7: The following code saves data to the “employee” collection with a majority write concern.
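In sketch form, assuming an RDD of Documents from Step 6 and a WriteConfig from Step 5, the save comes down to a single call to `MongoSpark.save`. The wrapper object is only there to keep the example self-contained.

```scala
import com.mongodb.spark.MongoSpark
import com.mongodb.spark.config.WriteConfig
import org.apache.spark.rdd.RDD
import org.bson.Document

object SaveEmployees {
  // Write every document in the RDD to the collection named in writeConfig
  def save(documents: RDD[Document], writeConfig: WriteConfig): Unit =
    MongoSpark.save(documents, writeConfig)
}
```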

The above program took 1 minute, 13 seconds, and 283 milliseconds (1:13.283) to load 3 million records into MongoDB using the Mongo-Spark-Connector. For the same data set, Spark JDBC took 2 minutes, 22 seconds, and 194 milliseconds. In this test, the Mongo-Spark-Connector was roughly twice as fast as Spark JDBC.
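To make the comparison concrete, the sketch below converts both timings to milliseconds and computes the ratio. It also includes a small `timed` helper as one possible way to measure a load; that helper is hypothetical and not necessarily how the numbers above were collected.

```scala
object LoadTimings {
  // Convert minutes, seconds, and milliseconds to total milliseconds
  def toMillis(minutes: Long, seconds: Long, millis: Long): Long =
    (minutes * 60 + seconds) * 1000 + millis

  val connectorMs: Long = toMillis(1, 13, 283) // Mongo-Spark-Connector run
  val jdbcMs: Long      = toMillis(2, 22, 194) // Spark JDBC run
  val speedup: Double   = jdbcMs.toDouble / connectorMs

  // Hypothetical helper: run a block and print how long it took
  def timed[A](label: String)(block: => A): A = {
    val start = System.nanoTime()
    val result = block
    val elapsedMs = (System.nanoTime() - start) / 1000000
    println(s"$label took $elapsedMs ms")
    result
  }

  def main(args: Array[String]): Unit =
    println(f"Connector speedup over JDBC: $speedup%.2fx")
}
```

Keep in mind that a single run on one machine is only a rough indicator; results will vary with hardware, cluster size, and write concern.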

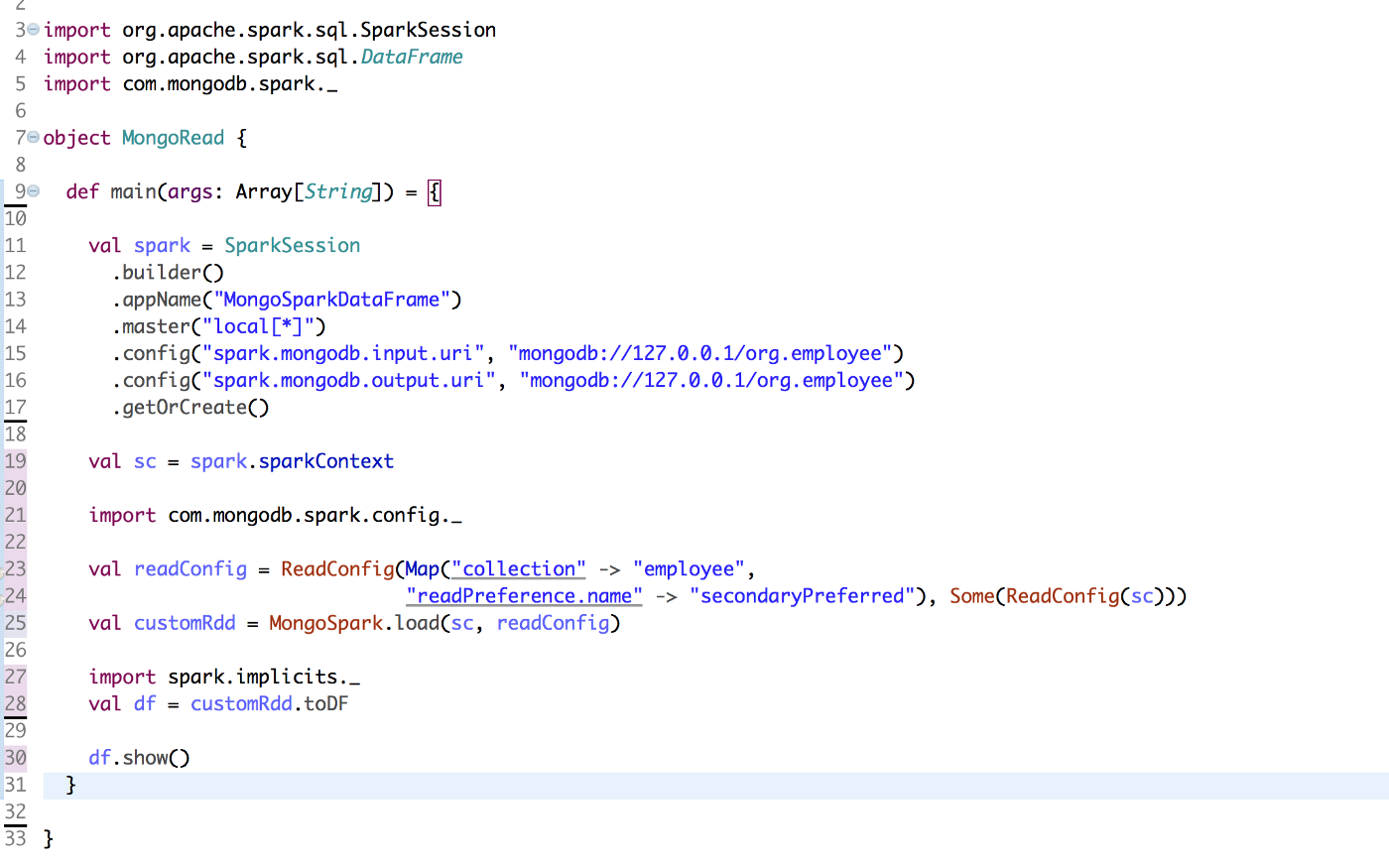

Step 8: Let’s retrieve the “employee” collection data using Spark to confirm that the data is loaded.
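A minimal sketch of the read-back, assuming the session already carries the input uri from Step 2, might look like this. The object and method names are illustrative.

```scala
import com.mongodb.spark.MongoSpark
import com.mongodb.spark.config.ReadConfig
import org.apache.spark.sql.{DataFrame, SparkSession}

object VerifyLoad {
  def loadEmployees(spark: SparkSession): DataFrame = {
    // Override only the collection; other settings come from the session
    val readConfig = ReadConfig(
      Map("collection" -> "employee"),
      Some(ReadConfig(spark.sparkContext))
    )
    MongoSpark.load(spark, readConfig)
  }

  // Print the inferred schema, a few rows, and the total row count
  def verify(spark: SparkSession): Unit = {
    val employees = loadEmployees(spark)
    employees.printSchema()
    employees.show(5)
    println(s"Loaded rows: ${employees.count()}")
  }
}
```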

When we execute the above code, the output will be something similar to below.

Final Thoughts…

MongoDB provides high performance, high availability, and automatic scaling, and it is very easy to implement. With the fast performance of Spark and the real-time analytics capabilities of MongoDB, enterprises can build more robust applications. Moreover, the Mongo-Spark-Connector gives MongoDB an edge over other NoSQL databases when working with Spark.