读取JSON文件,以JSON,CSV,jdbc格式写出

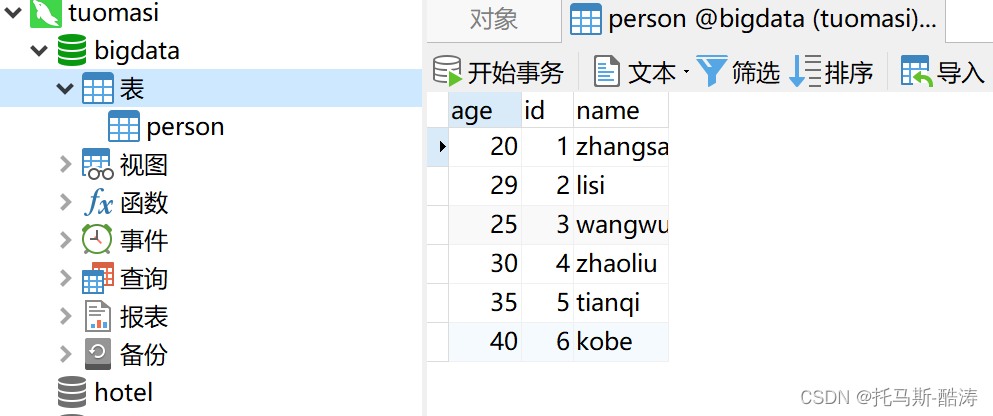

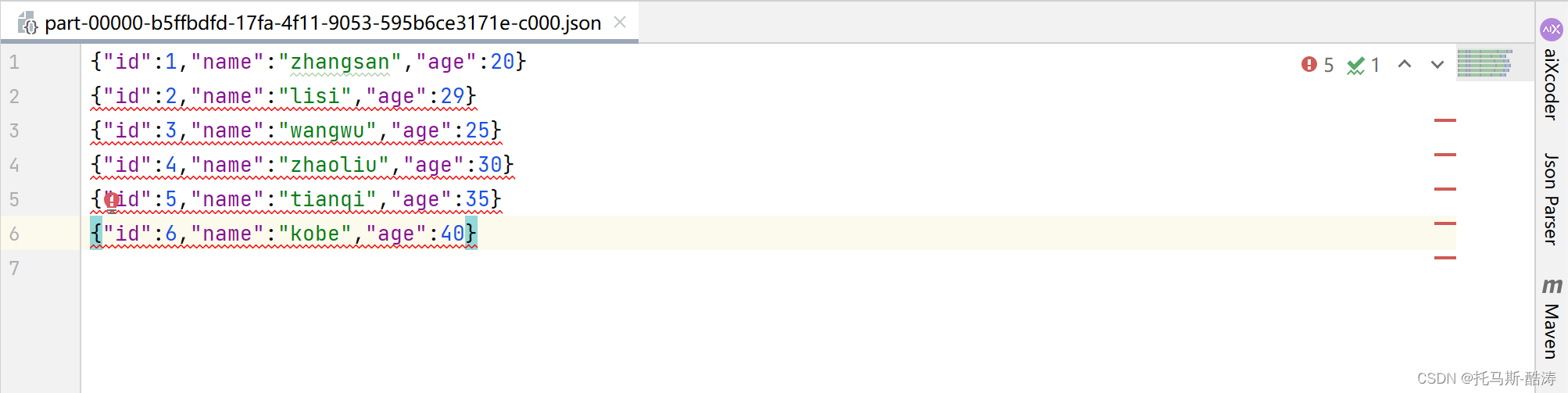

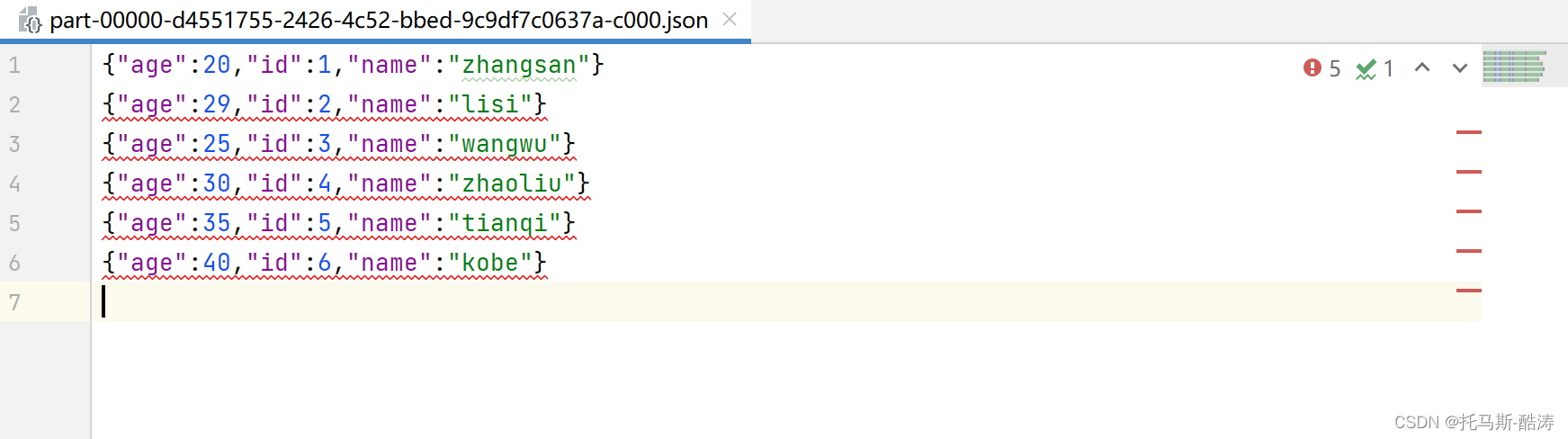

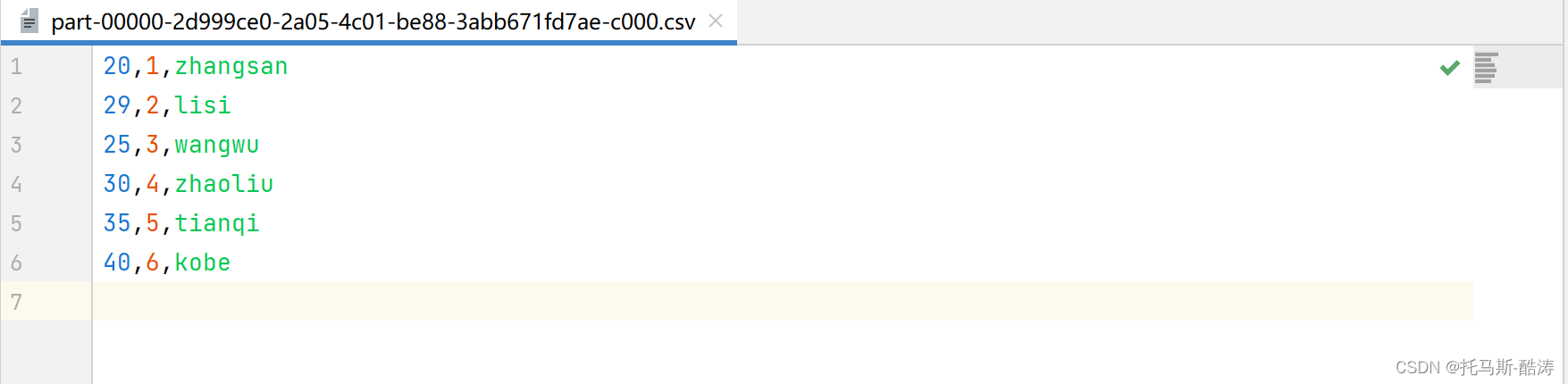

数据展示

代码

package org.example.SQL

import org.apache.log4j.{Level, Logger}

import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

import java.util.Properties

object sql_DataSource { //支持外部数据源

//支持的文件数据格式:text/json/csv/parquet/orc...

def main(args: Array[String]): Unit = {

//不打印日志

Logger.getLogger("org").setLevel(Level.ERROR)

val spark: SparkSession = SparkSession.builder().appName("test2")

.master("local[*]").getOrCreate()

val sc = spark.sparkContext

val df1: DataFrame = spark.read.json("data/input/json")

df1.printSchema()

df1.show()

df1.coalesce(1).write.mode(SaveMode.Overwrite).json("data/output/json")

df1.coalesce(1).write.mode(SaveMode.Overwrite).csv("data/output/csv")

val prop = new Properties()

prop.setProperty("user", "root")

prop.setProperty("password", "123456")

df1.coalesce(1).write.mode(SaveMode.Overwrite).jdbc("jdbc:mysql://localhost:3306/bigdata?characterEncoding=UTF-8", "person", prop)

//如果没有,表自动创建

spark.stop()

}

}

约束

root

|-- age: long (nullable = true)

|-- id: long (nullable = true)

|-- name: string (nullable = true)数据打印

+---+---+--------+

|age| id| name|

+---+---+--------+

| 20| 1|zhangsan|

| 29| 2| lisi|

| 25| 3| wangwu|

| 30| 4| zhaoliu|

| 35| 5| tianqi|

| 40| 6| kobe|

+---+---+--------+结果文件输出

json

csv

jdbc