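I. How It Works

Logstash collects the logs produced by the application server (AppServer) and stores them in the Elasticsearch cluster. Kibana then queries the ES cluster, builds charts from the data, and returns them to the browser.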

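II. ELK

Download: https://www.elastic.co/downloads

Operating system: Ubuntu 16.04

Java: JDK 1.8

Start the services in this order: Elasticsearch > Logstash > Kibana. If Elasticsearch is not running, Kibana cannot start properly; if Logstash is not running, Kibana has no data to show.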
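The start order above can also be checked from code. Below is a minimal Java sketch, assuming the default ports on localhost (Elasticsearch 9200, the Logstash monitoring API 9600, Kibana 5601). The class `ElkStartupCheck` and its methods are hypothetical helpers, not part of any ELK tool, and an open port only means something is listening, not that the service is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: checks the ELK ports in the documented start order.
// Assumes default ports; only tests that a TCP connection is accepted.
class ElkStartupCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isPortOpen(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    /**
     * Walks the services in start order and returns the name of the first one
     * that is not reachable, or null if all of them accept connections.
     */
    static String firstUnavailable(String host, Map<String, Integer> servicesInOrder, int timeoutMs) {
        for (Map.Entry<String, Integer> e : servicesInOrder.entrySet()) {
            if (!isPortOpen(host, e.getValue(), timeoutMs)) {
                return e.getKey();
            }
        }
        return null;
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps insertion order, i.e. the required start order.
        Map<String, Integer> order = new LinkedHashMap<>();
        order.put("Elasticsearch", 9200);
        order.put("Logstash", 9600);
        order.put("Kibana", 5601);
        String missing = firstUnavailable("localhost", order, 1000);
        System.out.println(missing == null ? "All services are up" : missing + " is not reachable yet");
    }
}
```

Because the map is ordered, the first service reported missing is the one to start next.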

1. Elasticsearch

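Note: Elasticsearch refuses to run under the root account; starting it as root fails with an error.

1. Extract

# Extract the archive
tar -zxvf elasticsearch-6.2.4.tar.gz
# Change into the directory
cd elasticsearch-6.2.4/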

2. Key settings (no changes needed when running locally)

gedit config/elasticsearch.yml

network.host: localhost
http.port: 9200

3. Start

./bin/elasticsearch

4. Visit http://localhost:9200. If Elasticsearch started successfully, it responds with a JSON document.
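To run this check from code instead of a browser, here is a small sketch that uses only JDK 8's `HttpURLConnection`. The class `EsHealthProbe` and the method `httpGet` are hypothetical names; the sketch assumes Elasticsearch listens on localhost:9200 and simply returns the JSON body as a string.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: fetches an HTTP endpoint with plain JDK 8 classes.
class EsHealthProbe {

    /** Performs a GET and returns the response body; throws if the status is not 200. */
    static String httpGet(String url, int timeoutMs) {
        HttpURLConnection conn = null;
        try {
            conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setRequestMethod("GET");
            conn.setConnectTimeout(timeoutMs);
            conn.setReadTimeout(timeoutMs);
            int status = conn.getResponseCode();
            if (status != 200) {
                throw new IllegalStateException("Unexpected HTTP status " + status + " from " + url);
            }
            try (InputStream in = conn.getInputStream()) {
                // Read the whole body (InputStream.readAllBytes does not exist in Java 8).
                ByteArrayOutputStream buf = new ByteArrayOutputStream();
                byte[] chunk = new byte[4096];
                int n;
                while ((n = in.read(chunk)) != -1) {
                    buf.write(chunk, 0, n);
                }
                return new String(buf.toByteArray(), StandardCharsets.UTF_8);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            if (conn != null) {
                conn.disconnect();
            }
        }
    }

    public static void main(String[] args) {
        // The Elasticsearch root endpoint returns cluster and version info as JSON.
        System.out.println(httpGet("http://localhost:9200", 2000));
    }
}
```

The same helper could be pointed at the Logstash monitoring API on port 9600.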

2. Logstash

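A note for those of you using log4j: several other log4j integration guides I found online no longer work as written, probably because Logstash has been upgraded since. Following their configuration exactly ends with an error saying the logstash-input-log4j plugin cannot be found. The fix is below (huge thanks to Jin-ge for this):

# Run from the Logstash directory
bin/logstash-plugin install logstash-input-log4j

For details, see http://www.elastic.co/guide/en/logstash/current/plugins-inputs-log4j.html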

1. Extract

# Extract the archive
tar -zxvf logstash-6.2.4.tar.gz
# Change into the directory
cd logstash-6.2.4/

2. Key settings

gedit config/logstash.conf

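input {
  tcp {
    # Run as a server
    mode => "server"
    # Set host and port to suit your setup; 4560 is the usual default and must match
    # the destination of the appender in logback.xml below
    host => "127.0.0.1"
    port => 4560
    # One JSON object per line
    codec => json_lines
  }
}

filter {
  # Filters, add as needed
}

output {
  elasticsearch {
    action => "index"
    # Elasticsearch address; list multiple nodes as an array
    hosts => ["localhost:9200"]
    # Used for filtering in Kibana; the project name works well here
    index => "applog"
  }
}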
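The tcp input with `codec => json_lines` treats each newline-terminated line as one JSON event. To test this pipeline before wiring up Spring Boot, a hand-rolled client like the sketch below could push a single event. `JsonLinesSender` is a hypothetical name, and the target is assumed to be the 127.0.0.1:4560 configured above.

```java
import java.io.IOException;
import java.io.OutputStreamWriter;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Hypothetical test client: sends one JSON event in json_lines framing.
class JsonLinesSender {

    /** Writes one JSON object followed by '\n' to host:port over a fresh TCP connection. */
    static void sendJsonLine(String host, int port, String jsonObject) {
        if (jsonObject.indexOf('\n') >= 0) {
            // A raw newline would split the event into two (invalid) lines.
            throw new IllegalArgumentException("JSON event must be on a single line");
        }
        try (Socket socket = new Socket(host, port);
             Writer out = new OutputStreamWriter(socket.getOutputStream(), StandardCharsets.UTF_8)) {
            out.write(jsonObject);
            out.write('\n'); // the line terminator is what delimits events for json_lines
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Port 4560 matches the tcp input in logstash.conf.
        sendJsonLine("127.0.0.1", 4560, "{\"message\":\"hello from a raw TCP client\",\"level\":\"INFO\"}");
    }
}
```

If the event shows up in the applog index, the Logstash and Elasticsearch half of the setup works independently of the application.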

3. Start

./bin/logstash -f config/logstash.conf

4. Visit http://localhost:9600. If Logstash started successfully, its monitoring API responds with a JSON document.

3. Kibana

1. Extract

# Extract the archive
tar -zxvf kibana-6.2.4-linux-x86_64.tar.gz
# Change into the directory
cd kibana-6.2.4-linux-x86_64/

2. Key settings (no changes needed when running locally)

gedit config/kibana.yml

# Set this to the Elasticsearch address
elasticsearch.url: http://localhost:9200

3. Start

./bin/kibana

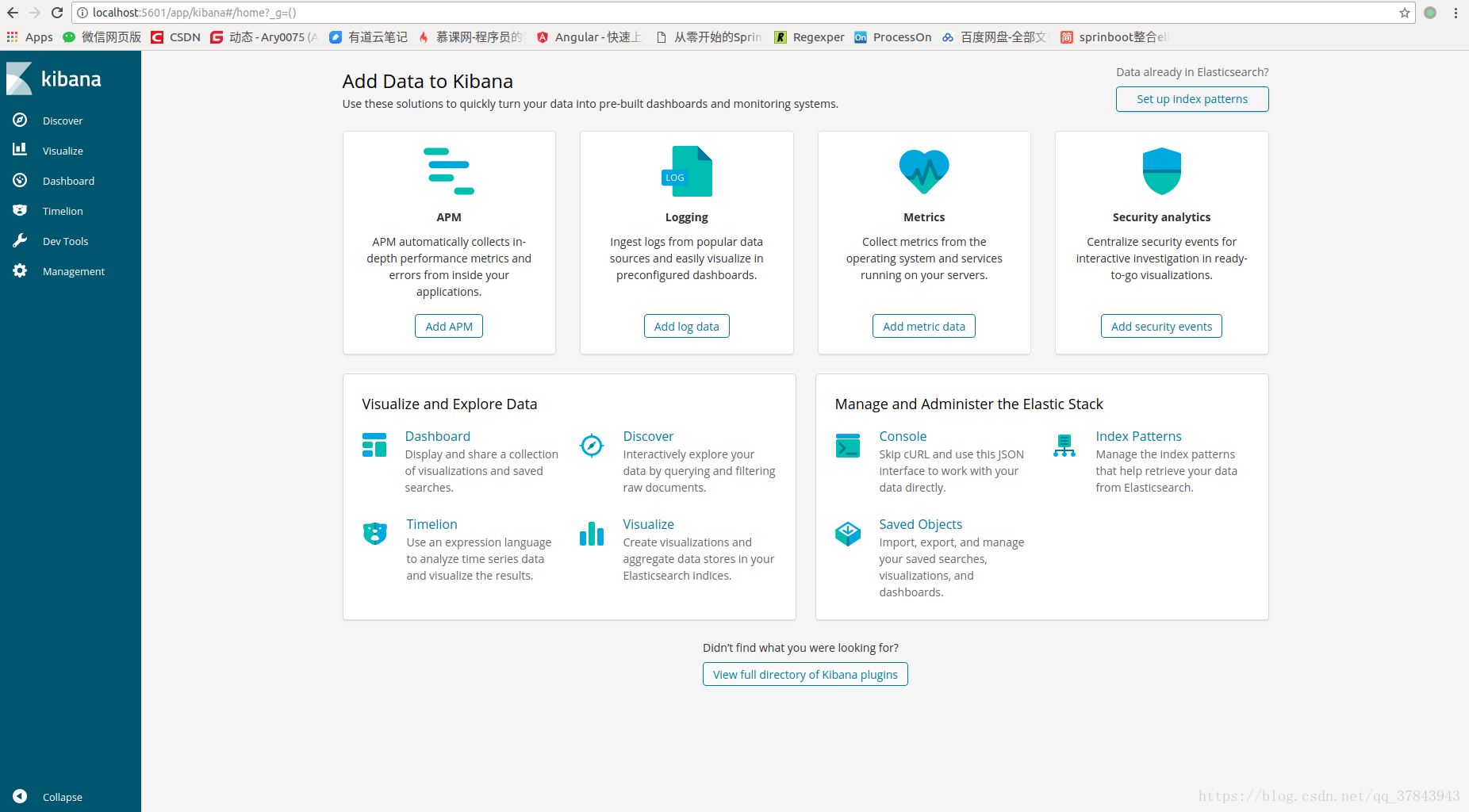

4. Visit http://localhost:5601. If Kibana started successfully, its home page is displayed.

There are no logs yet at this point.

III. Spring Boot Setup

1. pom.xml

<properties>
    <ch.qos.logback.version>1.2.3</ch.qos.logback.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-logging</artifactId>
    </dependency>
    <dependency>
        <groupId>ch.qos.logback</groupId>
        <artifactId>logback-core</artifactId>
        <version>${ch.qos.logback.version}</version>
    </dependency>
    <dependency>
        <groupId>ch.qos.logback</groupId>
        <artifactId>logback-classic</artifactId>
        <version>${ch.qos.logback.version}</version>
    </dependency>
    <dependency>
        <groupId>ch.qos.logback</groupId>
        <artifactId>logback-access</artifactId>
        <version>${ch.qos.logback.version}</version>
    </dependency>
    <dependency>
        <groupId>net.logstash.logback</groupId>
        <artifactId>logstash-logback-encoder</artifactId>
        <version>5.1</version>
    </dependency>
</dependencies>

2. logback.xml

<?xml version="1.0" encoding="UTF-8"?>
<configuration debug="false" scan="true" scanPeriod="1 seconds">
    <include resource="org/springframework/boot/logging/logback/base.xml" />
    <contextName>logback</contextName>
    <appender name="stash" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <!-- Must match the host and port of the tcp input in logstash.conf -->
        <destination>127.0.0.1:4560</destination>
        <!-- An encoder is required; several are available -->
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder" />
    </appender>
    <root level="info">
        <appender-ref ref="stash" />
    </root>
</configuration>
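LogstashEncoder turns each logging event into a single-line JSON object, which is exactly what `json_lines` on the Logstash side expects. The sketch below is a simplified illustration of that shape, not the encoder's real implementation: `JsonEventSketch` is a hypothetical class, the field names (`@timestamp`, `message`, `logger_name`, `thread_name`, `level`) are the ones I believe the encoder uses by default, and the real encoder emits more. It mainly shows why escaping matters: a newline inside a message (for example a stack trace) must become `\n`, or the event would be split across lines.

```java
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.Map;

// Simplified illustration (NOT the real LogstashEncoder) of a one-line JSON log event.
class JsonEventSketch {

    /** Minimal JSON string escaping: quotes, backslashes and control characters. */
    static String escape(String s) {
        StringBuilder sb = new StringBuilder(s.length() + 8);
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c)); // other control chars
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    /** Serializes string fields as one JSON object on a single line. */
    static String toJsonLine(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
        }
        return sb.append('}').toString();
    }

    public static void main(String[] args) {
        Map<String, String> event = new LinkedHashMap<>();
        event.put("@timestamp", Instant.now().toString());
        event.put("message", "[0]");
        event.put("logger_name", "ElkApplicationTests");
        event.put("thread_name", "main");
        event.put("level", "ERROR");
        System.out.println(toJsonLine(event));
    }
}
```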

3. Test class (ElkApplicationTests.java)

import org.junit.Test;
import org.junit.runner.RunWith;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.test.context.junit4.SpringRunner;

@RunWith(SpringRunner.class)
@SpringBootTest
public class ElkApplicationTests {

    private Logger logger = LoggerFactory.getLogger(this.getClass());

    // Verifies that the Spring context starts
    @Test
    public void contextLoads() {
    }

    @Test
    public void test() throws Exception {
        for (int i = 0; i < 5; i++) {
            logger.error("[" + i + "]");
            // LogstashTcpSocketAppender ships events from a background thread;
            // the pause gives it time to send them before the test JVM exits
            Thread.sleep(500);
        }
    }
}

IV. Testing

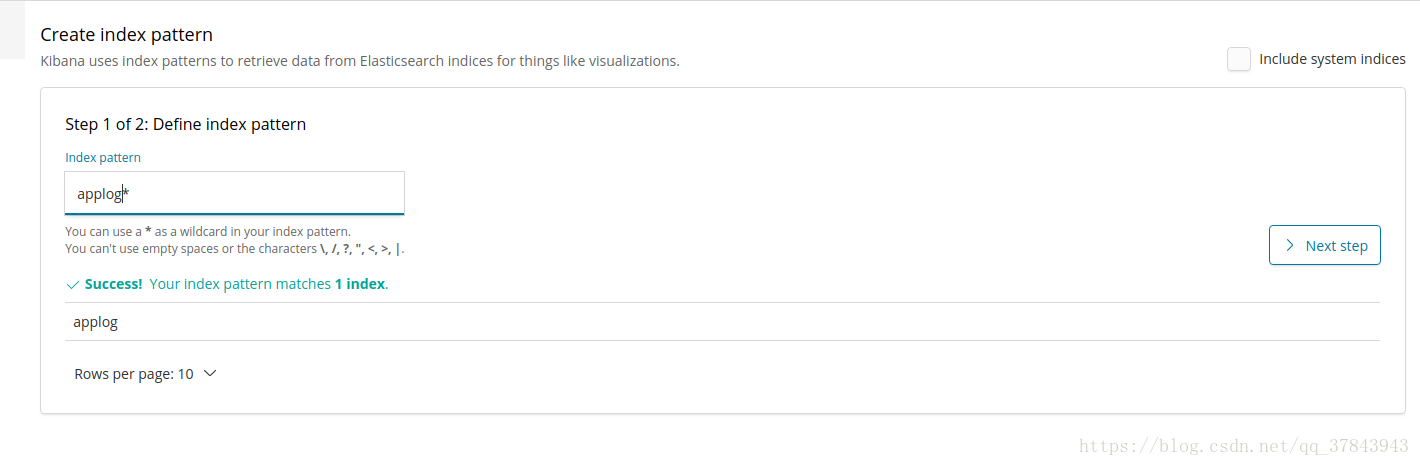

After running the test, go back to Kibana and open Management --> Index Patterns. Enter the index value from the Logstash configuration, here applog.

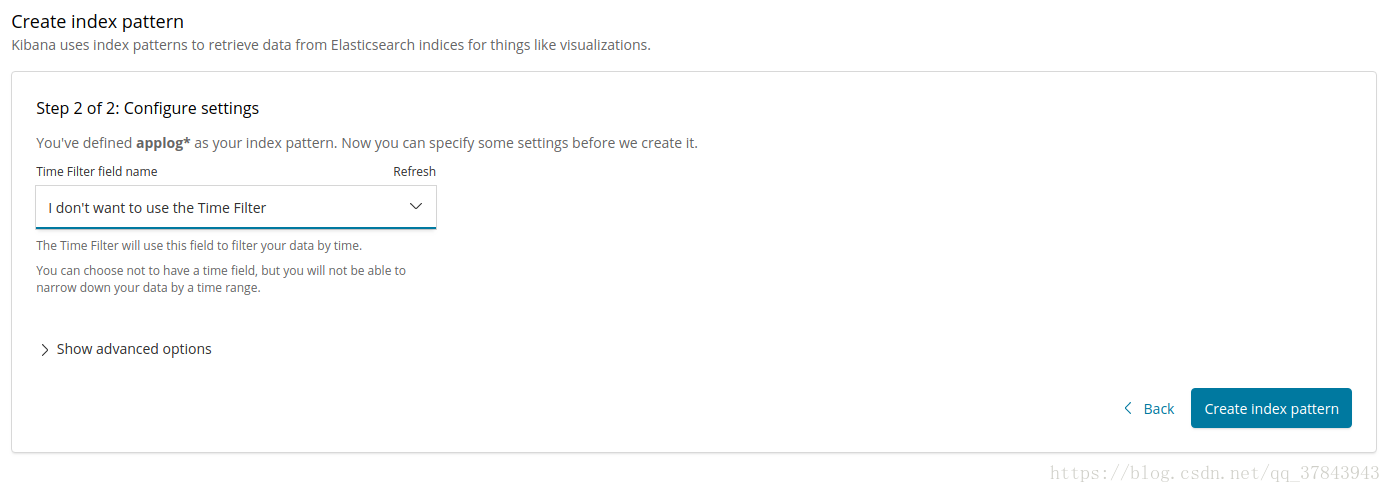

The second step depends on your needs; here I chose "I don't want to use the Time Filter".

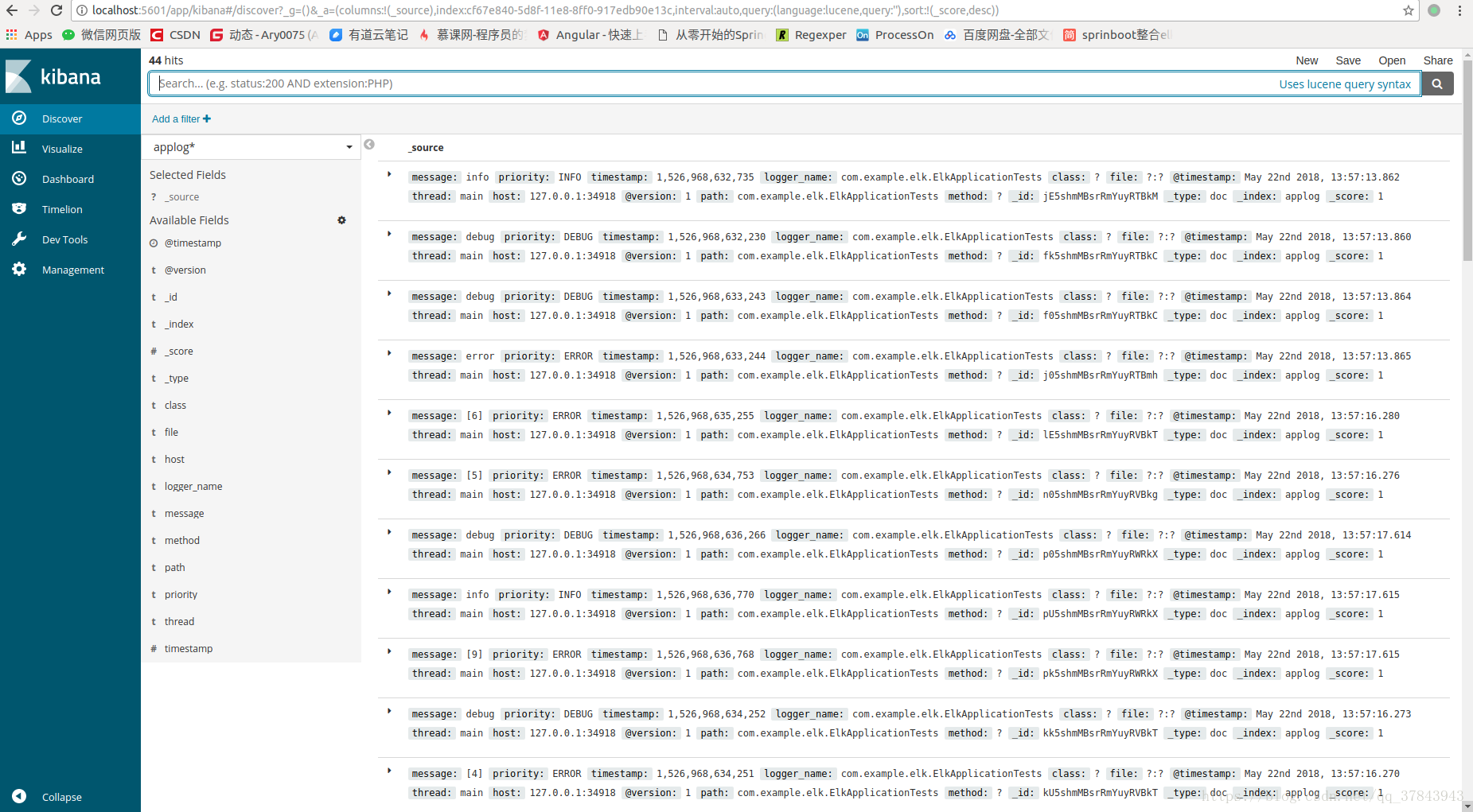

Go back to Discover, and that's it!

V. References

https://my.oschina.net/itblog/blog/547250

http://lib.csdn.net/article/java/64854

VI. Occasional Updates

1. Setting the index of the elasticsearch output dynamically:

Add customFields to logback.xml:

<?xml version="1.0" encoding="UTF-8"?>
<configuration debug="false" scan="true" scanPeriod="1 seconds">
    <include resource="org/springframework/boot/logging/logback/base.xml" />
    <contextName>logback</contextName>
    <appender name="stash" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <!-- Must match the host and port of the tcp input in logstash.conf -->
        <destination>127.0.0.1:4560</destination>
        <!-- An encoder is required; several are available -->
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder">
            <!-- Added to every event as a top-level JSON field -->
            <customFields>{"appname":"ary"}</customFields>
        </encoder>
    </appender>
    <root level="info">
        <appender-ref ref="stash" />
    </root>
</configuration>

Change the index in logstash.conf:

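input {
  tcp {
    # Run as a server
    mode => "server"
    # Set host and port to suit your setup; 4560 is the usual default and must match
    # the destination of the appender in logback.xml
    host => "127.0.0.1"
    port => 4560
    # One JSON object per line
    codec => json_lines
  }
}

filter {
  # Filters, add as needed
}

output {
  elasticsearch {
    action => "index"
    # Elasticsearch address; list multiple nodes as an array
    hosts => ["localhost:9200"]
    # Taken from the appname field that customFields adds to each event
    index => "%{appname}"
  }
}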

Logs are now written to the index ary, the value of appname.
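Logstash fills `%{appname}` in the `index` setting from the event's `appname` field, which customFields adds to every event. The following is a simplified Java illustration of that substitution, not Logstash's actual code; `IndexNameSketch` is a hypothetical name. One assumption worth stating: as far as I know, Logstash leaves the reference unchanged when the field is missing, so events without `appname` would end up in an index literally named `%{appname}`. The sketch also checks for lowercase, because Elasticsearch index names must be lowercase (an appname such as "Ary" would be rejected).

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified illustration of resolving a "%{field}" reference against an event.
class IndexNameSketch {

    private static final Pattern FIELD_REF = Pattern.compile("%\\{([^}]+)\\}");

    /** Replaces each %{name} with the event's field value; unknown fields are left as-is. */
    static String resolve(String template, Map<String, String> event) {
        Matcher m = FIELD_REF.matcher(template);
        StringBuffer out = new StringBuffer(); // Java 8 appendReplacement needs StringBuffer
        while (m.find()) {
            String value = event.get(m.group(1));
            String replacement = value != null ? value : m.group(0);
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    /** Elasticsearch index names must be lowercase. */
    static boolean isLowercase(String indexName) {
        return indexName.equals(indexName.toLowerCase(Locale.ROOT));
    }

    public static void main(String[] args) {
        Map<String, String> event = new HashMap<>();
        event.put("appname", "ary"); // what <customFields> in logback.xml adds
        String index = resolve("%{appname}", event);
        System.out.println(index + " (lowercase: " + isLowercase(index) + ")");
    }
}
```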

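I'm still a beginner, and this article is based on the references above plus my own hands-on experience. If you spot any mistakes, corrections are welcome!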