Preface

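The company plans to use etcd for unified configuration management. Since all of our services run on an Alibaba Cloud managed k8s cluster, we also plan to deploy the etcd cluster on k8s.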

I. Create persistent storage

1. Create the etcd directories

mkdir -p /data/etcd/nas

2. Create the StorageClass

(1) Set up RBAC

cd /data/etcd/nas

cat rbac.yaml

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

# Apply the configuration

kubectl apply -f rbac.yaml
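One way to confirm that the bindings took effect is to ask the API server whether the service account may perform the verbs listed in rbac.yaml. The following is a minimal sketch, assuming the `default` namespace and the `nfs-client-provisioner` account named in the manifest, and assuming your own user is allowed to impersonate service accounts. The helper names `sa_subject` and `check_provisioner_rbac` are made up for illustration.

```shell
# Build the impersonation subject string for a service account.
# Usage: sa_subject <namespace> <service-account>
sa_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server about a few of the permissions granted in rbac.yaml.
# Each kubectl call prints "yes" or "no". Requires access to the cluster.
check_provisioner_rbac() (
  subject="$(sa_subject default nfs-client-provisioner)"
  kubectl auth can-i create persistentvolumes --as="$subject"
  kubectl auth can-i update persistentvolumeclaims --as="$subject"
  kubectl auth can-i list storageclasses.storage.k8s.io --as="$subject"
  kubectl auth can-i patch endpoints --as="$subject" --namespace default
)
```

Running `check_provisioner_rbac` after the apply step should print `yes` four times if the ClusterRole and Role are bound as intended.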

(2) Set up nfs-client

cat nfs-client.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: nfs-client-provisioner

---

kind: Deployment

apiVersion: apps/v1

metadata:

name: nfs-client-provisioner

spec:

replicas: 1

strategy:

type: Recreate

selector:

matchLabels:

app: nfs-client-provisioner

template:

metadata:

labels:

app: nfs-client-provisioner

spec:

serviceAccount: nfs-client-provisioner

containers:

- name: nfs-client-provisioner

image: quay.io/external_storage/nfs-client-provisioner:latest

volumeMounts:

- name: nfs-client-root

mountPath: /persistentvolumes

env:

- name: PROVISIONER_NAME

value: fuseim.pri/ifs

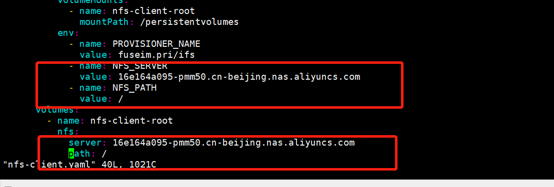

- name: NFS_SERVER

value: 16e164a095-pmm50.cn-beijing.nas.aliyuncs.com

- name: NFS_PATH

value: /

volumes:

- name: nfs-client-root

nfs:

server: 16e164a095-pmm50.cn-beijing.nas.aliyuncs.com

path: /

# Apply the configuration

kubectl apply -f nfs-client.yaml

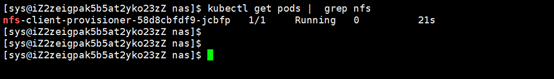

Created successfully.

NFS server address and mount directory (using Alibaba Cloud NAS):

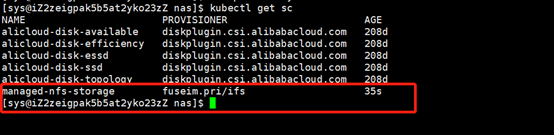

(3) Set up the StorageClass

cat pv-class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs
parameters:
  archiveOnDelete: "false"

# Apply the configuration

kubectl apply -f pv-class.yaml

Created successfully.
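Before deploying etcd, it can be worth checking that the class really provisions volumes on its own. The sketch below prints a throwaway PVC manifest that requests storage from `managed-nfs-storage`. The claim name `test-claim`, the `1Mi` size and the helper name `test_pvc_manifest` are assumptions chosen for illustration.

```shell
# Print a small PVC manifest that requests storage from the given class.
# Usage: test_pvc_manifest <claim-name> <storage-class>
test_pvc_manifest() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes: ["ReadWriteMany"]
  storageClassName: $2
  resources:
    requests:
      storage: 1Mi
EOF
}

# Example (needs cluster access):
#   test_pvc_manifest test-claim managed-nfs-storage | kubectl apply -f -
#   kubectl get pvc test-claim      # STATUS should become Bound
#   kubectl delete pvc test-claim   # clean up the test claim
```

If the claim stays in `Pending`, the provisioner Pod's logs and the `PROVISIONER_NAME` value (which must match the `provisioner` field of the StorageClass) are the first things to check.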

II. Deploy the etcd cluster

1. Create the configuration file

cd /data/etcd/

cat etcd.yaml

apiVersion: v1
kind: Service
metadata:
  name: etcd-headless
  namespace: default
  labels:
    app: etcd
spec:
  ports:
    - port: 2380
      name: etcd-server
    - port: 2379
      name: etcd-client
  clusterIP: None
  selector:
    app: etcd
  publishNotReadyAddresses: true
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: etcd
  name: etcd-svc
  namespace: default
spec:
  ports:
    - name: etcd-cluster
      port: 2379
      targetPort: 2379
      nodePort: 31168
  selector:
    app: etcd
  sessionAffinity: None
  type: NodePort
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: etcd
  name: etcd
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: etcd
  serviceName: etcd-headless
  template:
    metadata:
      labels:
        app: etcd
      name: etcd
    spec:
      containers:
        - env:
            - name: MY_POD_NAME # name of the current Pod
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: CLUSTER_NAMESPACE # namespace
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: SERVICE_NAME # name of the headless Service used for internal communication
              value: "etcd-headless"
            - name: INITIAL_CLUSTER # value of initial-cluster
              value: "etcd-0=http://etcd-0.etcd-headless:2380,etcd-1=http://etcd-1.etcd-headless:2380,etcd-2=http://etcd-2.etcd-headless:2380"
          image: registry-vpc.cn-beijing.aliyuncs.com/treesmob-dt/etcd:0.1
          imagePullPolicy: IfNotPresent
          name: etcd
          ports:
            - containerPort: 2380
              name: peer
              protocol: TCP
            - containerPort: 2379
              name: client
              protocol: TCP
          resources:
            requests:
              memory: "1Gi"
              cpu: "0.5"
            limits:
              memory: "1Gi"
              cpu: "0.5"
          volumeMounts:
            - name: etcd-pvc
              mountPath: /var/lib/etcd
  updateStrategy:
    type: OnDelete
  volumeClaimTemplates:
    - metadata:
        name: etcd-pvc
      spec:
        accessModes: ["ReadWriteMany"]
        storageClassName: managed-nfs-storage
        resources:
          requests:
            storage: 10Gi
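The manifest hands `MY_POD_NAME`, `SERVICE_NAME` and `INITIAL_CLUSTER` to a custom image whose entrypoint is not shown here. As a rough sketch of how those variables could map onto etcd's standard command-line flags, the helpers below rebuild the `INITIAL_CLUSTER` string for any replica count and print a plausible etcd command line. Both function names are hypothetical, and the real entrypoint of the `etcd:0.1` image may well differ.

```shell
# Build the --initial-cluster value for a StatefulSet with N replicas.
# Usage: initial_cluster <statefulset-name> <headless-service> <replicas>
initial_cluster() (
  name=$1 svc=$2 replicas=$3
  out="" i=0
  while [ "$i" -lt "$replicas" ]; do
    # Pods are named <statefulset>-<ordinal>; the peer port is 2380.
    out="${out}${out:+,}${name}-${i}=http://${name}-${i}.${svc}:2380"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
)

# Hypothetical entrypoint logic: print the etcd command line a Pod might run.
# Reads MY_POD_NAME, SERVICE_NAME and INITIAL_CLUSTER as set in etcd.yaml.
etcd_command() (
  peer="http://${MY_POD_NAME}.${SERVICE_NAME}:2380"
  client="http://${MY_POD_NAME}.${SERVICE_NAME}:2379"
  printf '%s ' etcd \
    --name "${MY_POD_NAME}" \
    --data-dir /var/lib/etcd \
    --listen-peer-urls http://0.0.0.0:2380 \
    --listen-client-urls http://0.0.0.0:2379 \
    --initial-advertise-peer-urls "$peer" \
    --advertise-client-urls "$client" \
    --initial-cluster "${INITIAL_CLUSTER}" \
    --initial-cluster-state new
  printf '\n'
)
```

`initial_cluster etcd etcd-headless 3` reproduces the value hard-coded in the manifest. Note that each member's `--name` and advertised peer URL have to match its entry in `--initial-cluster`, which is why the Pod name is used for both.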

2. Apply the configuration

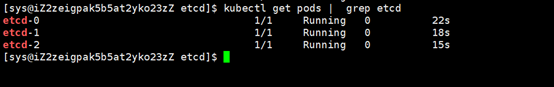

kubectl apply -f etcd.yaml

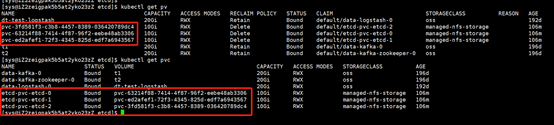

# The PVs and PVCs are created automatically

Created successfully.

3. Client verification

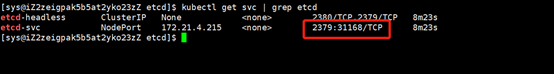

(1) Look up the NodePort service port of the etcd cluster

kubectl get svc | grep etcd

(2) Create a value

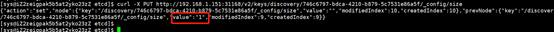

curl -X PUT http://192.168.1.151:31168/v2/keys/discovery/746c6797-bdca-4210-b879-5c7531e86a5f/_config/size -d value=1

(3) Get the value

curl http://192.168.1.151:31168/v2/keys/discovery/746c6797-bdca-4210-b879-5c7531e86a5f/_config/size
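The write and read steps can be wrapped into a small round-trip check. The sketch below assumes the v2 keys API used above and a GET response of the usual v2 shape (a JSON object with a `node.value` field). The helper names `etcd_v2_url` and `etcd_v2_value` are invented for illustration, and the `sed` pattern is a dependency-free shortcut rather than a real JSON parser.

```shell
# Build a v2 keys URL from host, port and key path.
# Usage: etcd_v2_url <host> <port> <key>
etcd_v2_url() {
  # ${3#/} drops one leading slash so "/foo" and "foo" give the same URL.
  printf 'http://%s:%s/v2/keys/%s\n' "$1" "$2" "${3#/}"
}

# Extract node.value from a v2 GET response read on stdin.
# Does not handle values that contain escaped double quotes.
etcd_v2_value() {
  sed -n 's/.*"value":"\([^"]*\)".*/\1/p'
}

# Example (needs network access to a cluster node):
#   port="$(kubectl get svc etcd-svc -o jsonpath='{.spec.ports[0].nodePort}')"
#   key=discovery/746c6797-bdca-4210-b879-5c7531e86a5f/_config/size
#   url="$(etcd_v2_url 192.168.1.151 "$port" "$key")"
#   curl -s -X PUT "$url" -d value=1 > /dev/null
#   curl -s "$url" | etcd_v2_value
```

If the last command prints the value that was just written, the NodePort service, the StatefulSet and the persistent volumes are all working together.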