I. Create the MDS (CephFS cluster)

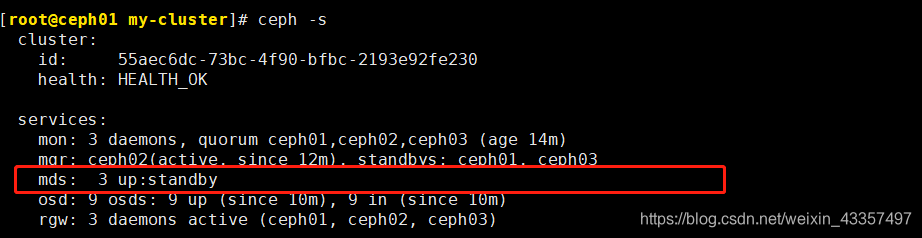

1. Create the MDS cluster

ceph-deploy mds create ceph01 ceph02 ceph03

2. Create the metadata pool and the data pool, then create the file system on top of them

ceph osd pool create cephfs_metadata 64

ceph osd pool create cephfs_data 64

ceph fs new cephfs cephfs_metadata cephfs_data

[root@ceph01 my-cluster]# ceph fs ls

name: cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_data ]
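To check from a script that the file system was created, the listing can be parsed instead of read by eye. The following is a minimal sketch; the function names fs_names and fs_exists are hypothetical helpers, and it assumes the one-line-per-file-system output format shown above.

```shell
# Print the name of every file system found in `ceph fs ls` output (read from stdin).
# Assumes lines of the form: "name: <fs>, metadata pool: <pool>, data pools: [<pools> ]".
fs_names() {
    sed -n 's/^name: \([^,]*\),.*/\1/p'
}

# Return 0 if the file system named "$1" appears in the listing on stdin, 1 otherwise.
fs_exists() {
    fs_names | grep -qxF -- "$1"
}

# Example (requires a running cluster):
#   ceph fs ls | fs_exists cephfs && echo "cephfs is present"
```

The helpers only read text, so they can be tried against saved output without touching the cluster.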

3. Mount CephFS with the mount command

[root@c1 ~]# cat /etc/ceph/ceph.client.admin.keyring

[client.admin]

key = AQDVV+Nf6nwoJRAA9DQaKs8jcHEXuby8YInhhQ==

caps mds = "allow *"

caps mgr = "allow *"

caps mon = "allow *"

caps osd = "allow *"

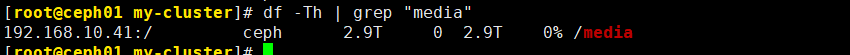

[root@c1 ~]# mount -t ceph ceph02:/ /media/ -o name=admin,secret=AQDVV+Nf6nwoJRAA9DQaKs8jcHEXuby8YInhhQ==

[root@c1 ~]# df -h /media/
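Copying the key by hand is error-prone, so it can be read from the keyring file instead. This is a small sketch; keyring_key is a hypothetical helper name, and it assumes the "key = <value>" layout shown in the keyring above.

```shell
# Print the secret key stored in a Ceph keyring file.
# $1 = path to the keyring, e.g. /etc/ceph/ceph.client.admin.keyring
# Assumes a line of the form "key = <base64 value>"; leading whitespace is ignored.
keyring_key() {
    awk '$1 == "key" && $2 == "=" { print $3; exit }' "$1"
}

# Example (same mount as above, without pasting the key):
#   mount -t ceph ceph02:/ /media/ \
#       -o name=admin,secret="$(keyring_key /etc/ceph/ceph.client.admin.keyring)"
```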

Mount automatically at boot

# 1. Save the key to a file

echo 'AQDVV+Nf6nwoJRAA9DQaKs8jcHEXuby8YInhhQ==' > /root/.secret.key

echo 'ceph01:6789:/ /media ceph name=admin,secretfile=/root/.secret.key,noatime,_netdev 0 0' >> /etc/fstab

mount -a
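Running the echo commands above twice would add a duplicate fstab line, and the secret file is created with default permissions. A possible sketch that avoids both is shown below; write_secret and add_fstab_entry are hypothetical helper names, and the paths are passed as arguments so the functions can be tried on scratch files first.

```shell
# Write the key to a secret file that only its owner can read.
# $1 = key, $2 = secret file path (the steps above use /root/.secret.key)
write_secret() {
    ( umask 077 && printf '%s\n' "$1" > "$2" )
    chmod 600 "$2"
}

# Append an fstab line only if an identical line is not already present.
# $1 = fstab path (normally /etc/fstab), $2 = the complete entry
add_fstab_entry() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Example (same entry as above):
#   write_secret 'AQDVV+Nf6nwoJRAA9DQaKs8jcHEXuby8YInhhQ==' /root/.secret.key
#   add_fstab_entry /etc/fstab 'ceph01:6789:/ /media ceph name=admin,secretfile=/root/.secret.key,noatime,_netdev 0 0'
#   mount -a
```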

4. CephFS management commands

# List all file systems

ceph fs ls

# Show the status of a file system

ceph fs status cephfs

# Change the number of active MDS daemons (max_mds)

ceph fs set cephfs max_mds 2

ceph fs set cephfs max_mds 1

1. Get and set the pool replica count

# ceph osd pool get testpool size

# ceph osd pool set testpool size 3

2. List pools

# ceph osd lspools

3. Create, rename, and delete pools

Create a pool

# ceph osd pool create testPool 64

Rename a pool

# ceph osd pool rename testPool amizPool

Get the pool replica count

# ceph osd pool get amizPool size

Set the pool replica count

# ceph osd pool set amizPool size 3

Get the pool's pg_num/pgp_num

# ceph osd pool get amizPool pg_num

# ceph osd pool get amizPool pgp_num

Set the pool's pg_num/pgp_num

# ceph osd pool set amizPool pg_num 128

# ceph osd pool set amizPool pgp_num 128
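The notes do not say how the value 128 was chosen. One commonly cited rule of thumb is (number of OSDs × 100) / replica count, rounded up to the next power of two; the sketch below implements only that arithmetic. The function name suggest_pg_num is hypothetical, the OSD count is an input you supply, and the result is a starting point rather than a recommendation for any particular cluster.

```shell
# Suggest a pg_num using the rule of thumb (OSDs * 100 / replicas),
# rounded up to the next power of two.
# $1 = number of OSDs, $2 = pool replica count (size)
suggest_pg_num() {
    pg_target=$(( $1 * 100 / $2 ))
    pg_value=1
    while [ "$pg_value" -lt "$pg_target" ]; do
        pg_value=$(( pg_value * 2 ))
    done
    echo "$pg_value"
}

# Example: a hypothetical cluster with 3 OSDs and 3 replicas
#   suggest_pg_num 3 3    # prints 128
```

As in the commands above, pgp_num would normally be set to the same value as pg_num.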

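Delete a pool

# ceph osd pool delete amizPool amizPool --yes-i-really-really-mean-it

If the deletion fails with the following error, the pool is still attached to CephFS as a data pool:

Error EBUSY: pool 'testpool' is in use by CephFS

Detach the pool from the file system first, then delete it. (On newer Ceph releases the equivalent of the first command is ceph fs rm_data_pool <fs_name> <pool>.)

# ceph mds remove_data_pool testpool

# ceph osd pool delete testpool testpool --yes-i-really-really-mean-it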

4. Set and view pool quotas

Set a pool quota

# ceph osd pool set-quota poolroom1 max_bytes 6G

View a pool quota

# ceph osd pool get-quota poolroom1

quotas for pool 'poolroom1':

max objects: N/A

max bytes : 6144MB # pool quota of 6G
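The quota was set as 6G but is reported as 6144MB because the suffix is treated as a power of 1024 (6 × 1024 = 6144). The sketch below reproduces that conversion so a quota can be checked before it is applied; to_bytes is a hypothetical helper, and it only handles whole numbers with an optional K, M, G, or T suffix.

```shell
# Convert a size such as 6G into bytes, treating K/M/G/T as powers of 1024.
# A value without a suffix is returned unchanged.
to_bytes() {
    size_num=${1%[KMGT]}
    case $1 in
        *K) echo $(( size_num * 1024 )) ;;
        *M) echo $(( size_num * 1024 * 1024 )) ;;
        *G) echo $(( size_num * 1024 * 1024 * 1024 )) ;;
        *T) echo $(( size_num * 1024 * 1024 * 1024 * 1024 )) ;;
        *)  echo "$size_num" ;;
    esac
}

# Example:
#   to_bytes 6G                              # prints 6442450944
#   echo $(( $(to_bytes 6G) / 1024 / 1024 )) # prints 6144
```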

II. Add CephFS persistent storage to a Kuboard K8S cluster

See the official Kuboard documentation: https://www.kuboard.cn/learning/k8s-intermediate/persistent/ceph/k8s-config.html#%E5%89%8D%E6%8F%90%E6%9D%A1%E4%BB%B6