pipelines.py (WeatherPipeline)

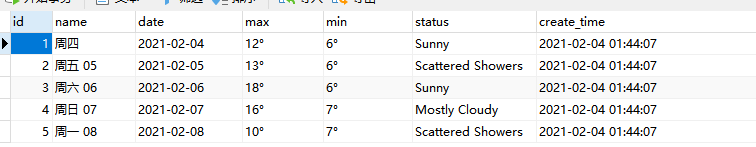

Writing items to the database

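# Define your item pipelines here
import pymysql
import pymysql.cursors


class WeatherPipeline:
    """Writes each scraped weather record into the MySQL `weather` table."""

    def __init__(self):
        # Database connection settings (replace with your own server and credentials)
        config = {
            "host": "124.76.81.53",
            "port": 3306,
            "user": "root",
            "password": "Mastertest.com",
            "database": 'scrapy',  # "db" is a deprecated alias of "database" in PyMySQL
            "charset": "utf8mb4",
            "cursorclass": pymysql.cursors.DictCursor,
        }
        # Connect by unpacking the config dict as keyword arguments
        self.db = pymysql.connect(**config)
        # Create a cursor object with cursor()
        self.cur = self.db.cursor()

    # Execute the SQL insert for each item
    def process_item(self, item, spider):
        # REPLACE INTO: if a row with the same `date` (UNIQUE KEY) exists, it is deleted and the new row inserted
        sql = "REPLACE INTO weather (`name`, `status`, `max`, `min`, `date`) VALUES (%s, %s, %s, %s, %s)"
        # Run the statement with execute(); PyMySQL escapes the parameters
        self.cur.execute(sql, (item["name"], item["status"], item["max"], item["min"], item["date"]))
        # fetchall() returns an empty result for REPLACE; printed only for debugging
        data = self.cur.fetchall()
        print("-----------------------------------test---------------------------")
        print(data)
        print("-----------------------------------test---------------------------")
        # Commit the transaction
        self.db.commit()
        return item

    # Close the cursor and the connection when the spider finishes
    def close_spider(self, spider):
        self.cur.close()
        self.db.close()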
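The pipeline uses `REPLACE INTO`, and the schema in the appendix puts a `UNIQUE KEY` on `date`. Together they mean that re-running the spider overwrites each day's row instead of adding duplicates.

The sketch below illustrates this behaviour with Python's built-in `sqlite3` as a stand-in for MySQL. This is an assumption made so the example runs without a server: SQLite also accepts `REPLACE INTO`, with similar delete-then-insert semantics on a unique-key conflict. The table is a simplified version of the real one.

```python
import sqlite3

# In-memory SQLite table standing in for the MySQL `weather` table (simplified columns)
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE weather ("
    " id INTEGER PRIMARY KEY AUTOINCREMENT,"
    " name TEXT NOT NULL, status TEXT, max TEXT, min TEXT,"
    " date TEXT UNIQUE)"
)

sql = "REPLACE INTO weather (name, status, max, min, date) VALUES (?, ?, ?, ?, ?)"

# First crawl: one row for 2021-02-01
conn.execute(sql, ("周一", "多云", "10°", "3°", "2021-02-01"))
# Later crawl of the same day with an updated forecast:
# the conflicting row is deleted and a new row is inserted
conn.execute(sql, ("周一", "小雨", "9°", "4°", "2021-02-01"))
conn.commit()

rows = conn.execute("SELECT id, status, max, min, date FROM weather").fetchall()
print(rows)  # a single row for the date, holding the latest values
```

The same holds in MySQL. One consequence is that the `id` of a replaced row changes, because the row is re-inserted rather than updated.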

items.py

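Field declarations (the spider below yields plain dicts with the same keys, so this class documents the fields rather than being strictly required)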

import scrapy


# Container for the scraped data
# Declares the item fields
class WeatherItem(scrapy.Item):
    # define the fields for your item here like:
    # Day label (e.g. a weekday)
    name = scrapy.Field()
    # Weather condition
    status = scrapy.Field()
    # Date
    date = scrapy.Field()
    # Maximum temperature
    max = scrapy.Field()
    # Minimum temperature
    min = scrapy.Field()
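Because the spider below yields plain dicts, `WeatherItem` is not strictly needed. If you want typed fields without `scrapy.Item`, Scrapy 2.2 and later also accept dataclass objects as items.

The following is a hypothetical equivalent. `WeatherRecord` is an illustrative name and is not part of the original project. Note that a dataclass is not subscriptable, so with it the pipeline would need `itemadapter.ItemAdapter(item)["name"]` (or `dataclasses.asdict`) instead of `item["name"]`.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class WeatherRecord:
    """Hypothetical dataclass mirroring the WeatherItem fields."""
    name: Optional[str] = None    # day label, e.g. a weekday
    status: Optional[str] = None  # weather condition
    date: Optional[str] = None    # YYYY-MM-DD
    max: Optional[str] = None     # maximum temperature (raw scraped text)
    min: Optional[str] = None     # minimum temperature (raw scraped text)


record = WeatherRecord(name="周一", status="多云", date="2021-02-01", max="10°", min="3°")
print(asdict(record))  # a plain dict with the same keys the pipeline reads
```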

settings.py

Register the pipeline

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'weather.pipelines.WeatherPipeline': 300,
}
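The integer assigned to each pipeline sets its order: Scrapy passes items from lower-valued to higher-valued pipelines, and the values are customarily kept within 0 to 1000.

As a hypothetical illustration, suppose a cleaning step `weather.pipelines.CleanPipeline` (not part of the original project) were registered at 200. It would see each item before `WeatherPipeline` at 300. The snippet only sorts the dict the way that ordering works; it does not run Scrapy.

```python
# Hypothetical settings with a second pipeline; CleanPipeline does not exist in the original project
ITEM_PIPELINES = {
    'weather.pipelines.WeatherPipeline': 300,
    'weather.pipelines.CleanPipeline': 200,
}

# Items flow through pipelines in ascending order of these values
run_order = [path for path, _ in sorted(ITEM_PIPELINES.items(), key=lambda kv: kv[1])]
print(run_order)
```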

Spider logic

sh.py

import datetime
import time

import scrapy


# Spider logic
class ShSpider(scrapy.Spider):
    # Spider name (required, do not remove)
    name = "sh"
    allowed_domains = ['weather.com']
    start_urls = [
        'https://weather.com/zh-CN/weather/today/l/7f14186934f484d567841e8646abc61b81cce4d88470d519beeb5e115c9b425a']

    def parse(self, response):
        # Daily forecast rows
        for li in response.css('div.DailyWeatherCard--TableWrapper--12r1N ul li'):
            # Day label, e.g. "今天" (today) or a weekday followed by the day of month
            name = li.css('a>h3>span::text').get()
            # Weather condition
            status = li.xpath('a/div[@class="Column--icon--1fMZT Column--small--3Qnmn"]/svg/title/text()').get()
            # Maximum temperature
            max_temp = li.xpath('a/div[@class="Column--temp--2v_go"]/span/text()').get()
            # Minimum temperature
            min_temp = li.xpath('a/div[@class="Column--tempLo--19O32"]/span/text()').get()
            # Date
            date = ""
            # Skip rows without a label instead of crashing on None (e.g. after a page layout change)
            if not name:
                continue
            # Derive the date from the label
            if name == "今天":
                # date = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
                date = time.strftime("%Y-%m-%d", time.localtime())
                name = get_week(date)
                print(date)
            else:
                # Extract the digits (the day of month) from the label
                number_str = ''.join(filter(str.isdigit, name))
                if number_str:
                    # Convert the digit string to an integer
                    number = int(number_str)
                    # Compute the full date from the day of month
                    date = get_date_by_diff(number)
                    print("text + digits", date)
                else:
                    print("text only")
            yield {
                "name": name,
                "status": status,
                "max": max_temp,
                "min": min_temp,
                "date": date,
            }


# Convert a day of month into a full date string (YYYY-MM-DD), assuming it is today or later.
# Subtracting today's day of month (day - now.day) gives the wrong month once the forecast
# crosses a month boundary (e.g. the 30th followed by the 1st), so step forward instead.
def get_date_by_diff(day):
    today = datetime.date.today()
    for offset in range(31):
        candidate = today + datetime.timedelta(days=offset)
        if candidate.day == day:
            return candidate.strftime("%Y-%m-%d")
    # No matching day within a month: leave the date empty
    return ""


# Return the Chinese weekday name for a date string such as 2021-02-01
def get_week(date):
    # isoweekday() returns 1-7 for Monday through Sunday
    dayOfWeek = datetime.datetime.strptime(date, "%Y-%m-%d").isoweekday()
    dicts = {
        '1': '周一',  # Monday
        '2': '周二',  # Tuesday
        '3': '周三',  # Wednesday
        '4': '周四',  # Thursday
        '5': '周五',  # Friday
        '6': '周六',  # Saturday
        '7': '周日',  # Sunday
    }
    return dicts[str(dayOfWeek)]
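The date logic in `parse` is easier to test as a pure function that takes the reference date as a parameter. The sketch below is a hypothetical refactor: `label_to_date` and `weekday_name` are not in the original code.

It assumes each label is either `今天` or contains the day of month as digits, e.g. `周二 02`. It resolves that day to the first matching date on or after the reference date, which also covers forecasts that run into the next month.

```python
import datetime
from typing import Optional

WEEKDAYS = ('周一', '周二', '周三', '周四', '周五', '周六', '周日')  # Monday..Sunday


def label_to_date(label: str, today: datetime.date) -> Optional[datetime.date]:
    """Map a forecast label such as '今天' or '周二 02' to a date (hypothetical helper)."""
    if label == "今天":  # "today"
        return today
    digits = ''.join(ch for ch in label if ch.isdigit())
    if not digits:
        return None  # text-only label: no day of month to resolve
    day = int(digits)
    # Walk forward from the reference date until the day of month matches
    for offset in range(31):
        candidate = today + datetime.timedelta(days=offset)
        if candidate.day == day:
            return candidate
    return None


def weekday_name(d: datetime.date) -> str:
    # isoweekday(): 1 = Monday ... 7 = Sunday
    return WEEKDAYS[d.isoweekday() - 1]


ref = datetime.date(2021, 1, 30)
print(label_to_date("周日 31", ref), label_to_date("周一 01", ref))  # 2021-01-31 2021-02-01
```

Passing `today` explicitly lets month-boundary cases be checked without waiting for the end of a real month.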

Add an entry-point script

entrypoint.py

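# Entry-point script
from scrapy import cmdline

# Raw string so the backslashes in the Windows path are not treated as escape sequences
cmdline.execute(['scrapy', 'runspider', r'F:\project\python\weather\weather\spiders\sh.py', '-o', 'test.json'])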

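Run this file from inside the project directory, so that settings.py and its ITEM_PIPELINES are loaded, to start the crawl. The scraped rows are also exported to test.json.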
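With `-o test.json`, Scrapy appends to an existing file. Running the entry script twice therefore leaves two JSON arrays back to back, which is no longer valid JSON. Newer Scrapy releases also offer `-O`, which overwrites the file instead.

A small stdlib check, such as the hypothetical `check_export` below (not part of the original project), can confirm that the file parses and that every record has the five keys the pipeline reads.

```python
import json
from pathlib import Path

# Keys yielded by ShSpider.parse and read by WeatherPipeline
EXPECTED_KEYS = {"name", "status", "max", "min", "date"}


def check_export(path: str) -> int:
    """Hypothetical helper: validate a Scrapy JSON export and return the record count."""
    text = Path(path).read_text(encoding="utf-8")
    records = json.loads(text)  # raises json.JSONDecodeError if two runs were appended
    for i, record in enumerate(records):
        missing = EXPECTED_KEYS - record.keys()
        if missing:
            raise ValueError(f"record {i} is missing {sorted(missing)}")
    return len(records)
```

After a run, `check_export("test.json")` returns the number of exported rows or raises an error describing the problem.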

Appendix:

Database schema

SET NAMES utf8mb4;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for weather
-- ----------------------------
DROP TABLE IF EXISTS `weather`;
CREATE TABLE `weather` (
  `id` int NOT NULL AUTO_INCREMENT,
  `name` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL,
  `date` varchar(255) COLLATE utf8mb4_general_ci DEFAULT NULL,
  `max` varchar(255) COLLATE utf8mb4_general_ci DEFAULT NULL,
  `min` varchar(255) COLLATE utf8mb4_general_ci DEFAULT NULL,
  `status` varchar(255) COLLATE utf8mb4_general_ci DEFAULT NULL,
  `create_time` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
  PRIMARY KEY (`id`) USING BTREE,
  UNIQUE KEY `date` (`date`) USING BTREE
) ENGINE=InnoDB AUTO_INCREMENT=6 DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_general_ci;
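The schema stores `max` and `min` as `varchar`, i.e. the raw scraped text. If numeric temperatures are wanted (for sorting, or for an `INT` column), a hypothetical helper like `parse_temp` could convert them before the pipeline writes them.

It assumes the text looks like `10°` or `-3°`. Anything without digits, such as a placeholder like `--`, is treated as missing. Check the actual page output before relying on it.

```python
import re
from typing import Optional

# Optional minus sign followed by digits, e.g. "10°" or "-3°" (assumed format)
_TEMP_RE = re.compile(r"-?\d+")


def parse_temp(text: Optional[str]) -> Optional[int]:
    """Hypothetical helper: turn scraped text like '10°' into 10; None if no number is present."""
    if not text:
        return None
    match = _TEMP_RE.search(text)
    return int(match.group()) if match else None


print(parse_temp("10°"), parse_temp("-3°"), parse_temp("--"), parse_temp(None))  # 10 -3 None None
```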