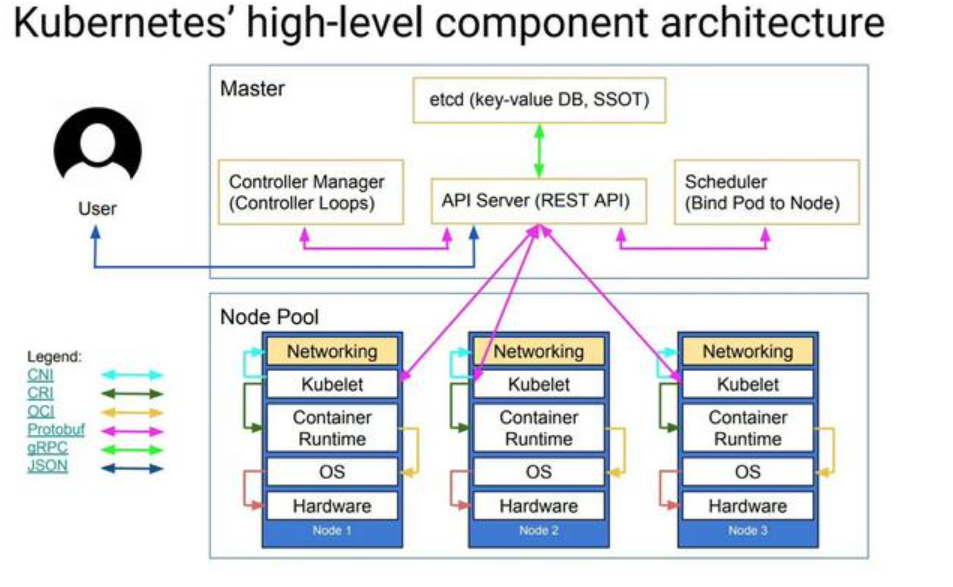

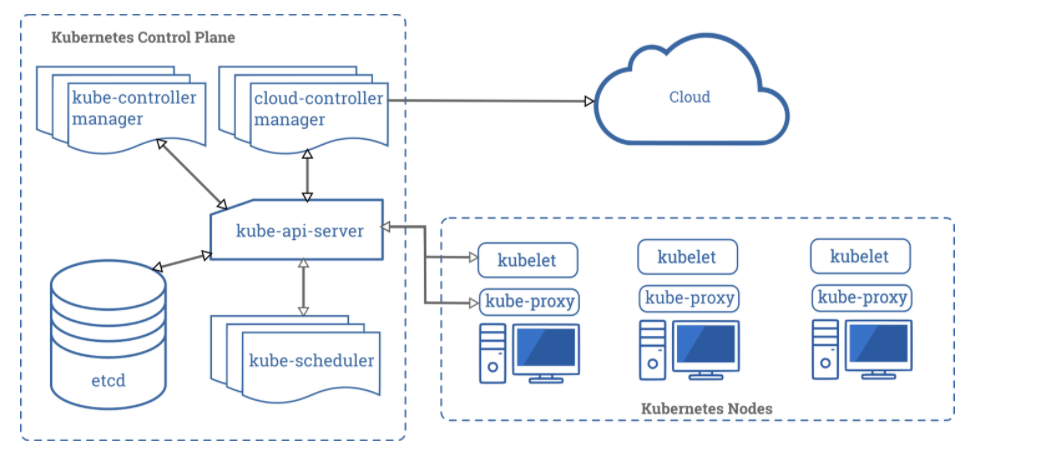

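Kubernetes architecture diagram

Functions of each k8s component

kubectl: the client command-line tool; it formats the commands it receives and sends them to kube-apiserver, serving as the operational entry point of the whole system.

REST API (kube-apiserver): the control entry point of the whole system, exposing its interface as a REST API service.

scheduler: responsible for node resource management; it receives Pod creation tasks from kube-apiserver and assigns each Pod to a node.

controller-manager: runs the background tasks of the whole system, including node status, Pod replica counts, and the association between Pods and Services.

controller-manager includes:

Node Controller: notices and responds when a node fails.

Replication Controller: maintains the correct number of Pods for every ReplicationController object in the system.

Endpoints Controller: populates Endpoints objects (that is, joins Services and Pods).

Service Account & Token Controllers: create the default account and API access tokens for new namespaces.

etcd: responsible for service discovery and configuration sharing between nodes; it acts as the cluster's database (a distributed key-value store).

kubelet: an agent program that runs on every compute node; it accepts the Pods assigned to its node, manages their containers, and periodically collects container status and reports it to kube-apiserver.

kube-proxy: must run on every compute node, where it handles network proxying for Pods. It watches Service information through kube-apiserver (which stores it in etcd) and applies the corresponding forwarding rules.

Kubernetes uses CNI (Container Network Interface) plugins to implement cross-host pod communication; flannel and calico are common choices.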

Installing with kubeadm

Install each component with yum

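The kubelet version must not be newer than the kube-apiserver version; it may be older, by at most two minor versions.

kube-controller-manager, kube-scheduler, and cloud-controller-manager must not be newer than kube-apiserver; they may be one minor version older, but matching versions are best.

kubectl may be one minor version newer or one minor version older than kube-apiserver.

Upgrade order: kube-apiserver, then kube-controller-manager, kube-scheduler, and cloud-controller-manager, then kubelet, then kube-proxy (kube-proxy must be the same version as kubelet, and the oldest kube-proxy version that kube-apiserver tolerates is the same as for kubelet).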
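The version windows can be checked mechanically before an upgrade. The following is a minimal bash sketch, not part of any Kubernetes tooling: the function names (`minor_of`, `skew_ok`, `kubelet_ok`, and so on) are hypothetical, and the windows encoded are assumptions taken from the rules in this section (kubelet up to two minor versions older, control-plane components up to one older, kubectl within one either way).

```shell
#!/usr/bin/env bash
# Extract the minor number from a version such as "v1.18.0" or "1.18.0".
minor_of() {
  local v="${1#v}"      # drop an optional leading "v"
  v="${v#*.}"           # drop the major part ("1.")
  echo "${v%%.*}"       # keep everything before the next dot
}

# skew_ok COMPONENT_VERSION APISERVER_VERSION MAX_OLDER MAX_NEWER
# Succeeds when the component's minor version lies within the allowed window.
skew_ok() {
  local comp api diff
  comp="$(minor_of "$1")"
  api="$(minor_of "$2")"
  diff=$(( api - comp ))          # positive: the component is older
  (( diff <= $3 && -diff <= $4 ))
}

# Windows as described in this section (assumed to share one major version).
kubelet_ok()            { skew_ok "$1" "$2" 2 0; }
controller_manager_ok() { skew_ok "$1" "$2" 1 0; }
kubectl_ok()            { skew_ok "$1" "$2" 1 1; }

kubelet_ok v1.16.4 v1.18.0 && echo "kubelet v1.16.4 is compatible with kube-apiserver v1.18.0"
kubectl_ok v1.20.0 v1.18.0 || echo "kubectl v1.20.0 is too new for kube-apiserver v1.18.0"
```

The check compares minor versions only; patch levels do not affect the skew rules.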

Prepare the environment (on all three machines)

swapoff -a

Disable the firewall, SELinux, and so on.
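Note that `swapoff -a` only lasts until the next reboot. One way to make it permanent is to comment out the swap entries in `/etc/fstab`. The sketch below is an assumption about how that could be scripted; `disable_swap_in_fstab` is a hypothetical helper, and it is demonstrated on a temporary file rather than the real fstab.

```shell
#!/usr/bin/env bash
# disable_swap_in_fstab FILE
# Comments out every active line that contains a whitespace-delimited "swap"
# field (the usual shape of a swap entry). A backup is kept as FILE.bak.
disable_swap_in_fstab() {
  local fstab="$1"
  sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Demonstration on a throwaway copy with made-up entries.
demo="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root /    xfs  defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo"
disable_swap_in_fstab "$demo"
cat "$demo"

# On a real host, as root: disable_swap_in_fstab /etc/fstab
```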

[root@master ~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@master ~]# sysctl --system

Install docker and configure a registry mirror (accelerator)
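The registry mirror is configured through the `registry-mirrors` key in Docker's `daemon.json`. The following sketch shows one way to write that file; `write_docker_mirror_config` is a hypothetical helper and `https://mirror.example.com` is a placeholder, not a real mirror, so substitute the address your provider gives you.

```shell
#!/usr/bin/env bash
# write_docker_mirror_config PATH MIRROR_URL
# Writes a minimal daemon.json that points Docker at one registry mirror.
write_docker_mirror_config() {
  local path="$1" mirror="$2"
  mkdir -p "$(dirname "$path")"
  cat > "$path" << EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}

# Demonstration in a temporary directory (placeholder mirror URL).
demo_dir="$(mktemp -d)"
write_docker_mirror_config "$demo_dir/daemon.json" "https://mirror.example.com"
cat "$demo_dir/daemon.json"

# On a real host, as root:
#   write_docker_mirror_config /etc/docker/daemon.json "<your mirror URL>"
#   systemctl daemon-reload && systemctl restart docker
```

The helper overwrites the target file, so merge by hand if `daemon.json` already holds other settings.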

Configure the k8s package repository

[root@master ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@master ~]# yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

Start kubelet

[root@master ~]# systemctl start kubelet && systemctl enable kubelet

Initialize the cluster

[root@master ~]# kubeadm init --apiserver-advertise-address=172.22.213.49 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.18.0 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16

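--apiserver-advertise-address=172.22.213.49 # IP address of the master

--image-repository registry.aliyuncs.com/google_containers # registry to pull images from (Alibaba Cloud here)

--kubernetes-version v1.18.0 # Kubernetes version; defaults to the latest version if omitted

--service-cidr=10.96.0.0/12 # virtual IP range for Services

--pod-network-cidr=10.244.0.0/16 # IP range for Pods; must match the CNI plugin's configuration (flannel defaults to 10.244.0.0/16)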
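The Service range and the Pod range should not overlap each other (or the host network). As a sanity check before running `kubeadm init`, the ranges can be compared with plain bash arithmetic. This is an illustrative sketch; `ip_to_int`, `cidr_range`, and `cidrs_overlap` are hypothetical helper names, and only IPv4 without leading zeros is handled.

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D -> prints the address as a 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# cidr_range ADDR/PREFIX -> prints "first last" of the range as integers
cidr_range() {
  local addr="${1%/*}" prefix="${1#*/}"
  local ip mask first last
  ip="$(ip_to_int "$addr")"
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  first=$(( ip & mask ))
  last=$(( first | (~mask & 0xFFFFFFFF) ))
  echo "$first $last"
}

# cidrs_overlap CIDR1 CIDR2 -> succeeds when the ranges share any address
cidrs_overlap() {
  local f1 l1 f2 l2
  read -r f1 l1 <<< "$(cidr_range "$1")"
  read -r f2 l2 <<< "$(cidr_range "$2")"
  (( f1 <= l2 && f2 <= l1 ))
}

if cidrs_overlap 10.96.0.0/12 10.244.0.0/16; then
  echo "ranges overlap"
else
  echo "ranges are disjoint"
fi
```

With the values used here, 10.96.0.0/12 spans 10.96.0.0 through 10.111.255.255, so it does not touch 10.244.0.0/16.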

Record the following output; it will be needed later

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.22.213.49:6443 --token ozhlzf.1g0dvh2vkg6k6461 \

--discovery-token-ca-cert-hash sha256:edafdcb29b96e64f75cc1901040321f045f9a568c0b72d85abb5aa2959815199

Set up the cluster credentials (kubeconfig)

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

If you have forgotten the sha256 hash needed to join the cluster, print a fresh join command with:

[root@master ~]# kubeadm token create --print-join-command
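If only the hash is needed (for example, the token is still valid), it can also be recomputed from the cluster CA certificate with openssl. The sketch below wraps that pipeline in a hypothetical helper, `ca_cert_hash`; it assumes an RSA CA key and the kubeadm default certificate path `/etc/kubernetes/pki/ca.crt`.

```shell
#!/usr/bin/env bash
# ca_cert_hash CERT_FILE
# Prints the SHA-256 digest of the certificate's DER-encoded public key,
# which is the value expected after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Only meaningful on the master, where kubeadm stores the CA certificate.
if [ -r /etc/kubernetes/pki/ca.crt ]; then
  echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
fi
```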

Deploy the CNI network plugin

[root@master ~]# kubectl apply -f kube-flannel.yaml

Link: https://github.com/coreos/flannel/blob/master/Documentation/kube-flannel.yml

Reset the cluster

[root@master ~]# kubeadm reset

Run on each node

[root@master ~]# kubeadm join 172.22.213.49:6443 --token ozhlzf.1g0dvh2vkg6k6461 \

--discovery-token-ca-cert-hash sha256:edafdcb29b96e64f75cc1901040321f045f9a568c0b72d85abb5aa2959815199

Run on the master

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 32m v1.18.0

node1 Ready <none> 25m v1.18.0

node2 Ready <none> 25m v1.18.0

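This shows that the nodes have joined.

To view resource usage for nodes or pods, a metrics component must be deployed.

Two options exist: heapster and metrics-server (heapster has since been deprecated in favor of metrics-server).

In Kubernetes the fundamental unit is still the container, but what you create is a pod: a pod wraps containers, and every pod contains at least one container.

Two ways to create a pod

[root@master ~]# kubectl apply -f <filename>

[root@master ~]# kubectl create -f <filename>

Delete a pod

[root@master ~]# kubectl delete -f <filename>

The difference between create and apply: create fails if the resource already exists, while apply can also update an existing resource with incremental changes.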

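Run a temporary pod

[root@master ~]# kubectl run web --image=nginx --dry-run=client # preview only; nothing is created

[root@master ~]# kubectl run web --image=nginx --dry-run=client -o yaml # print the generated manifest as YAML

[root@master ~]# kubectl describe pod web # view pod details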

Name: web

Namespace: default

Priority: 0

Node: node1/172.22.213.50

Start Time: Tue, 22 Dec 2020 19:05:26 +0800

Labels: xx=xx1

Annotations:

Status: Running

IP: 10.244.1.5

IPs:

IP: 10.244.1.5

Containers:

web:

Container ID: docker://bd9e421c3e1f3922bf6e14e9adb0508be44373fbde4be088a948b341bd77bfb5

Image: nginx

Image ID: docker-pullable://nginx@sha256:4cf620a5c81390ee209398ecc18e5fb9dd0f5155cd82adcbae532fec94006fb9

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Tue, 22 Dec 2020 19:05:42 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-4lr2p (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-4lr2p:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-4lr2p

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 23s default-scheduler Successfully assigned default/web to node1

Normal Pulling 22s kubelet, node1 Pulling image "nginx"

Normal Pulled 7s kubelet, node1 Successfully pulled image "nginx"

Normal Created 7s kubelet, node1 Created container web

Normal Started 7s kubelet, node1 Started container web

[root@master ~]#

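A pod can contain multiple containers, and multiple pods can be spread across different nodes, but the containers of a single pod cannot be split across nodes; they always run together on one node.

[root@master ~]# kubectl exec -it <pod-name> -- sh # open a terminal in the pod (use bash if the image provides it)

[root@master ~]# kubectl logs <pod-name> # view pod logs

[root@master ~]# kubectl describe pod <pod-name> # view pod details

Pod lifecycle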

nginx.yml

apiVersion: v1
kind: Pod
metadata:
  name: web
  labels:
    xx: xx1
spec:
  containers:
  - name: web
    image: nginx
    ports:
    - name: web
      containerPort: 80
      protocol: TCP

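Installing Kubernetes manually from binary packages

Environment initialization

Disable firewalld, SELinux, the swap partition, and so on; synchronize the system clocks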

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

# Download the cfssl certificate tools

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

# Make them executable

chmod +x /usr/local/bin/cfssl*

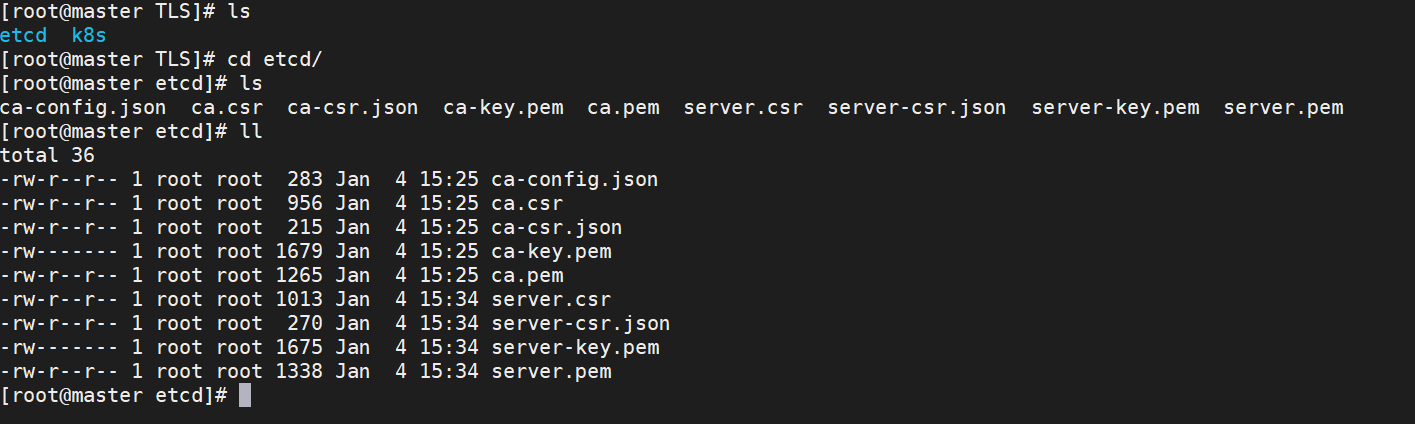

Create the etcd certificates

# Create the working directories:

mkdir -p ~/TLS/{etcd,k8s}

cd ~/TLS/etcd

# Create a self-signed CA

cat > ca-config.json<< EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

# etcd CA certificate request configuration

cat > ca-csr.json<< EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

# Generate the CA certificate (ca.pem and ca-key.pem)

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

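# The IPs in the hosts field below are the addresses the etcd members use to talk to each other; this setup uses a 3-member etcd cluster, and the list must cover every address used in etcd.conf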

cat > server-csr.json<< EOF

{

"CN": "etcd",

"hosts": [

"172.22.213.49",

"172.22.213.50",

"172.22.213.51"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

Download the etcd binary package

# Perform the same steps on all three machines

# Note: every machine must use the same certificates

https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz # download URL

mkdir -p /opt/etcd/{bin,cfg,ssl}

cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

tar zxvf etcd-v3.4.9-linux-amd64.tar.gz

mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

# /opt/etcd/cfg/etcd.conf on master (adjust the IP addresses for each node)

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.22.213.49:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.22.213.49:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.22.213.49:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.22.213.49:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://172.22.213.49:2380,etcd-2=https://172.22.213.50:2380,etcd-3=https://172.22.213.51:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

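Parameter reference

ETCD_NAME # node name, unique within the cluster

ETCD_DATA_DIR # data directory

ETCD_LISTEN_PEER_URLS # listen address for cluster (peer) traffic

ETCD_LISTEN_CLIENT_URLS # listen address for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS # peer address advertised to the rest of the cluster

ETCD_ADVERTISE_CLIENT_URLS # client address advertised to clients

ETCD_INITIAL_CLUSTER # addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN # cluster token

ETCD_INITIAL_CLUSTER_STATE # state when joining: new for a new cluster, existing to join an existing cluster

# Manage etcd as a systemd service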

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd.conf

ExecStart=/opt/etcd/bin/etcd \

--cert-file=/opt/etcd/ssl/server.pem \

--key-file=/opt/etcd/ssl/server-key.pem \

--peer-cert-file=/opt/etcd/ssl/server.pem \

--peer-key-file=/opt/etcd/ssl/server-key.pem \

--trusted-ca-file=/opt/etcd/ssl/ca.pem \

--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \

--logger=zap

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# /opt/etcd/cfg/etcd.conf on node1

#[Member]

ETCD_NAME="etcd-2"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.22.213.50:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.22.213.50:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.22.213.50:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.22.213.50:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://172.22.213.49:2380,etcd-2=https://172.22.213.50:2380,etcd-3=https://172.22.213.51:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

# /opt/etcd/cfg/etcd.conf on node2

#[Member]

ETCD_NAME="etcd-3"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.22.213.51:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.22.213.51:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.22.213.51:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.22.213.51:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://172.22.213.49:2380,etcd-2=https://172.22.213.50:2380,etcd-3=https://172.22.213.51:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
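The three etcd.conf files differ only in `ETCD_NAME` and the node's own IP address, so they can be generated from one template instead of being edited by hand. The following is a minimal sketch; `render_etcd_conf` and the `ETCD_CLUSTER` variable are hypothetical names, while the values are copied from the configurations in this section.

```shell
#!/usr/bin/env bash
# Cluster membership shared by all three members (values from this section).
ETCD_CLUSTER="etcd-1=https://172.22.213.49:2380,etcd-2=https://172.22.213.50:2380,etcd-3=https://172.22.213.51:2380"

# render_etcd_conf NAME IP -> prints an etcd.conf for one member
render_etcd_conf() {
  local name="$1" ip="$2"
  cat << EOF
#[Member]
ETCD_NAME="$name"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="https://$ip:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$ip:2379"
ETCD_INITIAL_CLUSTER="$ETCD_CLUSTER"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Print the configuration for the second member.
render_etcd_conf etcd-2 172.22.213.50

# On node1 the output could be written in place:
#   render_etcd_conf etcd-2 172.22.213.50 > /opt/etcd/cfg/etcd.conf
```

Keeping the member list in one variable avoids the three files drifting apart when an address changes.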

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl start etcd # run on all three nodes; the first node may appear to hang until a second member is up

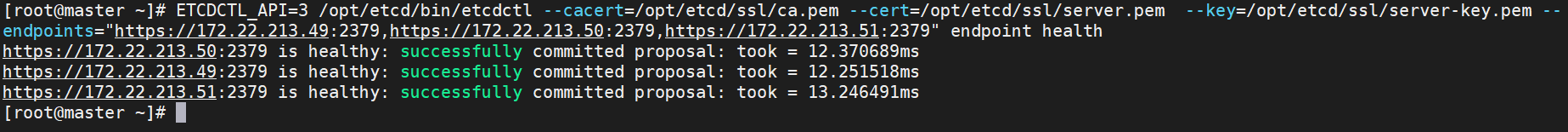

[root@master ~]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://172.22.213.49:2379,https://172.22.213.50:2379,https://172.22.213.51:2379" endpoint health

If every endpoint reports healthy, the cluster is working normally.
