笔记目录

学习目标

深度学习框架认识

学习内容

1、 整体概述和pytorch推荐书籍

推荐图书:动手学深度学习(pytorch版)

网页版:https://tangshusen.me/Dive-into-DL-PyTorch/#/

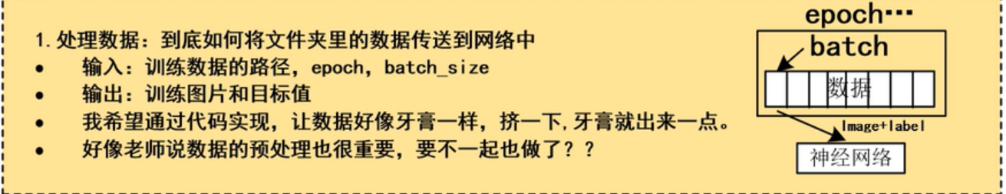

2、 处理数据

原理:

pycharm调试方法:

代码调试很重要

伪代码

dir = "./data/" #100张图片

def 挤牙膏操作(dir,batch_size):

读取文件夹(dir)

image ,label=按照batch_size进行数据的拆分(batch_size)

image=数据预处理(image)

return image ,label

for i in range(5): #5个epoch

for j in range(10):

image, label = 挤牙膏操作(dir, batch_size=10)

pre_label = 神经网络(image)

损失函数(pre_label,label)

pytorch模板

import os

import random

from PIL import Image

from torch.utils.data import Dataset

from torch.utils.data import DataLoader

import torchvision.transforms as transforms

import cv2

# class MYDataset(Dataset): #继承Dataset类

# def __init__(self, data_dir, transform=None): #定义3个方法

# ..

#

# def __getitem__(self, index):

# ..

# return ..,..

#

# def __len__(self):

# return len(..)

#

#

# train_dir='./' #存放数据文件夹

#

# train_data = MYDataset(data_dir=train_dir) #实例化

# # 构建DataLoder(DataLoder也是一个官方类)

# train_loader = DataLoader(dataset=train_data, batch_size=16)

#

pytorch代码

# -*- coding: utf-8 -*-

import os

import random

from PIL import Image

from torch.utils.data import Dataset

from torch.utils.data import DataLoader

import torchvision.transforms as transforms

import cv2

# random.seed(1)

#

#

# rmb_label = {

"1": 0, "100": 1} #定义两个类别(这里做人民币的)

#

train_transform = transforms.Compose([

transforms.Resize((32, 32)), #将图片大小变成一样的

# transforms.RandomCrop(32, padding=4),

transforms.ToTensor(), #将图片转成tensor形式

# transforms.Normalize(norm_mean, norm_std),

])

class RMBDataset(Dataset):

def __init__(self, data_dir, transform=None):

self.label_name = {

"1": 0, "100": 1}

self.data_info = self.get_img_info(data_dir) # data_info存储所有图片路径和标签,在DataLoader中通过index读取样本

self.transform = transform

def __getitem__(self, index):

path_img, label = self.data_info[index]

img = Image.open(path_img).convert('RGB') # 0~255

if self.transform is not None:

img = self.transform(img) # 在这里做transform,转为tensor等等

return img, label

def __len__(self):

return len(self.data_info)

@staticmethod

def get_img_info(data_dir):

data_info = list()

rmb_label = {

"1": 0, "100": 1}

for root, dirs, _ in os.walk(data_dir): #

# 遍历类别

for sub_dir in dirs:

img_names = os.listdir(os.path.join(root, sub_dir))

img_names = list(filter(lambda x: x.endswith('.jpg'), img_names))

# 遍历图片

for i in range(len(img_names)):

img_name = img_names[i]

path_img = os.path.join(root, sub_dir, img_name)

label = rmb_label[sub_dir]

data_info.append((path_img, int(label)))

return data_info

train_dir='./RMB_data/rmb_split/train/'

train_data = RMBDataset(data_dir=train_dir, transform=train_transform)

# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=16)

for epoch in range(10):

for i, data in enumerate(train_loader):

pass

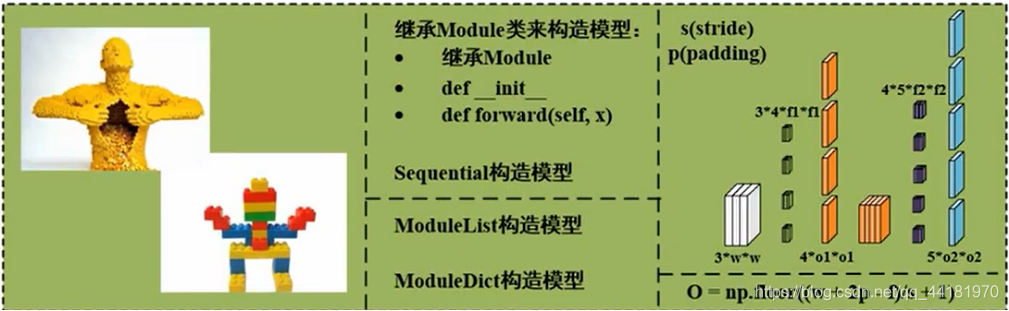

3、 构建网络

pytorch程序

import os

import random

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

from collections import OrderedDict

class LeNet(nn.Module):

def __init__(self,classes):

super(LeNet, self).__init__()

self.conv1 = nn.Conv2d(3, 6, 5) #(32+2*0-5)/1+1 = 28 卷积操作 输入 输出 卷积核

self.conv2 = nn.Conv2d(6, 16, 5)

self.fc1 = nn.Linear(16*5*5, 120) #用于设置网络中的全连接层

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, classes)

def forward(self, x): #4 3 32 32 ->nn.Conv2d(3, 6, 5)-> 4 6 28 28

out = F.relu(self.conv1(x)) #32->28 4 6 28 28

out = F.max_pool2d(out, 2) #4 6 14 14 池化

out = F.relu(self.conv2(out)) # 4 16 10 10

out = F.max_pool2d(out, 2) # 4 16 5 5

out = out.view(out.size(0), -1) #4 400

out = F.relu(self.fc1(out))

out = F.relu(self.fc2(out))

out = self.fc3(out)

return out

def initialize_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.xavier_normal_(m.weight.data)

if m.bias is not None:

m.bias.data.zero_()

elif isinstance(m, nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight.data, 0, 0.1)

m.bias.data.zero_()

# net = LeNet(classes=3)

#

#

# fake_img = torch.randn((4, 3, 32, 32), dtype=torch.float32) #4:batch_size

# output = net(fake_img)

# print('over!')

#

pytorch提供的容器

# ============================ Sequential

#顺序

class LeNetSequential(nn.Module):

def __init__(self, classes):

super(LeNetSequential, self).__init__()

self.features = nn.Sequential(

nn.Conv2d(3, 6, 5),

nn.ReLU(),

nn.MaxPool2d(kernel_size=2, stride=2),

nn.Conv2d(6, 16, 5),

nn.ReLU(),

nn.MaxPool2d(kernel_size=2, stride=2),)

self.classifier = nn.Sequential(

nn.Linear(16*5*5, 120),

nn.ReLU(),

nn.Linear(120, 84),

nn.ReLU(),

nn.Linear(84, classes),)

def forward(self, x):

x = self.features(x)

x = x.view(x.size()[0], -1)

x = self.classifier(x)

return x

class LeNetSequentialOrderDict(nn.Module):

def __init__(self, classes):

super(LeNetSequentialOrderDict, self).__init__()

self.features = nn.Sequential(OrderedDict({

'conv1': nn.Conv2d(3, 6, 5),

'relu1': nn.ReLU(inplace=True),

'pool1': nn.MaxPool2d(kernel_size=2, stride=2),

'conv2': nn.Conv2d(6, 16, 5),

'relu2': nn.ReLU(inplace=True),

'pool2': nn.MaxPool2d(kernel_size=2, stride=2),

}))

self.classifier = nn.Sequential(OrderedDict({

'fc1': nn.Linear(16*5*5, 120),

'relu3': nn.ReLU(),

'fc2': nn.Linear(120, 84),

'relu4': nn.ReLU(inplace=True),

'fc3': nn.Linear(84, classes),

}))

def forward(self, x):

x = self.features(x)

x = x.view(x.size()[0], -1)

x = self.classifier(x)

return x

# net = LeNetSequential(classes=2)

# net = LeNetSequentialOrderDict(classes=2)

# # #

# fake_img = torch.randn((4, 3, 32, 32), dtype=torch.float32)

# # #

# output = net(fake_img)

#

# print(net)

# print(output)

# ============================ ModuleList

#列表 迭代性

class myModuleList(nn.Module):

def __init__(self):

super(myModuleList, self).__init__()

modullist_temp = [nn.Linear(10, 10) for i in range(20)]

self.linears = nn.ModuleList(modullist_temp)

def forward(self, x):

for i, linear in enumerate(self.linears):

x = linear(x)

return x

# net = myModuleList()

# #

# # print(net)

# #

# fake_data = torch.ones((10, 10))

# #

# output = net(fake_data)

# #

# print(output)

# ============================ ModuleDict

#字典(可选择)索引性

class myModuleDict(nn.Module):

def __init__(self):

super(myModuleDict, self).__init__()

self.choices = nn.ModuleDict({

'conv': nn.Conv2d(10, 10, 3),

'pool': nn.MaxPool2d(3)

})

self.activations = nn.ModuleDict({

'relu': nn.ReLU(),

'prelu': nn.PReLU()

})

def forward(self, x, choice, act):

x = self.choices[choice](x)

x = self.activations[act](x)

return x

#

# net = myModuleDict()

# #

# fake_img = torch.randn((4, 10, 32, 32))

# #

# output = net(fake_img, 'conv', 'relu')

#

# print(output)

4、损失函数与优化函数

代码

import torch

import torch.nn as nn

import torch.optim as optim

# ============================ step 3/5 损失函数 ============================

#一般情况下不用自己写损失函数

criterion = nn.CrossEntropyLoss() # 选择损失函数

# ============================ step 4/5 优化器 ============================

# /'ɒptɪmaɪzə/ /ˈskedʒuːlər/

optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9) # 选择优化器

scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1) # 设置学习率下降策略

for epoch in range(10):

for i, data in enumerate(train_loader):

# forward

inputs, labels = data

outputs = net(inputs)

# backward

optimizer.zero_grad()

loss = criterion(outputs, labels) #输出值 期望值

loss.backward()

# update weights

optimizer.step()

scheduler.step() # 更新学习率

5、完整项目(RMB识别)

# -*- coding: utf-8 -*-

import os

import random

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.utils.data import DataLoader

from torch.utils.data import Dataset

import torchvision.transforms as transforms

import torch.optim as optim

from matplotlib import pyplot as plt

from PIL import Image

def set_seed(seed=1):

random.seed(seed)

np.random.seed(seed)

torch.manual_seed(seed)

torch.cuda.manual_seed(seed)

#数据导入

class RMBDataset(Dataset):

def __init__(self, data_dir, transform=None):

self.label_name = {

"1": 0, "100": 1}

self.data_info = self.get_img_info(data_dir) # data_info存储所有图片路径和标签,在DataLoader中通过index读取样本

self.transform = transform

def __getitem__(self, index):

path_img, label = self.data_info[index]

img = Image.open(path_img).convert('RGB') # 0~255

if self.transform is not None:

img = self.transform(img) # 在这里做transform,转为tensor等等

return img, label

def __len__(self):

return len(self.data_info)

@staticmethod

def get_img_info(data_dir):

data_info = list()

for root, dirs, _ in os.walk(data_dir):

# 遍历类别

for sub_dir in dirs:

img_names = os.listdir(os.path.join(root, sub_dir))

img_names = list(filter(lambda x: x.endswith('.jpg'), img_names))

# 遍历图片

for i in range(len(img_names)):

img_name = img_names[i]

path_img = os.path.join(root, sub_dir, img_name)

label = rmb_label[sub_dir]

data_info.append((path_img, int(label)))

return data_info

#网络构建

class LeNet(nn.Module):

def __init__(self, classes):

super(LeNet, self).__init__()

self.conv1 = nn.Conv2d(3, 6, 5)

self.conv2 = nn.Conv2d(6, 16, 5)

self.fc1 = nn.Linear(16*5*5, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, classes)

def forward(self, x):

out = F.relu(self.conv1(x))

out = F.max_pool2d(out, 2)

out = F.relu(self.conv2(out))

out = F.max_pool2d(out, 2)

out = out.view(out.size(0), -1)

out = F.relu(self.fc1(out))

out = F.relu(self.fc2(out))

out = self.fc3(out)

return out

def initialize_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.xavier_normal_(m.weight.data)

if m.bias is not None:

m.bias.data.zero_()

elif isinstance(m, nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight.data, 0, 0.1)

m.bias.data.zero_()

set_seed() # 设置随机种子

rmb_label = {

"1": 0, "100": 1}

# 参数设置

MAX_EPOCH = 10

BATCH_SIZE = 5

LR = 0.01

log_interval = 10

val_interval = 1

# ============================ step 1/5 数据 ============================

split_dir = os.path.join(".", "RMB_data", "rmb_split")

train_dir = os.path.join(split_dir, "train")

valid_dir = os.path.join(split_dir, "valid")

norm_mean = [0.485, 0.456, 0.406] #均值

norm_std = [0.229, 0.224, 0.225] #方差

train_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.RandomCrop(32, padding=4),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

valid_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std), #归一化

])

# 构建MyDataset实例

train_data = RMBDataset(data_dir=train_dir, transform=train_transform)

valid_data = RMBDataset(data_dir=valid_dir, transform=valid_transform)

# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

# ============================ step 2/5 模型 ============================

net = LeNet(classes=2)

net.initialize_weights() #网络初始化 梯度消失和爆炸

# ============================ step 3/5 损失函数 ============================

criterion = nn.CrossEntropyLoss() # 选择损失函数

# ============================ step 4/5 优化器 ============================

optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9) # 选择优化器

scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1) # 设置学习率下降策略

# ============================ step 5/5 训练 ============================

train_curve = list()

valid_curve = list()

for epoch in range(MAX_EPOCH):

loss_mean = 0.

correct = 0.

total = 0.

net.train()

for i, data in enumerate(train_loader):

# forward

inputs, labels = data

outputs = net(inputs)

# backward

optimizer.zero_grad()

loss = criterion(outputs, labels)

loss.backward()

# update weights

optimizer.step()

# 统计分类情况

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).squeeze().sum().numpy()

# 打印训练信息

loss_mean += loss.item()

train_curve.append(loss.item())

if (i+1) % log_interval == 0:

loss_mean = loss_mean / log_interval

print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))

loss_mean = 0.

scheduler.step() # 更新学习率

# validate the model

if (epoch+1) % val_interval == 0:

correct_val = 0.

total_val = 0.

loss_val = 0.

net.eval()

with torch.no_grad():

for j, data in enumerate(valid_loader):

inputs, labels = data

outputs = net(inputs)

loss = criterion(outputs, labels)

_, predicted = torch.max(outputs.data, 1)

total_val += labels.size(0)

correct_val += (predicted == labels).squeeze().sum().numpy()

loss_val += loss.item()

valid_curve.append(loss_val)

print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val, correct / total))

train_x = range(len(train_curve))

train_y = train_curve

train_iters = len(train_loader)

valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval # 由于valid中记录的是epochloss,需要对记录点进行转换到iterations

valid_y = valid_curve

plt.plot(train_x, train_y, label='Train')

plt.plot(valid_x, valid_y, label='Valid')

plt.legend(loc='upper right')

plt.ylabel('loss value')

plt.xlabel('Iteration')

plt.show()

# # ============================ inference ============================

#

# BASE_DIR = os.path.dirname(os.path.abspath(__file__))

# test_dir = os.path.join(BASE_DIR, "test_data")

#

# test_data = RMBDataset(data_dir=test_dir, transform=valid_transform)

# valid_loader = DataLoader(dataset=test_data, batch_size=1)

#

# for i, data in enumerate(valid_loader):

# # forward

# inputs, labels = data

# outputs = net(inputs)

# _, predicted = torch.max(outputs.data, 1)

#

# rmb = 1 if predicted.numpy()[0] == 0 else 100

# print("模型获得{}元".format(rmb))

5、GPU训练,模型的保存与加载

原理: 将数据和模型同时放到GPU上

代码

import argparse

import torch

import torch.nn as nn

from torch.utils.data import DataLoader

import torchvision.transforms as transforms

from dataset import RMBDataset

from model import LeNet

import torch.optim as optim

import utils

def train():

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

utils.set_seed()

# ============================ step 1/5 数据 ============================

norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]

train_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.RandomCrop(32, padding=4),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

valid_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

# 构建MyDataset实例

train_data = RMBDataset(data_dir=opt.train_dir, transform=train_transform)

valid_data = RMBDataset(data_dir=opt.valid_dir, transform=valid_transform)

# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=opt.batch_size, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=opt.batch_size)

# ============================ step 2/5 模型 ============================

net = LeNet(classes=2).to(device)

# net.initialize_weights()

# ============================ step 3/5 损失函数 ============================

criterion = nn.CrossEntropyLoss() # 选择损失函数

# ============================ step 4/5 优化器 ============================

optimizer = optim.SGD(net.parameters(), lr=opt.lr, momentum=0.9) # 选择优化器

scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1) # 设置学习率下降策略

# ============================ step 5/5 训练 ============================

train_curve = list()

valid_curve = list()

for epoch in range(opt.epochs):

loss_mean = 0.

correct = 0.

total = 0.

net.train()

for i, data in enumerate(train_loader):

# forward

inputs, labels = data

inputs = inputs.to(device)

labels = labels.to(device)

outputs = net(inputs)

# backward

optimizer.zero_grad()

loss = criterion(outputs, labels)

loss.backward()

# update weights

optimizer.step()

# 统计分类情况

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).to("cpu").squeeze().sum().numpy()

# 打印训练信息

loss_mean += loss.item()

train_curve.append(loss.item())

if (i + 1) % opt.log_interval == 0:

loss_mean = loss_mean / opt.log_interval

print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, opt.epochs, i + 1, len(train_loader), loss_mean, correct / total))

loss_mean = 0.

scheduler.step() # 更新学习率

# validate the model

if (epoch + 1) % opt.val_interval == 0:

correct_val = 0.

total_val = 0.

loss_val = 0.

net.eval()

with torch.no_grad():

for j, data in enumerate(valid_loader):

inputs, labels = data

inputs = inputs.to(device)

labels = labels.to(device)

outputs = net(inputs)

loss = criterion(outputs, labels)

_, predicted = torch.max(outputs.data, 1)

total_val += labels.size(0)

correct_val += (predicted == labels).to("cpu").squeeze().sum().numpy()

loss_val += loss.item()

valid_curve.append(loss_val)

print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, opt.epochs, j + 1, len(valid_loader), loss_val, correct / total))

# 保存模型参数

net_state_dict = net.state_dict() #将数据放到字典里

torch.save(net_state_dict, opt.path_state_dict) #保存 路径

print("save~")

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--epochs', type=int, default=20) # 500200 batches at bs 16, 117263 COCO images = 273 epochs

parser.add_argument('--batch-size', type=int, default=5) # effective bs = batch_size * accumulate = 16 * 4 = 64

parser.add_argument('--lr', type=float, default=0.01)

parser.add_argument('--log_interval', type=int, default=10)

parser.add_argument('--val_interval', type=int, default=1)

parser.add_argument('--train_dir', type=str, default='./RMB_data/rmb_split/train')

parser.add_argument('--valid_dir', type=str, default='./RMB_data/rmb_split/valid')

parser.add_argument('--path_state_dict', type=str, default='./model_state_dict.pkl')

opt = parser.parse_args()

train()

学习时间

2021.1.20

学习产出

1、 csdn笔记 1篇总结

1.学习到了调试方法明白了如何快速读懂程序(调试)。

2.学到了一种新的学习方法,先看别人的程序了解思想再将其转换成自己的程序变成自己的东西。