How to Submit Spark Jobs

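Spark jobs are submitted with either the spark-shell command or the spark-submit command.

Use spark-shell, Spark's interactive command line, to run test programs.

Use spark-submit to submit a Spark program to a Spark cluster for execution.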

1. spark-shell

spark-shell is the interactive shell bundled with Spark. It is convenient for interactive programming: users can write Spark programs in Scala directly at its prompt.

1.1 Overview

1. spark-shell help

(py27) [root@master ~]# cd /usr/local/src/spark-2.0.2-bin-hadoop2.6

(py27) [root@master spark-2.0.2-bin-hadoop2.6]# cd bin

(py27) [root@master bin]# ./spark-shell --help

Usage: ./bin/spark-shell [options]

Options:

--master MASTER_URL spark://host:port, mesos://host:port, yarn, or local.

--deploy-mode DEPLOY_MODE Whether to launch the driver program locally ("client") or

on one of the worker machines inside the cluster ("cluster")

(Default: client).

--class CLASS_NAME Your application's main class (for Java / Scala apps).

--name NAME A name of your application.

--jars JARS Comma-separated list of local jars to include on the driver

and executor classpaths.

--packages Comma-separated list of maven coordinates of jars to include

on the driver and executor classpaths. Will search the local

maven repo, then maven central and any additional remote

repositories given by --repositories. The format for the

coordinates should be groupId:artifactId:version.

--exclude-packages Comma-separated list of groupId:artifactId, to exclude while

resolving the dependencies provided in --packages to avoid

dependency conflicts.

--repositories Comma-separated list of additional remote repositories to

search for the maven coordinates given with --packages.

--py-files PY_FILES Comma-separated list of .zip, .egg, or .py files to place

on the PYTHONPATH for Python apps.

--files FILES Comma-separated list of files to be placed in the working

directory of each executor.

--conf PROP=VALUE Arbitrary Spark configuration property.

--properties-file FILE Path to a file from which to load extra properties. If not

specified, this will look for conf/spark-defaults.conf.

--driver-memory MEM Memory for driver (e.g. 1000M, 2G) (Default: 1024M).

--driver-java-options Extra Java options to pass to the driver.

--driver-library-path Extra library path entries to pass to the driver.

--driver-class-path Extra class path entries to pass to the driver. Note that

jars added with --jars are automatically included in the

classpath.

--executor-memory MEM Memory per executor (e.g. 1000M, 2G) (Default: 1G).

--proxy-user NAME User to impersonate when submitting the application.

This argument does not work with --principal / --keytab.

--help, -h Show this help message and exit.

--verbose, -v Print additional debug output.

--version, Print the version of current Spark.

Spark standalone with cluster deploy mode only:

--driver-cores NUM Cores for driver (Default: 1).

Spark standalone or Mesos with cluster deploy mode only:

--supervise If given, restarts the driver on failure.

--kill SUBMISSION_ID If given, kills the driver specified.

--status SUBMISSION_ID If given, requests the status of the driver specified.

Spark standalone and Mesos only:

--total-executor-cores NUM Total cores for all executors.

Spark standalone and YARN only:

--executor-cores NUM Number of cores per executor. (Default: 1 in YARN mode,

or all available cores on the worker in standalone mode)

YARN-only:

--driver-cores NUM Number of cores used by the driver, only in cluster mode

(Default: 1).

--queue QUEUE_NAME The YARN queue to submit to (Default: "default").

--num-executors NUM Number of executors to launch (Default: 2).

If dynamic allocation is enabled, the initial number of

executors will be at least NUM.

--archives ARCHIVES Comma separated list of archives to be extracted into the

working directory of each executor.

--principal PRINCIPAL Principal to be used to login to KDC, while running on

secure HDFS.

--keytab KEYTAB The full path to the file that contains the keytab for the

principal specified above. This keytab will be copied to

the node running the Application Master via the Secure

Distributed Cache, for renewing the login tickets and the

delegation tokens periodically.

- spark-shell source

(py27) [root@master bin]# cat spark-shell

function main() {

if $cygwin; then

# Workaround for issue involving JLine and Cygwin

# (see http://sourceforge.net/p/jline/bugs/40/).

# If you're using the Mintty terminal emulator in Cygwin, may need to set the

# "Backspace sends ^H" setting in "Keys" section of the Mintty options

# (see https://github.com/sbt/sbt/issues/562).

stty -icanon min 1 -echo > /dev/null 2>&1

export SPARK_SUBMIT_OPTS="$SPARK_SUBMIT_OPTS -Djline.terminal=unix"

"${SPARK_HOME}"/bin/spark-submit --class org.apache.spark.repl.Main --name "Spark shell" "$@"

stty icanon echo > /dev/null 2>&1

else

export SPARK_SUBMIT_OPTS

"${SPARK_HOME}"/bin/spark-submit --class org.apache.spark.repl.Main --name "Spark shell" "$@"

fi

}

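- The main function of spark-shell runs the spark-submit script with --name set to "Spark shell". This explains why an application started from spark-shell appears under the name "Spark shell" in the web UI. The script also sets the main class to org.apache.spark.repl.Main. It is reasonable to infer that this class creates the SparkContext object, because a ready-made SparkContext is available as soon as spark-shell starts.

- The source shows that spark-shell ultimately runs the spark-submit command.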
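
The forwarding pattern in main() can be tried in isolation. The sketch below is not Spark code: spark_submit_stub is a hypothetical stand-in that only prints the arguments it receives, so the snippet runs without a Spark installation. It shows that the fixed --class and --name options come first and that everything the user typed is appended unchanged through "$@".

```shell
# Hypothetical stand-in for bin/spark-submit: prints every argument in brackets.
spark_submit_stub() {
  printf '[%s]' "$@"
  printf '\n'
}

# Same shape as main() in bin/spark-shell: fixed options first,
# then all user-supplied arguments forwarded unchanged via "$@".
spark_shell_sketch() {
  spark_submit_stub --class org.apache.spark.repl.Main --name "Spark shell" "$@"
}

# The quoted name "Spark shell" stays a single argument.
spark_shell_sketch --master yarn
```

Because "$@" is quoted, arguments that contain spaces survive the hand-off intact, which is why the application name arrives as one value rather than two.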

1.2 Startup

1. Launch spark-shell directly from the bin directory

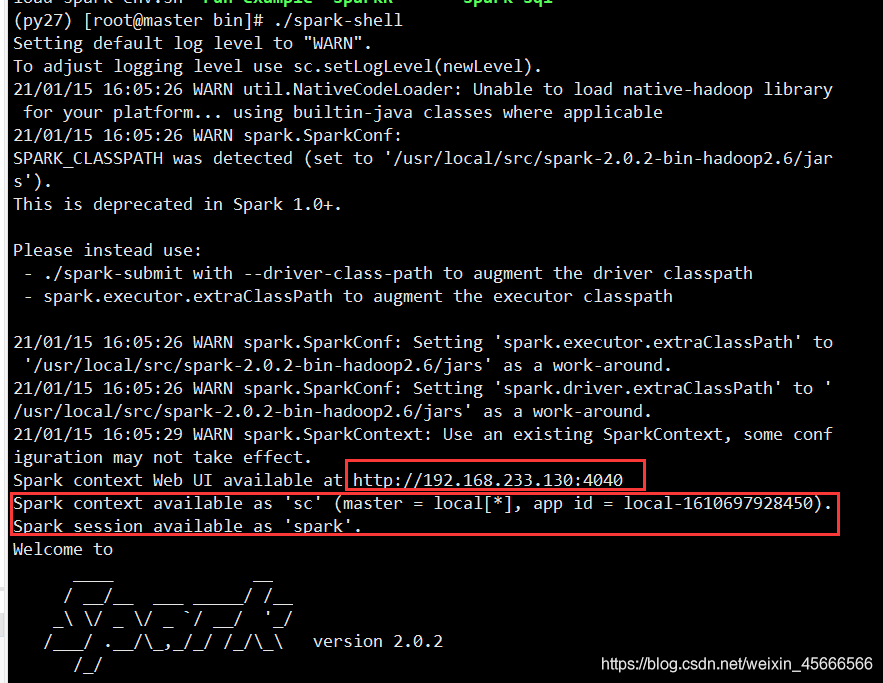

./spark-shell

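- With no arguments, spark-shell starts in local mode and launches a SparkSubmit process on the local machine.

- The --master option can also be given, for example:

spark-shell --master local[N] runs the job locally with N worker threads.

spark-shell --master local[*] runs locally with as many worker threads as the machine has logical cores.

- Unless a master is set elsewhere (for example in conf/spark-defaults.conf), running without arguments is equivalent to:

spark-shell --master local[*]

- To exit spark-shell:

:quit or the shortcut Ctrl+D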

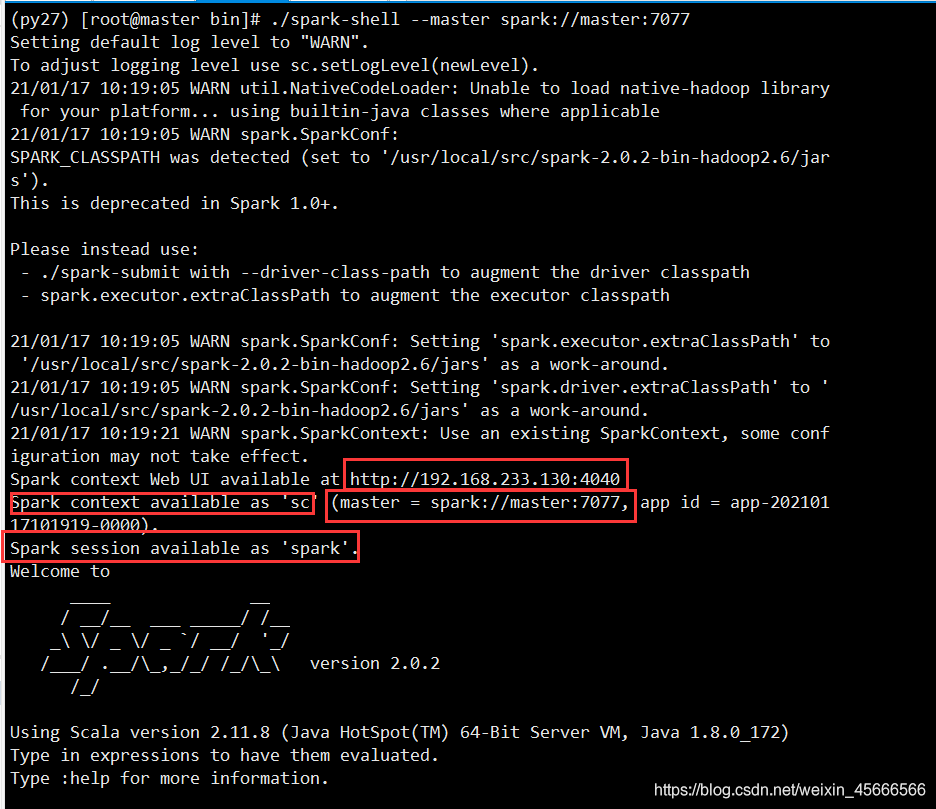
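
As a small illustration of the local[N] and local[*] forms described above, the following sketch builds the master URL from an optional thread count and prints the resulting command instead of launching the shell. The helper name local_master is an assumption made for this example and is not part of Spark.

```shell
# Hypothetical helper: a string of digits N gives local[N];
# a missing or non-numeric value falls back to local[*].
local_master() {
  case "${1:-}" in
    ''|*[!0-9]*) echo 'local[*]' ;;
    *)           echo "local[$1]" ;;
  esac
}

# Dry run: print the command lines rather than starting spark-shell.
# The master value is quoted so the shell does not treat [ ] or * as a glob.
echo "./spark-shell --master '$(local_master 4)'"
echo "./spark-shell --master '$(local_master)'"
```

Quoting matters when the command is typed for real: an unquoted local[*] can be expanded by the shell if a matching file name exists in the current directory.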

2、 ./spark-shell --master spark://master:7077

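- Starts the shell against a Spark Standalone cluster, so executors run on the cluster's workers instead of in the local process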

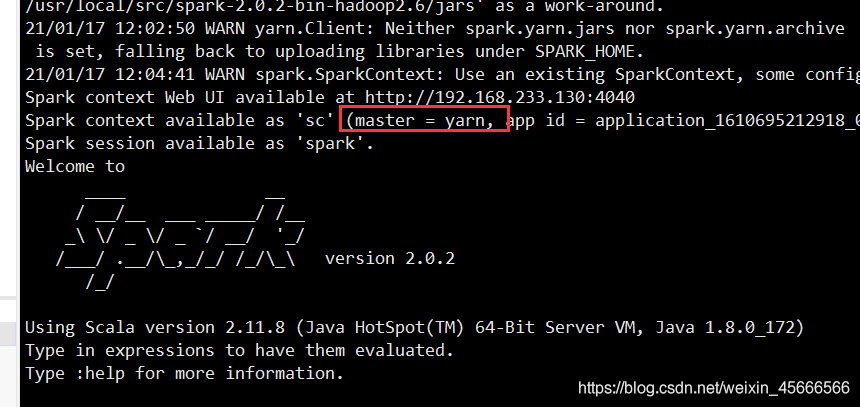

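3. ./spark-shell --master yarn-client

- Starts the shell in YARN client mode. Since Spark 2.0 the master name yarn-client is deprecated; the equivalent form is ./spark-shell --master yarn --deploy-mode client.

- ./spark-shell --master yarn-cluster does not start a shell: cluster deploy mode is not applicable to Spark shells, because the interactive driver has to run on the machine where the shell was launched. YARN cluster mode is available only through spark-submit.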
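
Spark 2.0 and later print a deprecation warning for the legacy master names yarn-client and yarn-cluster and recommend --master yarn together with an explicit --deploy-mode. The sketch below shows that mapping; normalize_master is a hypothetical helper written for this example, not a Spark command.

```shell
# Hypothetical helper: translate legacy YARN master names into the
# "--master yarn --deploy-mode ..." form; pass other masters through.
normalize_master() {
  case "$1" in
    yarn-client)  echo "--master yarn --deploy-mode client" ;;
    yarn-cluster) echo "--master yarn --deploy-mode cluster" ;;
    *)            echo "--master $1" ;;
  esac
}

normalize_master yarn-client
normalize_master spark://master:7077
```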

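1.3 Use cases

- spark-shell is used mainly for testing.

- It is therefore usually started with a plain ./spark-shell, which enters local mode for testing.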

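2. spark-submit

Once an application has been packaged, it can be launched with the bin/spark-submit script. The script sets up the classpath and dependencies that Spark uses, and it supports the different cluster managers and deploy modes.

2.1 Overview

spark-submit is mainly used to submit a compiled and packaged JAR to a cluster for execution. It closely resembles the hadoop jar command in Hadoop: hadoop jar submits a MapReduce job, while spark-submit submits a Spark application. The script sets the Spark classpath and the application's dependencies, and it can target any of the cluster managers and deploy modes that Spark supports. Unlike spark-shell, it has no REPL (interactive programming environment); the application's main class, JAR path, arguments, and so on must be specified before it runs.

1. spark-submit help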

./spark-submit --help

(py27) [root@master bin]# ./spark-submit --help

Usage: spark-submit [options] <app jar | python file> [app arguments]

Usage: spark-submit --kill [submission ID] --master [spark://...]

Usage: spark-submit --status [submission ID] --master [spark://...]

Usage: spark-submit run-example [options] example-class [example args]

Options:

--master MASTER_URL spark://host:port, mesos://host:port, yarn, or local.

--deploy-mode DEPLOY_MODE Whether to launch the driver program locally ("client") or

on one of the worker machines inside the cluster ("cluster")

(Default: client).

--class CLASS_NAME Your application's main class (for Java / Scala apps).

--name NAME A name of your application.

--jars JARS Comma-separated list of local jars to include on the driver

and executor classpaths.

--packages Comma-separated list of maven coordinates of jars to include

on the driver and executor classpaths. Will search the local

maven repo, then maven central and any additional remote

repositories given by --repositories. The format for the

coordinates should be groupId:artifactId:version.

--exclude-packages Comma-separated list of groupId:artifactId, to exclude while

resolving the dependencies provided in --packages to avoid

dependency conflicts.

--repositories Comma-separated list of additional remote repositories to

search for the maven coordinates given with --packages.

--py-files PY_FILES Comma-separated list of .zip, .egg, or .py files to place

on the PYTHONPATH for Python apps.

--files FILES Comma-separated list of files to be placed in the working

directory of each executor.

--conf PROP=VALUE Arbitrary Spark configuration property.

--properties-file FILE Path to a file from which to load extra properties. If not

specified, this will look for conf/spark-defaults.conf.

--driver-memory MEM Memory for driver (e.g. 1000M, 2G) (Default: 1024M).

--driver-java-options Extra Java options to pass to the driver.

--driver-library-path Extra library path entries to pass to the driver.

--driver-class-path Extra class path entries to pass to the driver. Note that

jars added with --jars are automatically included in the

classpath.

--executor-memory MEM Memory per executor (e.g. 1000M, 2G) (Default: 1G).

--proxy-user NAME User to impersonate when submitting the application.

This argument does not work with --principal / --keytab.

--help, -h Show this help message and exit.

--verbose, -v Print additional debug output.

--version, Print the version of current Spark.

Spark standalone with cluster deploy mode only:

--driver-cores NUM Cores for driver (Default: 1).

Spark standalone or Mesos with cluster deploy mode only:

--supervise If given, restarts the driver on failure.

--kill SUBMISSION_ID If given, kills the driver specified.

--status SUBMISSION_ID If given, requests the status of the driver specified.

Spark standalone and Mesos only:

--total-executor-cores NUM Total cores for all executors.

Spark standalone and YARN only:

--executor-cores NUM Number of cores per executor. (Default: 1 in YARN mode,

or all available cores on the worker in standalone mode)

YARN-only:

--driver-cores NUM Number of cores used by the driver, only in cluster mode

(Default: 1).

--queue QUEUE_NAME The YARN queue to submit to (Default: "default").

--num-executors NUM Number of executors to launch (Default: 2).

If dynamic allocation is enabled, the initial number of

executors will be at least NUM.

--archives ARCHIVES Comma separated list of archives to be extracted into the

working directory of each executor.

--principal PRINCIPAL Principal to be used to login to KDC, while running on

secure HDFS.

--keytab KEYTAB The full path to the file that contains the keytab for the

principal specified above. This keytab will be copied to

the node running the Application Master via the Secure

Distributed Cache, for renewing the login tickets and the

delegation tokens periodically.

- spark-submit source

(py27) [root@master bin]# cat spark-submit

if [ -z "${SPARK_HOME}" ]; then

export SPARK_HOME="$(cd "`dirname "$0"`"/..; pwd)"

fi

# disable randomized hash for string in Python 3.3+

export PYTHONHASHSEED=0

exec "${SPARK_HOME}"/bin/spark-class org.apache.spark.deploy.SparkSubmit "$@"

- spark-submit is fairly simple: it executes the spark-class script with org.apache.spark.deploy.SparkSubmit as the main class and passes along every argument it received.
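
The first lines of the script derive SPARK_HOME from the script's own location when the variable is not already set: take the directory that contains the script and step up one level. The sketch below reproduces that idea with the $(...) form of command substitution instead of backticks. The function name resolve_home and the temporary directory layout are assumptions made for this example.

```shell
# Hypothetical helper: given the path of a script inside <root>/bin,
# print <root>, the way bin/spark-submit derives SPARK_HOME.
resolve_home() {
  (cd "$(dirname "$1")"/.. && pwd)
}

# Demo with a throwaway layout created under a temporary directory.
demo_root="$(mktemp -d)"
mkdir -p "$demo_root/bin"
touch "$demo_root/bin/spark-submit"
resolve_home "$demo_root/bin/spark-submit"
rm -rf "$demo_root"
```

Running cd inside a subshell keeps the caller's working directory unchanged, which is also the effect of wrapping the cd in a command substitution in the original script.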

2.2 Basic syntax

- Example: submit a job to a Hadoop YARN cluster.

./bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master yarn \

--deploy-mode cluster \

--driver-memory 1g \

--executor-memory 1g \

--executor-cores 1 \

--queue thequeue \

examples/target/scala-2.11/jars/spark-examples*.jar 10

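Explanation of the options:

| Option | Description |
|---|---|
| --class | The application's main class; Java or Scala applications only |
| --master | The master URL, i.e. where the job is submitted to run, for example spark://host:port, mesos://host:port, yarn, or local |
| --deploy-mode | Whether to launch the driver locally (client) or inside the cluster (cluster); the default is client |
| --name | The application name, shown in the Spark web UI |
| --jars | Comma-separated list of local JARs; they are added to the classpath of the driver and the executors |
| --packages | Maven coordinates of JARs to include on the driver and executor classpaths |
| --exclude-packages | Packages (groupId:artifactId) to exclude while resolving --packages, to avoid dependency conflicts |
| --repositories | Additional remote repositories to search for the coordinates given with --packages |
| --conf PROP=VALUE | Sets a Spark configuration property, for example --conf spark.executor.extraJavaOptions="-XX:MaxPermSize=256m" |
| --properties-file | File from which to load extra properties; the default is conf/spark-defaults.conf |
| --driver-memory | Driver memory; the default is 1G (1024M) |
| --driver-java-options | Extra Java options to pass to the driver |
| --driver-library-path | Extra library path entries to pass to the driver |
| --driver-class-path | Extra class path entries to pass to the driver |
| --driver-cores | Number of driver cores; the default is 1. Applies only in cluster deploy mode on YARN or standalone |
| --executor-memory | Memory per executor; the default is 1G |
| --total-executor-cores | Total cores for all executors. Standalone and Mesos only |
| --num-executors | Number of executors to launch; the default is 2. YARN only |
| --executor-cores | Number of cores per executor. YARN and standalone only |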
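
To show how several of these options combine, the sketch below assembles a spark-submit command line for a standalone cluster and prints it instead of executing it, so it runs without a Spark installation. The helper name build_submit, the jar path examples/jars/spark-examples.jar, and the chosen resource values are placeholders for illustration; spark.ui.port is a standard Spark property, used here only as an example of --conf.

```shell
# Hypothetical dry-run helper: build_submit MASTER MAIN_CLASS APP_JAR [APP_ARGS...]
# prints the spark-submit command line it would run.
build_submit() {
  master="$1"; main_class="$2"; app_jar="$3"
  shift 3
  # Options must come before the application JAR; anything after the JAR
  # is passed to the application's main method.
  set -- ./bin/spark-submit \
    --class "$main_class" \
    --master "$master" \
    --deploy-mode client \
    --executor-memory 1g \
    --total-executor-cores 2 \
    --conf spark.ui.port=4041 \
    "$app_jar" "$@"
  echo "$*"
}

build_submit spark://master:7077 org.apache.spark.examples.SparkPi examples/jars/spark-examples.jar 10
```

The ordering is the point of the example: spark-submit treats everything after the JAR path as application arguments, so an option placed after the JAR is not interpreted by Spark.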

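3. Comparing spark-shell and spark-submit

1. Similarity: both scripts live in the bin directory of the Spark installation (here /usr/local/src/spark-2.0.2-bin-hadoop2.6/bin).

2. Differences:

(1) spark-shell is interactive: it provides a REPL on the command line in which developers can write and run code directly. At run time it calls the spark-submit script underneath.

(2) spark-submit is not interactive: it submits a JAR that was compiled and packaged beforehand, for example in an IDE such as IDEA, to the cluster and runs it.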