YOLOv5 v4.0 has been out for a while now. The release notes describe the main changes as follows:

nn.SiLU() activations replace nn.LeakyReLU(0.1) and nn.Hardswish() activations throughout the model, simplifying the architecture as we now only have one single activation function used everywhere rather than the two types before.
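To make the three activations named above concrete, here is a minimal pure-Python sketch of their scalar formulas. It only illustrates the math and is not the PyTorch implementation; the function names are my own, and the 0.1 slope is the one quoted in the release notes.

```python
import math


def silu(x: float) -> float:
    """SiLU (also known as swish): x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def hardswish(x: float) -> float:
    """Hardswish: x * ReLU6(x + 3) / 6, a piecewise approximation of SiLU."""
    return x * min(max(x + 3.0, 0.0), 6.0) / 6.0


def leaky_relu(x: float, negative_slope: float = 0.1) -> float:
    """LeakyReLU: identity for x >= 0, a small linear slope otherwise."""
    return x if x >= 0.0 else negative_slope * x


if __name__ == "__main__":
    # Print the three activations side by side for a few sample inputs.
    for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
        print(f"x={x:+.1f}  silu={silu(x):+.4f}  "
              f"hardswish={hardswish(x):+.4f}  leaky_relu={leaky_relu(x):+.4f}")
```

SiLU is smooth everywhere, while Hardswish replaces the sigmoid with a clipped linear ramp, which is cheaper to evaluate.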

In general the changes result in smaller models (89.0M params -> 87.7M YOLOv5x), faster inference times (6.9ms -> 6.0ms), and improved mAP (49.2 -> 50.1) for all models except YOLOv5s, which reduced mAP slightly (37.0 -> 36.8). In general the largest models benefit the most from this update. YOLOv5x in particular is now above 50.0 mAP at --img-size 640, which may be the first time this is possible at 640 resolution for any architecture I’m aware of (correct me if I’m wrong though).

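In short, SiLU replaces LeakyReLU and Hardswish, which simplifies the network structure. The checkpoint I ended up with was still large, though: after training on 26,771 samples across 15 classes for 109 epochs, the resulting model file was 667.2 MB, which is clearly on the heavy side.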
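As a rough sanity check on file sizes, the raw weight storage can be estimated from the parameter count. The sketch below assumes the 87.7M parameter figure that the release notes quote for YOLOv5x; the post does not say which model variant was trained, so treat that as an assumption. A training checkpoint typically holds more than the bare weights, so its file can be noticeably larger than this estimate.

```python
def weights_size_mib(num_params: float, bytes_per_param: int) -> float:
    """Size of the raw weights in MiB (1 MiB = 1024**2 bytes)."""
    return num_params * bytes_per_param / 1024 ** 2


# Assumption: parameter count quoted in the release notes for YOLOv5x (v4.0).
YOLOV5X_PARAMS = 87.7e6

fp32_mib = weights_size_mib(YOLOV5X_PARAMS, 4)  # 4 bytes per FP32 value
fp16_mib = weights_size_mib(YOLOV5X_PARAMS, 2)  # 2 bytes per FP16 value

if __name__ == "__main__":
    print(f"FP32: {fp32_mib:.1f} MiB, FP16: {fp16_mib:.1f} MiB")
```

Under that assumption the weights alone come to roughly 335 MiB in FP32 and half of that in FP16.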

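So the model needs to be compressed. The script below defines the Conv block with the same activation function as the network, casts the weights to half precision, and exports an FP16 model: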

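# Run this script from the root of the YOLOv5 repository: torch.load unpickles
# the whole model object and needs the repo's `models` package to be importable.
import os

import torch
import torch.nn as nn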

def autopad(k, p=None):
    # Pad to 'same'
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
    return p

class Conv(nn.Module):
    # Standard convolution: Conv2d + BatchNorm2d + activation
    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True):
        super(Conv, self).__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p),
                              groups=g, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = nn.SiLU() if act else nn.Identity()

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))

    def fuseforward(self, x):
        # Used after Conv and BatchNorm have been fused into a single conv
        return self.act(self.conv(x))

class Ensemble(nn.ModuleList):
    # Ensemble of models
    def __init__(self):
        super(Ensemble, self).__init__()

    def forward(self, x, augment=False):
        y = []
        for module in self:
            y.append(module(x, augment)[0])
        y = torch.cat(y, 1)  # concatenate the outputs of all models
        return y, None

def attempt_load(weights, map_location=None):
    model = Ensemble()
    for w in weights if isinstance(weights, list) else [weights]:
        # attempt_download(w)
        model.append(torch.load(w, map_location=map_location)['model'].float().fuse().eval())  # load FP32 model

    for m in model.modules():
        if type(m) in [nn.Hardswish, nn.LeakyReLU, nn.ReLU, nn.ReLU6]:
            m.inplace = True  # pytorch 1.7.0 compatibility
        elif type(m) is Conv:
            m._non_persistent_buffers_set = set()  # pytorch 1.6.0 compatibility

    if len(model) == 1:
        return model[-1]  # return model
    else:
        print('Ensemble created with %s\n' % weights)
        for k in ['names', 'stride']:
            setattr(model, k, getattr(model[-1], k))
        return model  # return ensemble

def select_device(device='', batch_size=None):
    # device = 'cpu' or '0' or '0,1,2,3'
    cpu_request = device.lower() == 'cpu'
    if device and not cpu_request:  # if device requested other than 'cpu'
        os.environ['CUDA_VISIBLE_DEVICES'] = device  # set environment variable
        assert torch.cuda.is_available(), \
            'CUDA unavailable, invalid device %s requested' % device  # check availability

    cuda = False if cpu_request else torch.cuda.is_available()
    if cuda:
        c = 1024 ** 2  # bytes to MB
        ng = torch.cuda.device_count()
        if ng > 1 and batch_size:  # check that batch_size is compatible with device_count
            assert batch_size % ng == 0, 'batch-size %g not multiple of GPU count %g' % (
                batch_size, ng)
        x = [torch.cuda.get_device_properties(i) for i in range(ng)]
        s = f'Using torch {torch.__version__} '
        for i in range(0, ng):
            if i == 1:
                s = ' ' * len(s)  # align the lines for the remaining GPUs
            print('%sCUDA:%g (%s, %dMB)' % (s, i, x[i].name, x[i].total_memory / c))
    else:
        print(f'Using torch {torch.__version__} CPU')

    return torch.device('cuda:0' if cuda else 'cpu')

if __name__ == '__main__':
    import argparse

    parser = argparse.ArgumentParser()
    parser.add_argument('--in_weights', type=str,
                        default='./last.pt', help='initial weights path')
    parser.add_argument('--out_weights', type=str,
                        default='quantification.pt', help='output weights path')
    parser.add_argument('--device', type=str, default='0', help='device')
    opt = parser.parse_args()

    device = select_device(opt.device)
    model = attempt_load(opt.in_weights, map_location=device)
    model.to(device).eval()
    model.half()  # cast weights to FP16

    # Saves the model object itself, not a dict with a 'model' key
    torch.save(model, opt.out_weights)
    print('done.')

    # os.path.getsize returns bytes, so convert to KB before printing
    print('-[INFO] before: {:.1f} KB, after: {:.1f} KB'.format(
        os.path.getsize(opt.in_weights) / 1024,
        os.path.getsize(opt.out_weights) / 1024))
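To see what the FP16 cast does to an individual weight, the following standard-library sketch rounds a few values to IEEE 754 half precision and back. It illustrates the number format only and does not use PyTorch; the helper name `to_fp16_and_back` and the sample values are made up for this example.

```python
import struct


def to_fp16_and_back(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


FP32_BYTES = struct.calcsize("<f")  # storage for one single-precision value
FP16_BYTES = struct.calcsize("<e")  # storage for one half-precision value

if __name__ == "__main__":
    print(f"bytes per value: FP32={FP32_BYTES}, FP16={FP16_BYTES}")
    for w in (0.1, 1.0, 3.14159, 1e-3):
        h = to_fp16_and_back(w)
        print(f"{w!r:>10} -> {h!r:<24} abs error {abs(w - h):.2e}")
```

Each value takes half the storage, at the cost of keeping only about three significant decimal digits, which is why accuracy should be re-checked after the export.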

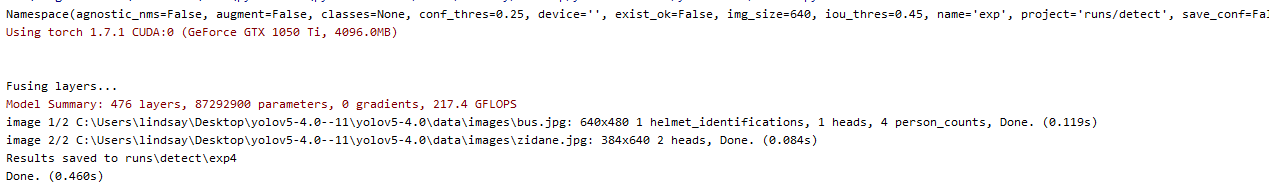

With v4.0 and self.act = nn.SiLU(), the exported model shrinks from 667.2 MB to 166 MB after compression. Loading it with the official detect.py gives an inference time of 0.460 s.

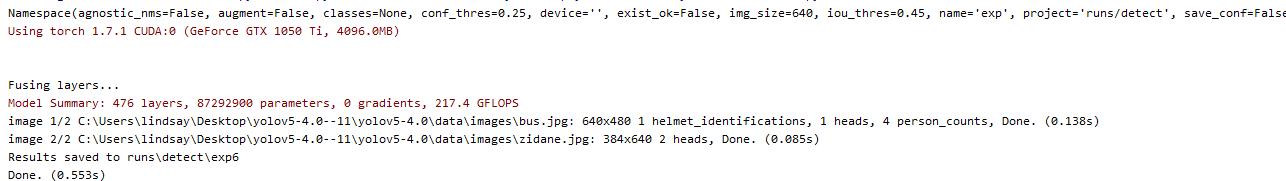

Inference with the original, uncompressed model takes 0.553 s.

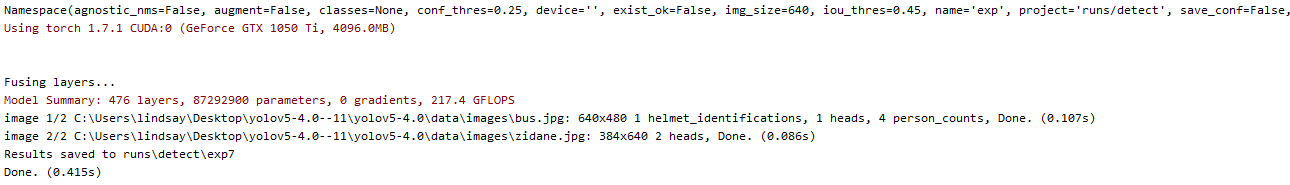

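With v4.0 and self.act = nn.Hardswish(), the exported model likewise shrinks from 667.2 MB to 166 MB after compression. Loading it with the official detect.py gives an inference time of 0.415 s.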
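The figures reported above can be turned into ratios with a few lines of arithmetic. This is only a sketch over the numbers quoted in this post; each timing is a single measurement, so the ratios are indicative rather than a benchmark.

```python
# Figures reported in this post.
SIZE_BEFORE_MB = 667.2       # original checkpoint
SIZE_AFTER_MB = 166.0        # exported FP16 model
T_ORIGINAL_S = 0.553         # original model
T_FP16_SILU_S = 0.460        # FP16 export, nn.SiLU()
T_FP16_HARDSWISH_S = 0.415   # FP16 export, nn.Hardswish()


def ratio(before: float, after: float) -> float:
    """How many times smaller or faster `after` is compared with `before`."""
    return before / after


size_reduction = ratio(SIZE_BEFORE_MB, SIZE_AFTER_MB)
speedup_silu = ratio(T_ORIGINAL_S, T_FP16_SILU_S)
speedup_hardswish = ratio(T_ORIGINAL_S, T_FP16_HARDSWISH_S)

if __name__ == "__main__":
    print(f"file size reduction: {size_reduction:.2f}x")
    print(f"speedup with SiLU: {speedup_silu:.2f}x")
    print(f"speedup with Hardswish: {speedup_hardswish:.2f}x")
```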

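Notes:

The exported file stores the model object itself rather than a checkpoint dict, so the ['model'] lookup in attempt_load no longer applies. Modify experimental.py in the project's models folder as follows, otherwise loading the exported model raises an error: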

def attempt_load(weights, map_location=None):
    # Loads an ensemble of models weights=[a,b,c] or a single model weights=[a] or weights=a
    model = Ensemble()
    for w in weights if isinstance(weights, list) else [weights]:
        attempt_download(w)
        model.append(torch.load(w, map_location=map_location).float().fuse().eval())
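One possible alternative to hard-coding either layout is a small helper that accepts both the training checkpoint (a dict with a 'model' key) and the exported file (the model object itself). The name `unwrap_checkpoint` is hypothetical and not part of the YOLOv5 code base; this is a sketch of the idea, not a tested patch against the repository.

```python
def unwrap_checkpoint(ckpt):
    """Return the model object from either checkpoint layout.

    - training checkpoint: a dict that holds the model under the 'model' key
    - exported FP16 file: the model object itself
    """
    if isinstance(ckpt, dict) and "model" in ckpt:
        return ckpt["model"]
    return ckpt


# Hypothetical use inside attempt_load (requires torch and the YOLOv5 repo):
# model.append(unwrap_checkpoint(torch.load(w, map_location=map_location))
#              .float().fuse().eval())
```

With such a helper the same attempt_load could load both the original last.pt and the exported model.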