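TensorRT is an excellent GPU inference engine, and it supports a wide range of deep learning frameworks and operators.

Here we keep using the same model file as before, FashionMNIST.onnx.

Converting ONNX to a TRT file

A .trt file is the serialized engine that TensorRT uses for inference, so a model produced by any other machine learning framework has to be converted first.

The conversion looks like this: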

    import os

    import tensorrt as trt

    TRT_LOGGER = trt.Logger()
    model_path = 'FashionMNIST.onnx'
    engine_file_path = "FashionMNIST.trt"

    # Bitmask that requests an explicit-batch network (required by the ONNX parser).
    EXPLICIT_BATCH = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)

    # Note: max_workspace_size, max_batch_size and build_cuda_engine are
    # TensorRT 7.x builder APIs.
    with trt.Builder(TRT_LOGGER) as builder, \
            builder.create_network(EXPLICIT_BATCH) as network, \
            trt.OnnxParser(network, TRT_LOGGER) as parser:
        builder.max_workspace_size = 1 << 28  # 256 MiB of scratch memory
        builder.max_batch_size = 1

        if not os.path.exists(model_path):
            print('ONNX file {} not found.'.format(model_path))
            exit(1)

        print('Loading ONNX file from path {}...'.format(model_path))
        with open(model_path, 'rb') as model:
            print('Beginning ONNX file parsing')
            if not parser.parse(model.read()):
                print('ERROR: Failed to parse the ONNX file.')
                for error in range(parser.num_errors):
                    print(parser.get_error(error))
                exit(1)  # do not build an engine from a failed parse

        # Pin the input to a single 1x28x28 grayscale image.
        network.get_input(0).shape = [1, 1, 28, 28]
        print('Completed parsing of ONNX file')

        engine = builder.build_cuda_engine(network)
        with open(engine_file_path, "wb") as f:
            f.write(engine.serialize())

The output is as follows:

![]()
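
One caveat about the conversion script: `builder.max_workspace_size`, `builder.max_batch_size` and `builder.build_cuda_engine` come from the TensorRT 7 Python API and, to my knowledge, are no longer available in TensorRT 8 and later. Below is a rough sketch of the same conversion using a builder config instead. It assumes TensorRT 8.4 or newer (for `set_memory_pool_limit`), and the function name `build_engine_with_config` is made up for this example, so treat it as a starting point rather than a drop-in replacement.

```python
import os


def build_engine_with_config(onnx_path, engine_path, workspace_bytes=1 << 28):
    """Sketch: ONNX -> serialized engine via a builder config (assumes TensorRT 8.4+)."""
    if not os.path.exists(onnx_path):
        raise FileNotFoundError(onnx_path)

    # Imported lazily so this file can be loaded on a machine without TensorRT.
    import tensorrt as trt

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)
    flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    network = builder.create_network(flags)
    parser = trt.OnnxParser(network, logger)

    with open(onnx_path, "rb") as model:
        if not parser.parse(model.read()):
            errors = [str(parser.get_error(i)) for i in range(parser.num_errors)]
            raise RuntimeError("ONNX parse failed:\n" + "\n".join(errors))

    # The workspace limit now lives on the builder config, not on the builder.
    config = builder.create_builder_config()
    config.set_memory_pool_limit(trt.MemoryPoolType.WORKSPACE, workspace_bytes)

    # Returns the serialized engine directly, or None if the build failed.
    serialized = builder.build_serialized_network(network, config)
    if serialized is None:
        raise RuntimeError("engine build failed")

    with open(engine_path, "wb") as f:
        f.write(serialized)
    return engine_path
```

Calling `build_engine_with_config('FashionMNIST.onnx', 'FashionMNIST.trt')` is meant to play the same role as the script above, though I have not checked it against every TensorRT release.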

Next, we use this .trt file to run inference.
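
The input preparation is the part of the inference script that is easiest to get wrong, so here is a small sketch of it that runs without a GPU. It reshapes a 28x28 grayscale image to the `[1, 1, 28, 28]` layout the engine expects and copies it into a flat buffer. The helper name `to_nchw_float32`, the synthetic image, and the plain NumPy array standing in for the buffer from `cuda.pagelocked_empty` are all assumptions made for illustration.

```python
import numpy as np


def to_nchw_float32(gray_image):
    """Turn an (H, W) grayscale image into a (1, 1, H, W) float32 tensor."""
    if gray_image.ndim != 2:
        raise ValueError("expected a 2-D grayscale image, got shape %r"
                         % (gray_image.shape,))
    return gray_image[np.newaxis, np.newaxis, :, :].astype(np.float32)


# Synthetic stand-in for the 28x28 result of cv2.resize.
fake_image = (np.arange(28 * 28) % 256).astype(np.uint8).reshape(28, 28)
tensor = to_nchw_float32(fake_image)

# Stand-in for the flat host buffer; the real one is page-locked memory.
host_buffer = np.empty(tensor.size, dtype=np.float32)

# Copy into the existing buffer instead of rebinding the variable,
# so the buffer object itself (and its memory) stays the same.
np.copyto(host_buffer, tensor.ravel())

print(tensor.shape, host_buffer.shape)  # (1, 1, 28, 28) (784,)
```

The full inference script follows the same steps, with real CUDA buffers in place of the NumPy stand-in.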

import pycuda.driver as cuda

import pycuda.autoinit

import cv2

import numpy as np

import os

import tensorrt as trt

TRT_LOGGER = trt.Logger()

model_path='FashionMNIST.onnx'

engine_file_path = "FashionMNIST.trt"

class HostDeviceMem(object):

def __init__(self, host_mem, device_mem):

self.host = host_mem

self.device = device_mem

def __str__(self):

return "Host:\n" + str(self.host) + "\nDevice:\n" + str(self.device)

def __repr__(self):

return self.__str__()

def allocate_buffers(engine):

inputs = []

outputs = []

bindings = []

stream = cuda.Stream()

for binding in engine:

size = trt.volume(engine.get_binding_shape(binding)) * engine.max_batch_size

dtype = trt.nptype(engine.get_binding_dtype(binding))

# Allocate host and device buffers

host_mem = cuda.pagelocked_empty(size, dtype)

device_mem = cuda.mem_alloc(host_mem.nbytes)

# Append the device buffer to device bindings.

bindings.append(int(device_mem))

# Append to the appropriate list.

if engine.binding_is_input(binding):

inputs.append(HostDeviceMem(host_mem, device_mem))

else:

outputs.append(HostDeviceMem(host_mem, device_mem))

return inputs, outputs, bindings, stream

def do_inference_v2(context, bindings, inputs, outputs, stream):

# Transfer input data to the GPU.

[cuda.memcpy_htod_async(inp.device, inp.host, stream) for inp in inputs]

# Run inference.

context.execute_async_v2(bindings=bindings, stream_handle=stream.handle)

# Transfer predictions back from the GPU.

[cuda.memcpy_dtoh_async(out.host, out.device, stream) for out in outputs]

# Synchronize the stream

stream.synchronize()

# Return only the host outputs.

return [out.host for out in outputs]

with open(engine_file_path, "rb") as f, trt.Runtime(TRT_LOGGER) as runtime,\

runtime.deserialize_cuda_engine(f.read()) as engine, engine.create_execution_context() as context:

inputs, outputs, bindings, stream = allocate_buffers(engine)

image = cv2.imread('123.jpg',cv2.IMREAD_GRAYSCALE)

image=cv2.resize(image,(28,28))

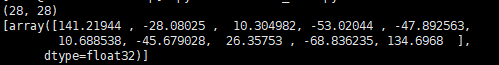

print(image.shape)

image=image[np.newaxis,np.newaxis,:,:].astype(np.float32)

inputs[0].host = image

#开始推理

trt_outputs =do_inference_v2(context, bindings=bindings, \

inputs=inputs, outputs=outputs, stream=stream)

print(trt_outputs)

Output:


The result matches the one from the referenced article, which wraps up the Python version.
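
The printed `trt_outputs` is just a list of raw arrays, so as a small follow-up here is one way to turn such an output into a readable label. This is only a sketch: it assumes the model emits 10 scores in the standard Fashion-MNIST label order, the helper names `softmax` and `top_class` are my own, and the example scores are made up rather than taken from a real run.

```python
import math

# Standard Fashion-MNIST label order (index 0 to 9).
FASHION_MNIST_CLASSES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def softmax(scores):
    """Numerically stable softmax over a sequence of raw scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_class(scores):
    """Return (label, probability) for the highest-scoring class."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return FASHION_MNIST_CLASSES[best], probs[best]


# Made-up scores for illustration only; not the output of a real run.
example_scores = [0.1, -1.2, 0.3, 0.0, 0.2, -0.5, 0.4, 5.0, -0.3, 1.1]
label, prob = top_class(example_scores)
print(label)  # Sneaker
```

With the inference script above, the scores would come from `trt_outputs[0]`. If the exported model already ends in a softmax layer, the extra softmax here changes the probabilities but not which class wins.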