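Volumes can be grouped into three categories by usage:

(1) Volume: local and network data volumes

(2) PersistentVolume: persistent data volumes

(3) PersistentVolume with dynamic provisioning

Note: a Volume in Kubernetes gives containers the ability to mount external storage.

Note: a Pod can use a Volume only after it declares both the volume source (spec.volumes) and the mount point (spec.containers.volumeMounts).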
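The two fields named above can be looked up directly from the API documentation built into kubectl. The following is a minimal sketch, assuming kubectl is already configured for a cluster; the function name explain_volume_fields is hypothetical.

```shell
# Print the built-in API documentation for the two fields a Pod needs
# in order to use a volume. Assumes kubectl can reach a cluster.
explain_volume_fields() {
  kubectl explain pod.spec.volumes                  # where the volume comes from
  kubectl explain pod.spec.containers.volumeMounts  # where it is mounted in the container
}
```

Calling `explain_volume_fields` prints the description of each field in turn.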

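1 k8s volume: local storage and network storage

Local and network data volumes among the volume types:

(1) Local data volumes: emptyDir, hostPath.

(2) Network data volumes: NFS.

1.1 emptyDir (empty directory)

Creates an empty volume and mounts it into the containers of a Pod. When the Pod is deleted, the volume is deleted with it. Use case: sharing data between containers running in the same Pod.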

#docker pull busybox

#docker pull centos

#docker pull library/bash:4.4.23

File empty.yaml

Create two containers, one that writes and one that reads, to test whether the data is shared.

apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: write
    image: library/bash:4.4.23
    imagePullPolicy: IfNotPresent
    command: ["sh","-c","while true; do echo 'hello' >> /data/hello.txt; sleep 2; done;"]
    volumeMounts:
    # Mount the volume named data at /data in the container
    - name: data
      mountPath: /data
  - name: read
    image: centos
    imagePullPolicy: IfNotPresent
    command: ["bash","-c","tail -f /data/hello.txt"]
    volumeMounts:
    # Mount the volume named data at /data in the container
    - name: data
      mountPath: /data
  # Define the volume source
  volumes:
  # Name of the volume
  - name: data
    emptyDir: {}

#kubectl apply -f empty.yaml

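If a container started from the given image has no long-running process, it exits as soon as it starts and is then restarted over and over. The looping command keeps the write container alive: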

command: ["sh","-c","while true; do echo 'hello' >> /data/hello.txt; sleep 2; done;"]

#kubectl logs my-pod -c read

A line is written every two seconds, so the log keeps printing new data.
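To confirm that both containers really see the same emptyDir volume, the file can be read from each container in turn. This is a minimal sketch, assuming the Pod my-pod from empty.yaml is Running in the current namespace; the function name show_shared_file is hypothetical.

```shell
# Print the last lines of /data/hello.txt as seen from each container.
# Assumes kubectl is configured and the Pod has containers "write" and "read".
show_shared_file() {
  pod="${1:-my-pod}"
  for c in write read; do
    echo "== container: $c =="
    kubectl exec "$pod" -c "$c" -- tail -n 3 /data/hello.txt
  done
}
```

Running `show_shared_file my-pod` should print the same content for both containers, since they mount the same volume.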

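1.2 hostPath (local mount)

Mounts a file or directory from the node's file system into the containers of a Pod. Use case: containers in the Pod need to access files on the host.

File hostpath.yaml (it reuses the Pod name my-pod, so delete the earlier Pod first if it still exists)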

apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: busybox
    image: busybox
    imagePullPolicy: IfNotPresent
    args:
    - /bin/sh
    - -c
    - sleep 36000
    # Mount point
    volumeMounts:
    # Mount the volume named data at /data in the container
    - name: data
      mountPath: /data
  volumes:
  - name: data
    # Volume source: the /tmp directory on the host
    hostPath:
      path: /tmp
      type: Directory

#kubectl apply -f hostpath.yaml

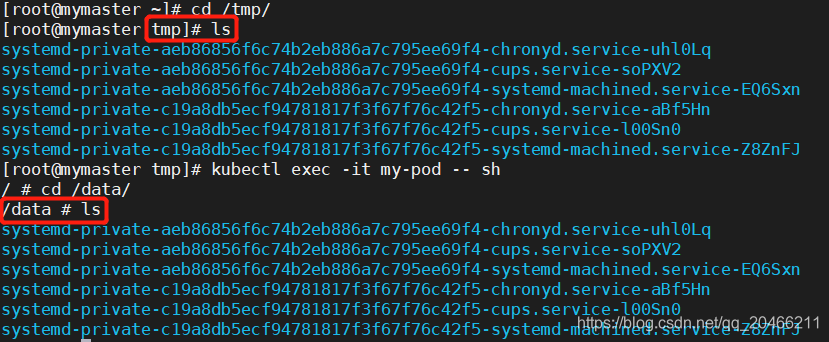

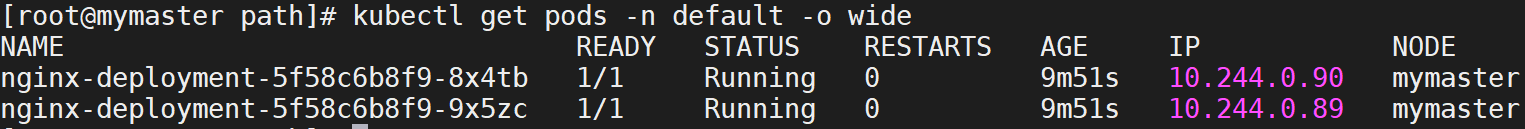

Check which node the Pod was scheduled to

#kubectl get pods -n default -o wide

The data under /tmp on the host shows up in the Pod's /data directory.
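The comparison can be reproduced from the command line. This is a minimal sketch, assuming the Pod my-pod from hostpath.yaml is Running; the function name list_pod_data is hypothetical.

```shell
# Show where the Pod runs and list /data inside it.
# Compare the listing with `ls -l /tmp` run on the node shown in the NODE column.
list_pod_data() {
  pod="${1:-my-pod}"
  kubectl get pod "$pod" -o wide      # the NODE column shows the host
  kubectl exec "$pod" -- ls -l /data  # should match /tmp on that host
}
```

Because hostPath points at the node the Pod happens to run on, the listing only matches /tmp on that specific node.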


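1.3 NFS (network volume for shared file storage)

192.168.0.165 mymaster

192.168.0.163 myworker

(1) Install NFS (every machine that uses the NFS service needs it installed):

#yum install -y nfs-utils #install the NFS service

#yum install -y rpcbind #install the RPC service

#Note: start the rpc service first and then the nfs service, on every machine.

#systemctl start rpcbind #start the RPC service first

#systemctl enable rpcbind #start on boot

#systemctl start nfs-server #start the NFS service

#systemctl enable nfs-server #start on boot

(2) Configure mymaster as the server and export the /some/path directory with read-write permission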

#cat /etc/exports

/some/path 192.168.0.0/24(rw,no_root_squash)
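After /etc/exports is edited, the export table usually has to be reloaded before clients can see the share. This is a minimal sketch, assuming it runs as root on the NFS server (mymaster in this walkthrough); the function name reload_exports is hypothetical.

```shell
# Create the exported directory if needed, then reload and show the exports.
reload_exports() {
  dir="${1:-/some/path}"
  mkdir -p "$dir"          # the exported directory must exist
  exportfs -ra             # re-read /etc/exports and re-export everything
  exportfs -v              # list the active exports with their options
  showmount -e localhost   # the export list as clients will see it
}
```

Running `reload_exports /some/path` on the server should end with /some/path listed for 192.168.0.0/24.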

#docker pull nginx

On the client side there is no need to run mount by hand, because k8s mounts the share automatically.

(3) File nfs.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
        # Mount the volume named wwwroot at nginx's html directory in the container
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      # Define a volume named wwwroot of type nfs
      volumes:
      - name: wwwroot
        nfs:
          server: mymaster
          path: /some/path

#kubectl apply -f nfs.yaml

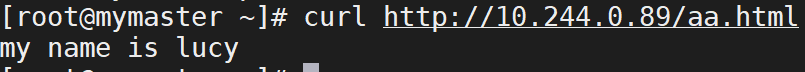

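#kubectl exec -it nginx-deployment-5f58c6b8f9-8x4tb -- sh

Check whether the shared directory has content (/some/path on the NFS server is mounted at /usr/share/nginx/html inside the container)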

#cat /some/path/aa.html

my name is lucy
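Since both replicas mount the same NFS export, the file should be readable through every nginx Pod. This is a minimal sketch, assuming the Deployment from nfs.yaml is running and aa.html exists in /some/path on the server; the function name check_nfs_pods is hypothetical.

```shell
# Read aa.html through every Pod labelled app=nginx.
# Inside the container the NFS export appears under /usr/share/nginx/html.
check_nfs_pods() {
  for p in $(kubectl get pods -l app=nginx -o name); do
    echo "== $p =="
    kubectl exec "$p" -- cat /usr/share/nginx/html/aa.html
  done
}
```

Each Pod should print the same content that was written on the NFS server.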

2 Installing PV and PVC for local storage

Image: rancher/local-path-provisioner:v0.0.11

#docker load -i local-path-provisioner.tar

File local-path-storage.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: local-path-storage
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-path-provisioner-service-account
  namespace: local-path-storage
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-path-provisioner-role
rules:
- apiGroups: [""]
  resources: ["nodes", "persistentvolumeclaims"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["endpoints", "persistentvolumes", "pods"]
  verbs: ["*"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "patch"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-path-provisioner-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: local-path-provisioner-role
subjects:
- kind: ServiceAccount
  name: local-path-provisioner-service-account
  namespace: local-path-storage

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-path-provisioner
  namespace: local-path-storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: local-path-provisioner
  template:
    metadata:
      labels:
        app: local-path-provisioner
    spec:
      serviceAccountName: local-path-provisioner-service-account
      containers:
      - name: local-path-provisioner
        image: rancher/local-path-provisioner:v0.0.11
        imagePullPolicy: IfNotPresent
        command:
        - local-path-provisioner
        - --debug
        - start
        - --config
        - /etc/config/config.json
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config/
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      volumes:
      - name: config-volume
        configMap:
          name: local-path-config

---

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
  annotations: # mark this as the default StorageClass
    storageclass.beta.kubernetes.io/is-default-class: "true"
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: local-path-config
  namespace: local-path-storage
data:
  config.json: |-
    {
      "nodePathMap":[
        {
          "node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",
          "paths":["/opt/local-path-provisioner"]
        }
      ]
    }

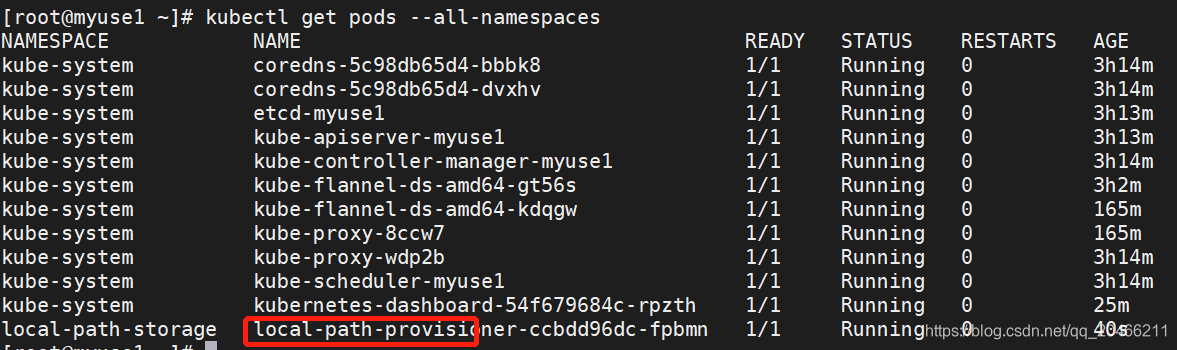

#kubectl apply -f local-path-storage.yaml
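Before creating any claims, it can help to confirm that the provisioner Pod actually came up. This is a minimal sketch, assuming the manifest above was applied unchanged; the function name wait_for_provisioner and the 120s timeout are illustrative choices.

```shell
# Wait for the provisioner Deployment to finish rolling out, then list its Pod.
wait_for_provisioner() {
  ns="local-path-storage"
  kubectl -n "$ns" rollout status deployment/local-path-provisioner --timeout=120s
  kubectl -n "$ns" get pods -l app=local-path-provisioner -o wide
}
```

If the rollout does not complete, `kubectl -n local-path-storage logs -l app=local-path-provisioner` is a reasonable next place to look.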

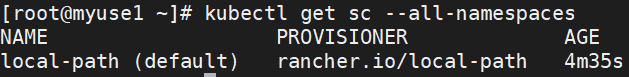

View the default StorageClass
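The command for this step is not shown in the text; `kubectl get storageclass` is one common way to list the classes, with the default one marked "(default)". To exercise the class, a claim and a consuming Pod are needed, because the class uses WaitForFirstConsumer and a claim stays Pending until a Pod uses it. The sketch below writes such a pair to a file; the names demo-pvc, demo-pvc-pod and pvc-demo.yaml and the 1Gi size are hypothetical.

```shell
# Write a demo PVC plus a Pod that consumes it (all names are illustrative).
cat > pvc-demo.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: demo-pvc-pod
spec:
  containers:
    - name: busybox
      image: busybox
      imagePullPolicy: IfNotPresent
      command: ["sh", "-c", "echo hello > /data/hello.txt; sleep 36000"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: demo-pvc
EOF

# Then, against the cluster:
#   kubectl get storageclass        # the default class is marked "(default)"
#   kubectl apply -f pvc-demo.yaml
#   kubectl get pvc,pv              # demo-pvc should become Bound once the Pod is scheduled
```

With the configuration above, the provisioned directory would be created under /opt/local-path-provisioner on the node that runs the Pod.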
