1. Download Pinpoint

https://github.com/pinpoint-apm/pinpoint

2. Run the HBase script

Initialize the tables (hbase-create.hbase)

D:\hbase-2.3.3\hbase-2.3.3\bin>hbase shell D:/pinpoint-2.1.1/pinpoint-2.1.1/hbase/scripts/hbase-create.hbase

Created table AgentInfo

Took 3.6380 seconds

Created table AgentStatV2

Took 4.4220 seconds

Created table ApplicationStatAggre

Took 2.1830 seconds

Created table ApplicationIndex

Took 0.6380 seconds

Created table AgentLifeCycle

Took 0.6380 seconds

Created table AgentEvent

Took 0.6400 seconds

Created table StringMetaData

Took 0.6450 seconds

Created table ApiMetaData

Took 0.6330 seconds

Created table SqlMetaData_Ver2

Took 0.6350 seconds

Created table TraceV2

Took 4.1810 seconds

Created table ApplicationTraceIndex

Took 0.6360 seconds

Created table ApplicationMapStatisticsCaller_Ver2

Took 0.6350 seconds

Created table ApplicationMapStatisticsCallee_Ver2

Took 1.1680 seconds

Created table ApplicationMapStatisticsSelf_Ver2

Took 1.2470 seconds

Created table HostApplicationMap_Ver2

Took 0.6530 seconds

TABLE

AgentEvent

AgentInfo

AgentLifeCycle

AgentStatV2

ApiMetaData

ApplicationIndex

ApplicationMapStatisticsCallee_Ver2

ApplicationMapStatisticsCaller_Ver2

ApplicationMapStatisticsSelf_Ver2

ApplicationStatAggre

ApplicationTraceIndex

HostApplicationMap_Ver2

SqlMetaData_Ver2

StringMetaData

TraceV2

15 row(s)

Took 0.1600 seconds
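To confirm that the script created every table, the shell output can be checked mechanically instead of by eye. The following is a minimal sketch, assuming the output has been saved to a text file; the function name `missing_tables` is made up for this example, and the expected set is simply the 15 tables listed above.

```python
# The 15 tables reported by hbase-create.hbase in the output above.
EXPECTED_TABLES = {
    "AgentEvent", "AgentInfo", "AgentLifeCycle", "AgentStatV2", "ApiMetaData",
    "ApplicationIndex", "ApplicationMapStatisticsCallee_Ver2",
    "ApplicationMapStatisticsCaller_Ver2", "ApplicationMapStatisticsSelf_Ver2",
    "ApplicationStatAggre", "ApplicationTraceIndex", "HostApplicationMap_Ver2",
    "SqlMetaData_Ver2", "StringMetaData", "TraceV2",
}


def missing_tables(shell_output: str) -> set:
    """Return the expected tables that have no 'Created table <name>' line."""
    prefix = "Created table "
    created = set()
    for line in shell_output.splitlines():
        line = line.strip()
        if line.startswith(prefix):
            created.add(line[len(prefix):])
    return EXPECTED_TABLES - created
```

An empty result means all tables were reported as created; anything else names the tables to look into.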

D:\hbase-2.3.3\hbase-2.3.3\bin>

3. Pinpoint Collector

java -jar -Dpinpoint.zookeeper.address=localhost pinpoint-collector-boot-2.1.1.jar

The address is that of the ZooKeeper instance used by HBase: IP 127.0.0.1, default port 2181.

D:\pinpoint>java -jar -Dpinpoint.zookeeper.address=127.0.0.1 pinpoint-collector-boot-2.2.0.jar

01-06 14:11:33.711 INFO ProfileApplicationListener : onApplicationEvent-ApplicationEnvironmentPreparedEvent

01-06 14:11:33.712 INFO ProfileApplicationListener : spring.profiles.active:[release]

Tomcat started on port(s): 8081 (http) with context path ''

01-06 14:13:33.033 [ main] INFO c.n.p.c.CollectorApp : Started CollectorApp in 124.488 seconds (JVM running for 154.548)

Default configuration

- pinpoint-collector-root.properties - contains configurations for the collector. Check the following values with the agent’s configuration options:

  - collector.receiver.base.port (agent’s profiler.collector.tcp.port - default: 9994/TCP)
  - collector.receiver.stat.udp.port (agent’s profiler.collector.stat.port - default: 9995/UDP)
  - collector.receiver.span.udp.port (agent’s profiler.collector.span.port - default: 9996/UDP)

pinpoint.zookeeper.address=localhost

# base data receiver config ---------------------------------------------------------------------

collector.receiver.base.ip=0.0.0.0

collector.receiver.base.port=9994

# number of tcp worker threads

collector.receiver.base.worker.threadSize=8

# capacity of tcp worker queue

collector.receiver.base.worker.queueSize=1024

# monitoring for tcp worker

collector.receiver.base.worker.monitor=true

collector.receiver.base.request.timeout=3000

collector.receiver.base.closewait.timeout=3000

# 5 min

collector.receiver.base.ping.interval=300000

# 30 min

collector.receiver.base.pingwait.timeout=1800000

# stat receiver config ---------------------------------------------------------------------

collector.receiver.stat.udp=true

collector.receiver.stat.udp.ip=0.0.0.0

collector.receiver.stat.udp.port=9995

collector.receiver.stat.udp.receiveBufferSize=4194304

## required linux kernel 3.9 & java 9+

collector.receiver.stat.udp.reuseport=false

## If not set, follow the cpu count automatically.

#collector.receiver.stat.udp.socket.count=1

# Should keep in mind that TCP transport load balancing is per connection.(UDP transport loadbalancing is per packet)

collector.receiver.stat.tcp=false

collector.receiver.stat.tcp.ip=0.0.0.0

collector.receiver.stat.tcp.port=9995

collector.receiver.stat.tcp.request.timeout=3000

collector.receiver.stat.tcp.closewait.timeout=3000

# 5 min

collector.receiver.stat.tcp.ping.interval=300000

# 30 min

collector.receiver.stat.tcp.pingwait.timeout=1800000

# number of udp statworker threads

collector.receiver.stat.worker.threadSize=8

# capacity of udp statworker queue

collector.receiver.stat.worker.queueSize=64

# monitoring for udp stat worker

collector.receiver.stat.worker.monitor=true

# span receiver config ---------------------------------------------------------------------

collector.receiver.span.udp=true

collector.receiver.span.udp.ip=0.0.0.0

collector.receiver.span.udp.port=9996

collector.receiver.span.udp.receiveBufferSize=4194304

## required linux kernel 3.9 & java 9+

collector.receiver.span.udp.reuseport=false

## If not set, follow the cpu count automatically.

#collector.receiver.span.udp.socket.count=1

# Should keep in mind that TCP transport load balancing is per connection.(UDP transport loadbalancing is per packet)

collector.receiver.span.tcp=false

collector.receiver.span.tcp.ip=0.0.0.0

collector.receiver.span.tcp.port=9996

collector.receiver.span.tcp.request.timeout=3000

collector.receiver.span.tcp.closewait.timeout=3000

# 5 min

collector.receiver.span.tcp.ping.interval=300000

# 30 min

collector.receiver.span.tcp.pingwait.timeout=1800000

# number of udp statworker threads

collector.receiver.span.worker.threadSize=32

# capacity of udp statworker queue

collector.receiver.span.worker.queueSize=256

# monitoring for udp stat worker

collector.receiver.span.worker.monitor=true

# configure l4 ip address to ignore health check logs

# support raw address and CIDR address (Ex:10.0.0.1,10.0.0.1/24)

collector.l4.ip=

# change OS level read/write socket buffer size (for linux)

#sudo sysctl -w net.core.rmem_max=

#sudo sysctl -w net.core.wmem_max=

# check current values using:

#$ /sbin/sysctl -a | grep -e rmem -e wmem

# number of agent event worker threads

collector.agentEventWorker.threadSize=4

# capacity of agent event worker queue

collector.agentEventWorker.queueSize=1024

# Determines whether to register the information held by com.navercorp.pinpoint.collector.monitor.CollectorMetric to jmx

collector.metric.jmx=false

collector.metric.jmx.domain=pinpoint.collector.metrics

# -------------------------------------------------------------------------------------------------

# The cluster related options are used to establish connections between the agent, collector, and web in order to send/receive data between them in real time.

# You may enable additional features using this option (Ex : RealTime Active Thread Chart).

# -------------------------------------------------------------------------------------------------

# Usage : Set the following options for collector/web components that reside in the same cluster in order to enable this feature.

# 1. cluster.enable (pinpoint-web.properties, pinpoint-collector-root.properties) - "true" to enable

# 2. cluster.zookeeper.address (pinpoint-web.properties, pinpoint-collector-root.properties) - address of the ZooKeeper instance that will be used to manage the cluster

# 3. cluster.web.tcp.port (pinpoint-web.properties) - any available port number (used to establish connection between web and collector)

# -------------------------------------------------------------------------------------------------

# Please be aware of the following:

#1. If the network between web, collector, and the agents are not stable, it is advisable not to use this feature.

#2. We recommend using the cluster.web.tcp.port option. However, in cases where the collector is unable to establish connection to the web, you may reverse this and make the web establish connection to the collector.

# In this case, you must set cluster.connect.address (pinpoint-web.properties); and cluster.listen.ip, cluster.listen.port (pinpoint-collector-root.properties) accordingly.

cluster.enable=true

cluster.zookeeper.address=${pinpoint.zookeeper.address}

cluster.zookeeper.sessiontimeout=30000

cluster.listen.ip=

cluster.listen.port=-1

#collector.admin.password=

#collector.admin.api.rest.active=

#collector.admin.api.jmx.active=

collector.spanEvent.sequence.limit=10000

# Flink configuration

flink.cluster.enable=false

flink.cluster.zookeeper.address=${pinpoint.zookeeper.address}

flink.cluster.zookeeper.sessiontimeout=3000

- pinpoint-collector-grpc.properties - contains configurations for the grpc.

  - collector.receiver.grpc.agent.port (agent’s profiler.transport.grpc.agent.collector.port, profiler.transport.grpc.metadata.collector.port - default: 9991/TCP)
  - collector.receiver.grpc.stat.port (agent’s profiler.transport.grpc.stat.collector.port - default: 9992/TCP)
  - collector.receiver.grpc.span.port (agent’s profiler.transport.grpc.span.collector.port - default: 9993/TCP)

# gRPC

# Agent

collector.receiver.grpc.agent.enable=true

collector.receiver.grpc.agent.ip=0.0.0.0

collector.receiver.grpc.agent.port=9991

# Executor of Server

collector.receiver.grpc.agent.server.executor.thread.size=8

collector.receiver.grpc.agent.server.executor.queue.size=256

collector.receiver.grpc.agent.server.executor.monitor.enable=true

# Executor of Worker

collector.receiver.grpc.agent.worker.executor.thread.size=16

collector.receiver.grpc.agent.worker.executor.queue.size=1024

collector.receiver.grpc.agent.worker.executor.monitor.enable=true

# Stat

collector.receiver.grpc.stat.enable=true

collector.receiver.grpc.stat.ip=0.0.0.0

collector.receiver.grpc.stat.port=9992

# Executor of Server

collector.receiver.grpc.stat.server.executor.thread.size=4

collector.receiver.grpc.stat.server.executor.queue.size=256

collector.receiver.grpc.stat.server.executor.monitor.enable=true

# Executor of Worker

collector.receiver.grpc.stat.worker.executor.thread.size=16

collector.receiver.grpc.stat.worker.executor.queue.size=1024

collector.receiver.grpc.stat.worker.executor.monitor.enable=true

# Stream scheduler for rejected execution

collector.receiver.grpc.stat.stream.scheduler.thread.size=1

collector.receiver.grpc.stat.stream.scheduler.period.millis=1000

collector.receiver.grpc.stat.stream.call.init.request.count=100

collector.receiver.grpc.stat.stream.scheduler.recovery.message.count=100

# Span

collector.receiver.grpc.span.enable=true

collector.receiver.grpc.span.ip=0.0.0.0

collector.receiver.grpc.span.port=9993

# Executor of Server

collector.receiver.grpc.span.server.executor.thread.size=4

collector.receiver.grpc.span.server.executor.queue.size=256

collector.receiver.grpc.span.server.executor.monitor.enable=true

# Executor of Worker

collector.receiver.grpc.span.worker.executor.thread.size=32

collector.receiver.grpc.span.worker.executor.queue.size=1024

collector.receiver.grpc.span.worker.executor.monitor.enable=true

# Stream scheduler for rejected execution

collector.receiver.grpc.span.stream.scheduler.thread.size=1

collector.receiver.grpc.span.stream.scheduler.period.millis=1000

collector.receiver.grpc.span.stream.call.init.request.count=100

collector.receiver.grpc.span.stream.scheduler.recovery.message.count=100

- hbase.properties - contains configurations to connect to HBase.

  - hbase.client.host (default: localhost)
  - hbase.client.port (default: 2181)

hbase.client.host=${pinpoint.zookeeper.address}

hbase.client.port=2181

# hbase default:/hbase

hbase.zookeeper.znode.parent=/hbase

# hbase namespace to use default:default

hbase.namespace=default

# ==================================================================================

# hbase client thread pool option

hbase.client.thread.max=32

hbase.client.threadPool.queueSize=5120

# prestartAllCoreThreads

hbase.client.threadPool.prestart=false

# warmup hbase connection cache

hbase.client.warmup.enable=false

# enable hbase async operation. default: false

hbase.client.async.enable=false

When Using Released Binary (Recommended)

- You can override any configuration value with the -D option on the command line. For example:

java -jar -Dspring.profiles.active=release -Dpinpoint.zookeeper.address=localhost -Dhbase.client.port=1234 pinpoint-collector-boot-2.1.1.jar

- Alternatively, extract the configuration file from the jar, edit it, and put it back into the jar.
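When several values need overriding, it can be easier to assemble the -D command line from a dictionary than to type it by hand. The following is a minimal sketch; `build_command` is a hypothetical helper written for this note, not part of Pinpoint, and it keeps the same argument order as the example command above.

```python
def build_command(jar: str, overrides: dict) -> list:
    """Build a 'java -jar' argument list with one -Dkey=value per override."""
    cmd = ["java", "-jar"]
    for key, value in overrides.items():
        # Each override becomes a JVM system property argument.
        cmd.append(f"-D{key}={value}")
    cmd.append(jar)
    return cmd


collector_cmd = build_command(
    "pinpoint-collector-boot-2.1.1.jar",
    {
        "spring.profiles.active": "release",
        "pinpoint.zookeeper.address": "localhost",
        "hbase.client.port": 1234,
    },
)
```

The resulting list can be passed to `subprocess.run(collector_cmd)` to start the process, or joined with spaces and pasted into a console.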

Pinpoint Collector provides two profiles: release and local (default)

4. Pinpoint Web

java -jar -Dpinpoint.zookeeper.address=localhost pinpoint-web-boot-2.1.1.jar

The address is that of the ZooKeeper instance used by HBase: IP 127.0.0.1, default port 2181.

Default configuration

There are 2 configuration files used for Pinpoint Web: pinpoint-web-root.properties, and hbase.properties.

- hbase.properties - contains configurations to connect to HBase.

  - hbase.client.host (default: localhost)
  - hbase.client.port (default: 2181)

When Using Released Binary (Recommended)

- You can override any configuration value with the -D option on the command line. For example:

java -jar -Dspring.profiles.active=release -Dpinpoint.zookeeper.address=localhost -Dhbase.client.port=1234 pinpoint-web-boot-2.1.1.jar

- Alternatively, extract the configuration file from the jar, edit it, and put it back into the jar.

config/web.properties

Pinpoint Web provides two profiles: release (default) and local.

http://localhost:8080/main

D:\pinpoint>java -jar -Dpinpoint.zookeeper.address=127.0.0.1 pinpoint-web-boot-2.2.0.jar

01-06 14:21:54.862 INFO ProfileApplicationListener : onApplicationEvent-ApplicationEnvironmentPreparedEvent

01-06 14:21:54.864 INFO ProfileApplicationListener : spring.profiles.active:[release]

01-06 14:21:54.866 INFO ProfileApplicationListener : pinpoint.profiles.active:release

01-06 14:21:54.867 INFO ProfileApplicationListener : PropertiesPropertySource pinpoint.profiles.active=release

01-06 14:21:54.868 INFO ProfileApplicationListener : PropertiesPropertySource logging.config=classpath:profiles/release/log4j2.xml

com.navercorp.pinpoint.agent.plugin.proxy.nginx.NginxRequestType@59a67c3a

01-06 14:24:01.001 [ main] INFO c.n.p.w.c.LogConfiguration -- LogConfiguration{logLinkEnable=false, logButtonName='', logPageUrl='', disableButtonMessage=''}

01-06 14:24:14.014 [ main] INFO o.a.c.h.Http11NioProtocol -- Starting ProtocolHandler ["http-nio-8080"]

01-06 14:24:14.014 [ main] INFO o.s.b.w.e.t.TomcatWebServer -- Tomcat started on port(s): 8080 (http) with context path ''

01-06 14:24:14.014 [ main] INFO c.n.p.w.WebApp -- Started WebApp in 145.568 seconds (JVM running for 176.405)
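Once both logs report "Tomcat started", a quick TCP probe can confirm that the listeners are actually reachable. The following is a minimal sketch using only the Python standard library; the helper names are made up for this note. The port numbers come from the logs and default configuration above (8080 for Web, 8081 for the Collector, 9991 to 9993 for gRPC, 9994 for the Thrift TCP receiver). The UDP receivers on 9995 and 9996 cannot be verified with a TCP connect, so they are left out.

```python
import socket


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_ports(host: str, ports) -> dict:
    """Map each port to whether it accepted a TCP connection."""
    return {port: port_open(host, port) for port in ports}


# TCP ports taken from the logs and default configuration in these notes.
PINPOINT_TCP_PORTS = [8080, 8081, 9991, 9992, 9993, 9994]
```

Calling `check_ports("127.0.0.1", PINPOINT_TCP_PORTS)` on the machine running both processes returns a dictionary such as `{8080: True, ...}`; a `False` entry points at a listener that did not start or is blocked by a firewall.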

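- hbase-root.properties: configures which data source pp_web (Pinpoint Web) reads the collected data from. Here I only set the ZooKeeper address of HBase.

- jdbc-root.properties: connection and authentication settings for Pinpoint Web's own MySQL database.

- sql directory: Pinpoint Web stores some of its own data in MySQL, so the table schema has to be initialized first (running the two .sql scripts is enough).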

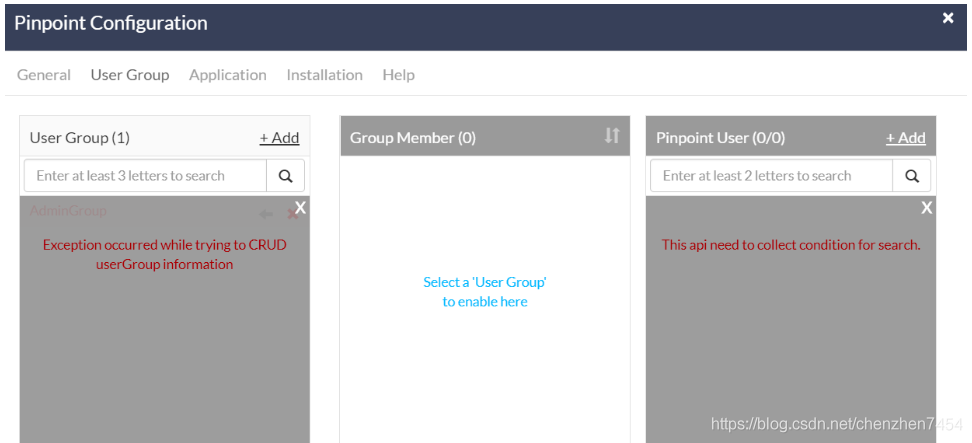

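Pinpoint's Alarm feature requires MySQL

To use Pinpoint's Alarm feature, a MySQL service must be available. Otherwise, after clicking the gear icon in the top-right corner of the Pinpoint Web page, some features (such as editing users, user groups, and alarms) fail with the error shown in the figure:

Note: check jdbc-root.properties, then create the database first and the tables afterwards.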

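5. Pinpoint Agent: test application

Starting with Tomcat

1. Use the test application provided in D:\pinpoint-2.1.1\pinpoint-2.1.1\quickstart\testapp.

2. Go to the testapp directory and run mvn install -Dmaven.test.skip=true to build the app.

3. Edit the bin/catalina.bat file of the current Tomcat and add the startup parameters.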

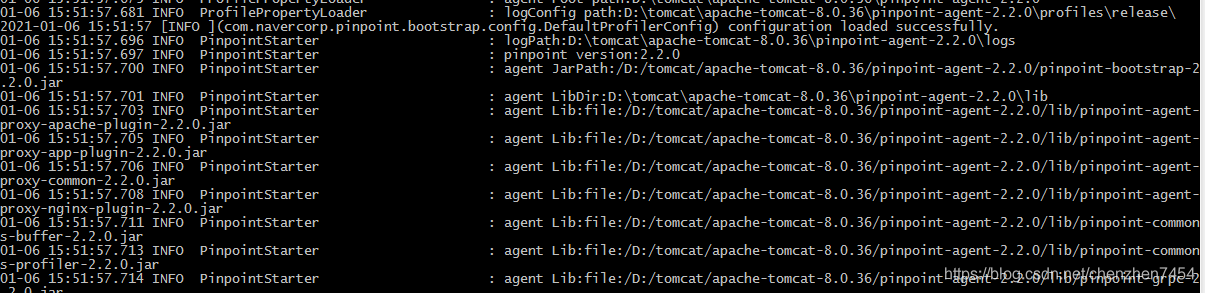

set CATALINA_OPTS=-javaagent:D:/tomcat/apache-tomcat-8.0.36/pinpoint-agent-2.2.0/pinpoint-bootstrap-2.2.0.jar -Dpinpoint.agentId=pp202101061457 -Dpinpoint.applicationName=MyTomcatPP
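The three startup parameters are easy to mistype, so here is a minimal sketch that builds the option string and rejects suspicious IDs before Tomcat is started. The 24-character limit and the allowed character set (letters, digits, '.', '-', '_') are what I recall from the Pinpoint documentation and should be treated as assumptions; the helper names are made up for this note.

```python
import re

# Assumption: Pinpoint limits agentId and applicationName to 24 characters
# drawn from letters, digits, '.', '-' and '_'.
MAX_ID_LENGTH = 24
_ID_CHARS = re.compile(r"[a-zA-Z0-9._-]+")


def is_valid_id(value: str) -> bool:
    """Check a candidate agentId or applicationName against the assumed rules."""
    return len(value) <= MAX_ID_LENGTH and _ID_CHARS.fullmatch(value) is not None


def agent_opts(bootstrap_jar: str, agent_id: str, application_name: str) -> str:
    """Build the JVM options that attach the Pinpoint agent."""
    for label, value in (("agentId", agent_id), ("applicationName", application_name)):
        if not is_valid_id(value):
            raise ValueError(f"invalid pinpoint.{label}: {value!r}")
    return (
        f"-javaagent:{bootstrap_jar} "
        f"-Dpinpoint.agentId={agent_id} "
        f"-Dpinpoint.applicationName={application_name}"
    )
```

The returned string is what goes after `set CATALINA_OPTS=` in catalina.bat. It has to stay on a single line there.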

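Default configuration: pinpoint-root.config.

In pinpoint-agent/pinpoint.config, set profiler.collector.ip=127.0.0.1

This IP address must match the address of the machine where Pinpoint (the Collector) is installed. pinpoint-root.config is in the same directory as pinpoint-bootstrap-$VERSION.jar.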

THRIFT

- profiler.collector.ip (default: 127.0.0.1)
- profiler.collector.tcp.port (collector’s collector.receiver.base.port - default: 9994/TCP)
- profiler.collector.stat.port (collector’s collector.receiver.stat.udp.port - default: 9995/UDP)
- profiler.collector.span.port (collector’s collector.receiver.span.udp.port - default: 9996/UDP)

GRPC

- profiler.transport.grpc.collector.ip (default: 127.0.0.1)
- profiler.transport.grpc.agent.collector.port (collector’s collector.receiver.grpc.agent.port - default: 9991/TCP)
- profiler.transport.grpc.metadata.collector.port (collector’s collector.receiver.grpc.agent.port - default: 9991/TCP)
- profiler.transport.grpc.stat.collector.port (collector’s collector.receiver.grpc.stat.port - default: 9992/TCP)
- profiler.transport.grpc.span.collector.port (collector’s collector.receiver.grpc.span.port - default: 9993/TCP)
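Since every agent port has to equal its collector counterpart, the pairing listed here can be checked automatically once either side has been changed from the defaults. The following is a minimal sketch, assuming both configuration files have been read into strings; the parser only handles plain key=value lines, and the function names are made up for this note.

```python
# (agent key, collector key) pairs, taken from the lists in these notes.
PORT_PAIRS = [
    ("profiler.collector.tcp.port", "collector.receiver.base.port"),
    ("profiler.collector.stat.port", "collector.receiver.stat.udp.port"),
    ("profiler.collector.span.port", "collector.receiver.span.udp.port"),
    ("profiler.transport.grpc.agent.collector.port", "collector.receiver.grpc.agent.port"),
    ("profiler.transport.grpc.metadata.collector.port", "collector.receiver.grpc.agent.port"),
    ("profiler.transport.grpc.stat.collector.port", "collector.receiver.grpc.stat.port"),
    ("profiler.transport.grpc.span.collector.port", "collector.receiver.grpc.span.port"),
]


def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blanks and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def mismatched_ports(agent: dict, collector: dict) -> list:
    """Return (agent key, agent value, collector key, collector value) for each mismatch."""
    mismatches = []
    for agent_key, collector_key in PORT_PAIRS:
        # Keys missing on either side fall back to defaults, so they are skipped.
        if agent_key in agent and collector_key in collector:
            if agent[agent_key] != collector[collector_key]:
                mismatches.append(
                    (agent_key, agent[agent_key], collector_key, collector[collector_key])
                )
    return mismatches
```

An empty list means the explicitly configured ports agree. Keys that appear on only one side are not compared, because the other side then uses its default.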

The Tomcat log shows that pinpoint-agent has been loaded.

Starting from a jar

java -javaagent:D:/tomcat/apache-tomcat-8.0.36/pinpoint-agent-2.2.0/pinpoint-bootstrap-2.2.0.jar -Dpinpoint.agentId=pp202101191756 -Dpinpoint.applicationName=testapp-2.1.1 -jar D:/pinpoint-2.1.1/pinpoint-2.1.1/quickstart/testapp/target/pinpoint-quickstart-testapp-2.1.1.jar

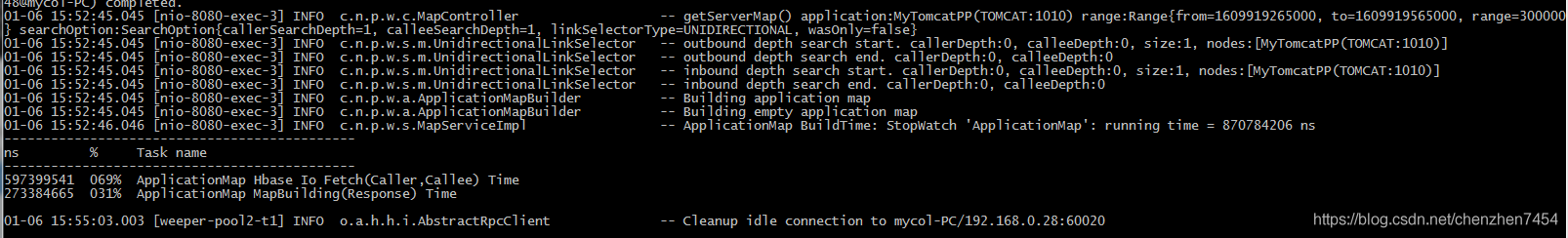

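Collector log screenshot

Web log screenshot

Web UI graph view

Cluster deployment

Pinpoint supports cluster deployment, which requires configuring the ZooKeeper address.

Cluster mode is enabled by default (cluster.enable=true):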

cluster.enable=true

cluster.zookeeper.address=localhost

cluster.zookeeper.sessiontimeout=30000
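In the collector file shown earlier, cluster.zookeeper.address is written as ${pinpoint.zookeeper.address}, so changing that one value (for example with -Dpinpoint.zookeeper.address) moves the cluster setting along with it. The following is a simplified model of that placeholder behaviour, not Pinpoint's or Spring's actual resolver; `resolve` is a name made up for this note, and treating command-line overrides as taking precedence over file values is an assumption of the sketch.

```python
import re

_PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def resolve(props: dict, overrides: dict = None) -> dict:
    """Expand ${key} placeholders; overrides win over values from the file."""
    merged = dict(props)
    merged.update(overrides or {})

    def expand(value: str, depth: int = 0) -> str:
        def replace(match):
            key = match.group(1)
            # Leave unknown keys untouched and stop runaway self-references.
            if key not in merged or depth >= 10:
                return match.group(0)
            return expand(str(merged[key]), depth + 1)

        return _PLACEHOLDER.sub(replace, value)

    return {key: expand(str(value)) for key, value in merged.items()}


file_props = {
    "pinpoint.zookeeper.address": "localhost",
    "cluster.enable": "true",
    "cluster.zookeeper.address": "${pinpoint.zookeeper.address}",
}
resolved = resolve(file_props, {"pinpoint.zookeeper.address": "127.0.0.1"})
```

With the override applied, `resolved["cluster.zookeeper.address"]` becomes 127.0.0.1; without it, the value falls back to localhost from the file.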