1. Download and configure the Spark source

First, download the Spark source (tag v2.4.5): https://github.com/apache/spark/tree/v2.4.5

Project repository: https://github.com/apache/spark

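Ideally, run the build on a cloud server first, then copy the populated Maven repository to your local machine and point your local Maven settings at it. Downloading the dependencies directly on Windows can be slow; if you can't wait, route the download through a proxy.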

In the main pom you can change the Scala and Hadoop versions, for example:

    <hadoop.version>2.6.0-cdh5.16.2</hadoop.version>
    <scala.version>2.12.10</scala.version>
    <scala.binary.version>2.12</scala.binary.version>

If you use a CDH Hadoop version such as the one above, also add the CDH repository https://repository.cloudera.com/artifactory/cloudera-repos/ to the main pom.
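Editing these values by hand is all this step requires. If you repeat the setup often, a small Python helper can make the same edit. This is a hypothetical sketch (set_pom_properties and patch_pom_properties are illustrative names, not part of Spark) that assumes a UTF-8 pom in which the first occurrence of each property tag is the one in the top-level properties block. Note that Spark 2.4.x also ships dev/change-scala-version.sh, which rewrites the _2.11 artifact suffixes in the module poms, so switching Scala versions may need more than these properties.

```python
import re
from pathlib import Path


def set_pom_properties(pom_text: str, props: dict) -> str:
    """Set <name>value</name> for each property; only the first occurrence is changed."""
    for name, value in props.items():
        tag = re.escape(name)
        pattern = re.compile(rf"(<{tag}>)(.*?)(</{tag}>)", re.DOTALL)
        # A function replacement avoids treating backslashes in the value as group refs.
        pom_text, count = pattern.subn(lambda m: m.group(1) + value + m.group(3), pom_text, count=1)
        if count == 0:
            raise KeyError(f"property <{name}> not found")
    return pom_text


def patch_pom_properties(path, props: dict) -> None:
    """Hypothetical convenience wrapper: rewrite a pom file in place."""
    pom = Path(path)
    pom.write_text(set_pom_properties(pom.read_text(encoding="utf-8"), props), encoding="utf-8")
```

For example, from the root of the checkout: patch_pom_properties("pom.xml", {"hadoop.version": "2.6.0-cdh5.16.2", "scala.version": "2.12.10", "scala.binary.version": "2.12"}).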

2. Compile the Spark source

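Before compiling, change some dependency scopes. Dependencies scoped provided are left off the runtime classpath, which leads to ClassNotFoundException when running locally.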

Modify pom.xml in the hive-thriftserver module:

    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-server</artifactId>
      <!-- <scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-servlet</artifactId>
      <!-- <scope>provided</scope>-->
    </dependency>

Modify the main pom.xml:

    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-http</artifactId>
      <version>${jetty.version}</version>
      <!-- <scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-continuation</artifactId>
      <version>${jetty.version}</version>
      <!-- <scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-servlet</artifactId>
      <version>${jetty.version}</version>
      <!-- <scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-servlets</artifactId>
      <version>${jetty.version}</version>
      <!-- <scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-proxy</artifactId>
      <version>${jetty.version}</version>
      <!-- <scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-client</artifactId>
      <version>${jetty.version}</version>
      <!-- <scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-util</artifactId>
      <version>${jetty.version}</version>
      <!-- <scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-security</artifactId>
      <version>${jetty.version}</version>
      <!-- <scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-plus</artifactId>
      <version>${jetty.version}</version>
      <!-- <scope>provided</scope>-->
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-server</artifactId>
      <version>${jetty.version}</version>
      <!-- <scope>provided</scope>-->
    </dependency>
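Commenting these out by hand works. As an alternative, the Python sketch below makes the same edit with regular expressions. It assumes each dependency's own groupId is the first groupId element inside its dependency block, and it comments out every active provided scope under org.eclipse.jetty, which may cover more artifacts than the list above. comment_out_provided and patch_jetty_scopes are hypothetical helper names, not part of Spark or Maven.

```python
import re
from pathlib import Path

# One <dependency> block; non-greedy so adjacent blocks are not merged.
DEP_RE = re.compile(r"<dependency>.*?</dependency>", re.DOTALL)
# The first <groupId> in a block is assumed to be the dependency's own groupId.
GROUP_RE = re.compile(r"<groupId>\s*([^<\s]+)\s*</groupId>")
# An active provided scope; the lookbehinds skip scopes that are already commented out.
SCOPE_RE = re.compile(r"(?<!<!--)(?<!<!-- )<scope>\s*provided\s*</scope>")


def comment_out_provided(pom_text: str, group_id: str = "org.eclipse.jetty") -> str:
    """Comment out <scope>provided</scope> in every dependency whose groupId matches."""

    def fix(match):
        block = match.group(0)
        group = GROUP_RE.search(block)
        if group is None or group.group(1) != group_id:
            return block  # leave other dependencies untouched
        return SCOPE_RE.sub("<!-- <scope>provided</scope> -->", block)

    return DEP_RE.sub(fix, pom_text)


def patch_jetty_scopes(path) -> bool:
    """Rewrite the pom in place; return True if anything changed."""
    pom = Path(path)
    text = pom.read_text(encoding="utf-8")
    new_text = comment_out_provided(text)
    if new_text != text:
        pom.write_text(new_text, encoding="utf-8")
    return new_text != text
```

For example, call patch_jetty_scopes on pom.xml and sql/hive-thriftserver/pom.xml from the root of the checkout. A regex edit keeps the pom's original formatting and comments, which a parse-and-rewrite with an XML library would not; running it twice changes nothing.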

Change the scope of the following dependencies to compile:

    <dependency>
      <groupId>xml-apis</groupId>
      <artifactId>xml-apis</artifactId>
      <version>1.4.01</version>
      <scope>compile</scope>
    </dependency>
    <dependency>
      <groupId>com.google.guava</groupId>
      <artifactId>guava</artifactId>
      <scope>compile</scope>
    </dependency>

Any other ClassNotFoundException of this kind has the same cause; comment out the provided scope of the corresponding dependency.
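To find the remaining candidates without reading every pom, a hedged Python sketch can list each dependency that still has a literal, uncommented provided scope. provided_dependencies and scan_tree are hypothetical names. The scan is regex-based, so scopes set through a Maven property (Spark's pom uses some, for example ${hadoop.deps.scope}) are not detected, and not every hit necessarily needs changing: only the ones that actually show up in a ClassNotFoundException.

```python
import re
from pathlib import Path

DEP_RE = re.compile(r"<dependency>.*?</dependency>", re.DOTALL)
GROUP_RE = re.compile(r"<groupId>\s*([^<\s]+)\s*</groupId>")
ARTIFACT_RE = re.compile(r"<artifactId>\s*([^<\s]+)\s*</artifactId>")
# Active (not commented-out) literal provided scope.
SCOPE_RE = re.compile(r"(?<!<!--)(?<!<!-- )<scope>\s*provided\s*</scope>")


def provided_dependencies(pom_text: str) -> list:
    """Return (groupId, artifactId) for each dependency still scoped provided."""
    found = []
    for block in DEP_RE.findall(pom_text):
        if SCOPE_RE.search(block):
            group = GROUP_RE.search(block)
            artifact = ARTIFACT_RE.search(block)
            found.append((group.group(1) if group else "?", artifact.group(1) if artifact else "?"))
    return found


def scan_tree(spark_root) -> None:
    """Print every provided-scoped dependency in all pom.xml files under spark_root."""
    root = Path(spark_root)
    for pom in sorted(root.rglob("pom.xml")):
        for group, artifact in provided_dependencies(pom.read_text(encoding="utf-8")):
            print(f"{pom.relative_to(root)}: {group}:{artifact}")
```

Run scan_tree(".") from the root of the checkout and match the printed coordinates against the package of the missing class.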

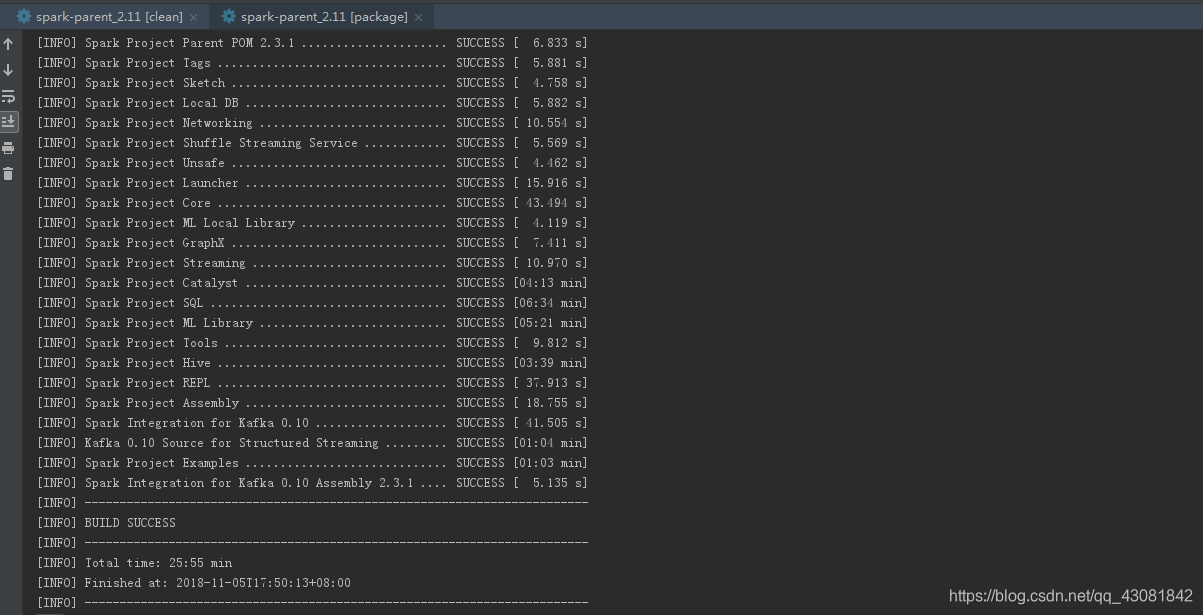

Build from Git Bash with:

    mvn clean package -DskipTests=true
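The same build can be driven from Python, which makes it easy to rerun with different profiles. This is a hypothetical sketch: build_mvn_command and run_build are illustrative names, and the profile argument is an assumption not present in the original command. Spark's build documentation enables the Hive modules with -Phive -Phive-thriftserver, and in Spark's root pom the sql/hive-thriftserver module used in step 4 is declared inside the hive-thriftserver profile.

```python
import shutil
import subprocess


def build_mvn_command(profiles=(), skip_tests=True):
    """Assemble the Maven command line used to build the Spark checkout."""
    mvn = shutil.which("mvn") or "mvn"  # on Windows, which() also resolves mvn.cmd via PATHEXT
    cmd = [mvn]
    cmd += [f"-P{profile}" for profile in profiles]  # e.g. hive, hive-thriftserver
    if skip_tests:
        cmd.append("-DskipTests=true")
    cmd += ["clean", "package"]
    return cmd


def run_build(spark_root, profiles=(), skip_tests=True):
    """Run the build in spark_root; raises CalledProcessError if Maven fails."""
    subprocess.run(build_mvn_command(profiles, skip_tests), cwd=spark_root, check=True)
```

For example, run_build(".", profiles=["hive", "hive-thriftserver"]) from the root of the checkout.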

3. Import the source into IDEA

Import the source into IDEA as a Maven project and wait for the dependencies to finish loading.

Before building, delete the streaming package under the test sources of the spark-sql module. Otherwise Build Project compiles it as well and fails with java.lang.OutOfMemoryError: GC overhead limit exceeded. Then click Build Project.
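Deleting the directory by hand is enough. If you script the setup, a minimal Python sketch follows. The path sql/core/src/test/scala/org/apache/spark/sql/streaming is an assumption about where "the streaming package under spark-sql's test sources" lives in the v2.4.5 tree, so check it before running; remove_streaming_tests is a hypothetical name. Because the files are tracked by git, git checkout -- followed by that path restores them later.

```python
import shutil
from pathlib import Path

# Assumed path of the streaming test package in the spark-sql (sql/core) module.
STREAMING_TESTS = Path("sql", "core", "src", "test", "scala", "org", "apache", "spark", "sql", "streaming")


def remove_streaming_tests(spark_root) -> bool:
    """Delete the streaming test sources; return False if they are already gone."""
    target = Path(spark_root) / STREAMING_TESTS
    if not target.is_dir():
        return False
    shutil.rmtree(target)
    return True
```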

Once the build succeeds, you can debug Spark SQL.

4. Debug Spark SQL locally

In the hive-thriftserver module, create a resources directory under main and mark it as a resources root.

Copy the following configuration file from the cluster into the resources directory:

hive-site.xml

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>hive.cli.print.header</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.cli.print.current.db</name>
        <value>true</value>
      </property>
      <property>
        <name>hive.metastore.uris</name>
        <value>thrift://hadoop:9083</value>
        <description>Points to the host running the metastore service</description>
      </property>
    </configuration>

Note: only hive-site.xml is needed here.
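Copying the file and reading back the metastore address can be scripted too. The Python sketch below is hypothetical (install_hive_site and read_hive_property are illustrative names) and assumes the module lives at sql/hive-thriftserver in the checkout. Marking the directory as a resources root still has to be done in IDEA.

```python
import shutil
import xml.etree.ElementTree as ET
from pathlib import Path

# Assumed location of the hive-thriftserver resources directory inside the Spark checkout.
RESOURCES = Path("sql", "hive-thriftserver", "src", "main", "resources")


def install_hive_site(spark_root, hive_site) -> Path:
    """Copy hive-site.xml into the module's resources directory, creating it if needed."""
    dest_dir = Path(spark_root) / RESOURCES
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest = dest_dir / "hive-site.xml"
    shutil.copyfile(hive_site, dest)
    return dest


def read_hive_property(hive_site, name):
    """Return the <value> of the named <property>, or None if it is absent."""
    root = ET.parse(hive_site).getroot()
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            value = prop.findtext("value")
            return value.strip() if value is not None else None
    return None
```

For example, read_hive_property(install_hive_site(".", "hive-site.xml"), "hive.metastore.uris") should return thrift://hadoop:9083 for the file above.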

The metastore service must be running on the server:

    hive --service metastore &
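Before starting the CLI in IDEA, it can help to confirm that the local machine reaches the metastore at all; the host name hadoop in hive-site.xml must resolve locally (for example through the hosts file). The Python sketch below is only a TCP check under that assumption: a successful connection shows that something is listening on the port, not that the metastore is healthy. metastore_reachable is a hypothetical name, and it handles a single URI even though hive.metastore.uris may list several, comma-separated.

```python
import socket
from urllib.parse import urlparse


def metastore_reachable(uri: str, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to a thrift://host:port metastore URI succeeds."""
    parsed = urlparse(uri.strip())
    if parsed.scheme != "thrift" or not parsed.hostname:
        raise ValueError(f"not a thrift metastore URI: {uri!r}")
    port = parsed.port or 9083  # 9083 is the Hive metastore's default port
    try:
        with socket.create_connection((parsed.hostname, port), timeout=timeout):
            return True
    except OSError:  # refused, unresolvable host, or timed out
        return False
```

For example, metastore_reachable("thrift://hadoop:9083") returns False until the service above is up and the host name resolves.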

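Then run SparkSQLCLIDriver (org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver).

Before running it, add the following to VM options in the run configuration. The first runs Spark locally with two worker threads; the second stops JLine from reading the Windows console directly, so the CLI accepts input typed into the IDEA console.

    -Dspark.master=local[2] -Djline.WindowsTerminal.directConsole=false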

A sample session:

    spark-sql (default)> show databases;
    show databases;
    databaseName
    company
    default
    hive_function_analyze
    skewtest
    spark-sql (default)> Time taken: 0.028 seconds, Fetched 10 row(s)
    select * from score;
    INFO SparkSQLCLIDriver: Time taken: 1.188 seconds, Fetched 4 row(s)
    id name subject
    1 tom ["HuaXue","Physical","Math","Chinese"]
    2 jack ["HuaXue","Animal","Computer","Java"]
    3 john ["ZheXue","ZhengZhi","SiXiu","history"]
    4 alice ["C++","Linux","Hadoop","Flink"]
    spark-sql (default)>

Summary:

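Download the Spark source and compile it from the command line before importing it into IDEA. Once that succeeds, import it into IDEA and run Build Project. If this reports class-not-found errors, try Generate Sources; if that does not help, check whether any dependencies are still scoped provided and fix them one at a time. Finally, test the setup with a sample query.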