Contents

3.2.1 Creating the connection request queue request_sock_queue (core)

3.2.2 Registering the listening socket in TCP's global listening hash table (core)

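1. Overview of listen

- Create the request queues, sized from the backlog passed in: the half-open (SYN) queue and the fully established (accept) queue

- Move the socket's state to LISTEN

- Register the listening sock in TCP's global hash table of listening sockets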

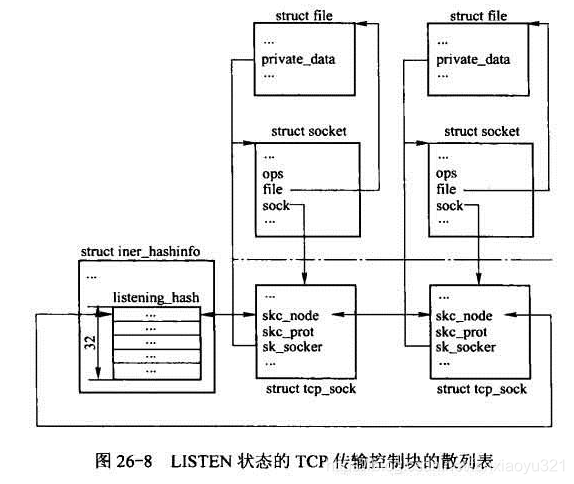

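2. Management of TCP listening sockets: listening_hash

To make lookups efficient, TCP keeps all listening sockets in a global hash table, described by the following structure: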

path: net/ipv4/tcp_ipv4.c

```c
struct inet_hashinfo __cacheline_aligned tcp_hashinfo;

/* This is for listening sockets, thus all sockets which possess wildcards. */
#define INET_LHTABLE_SIZE 32 /* Yes, really, this is all you need. */

// inet_hashinfo groups several TCP-level hash tables; only the fields related to
// managing listening sockets are shown below
struct inet_hashinfo {
    ...
    /* All sockets in TCP_LISTEN state will be in here. This is the only
     * table where wildcard'd TCP sockets can exist. Hash function here
     * is just local port number.
     */
    struct hlist_head listening_hash[INET_LHTABLE_SIZE];

    // Reader/writer lock serializing access to the members of this structure
    rwlock_t lhash_lock ____cacheline_aligned;

    // Reference (user) count for this structure
    atomic_t lhash_users;
};
```
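The kernel comment says the hash function for this table "is just local port number". A minimal sketch of what that means for bucket selection, assuming (as in kernels of this era) that the port is simply masked down to the 32 buckets; `listen_bucket()` is a hypothetical helper name, not a kernel function:

```c
#define INET_LHTABLE_SIZE 32  /* same value as the kernel constant shown above */

/* Hypothetical helper: map a local port (host byte order) to one of the
 * INET_LHTABLE_SIZE buckets by keeping only its low 5 bits. */
static unsigned int listen_bucket(unsigned short local_port)
{
    return local_port & (INET_LHTABLE_SIZE - 1);
}
```

With this scheme ports 80 and 8080 both land in bucket 16, so a lookup still has to walk the bucket's chain and compare the full port (and bound address) of each listener.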

The organization of TCP listening sockets can be pictured with the following figure:

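3. Kernel implementation of listen

The main job of listen is to create the request queues (SYN queue and accept queue) for the socket and to put the socket into TCP's global listening hash table.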

sys_listen

----inet_listen

--------inet_csk_listen_start

------------reqsk_queue_alloc

------------inet_hash

3.1 sys_listen()

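- Look up the socket from the fd passed in from user space

- Check the backlog: if it exceeds the kernel's maximum accept-queue length (net.core.somaxconn), it is silently reduced to that value

- Call the listen operation of the AF_INET family, inet_listen(), through sock->ops->listen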
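The backlog clamp can be checked in isolation. The helper below is a hypothetical user-space copy of the comparison in sys_listen(); note how the unsigned cast makes a negative backlog (such as -1) wrap to a huge value, so it is clamped to somaxconn as well. It assumes somaxconn is non-negative, as the sysctl value normally is.

```c
/* Hypothetical helper mirroring the backlog clamp in sys_listen().
 * Casting to unsigned turns a negative backlog into a very large value,
 * so it is also replaced by somaxconn. Assumes somaxconn >= 0. */
static int clamp_backlog(int backlog, int somaxconn)
{
    if ((unsigned int)backlog > (unsigned int)somaxconn)
        backlog = somaxconn;
    return backlog;
}
```

So with somaxconn = 128, listen(fd, 1024) and listen(fd, -1) both end up with a backlog of 128, while listen(fd, 64) keeps 64.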

```c
/*
 * Perform a listen. Basically, we allow the protocol to do anything
 * necessary for a listen, and if that works, we mark the socket as
 * ready for listening.
 */
asmlinkage long sys_listen(int fd, int backlog)
{
    struct socket *sock;
    int err, fput_needed;
    int somaxconn;

    sock = sockfd_lookup_light(fd, &err, &fput_needed);
    if (sock) {
        somaxconn = sock_net(sock->sk)->core.sysctl_somaxconn;
        if ((unsigned)backlog > somaxconn)
            backlog = somaxconn;

        err = security_socket_listen(sock, backlog);
        if (!err)
            err = sock->ops->listen(sock, backlog);

        fput_light(sock->file, fput_needed);
    }
    return err;
}
```

3.1 inet_listen()

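- Check the socket type and state: the type must be SOCK_STREAM and the socket-layer state must be SS_UNCONNECTED; the TCP state must be CLOSE or LISTEN

- If the state is already LISTEN, only update the accept-queue limit with the new backlog and return

- If the state is CLOSE, create the socket's request queues and enable (register) the listener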

```c
int inet_listen(struct socket *sock, int backlog)
{
    struct sock *sk = sock->sk;
    unsigned char old_state;
    int err;

    lock_sock(sk);

    // The socket-layer state must be unconnected and the type must be SOCK_STREAM.
    // This means only stream sockets may listen; a UDP socket, for example, cannot call listen()
    err = -EINVAL;
    if (sock->state != SS_UNCONNECTED || sock->type != SOCK_STREAM)
        goto out;

    // Only a socket in the CLOSE or LISTEN state may call listen()
    old_state = sk->sk_state;
    if (!((1 << old_state) & (TCPF_CLOSE | TCPF_LISTEN)))
        goto out;

    /* Really, if the socket is already in listen state
     * we can only allow the backlog to be adjusted.
     */
    if (old_state != TCP_LISTEN) {
        // Create the connection request queues and move the TCB state to TCP_LISTEN
        err = inet_csk_listen_start(sk, backlog);
        if (err)
            goto out;
    }

    // Store the user-specified backlog in sk_max_ack_backlog, the maximum length of the
    // accept queue. If the server is slow to call accept() and the number of established
    // connections waiting in the queue exceeds this limit, further three-way handshakes
    // cannot complete and clients will see connection failures
    sk->sk_max_ack_backlog = backlog;
    err = 0;

out:
    release_sock(sk);
    return err;
}
```

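The code shows that an application can change sk_max_ack_backlog by calling listen() more than once: only the first call creates the queues, and every later call just updates the backlog.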
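The branches of inet_listen() can be captured in a small user-space model. Everything here is hypothetical: `struct model_sock` and `model_inet_listen()` are illustrative names, the two booleans stand in for the sock->type and sock->state checks, and `queue_allocs` counts how often inet_csk_listen_start() would run. It is a sketch of the control flow only, not kernel code.

```c
#include <errno.h>
#include <stdbool.h>

/* Simplified, hypothetical model of the checks in inet_listen() */
enum model_state { MODEL_CLOSE, MODEL_LISTEN, MODEL_ESTABLISHED };

struct model_sock {
    bool is_stream;          /* sock->type == SOCK_STREAM */
    bool is_unconnected;     /* sock->state == SS_UNCONNECTED */
    enum model_state state;  /* sk->sk_state */
    int max_ack_backlog;     /* sk->sk_max_ack_backlog */
    int queue_allocs;        /* times inet_csk_listen_start() would have run */
};

static int model_inet_listen(struct model_sock *s, int backlog)
{
    if (!s->is_unconnected || !s->is_stream)
        return -EINVAL;
    if (s->state != MODEL_CLOSE && s->state != MODEL_LISTEN)
        return -EINVAL;

    if (s->state != MODEL_LISTEN) {
        /* First listen(): allocate the queues and switch to LISTEN */
        s->queue_allocs++;
        s->state = MODEL_LISTEN;
    }

    /* Every successful call, first or repeated, updates the backlog */
    s->max_ack_backlog = backlog;
    return 0;
}
```

Calling model_inet_listen() twice with 16 and then 128 leaves queue_allocs at 1 and max_ack_backlog at 128, which is the "listen() again only adjusts the backlog" behavior described above.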

3.2 inet_csk_listen_start()

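- Create the request queues

- Reset the ACK-related fields (the accept-queue counters and the delayed-ACK state)

- Move the socket's state to LISTEN

- Check whether the socket already has a bound port: if not, a port is assigned automatically; if it does, the existing binding is kept. On success the socket is registered in the listening hash table; on failure the state reverts to CLOSE and the queues are freed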
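The automatic binding can be observed from user space. A sketch assuming a Linux host where creating an AF_INET TCP socket is permitted: listen() is called without a prior bind(), and getsockname() then reports the port the kernel picked (typically from the net.ipv4.ip_local_port_range range). `listen_without_bind()` is a hypothetical helper name.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Returns the local port the kernel assigned on listen(), or -1 on error. */
static int listen_without_bind(int backlog)
{
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0)
        return -1;

    /* No bind(): listen() on an unbound socket triggers automatic port binding */
    if (listen(fd, backlog) < 0) {
        close(fd);
        return -1;
    }

    struct sockaddr_in addr;
    socklen_t len = sizeof(addr);
    memset(&addr, 0, sizeof(addr));
    if (getsockname(fd, (struct sockaddr *)&addr, &len) < 0) {
        close(fd);
        return -1;
    }

    close(fd);
    return ntohs(addr.sin_port);
}
```

The returned port is non-zero and the socket is bound to the wildcard address, which is what the get_port() call with inet->num == 0 produces.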

```c
// nr_table_entries is the backlog argument the user program passed to listen()
// (already capped at somaxconn by sys_listen())
int inet_csk_listen_start(struct sock *sk, const int nr_table_entries)
{
    struct inet_sock *inet = inet_sk(sk);
    struct inet_connection_sock *icsk = inet_csk(sk);

    // Allocate the SYN request queue according to the user-specified backlog;
    // this function is analyzed below
    int rc = reqsk_queue_alloc(&icsk->icsk_accept_queue, nr_table_entries);

    if (rc != 0)
        return rc;

    // Initialize the counters in this listening socket's TCB
    sk->sk_max_ack_backlog = 0;
    sk->sk_ack_backlog = 0;

    // Clear the delayed-ACK fields
    inet_csk_delack_init(sk);

    /* There is race window here: we announce ourselves listening,
     * but this transition is still not validated by get_port().
     * It is OK, because this socket enters to hash table only
     * after validation is complete.
     */
    // Move the TCB state to TCP_LISTEN
    sk->sk_state = TCP_LISTEN;

    // If bind() was called before listen(), get_port() simply returns 0; otherwise it
    // binds a port to this listening socket, i.e. performs automatic binding. For details
    // on port binding, see the article "TCP: the bind() system call"
    if (!sk->sk_prot->get_port(sk, inet->num)) {
        // Port bound successfully
        inet->sport = htons(inet->num);

        // Route-related: drop any cached route (dst entry)
        sk_dst_reset(sk);

        // A listening socket must be registered in TCP's listening hash table
        // (tcp_hashinfo.listening_hash). For TCP this callback is inet_hash(), see below
        sk->sk_prot->hash(sk);
        return 0;
    }

    // Port binding failed: set the TCB state back to TCP_CLOSE and destroy the
    // accept queue and SYN request queue allocated above
    sk->sk_state = TCP_CLOSE;
    __reqsk_queue_destroy(&icsk->icsk_accept_queue);
    return -EADDRINUSE;
}
```
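The success and rollback paths of inet_csk_listen_start() can be modeled in user space. This is a simplified sketch, not kernel code: `struct lsock`, `model_listen_start()` and the two get_port stand-ins are hypothetical, calloc()/free() stand in for reqsk_queue_alloc()/__reqsk_queue_destroy(), and the `hashed` flag stands in for sk->sk_prot->hash().

```c
#include <errno.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical, simplified model of inet_csk_listen_start() */
enum lstate { L_CLOSE, L_LISTEN };

struct lsock {
    enum lstate state;
    void *accept_queue;   /* stands in for icsk_accept_queue.listen_opt */
    bool hashed;          /* stands in for membership in listening_hash */
};

/* get_port stand-in: 0 on success, nonzero on failure (same convention as sk_prot->get_port) */
typedef int (*get_port_fn)(struct lsock *sk);

static int model_listen_start(struct lsock *sk, size_t backlog, get_port_fn get_port)
{
    sk->accept_queue = calloc(backlog ? backlog : 1, sizeof(void *));
    if (!sk->accept_queue)
        return -ENOMEM;

    /* Announce LISTEN before the port is validated (the "race window" in the kernel comment) */
    sk->state = L_LISTEN;

    if (!get_port(sk)) {
        sk->hashed = true;    /* sk->sk_prot->hash(sk) */
        return 0;
    }

    /* Port could not be claimed: roll back the state and free the queue */
    sk->state = L_CLOSE;
    free(sk->accept_queue);
    sk->accept_queue = NULL;
    return -EADDRINUSE;
}

static int port_ok(struct lsock *sk) { (void)sk; return 0; }
static int port_taken(struct lsock *sk) { (void)sk; return 1; }
```

Because the socket only enters the hash table after get_port() succeeds, a failed call leaves nothing visible to incoming SYNs, which is why the kernel comment calls the early TCP_LISTEN assignment harmless.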

3.2.1 Creating the connection request queue request_sock_queue (core)

The connection request queue of a stream socket, request_sock_queue, is defined as follows:

```c
/** struct request_sock_queue - queue of request_socks
 *
 * @rskq_accept_head - FIFO head of established children
 * @rskq_accept_tail - FIFO tail of established children
 * @rskq_defer_accept - User waits for some data after accept()
 * @syn_wait_lock - serializer
 *
 * %syn_wait_lock is necessary only to avoid proc interface having to grab the main
 * lock sock while browsing the listening hash (otherwise it's deadlock prone).
 *
 * This lock is acquired in read mode only from listening_get_next() seq_file
 * op and it's acquired in write mode _only_ from code that is actively
 * changing rskq_accept_head. All readers that are holding the master sock lock
 * don't need to grab this lock in read mode too as rskq_accept_head. writes
 * are always protected from the main sock lock.
 */
struct request_sock_queue {
    struct request_sock *rskq_accept_head; // accept queue (fully established connections): head
    struct request_sock *rskq_accept_tail; // accept queue: tail
    rwlock_t syn_wait_lock;
    u8 rskq_defer_accept;
    /* 3 bytes hole, try to pack */
    struct listen_sock *listen_opt;        // SYN queue (half-open connections)
};

/** struct listen_sock - listen state
 *
 * @max_qlen_log - log_2 of maximal queued SYNs/REQUESTs
 */
struct listen_sock {
    u8 max_qlen_log;
    /* 3 bytes hole, try to use */
    int qlen;
    int qlen_young;
    int clock_hand;
    u32 hash_rnd;
    u32 nr_table_entries;
    struct request_sock *syn_table[0];     // hash buckets of the SYN queue
};

/* struct request_sock - mini sock to represent a connection request
 */
struct request_sock {
    struct request_sock *dl_next; /* Must be first member! */
    u16 mss;
    u8 retrans;
    u8 cookie_ts; /* syncookie: encode tcpopts in timestamp */
    /* The following two fields can be easily recomputed I think -AK */
    u32 window_clamp; /* window clamp at creation time */
    u32 rcv_wnd; /* rcv_wnd offered first time */
    u32 ts_recent;
    unsigned long expires;
    const struct request_sock_ops *rsk_ops; // associated operations
    struct sock *sk;
    u32 secid;
    u32 peer_secid;
};
```

- Create the SYN queue listen_opt. Its bucket count is first clamped to the range [8, sysctl_max_syn_backlog] and then rounded up to a power of two with roundup_pow_of_two(n + 1), so that a bucket index can be computed with a bit mask instead of a modulo

- Initialize the accept queue rskq_accept_head to empty

- Initialize the reader/writer lock syn_wait_lock
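The sizing rules can be checked with a small sketch. It reproduces only the arithmetic of reqsk_queue_alloc() (clamp to sysctl_max_syn_backlog, minimum of 8, roundup_pow_of_two(n + 1), then max_qlen_log); `round_up_pow2()`, `syn_queue_sizing()` and `bucket_index()` are hypothetical helper names, and the `max_syn_backlog` parameter stands in for sysctl_max_syn_backlog.

```c
#include <stdint.h>

/* Smallest power of two >= n (for n >= 1), matching what roundup_pow_of_two() returns */
static uint32_t round_up_pow2(uint32_t n)
{
    uint32_t p = 1;
    while (p < n)
        p <<= 1;
    return p;
}

struct syn_queue_size {
    uint32_t nr_table_entries;
    uint8_t max_qlen_log;
};

/* Mirrors the sizing steps of reqsk_queue_alloc() */
static struct syn_queue_size syn_queue_sizing(uint32_t backlog, uint32_t max_syn_backlog)
{
    struct syn_queue_size s;
    uint32_t n = backlog < max_syn_backlog ? backlog : max_syn_backlog;

    if (n < 8)
        n = 8;
    n = round_up_pow2(n + 1);

    s.nr_table_entries = n;
    for (s.max_qlen_log = 3; (1u << s.max_qlen_log) < n; s.max_qlen_log++)
        ;
    return s;
}

/* With a power-of-two bucket count, masking gives the same result as hash % n */
static uint32_t bucket_index(uint32_t hash, uint32_t n)
{
    return hash & (n - 1);
}
```

With max_syn_backlog = 256 (the initial value of sysctl_max_syn_backlog in the code below), a backlog of 5 gives 16 buckets (max_qlen_log = 4), a backlog of 128 gives 256 buckets (max_qlen_log = 8), and a backlog of 1024 gives 512 buckets (max_qlen_log = 9). The + 1 before rounding pushes an exact power of two up to the next one, so the table can end up larger than the sysctl value itself.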

```c
/*
 * Maximum number of SYN_RECV sockets in queue per LISTEN socket.
 * One SYN_RECV socket costs about 80bytes on a 32bit machine.
 * It would be better to replace it with a global counter for all sockets
 * but then some measure against one socket starving all other sockets
 * would be needed.
 *
 * It was 128 by default. Experiments with real servers show, that
 * it is absolutely not enough even at 100conn/sec. 256 cures most
 * of problems. This value is adjusted to 128 for very small machines
 * (<=32Mb of memory) and to 1024 on normal or better ones (>=256Mb).
 * Note : Dont forget somaxconn that may limit backlog too.
 */
int sysctl_max_syn_backlog = 256;

int reqsk_queue_alloc(struct request_sock_queue *queue,
                      unsigned int nr_table_entries)
{
    size_t lopt_size = sizeof(struct listen_sock);
    struct listen_sock *lopt;

    // The logic below shows how backlog determines the number of hash buckets of the SYN queue:
    // 1. If the user-specified backlog exceeds the system maximum
    //    (/proc/sys/net/ipv4/tcp_max_syn_backlog), use the system maximum
    // 2. Ensure the bucket count is at least 8
    // Finally, roundup_pow_of_two() rounds up so that nr_table_entries is a power of two
    nr_table_entries = min_t(u32, nr_table_entries, sysctl_max_syn_backlog);
    nr_table_entries = max_t(u32, nr_table_entries, 8);
    nr_table_entries = roundup_pow_of_two(nr_table_entries + 1);

    // Allocate the listen_sock structure together with its trailing syn_table bucket array
    lopt_size += nr_table_entries * sizeof(struct request_sock *);
    if (lopt_size > PAGE_SIZE)
        lopt = __vmalloc(lopt_size,
                         GFP_KERNEL | __GFP_HIGHMEM | __GFP_ZERO,
                         PAGE_KERNEL);
    else
        lopt = kzalloc(lopt_size, GFP_KERNEL);
    if (lopt == NULL)
        return -ENOMEM;

    // Set max_qlen_log to log2(nr_table_entries), i.e. 2^max_qlen_log == nr_table_entries
    for (lopt->max_qlen_log = 3;
         (1 << lopt->max_qlen_log) < nr_table_entries;
         lopt->max_qlen_log++);

    // Generate a random value used when computing hash values for the listen_opt table
    get_random_bytes(&lopt->hash_rnd, sizeof(lopt->hash_rnd));

    // Initialize the lock
    rwlock_init(&queue->syn_wait_lock);

    // Initialize the accept queue as empty
    queue->rskq_accept_head = NULL;

    // Record the listen_opt table's bucket count in nr_table_entries
    lopt->nr_table_entries = nr_table_entries;

    // Publish listen_opt in the listening socket's connection request queue, i.e.
    // tcp_sock.inet_conn.icsk_accept_queue.listen_opt
    write_lock_bh(&queue->syn_wait_lock);
    queue->listen_opt = lopt;
    write_unlock_bh(&queue->syn_wait_lock);

    return 0;
}
```

As shown, the core job of reqsk_queue_alloc() is to create the SYN queue listen_opt.
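reqsk_queue_alloc() uses kzalloc() when listen_sock plus its bucket array fits in one page and __vmalloc() otherwise. The sketch below estimates which path a given bucket count takes. It assumes a 4 KiB PAGE_SIZE, and `struct listen_sock_hdr` is a hypothetical replica of the fixed fields of listen_sock, so the exact byte counts may differ from a real kernel build.

```c
#include <stddef.h>
#include <stdint.h>

#define ASSUMED_PAGE_SIZE 4096u  /* assumption: 4 KiB pages */

/* Hypothetical replica of listen_sock's fixed fields (not the exact kernel layout) */
struct listen_sock_hdr {
    uint8_t  max_qlen_log;
    int      qlen;
    int      qlen_young;
    int      clock_hand;
    uint32_t hash_rnd;
    uint32_t nr_table_entries;
};

enum alloc_path { USE_KZALLOC, USE_VMALLOC };

/* Header plus one pointer per bucket, like lopt_size in reqsk_queue_alloc() */
static size_t lopt_size_for(uint32_t nr_table_entries)
{
    return sizeof(struct listen_sock_hdr) + (size_t)nr_table_entries * sizeof(void *);
}

static enum alloc_path choose_allocator(uint32_t nr_table_entries)
{
    return lopt_size_for(nr_table_entries) > ASSUMED_PAGE_SIZE ? USE_VMALLOC : USE_KZALLOC;
}
```

Small tables (for example 16 buckets) stay on the kzalloc() path; with 1024 buckets the pointer array alone fills a whole 4 KiB page even with 4-byte pointers, so the header tips it over to __vmalloc().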

3.2.2 Registering the listening socket in TCP's global listening hash table (core)

```c
void inet_hash(struct sock *sk)
{
    // If the state is not TCP_CLOSE (as shown above, during listen() it is
    // TCP_LISTEN by this point), call __inet_hash()
    if (sk->sk_state != TCP_CLOSE) {
        local_bh_disable();
        __inet_hash(sk);
        local_bh_enable();
    }
}

static void __inet_hash(struct sock *sk)
{
    struct inet_hashinfo *hashinfo = sk->sk_prot->hashinfo;
    struct hlist_head *list;
    rwlock_t *lock;

    // For TCP in the listen() path the TCB state is always TCP_LISTEN,
    // so this branch is never taken
    if (sk->sk_state != TCP_LISTEN) {
        __inet_hash_nolisten(sk);
        return;
    }

    // Add this listening socket to TCP's global hash table listening_hash
    BUG_TRAP(sk_unhashed(sk));
    list = &hashinfo->listening_hash[inet_sk_listen_hashfn(sk)];
    lock = &hashinfo->lhash_lock;

    inet_listen_wlock(hashinfo);
    __sk_add_node(sk, list);
    // Also bump the protocol's in-use socket counter (used for statistics)
    sock_prot_inuse_add(sk->sk_prot, 1);
    write_unlock(lock);
    wake_up(&hashinfo->lhash_wait);
}
```
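__inet_hash() picks a bucket with inet_sk_listen_hashfn() and links the socket in with __sk_add_node(), which inserts at the head of the bucket's hlist. The user-space sketch below models just that insert and a matching lookup. `struct lsk`, `listen_hash_add()` and `listen_lookup()` are hypothetical names, the bucket function assumes the port is masked down to 32 buckets (following the "just local port number" comment in section 2), and the locking (lhash_lock, local_bh_disable()) is deliberately left out.

```c
#include <stddef.h>

#define LHTABLE_SIZE 32  /* mirrors INET_LHTABLE_SIZE */

struct lsk {
    unsigned short num;  /* local port, host byte order (like inet->num) */
    struct lsk *next;    /* stands in for the hlist node sk->sk_node */
};

struct listen_table {
    struct lsk *buckets[LHTABLE_SIZE];
};

static unsigned int lhashfn(unsigned short num)
{
    return num & (LHTABLE_SIZE - 1);
}

/* Insert at the head of the bucket, as __sk_add_node() does via hlist_add_head() */
static void listen_hash_add(struct listen_table *t, struct lsk *sk)
{
    struct lsk **head = &t->buckets[lhashfn(sk->num)];
    sk->next = *head;
    *head = sk;
}

/* Walk the bucket chain and compare the full port */
static struct lsk *listen_lookup(const struct listen_table *t, unsigned short port)
{
    for (struct lsk *sk = t->buckets[lhashfn(port)]; sk != NULL; sk = sk->next)
        if (sk->num == port)
            return sk;
    return NULL;
}
```

Adding listeners on ports 80 and then 8080 puts both in bucket 16 with the 8080 listener at the head; head insertion is O(1), and the lookup still finds each listener by comparing the full port.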