An exception is thrown when running Spark SQL:

    Exception in thread "main" java.lang.NoClassDefFoundError: org/codehaus/janino/InternalCompilerException
        at org.apache.spark.sql.catalyst.expressions.codegen.GenerateSafeProjection$.create(GenerateSafeProjection.scala:197)
        at org.apache.spark.sql.catalyst.expressions.codegen.GenerateSafeProjection$.create(GenerateSafeProjection.scala:36)
        at org.apache.spark.sql.catalyst.expressions.codegen.CodeGenerator.generate(CodeGenerator.scala:1321)
        at org.apache.spark.sql.Dataset.org$apache$spark$sql$Dataset$$collectFromPlan(Dataset.scala:3272)
        at org.apache.spark.sql.Dataset$$anonfun$head$1.apply(Dataset.scala:2484)
        at org.apache.spark.sql.Dataset$$anonfun$head$1.apply(Dataset.scala:2484)
        at org.apache.spark.sql.Dataset$$anonfun$52.apply(Dataset.scala:3254)
        at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:77)
        at org.apache.spark.sql.Dataset.withAction(Dataset.scala:3253)
        at org.apache.spark.sql.Dataset.head(Dataset.scala:2484)
        at org.apache.spark.sql.Dataset.take(Dataset.scala:2698)
        at org.apache.spark.sql.Dataset.showString(Dataset.scala:254)
        at org.apache.spark.sql.Dataset.show(Dataset.scala:723)
        at org.apache.spark.sql.Dataset.show(Dataset.scala:682)
        at org.apache.spark.sql.Dataset.show(Dataset.scala:691)
    Caused by: java.lang.ClassNotFoundException: org.codehaus.janino.InternalCompilerException
        at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
        ... 17 more

Solution:

1. The janino package is missing from the classpath. Add the dependency to the project's pom.xml:

    <dependency>
        <groupId>org.codehaus.janino</groupId>
        <artifactId>janino</artifactId>
        <version>3.0.8</version>
    </dependency>

2. If the error persists after adding the dependency, a jar conflict is the likely cause:

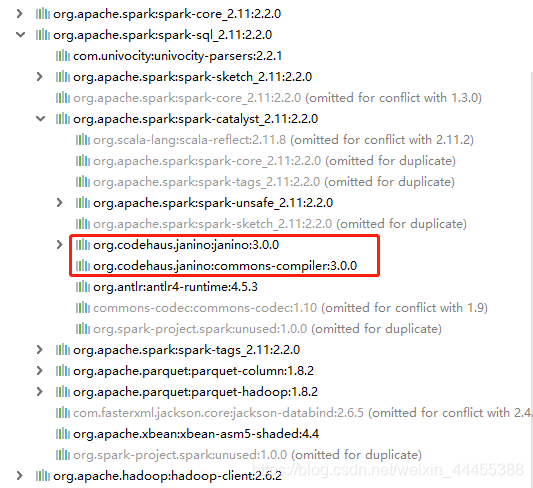

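As the screenshot above shows, the spark-core package I imported also contains janino, and the difference between the two versions caused the conflict.

The fix is to exclude janino from the Spark dependency that brings it in and then add janino explicitly. Adding an explicit dependency with the same version as the one Spark pulls in should also work; the point is that only one version ends up on the classpath:

Note: the janino excluded from Spark here is version 3.0.0, so the janino added separately must also be 3.0.0. Otherwise the version conflict remains.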

    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-sql_2.11</artifactId>
        <version>2.2.0</version>
        <exclusions>
            <exclusion>
                <groupId>org.codehaus.janino</groupId>
                <artifactId>janino</artifactId>
            </exclusion>
            <exclusion>
                <groupId>org.codehaus.janino</groupId>
                <artifactId>commons-compiler</artifactId>
            </exclusion>
        </exclusions>
    </dependency>
    <dependency>
        <groupId>org.codehaus.janino</groupId>
        <artifactId>janino</artifactId>
        <version>3.0.0</version>
    </dependency>
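After changing the pom, it can help to confirm which jar the class from the stack trace is actually loaded from. The following is only a minimal diagnostic sketch, not part of Spark or janino: the class name `JaninoCheck` and the method `locate` are made up for illustration, and it assumes you run it with the same classpath as your Spark application.

```java
import java.security.CodeSource;

public class JaninoCheck {

    /**
     * Hypothetical helper: reports where a class is loaded from,
     * or that it cannot be found on the current classpath.
     */
    public static String locate(String className) {
        try {
            // Load without initializing, using this class's own class loader.
            Class<?> cls = Class.forName(className, false, JaninoCheck.class.getClassLoader());
            CodeSource src = cls.getProtectionDomain().getCodeSource();
            // JDK classes loaded by the bootstrap loader have no code source.
            return src == null ? "bootstrap class loader" : src.getLocation().toString();
        } catch (ClassNotFoundException | LinkageError e) {
            return "NOT FOUND (" + e + ")";
        }
    }

    public static void main(String[] args) {
        // Default to the class named in the stack trace; other names can be passed as arguments.
        String[] names = args.length > 0
                ? args
                : new String[] {"org.codehaus.janino.InternalCompilerException"};
        for (String name : names) {
            System.out.println(name + " -> " + locate(name));
        }
    }
}
```

If the printed location is a janino jar with an unexpected version, or the class is reported as not found, the dependency declarations still need adjusting. Running `mvn dependency:tree` on the project shows which dependencies pull in each janino version.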