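LVS (Linux Virtual Server) is a layer-4 load balancer. Unlike a layer-7 proxy, it is not limited by the number of available ports, because packets never reach the application layer: IPVS works on the INPUT chain, where it intercepts packets addressed to a virtual service and forces them onto the forwarding path. Some sites report reaching about 4 million concurrent connections in tests. As with iptables, there is a kernel framework and a userspace tool: the framework is ipvs and the rule tool is ipvsadm.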

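Scheduling

- Static methods: schedule according to the algorithm alone, without looking at server load;

- RR: Round Robin;

- WRR: Weighted RR, weighted round robin;

- SH: Source Hashing, which hashes the source IP address to provide session stickiness: requests from the same client IP are always sent to the RS chosen the first time, which binds the session to that server;

- DH: Destination Hashing, which hashes the destination address: requests for the same destination are always forwarded to the RS chosen the first time. The typical use case is load balancing across forward-proxy caches;

- Dynamic methods (the commonly used ones): schedule according to each RS's current load together with the algorithm;

- LC: Least Connections, Overhead = activeconns*256 + inactiveconns. An active connection is counted as costing 256 times as much as an inactive one (a rough but reasonable estimate);

- WLC: Weighted LC, the default scheduler, Overhead = (activeconns*256 + inactiveconns)/weight;

- SED: Shortest Expected Delay, which ignores inactive connections, Overhead = (activeconns+1)*256/weight;

- NQ: Never Queue, a refinement of SED. With two servers weighted 1 and 10, SED sends roughly the first 10 connections to the weight-10 server while the other server handles none; NQ addresses this case by giving a new connection to an idle server first, if there is one.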
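
The overhead formulas above are easy to try out by hand. The following is a minimal sketch in bash, not the kernel's implementation: the function names are hypothetical, and awk is used only to get fractional results.

```shell
#!/bin/bash
# Hypothetical helpers that evaluate the overhead formulas from the list above.

# lc_overhead ACTIVE INACTIVE
lc_overhead() {
    echo $(( $1 * 256 + $2 ))
}

# wlc_overhead ACTIVE INACTIVE WEIGHT
wlc_overhead() {
    awk -v a="$1" -v i="$2" -v w="$3" 'BEGIN { printf "%.1f\n", (a * 256 + i) / w }'
}

# sed_overhead ACTIVE WEIGHT
sed_overhead() {
    awk -v a="$1" -v w="$2" 'BEGIN { printf "%.1f\n", (a + 1) * 256 / w }'
}

# SED with weights 1 and 10: the weight-10 server keeps the lower overhead
# until it already holds 9 active connections.
sed_overhead 0 1     # idle weight-1 server
sed_overhead 0 10    # idle weight-10 server
sed_overhead 9 10    # weight-10 server with 9 active connections
```

The scheduler picks the server with the smallest overhead, so comparing the printed values shows why the weight-1 server stays idle for the first several connections under SED.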

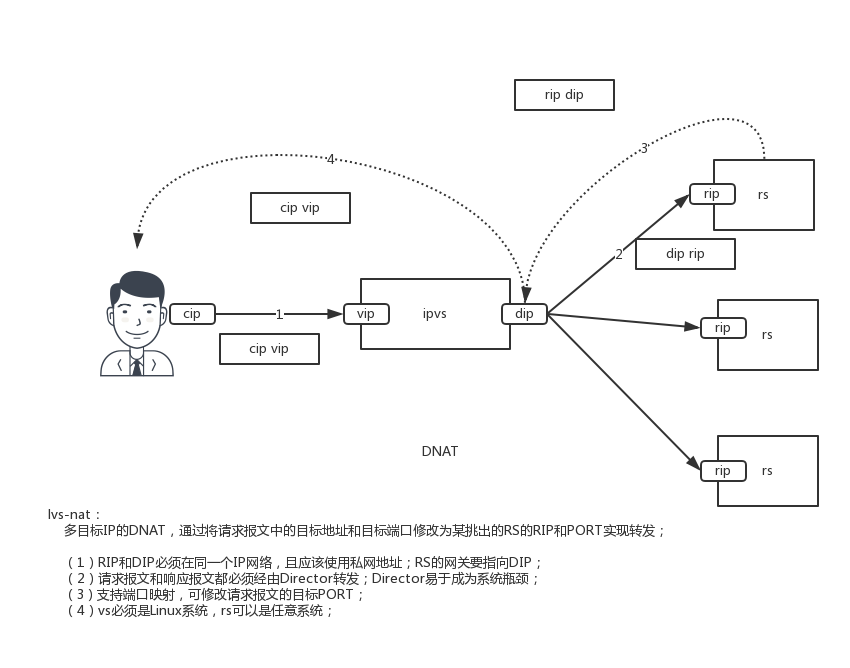

nat模式

工作流程图

Prerequisites:

VS (director): node1

RS (real servers): node2, node3, node4

node1:

172.16.86.249 # private network (DIP)

192.168.1.200 # public network (VIP)

node2:

172.16.86.250, gateway 172.16.86.249

node3:

172.16.86.248, gateway 172.16.86.249

node4:

172.16.86.251, gateway 172.16.86.249

1. Install ipvsadm on the VS

[root@node1 ~]# yum install ipvsadm

2. Service management

# Add

# ipvsadm -A|E -t|u|f service-address [-s scheduler] [-p [timeout]]

# -t: TCP service, VIP:TCP_PORT

# -u: UDP service, VIP:UDP_PORT

# -f: firewall mark, a number

#______________________________________________________________________________

[root@node1 ~]# ipvsadm -A -t 192.168.1.200:80 -s rr

# Modify

[root@node1 ~]# ipvsadm -E -t 192.168.1.200:80 -s wrr

# Delete

[root@node1 ~]# ipvsadm -D -t 192.168.1.200:80

3. Real server management

# Add / modify

# ipvsadm -a|e -t|u|f service-address -r server-address [-g|i|m] [-w weight]

# LVS forwarding type:

# -g: gateway, DR type (the default)

# -i: ipip, TUN type

# -m: masquerade, NAT type

# -w weight: the server's weight

#______________________________________________________________________________

[root@node1 ~]# ipvsadm -a -t 192.168.1.200:80 -r 172.16.86.250 -m # a port may be appended to the RS IP; by default the service port is mapped to the same port on the RS

[root@node1 ~]# ipvsadm -a -t 192.168.1.200:80 -r 172.16.86.248 -m

[root@node1 ~]# ipvsadm -a -t 192.168.1.200:80 -r 172.16.86.251 -m

# Delete:

# ipvsadm -d -t|u|f service-address -r server-address

[root@node1 ~]# ipvsadm -d -t 192.168.1.200:80 -r 172.16.86.251 # example only: the listings below still include 172.16.86.251

3. Viewing the rules

[root@node1 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn (active connections) InActConn (inactive connections)

TCP 192.168.1.200:80 wlc # wlc is the default scheduler

-> 172.16.86.248:80 Masq 1 0 0

-> 172.16.86.250:80 Masq 1 0 0

-> 172.16.86.251:80 Masq 1 0 0
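
For a quick overview of which real servers back each service, the listing can be condensed with awk. This is a minimal sketch: `summarize_ipvs` is a hypothetical helper name, and it assumes the `ipvsadm -Ln` layout shown above.

```shell
#!/bin/bash
# summarize_ipvs: read `ipvsadm -Ln` output on stdin and print one line per
# real server in the form "service realserver forward weight".
summarize_ipvs() {
    awk '
        # A service line starts with the protocol; remember its address.
        $1 == "TCP" || $1 == "UDP" { svc = $2; next }
        # A real-server line starts with "->"; skip the column header.
        $1 == "->" && $2 != "RemoteAddress:Port" { print svc, $2, $3, $4 }
    '
}
```

On the director it would be used as `ipvsadm -Ln | summarize_ipvs` (root is needed to read the IPVS table).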

[root@node1 ~]# ipvsadm -ln --stats

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Conns (connections) InPkts (inbound packets) OutPkts (outbound packets) InBytes (inbound bytes) OutBytes (outbound bytes)

-> RemoteAddress:Port

TCP 192.168.1.200:80 282989 1708574 1419365 115244K 141336K

-> 172.16.86.248:80 169787 1026019 852945 69195245 84705145

-> 172.16.86.250:80 56599 341318 281848 23003003 28178841

-> 172.16.86.251:80 56603 341237 284572 23046538 28452109

[root@node1 ~]# watch -n.1 'ipvsadm -Ln --rate'

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port CPS (connections per second) InPPS (inbound packets per second) OutPPS InBPS (inbound bytes per second) OutBPS

-> RemoteAddress:Port

TCP 192.168.1.200:80 1699 10176 8482 686755 850122

-> 172.16.86.248:80 1019 6106 5089 412064 508979

-> 172.16.86.250:80 340 2035 1696 137359 170579

-> 172.16.86.251:80 340 2035 1696 137332 170564

4. Test the rr (round robin) scheduler first

[root@node1 ~]# ipvsadm -E -t 192.168.1.200:80 -s rr

[root@node1 ~]# curl http://192.168.1.200/

node4

[root@node1 ~]# curl http://192.168.1.200/

node3

[root@node1 ~]# curl http://192.168.1.200/

node2

wrr (weighted round robin)

[root@node1 ~]# ipvsadm -E -t 192.168.1.200:80 -s wrr

[root@node1 ~]# ipvsadm -e -t 192.168.1.200:80 -r 172.16.86.248 -m -w 3

[root@node1 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.200:80 wrr

-> 172.16.86.248:80 Masq 3 0 1 #node3

-> 172.16.86.250:80 Masq 1 0 1 #node2

-> 172.16.86.251:80 Masq 1 0 3 #node4

[root@node1 ~]# curl http://192.168.1.200/

node4

[root@node1 ~]# curl http://192.168.1.200/

node3

[root@node1 ~]# curl http://192.168.1.200/

node2

[root@node1 ~]# curl http://192.168.1.200/

node3

[root@node1 ~]# curl http://192.168.1.200/

node3

5. Save the rules

# Inspect the systemd unit file shipped in the RPM package

[root@node1 ~]# cat /usr/lib/systemd/system/ipvsadm.service

[Unit]

Description=Initialise the Linux Virtual Server

After=syslog.target network.target

[Service]

Type=oneshot

ExecStart=/bin/bash -c "exec /sbin/ipvsadm-restore < /etc/sysconfig/ipvsadm"

ExecStop=/bin/bash -c "exec /sbin/ipvsadm-save -n > /etc/sysconfig/ipvsadm"

ExecStop=/sbin/ipvsadm -C

RemainAfterExit=yes

[Install]

WantedBy=multi-user.target

# Save the rules to the configuration file

[root@node1 ~]# ipvsadm -S -n > /etc/sysconfig/ipvsadm

# Clear

[root@node1 ~]# ipvsadm -C

[root@node1 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

# Reload

[root@node1 ~]# ipvsadm -R < /etc/sysconfig/ipvsadm

[root@node1 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.200:80 wrr

-> 172.16.86.248:80 Masq 3 0 0

-> 172.16.86.250:80 Masq 1 0 0

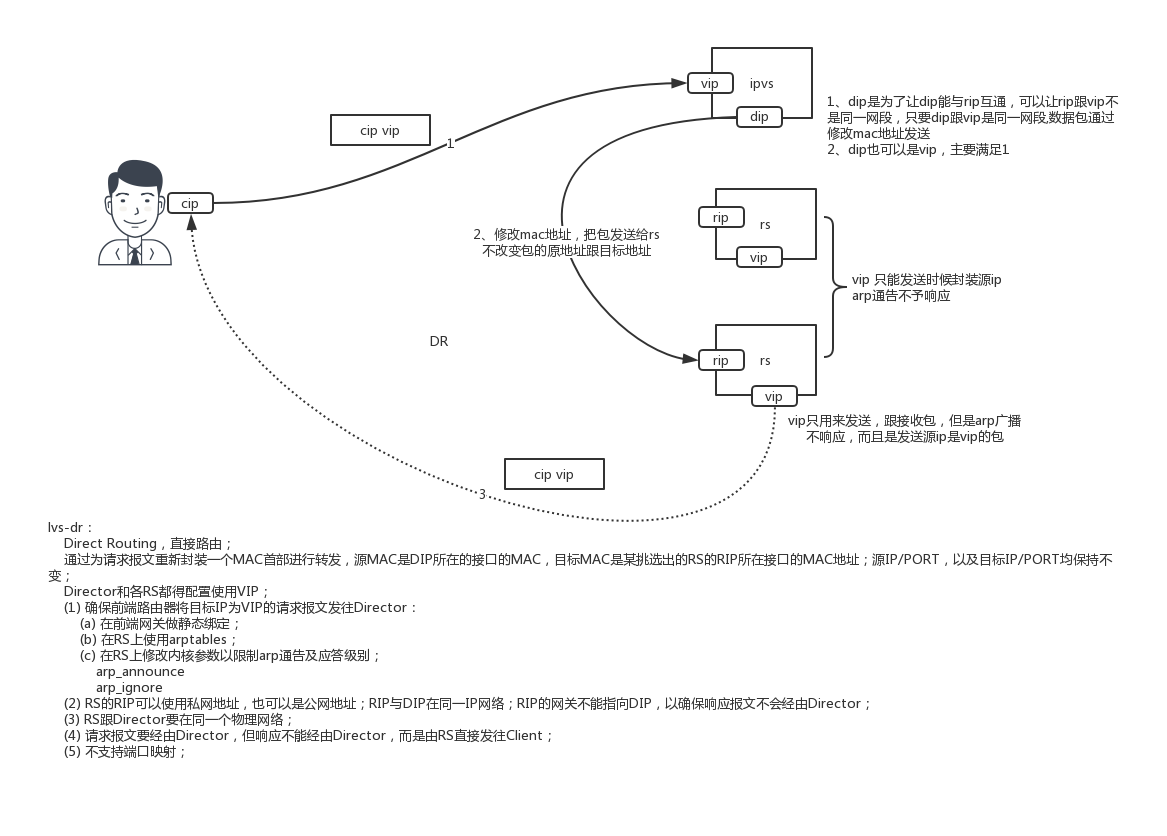

-> 172.16.86.251:80 Masq 1 0 0dr模型

工作流程图

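Restricting ARP replies: arp_ignore

0: the default; reply for any local address configured on any interface;

1: reply only if the requested target IP is configured on the interface that received the request;

Restricting ARP announcements: arp_announce

0: the default; announce any local address on any interface to the network behind every interface;

1: try to avoid announcing an address to networks it is not directly attached to;

2: always avoid announcing an address to networks other than its own.

Of these two parameters, arp_ignore is the reply setting: it keeps the RS from answering ARP requests for the VIP. arp_announce is the announcement setting: it keeps the RS from advertising the VIP as the source address in its own ARP requests.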
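
The two ARP parameters can also be set with sysctl rather than by writing to /proc directly. A minimal sketch, assuming it is run as root on each RS and that the values 1 and 2 are the ones wanted for a DR setup:

```shell
#!/bin/bash
# Reply to ARP only for addresses configured on the receiving interface.
sysctl -w net.ipv4.conf.all.arp_ignore=1
sysctl -w net.ipv4.conf.lo.arp_ignore=1

# Avoid using the VIP as the source address in outgoing ARP requests.
sysctl -w net.ipv4.conf.all.arp_announce=2
sysctl -w net.ipv4.conf.lo.arp_announce=2

# Read the values back to confirm them.
sysctl net.ipv4.conf.all.arp_ignore net.ipv4.conf.all.arp_announce
```

Settings made this way are lost on reboot unless they are also written to a sysctl configuration file.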

Lab setup:

node1: DIP 192.168.1.200, VIP 192.168.1.205 (VS)

node2: RIP 192.168.1.201, VIP 192.168.1.205 (RS)

node3: RIP 192.168.1.202, VIP 192.168.1.205 (RS)

Configure the VIP on node1 (if the VIP is the same as the DIP, do not set the broadcast address below to the address itself)

[root@node1 ~]# ip addr add 192.168.1.205/32 broadcast 192.168.1.205 dev ens34:0

[root@node1 ~]# ip addr delete 192.168.1.205/32 broadcast 192.168.1.205 dev ens34:0

RS configuration (node2, node3)

#!/bin/bash

#

vip=192.168.1.205

mask='255.255.255.255'

case $1 in

start)

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

ip addr add $vip/32 broadcast $vip dev lo:0

# Replies to traffic addressed to the VIP must leave via lo:0, so lo:0 is what sends them; in effect this makes the VIP the source IP

ip route add $vip dev lo:0

;;

stop)

ip addr del $vip/32 dev lo:0

ip route delete $vip dev lo:0

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

;;

*)

echo "Usage $(basename $0) start|stop"

exit 1

;;

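esac

VS configuration:

[root@node1 ~]# ipvsadm -A -t 192.168.1.205:80 -s rr

# Adding the real servers here has a second meaning: it tells the director that each RS also holds the VIP, so the director only has to pass the packet on to it

[root@node1 ~]# ipvsadm -a -t 192.168.1.205:80 -r 192.168.1.201 -g

[root@node1 ~]# ipvsadm -a -t 192.168.1.205:80 -r 192.168.1.202 -g

Check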

[root@node1 ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.205:80 rr

-> 192.168.1.201:80 Route 1 0 0 # Route = direct routing (DR)

-> 192.168.1.202:80 Route 1 0 0

Test

marvindeMacBook-Pro:~ marvin$ curl http://192.168.1.205/

node3

marvindeMacBook-Pro:~ marvin$ curl http://192.168.1.205/

node2

marvindeMacBook-Pro:~ marvin$ curl http://192.168.1.205/

node3

marvindeMacBook-Pro:~ marvin$ curl http://192.168.1.205/

node2

Packet capture analysis

[root@node1 ~]# tcpdump -i any -nn port 80

# The response is sent directly by the RS node

[root@node2 ~]# tcpdump -i any -nn port 80

10:18:54.367851 IP 192.168.1.205.80 > 192.168.1.104.55631: Flags [P.], seq 1:235, ack 78, win 227, options [nop,nop,TS val 352696164 ecr 945230025], length 234: HTTP: HTTP/1.1 200 OK

VS configuration script

#!/bin/bash

vip='192.168.1.205'

iface='ens34:0'

mask='255.255.255.255'

port='80'

rs1='192.168.1.201'

rs2='192.168.1.202'

scheduler='wrr'

type='-g'

case $1 in

start)

ip addr add $vip/32 broadcast $vip dev $iface

iptables -F

ipvsadm -A -t ${vip}:${port} -s $scheduler

ipvsadm -a -t ${vip}:${port} -r ${rs1} $type -w 1

ipvsadm -a -t ${vip}:${port} -r ${rs2} $type -w 1

;;

stop)

ipvsadm -C

ip addr delete $vip/32 broadcast $vip dev $iface

;;

*)

echo "Usage $(basename $0) start|stop"

exit 1

;;

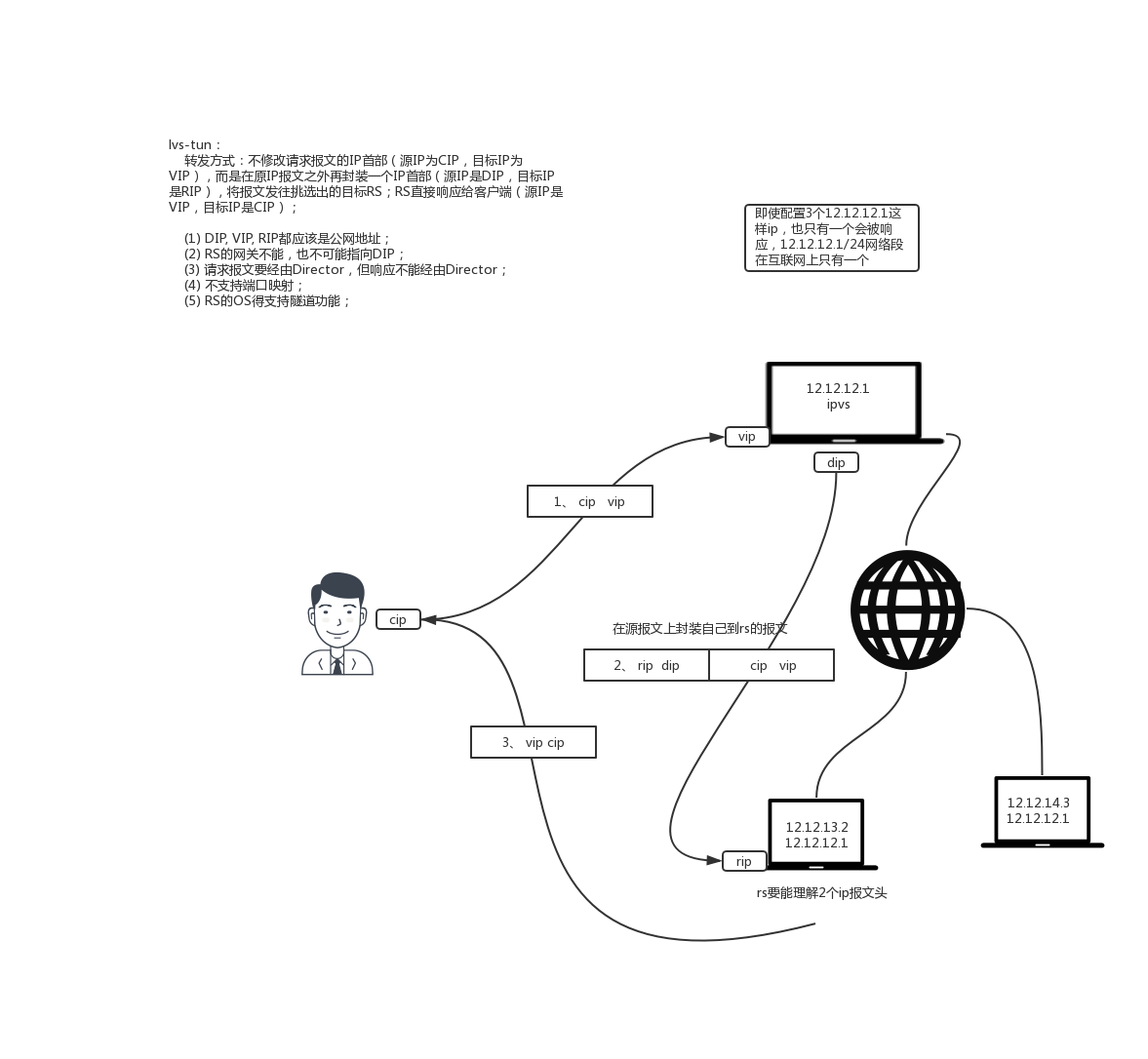

esactun模型

工作流程图

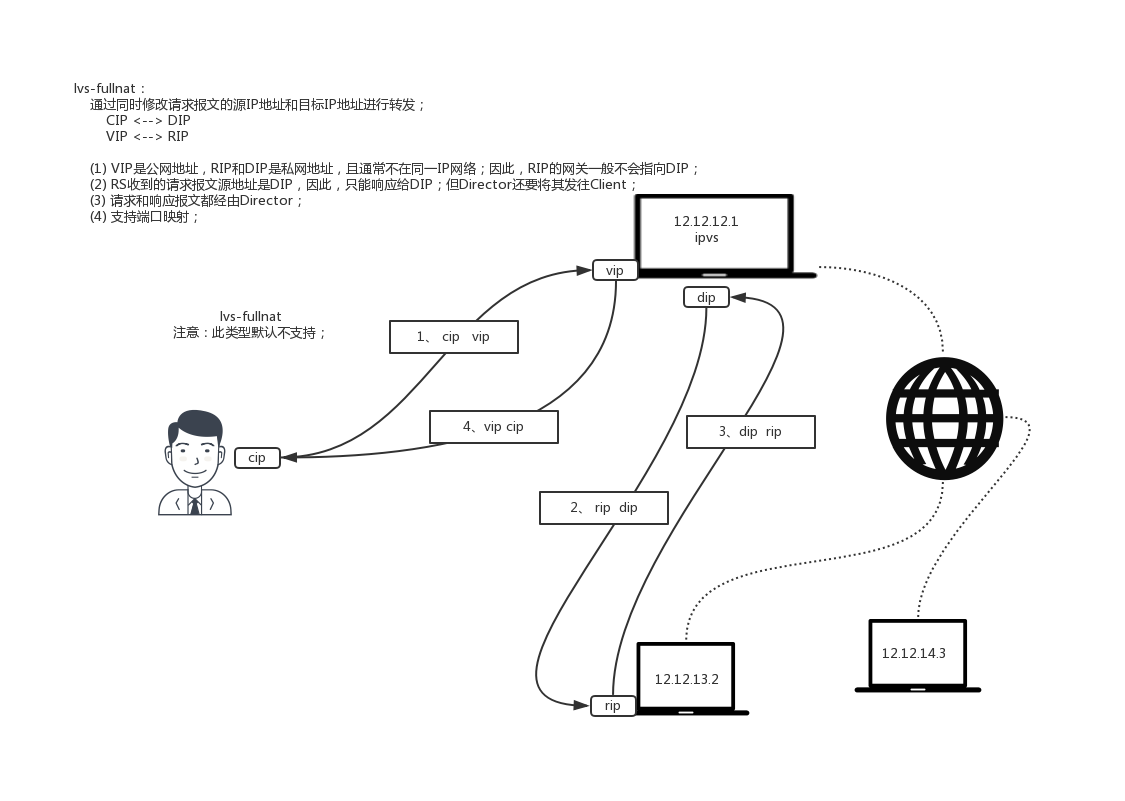

fullnat模型

工作流程图

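Marking multiple services as a single service

iptables -t mangle -A PREROUTING -d 192.168.1.200 -p tcp -m multiport --dports 80,443 -j MARK --set-mark 3 # the mark value 3 is arbitrary

iptables -t mangle -vnL

ipvsadm -A -f 3 -s sh # define one service for packets carrying mark 3

ipvsadm -a -f 3 -r 192.168.1.201 -g

ipvsadm -a -f 3 -r 192.168.1.202 -g

Persistent connections

-p enables persistent connections

Per-port persistence: each port is defined as its own cluster service, and each cluster service is scheduled independently;

ipvsadm -A -t 192.168.1.200:80 -s rr -p

Per-firewall-mark persistence: the cluster service is defined on a firewall mark, so applications on several ports are scheduled together, the so-called port affinity;

ipvsadm -A -f 3 -s rr -p

Per-client persistence: the cluster service is defined on port 0, so all of a client's requests, for any application, are sent to the same backend host; such a service must be defined as persistent;

ipvsadm -A -t 192.168.1.200:0 -s rr -p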

ipvsadm -a -t 192.168.1.200:0 -r 192.168.1.201 -g

ipvsadm -a -t 192.168.1.200:0 -r 192.168.1.202 -g
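
To see persistence at work, the timeout can be given explicitly and the connection table inspected afterwards. A minimal sketch, assuming root on the director; the 120-second timeout and the port-80 service are illustrative choices, not values from the lab above:

```shell
#!/bin/bash
# Define a persistent service with an explicit 120-second timeout (assumed value).
ipvsadm -A -t 192.168.1.200:80 -s rr -p 120
ipvsadm -a -t 192.168.1.200:80 -r 192.168.1.201 -g
ipvsadm -a -t 192.168.1.200:80 -r 192.168.1.202 -g

# The Flags column of the listing should now show the persistence timeout.
ipvsadm -Ln

# After a few requests from one client, list the connection table to see
# which real server that client is bound to.
ipvsadm -Lnc
```

With rr and persistence enabled, repeated requests from the same client IP should reach the same real server until the timeout expires.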

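Monitoring with ldirectord

What ldirectord does: it checks whether each RS is still healthy. If an RS has failed, it removes it (the equivalent of an ipvsadm delete); once the RS has been repaired, it adds it back (the equivalent of an ipvsadm add).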
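
The check-then-update idea can be sketched in a few lines of bash. This is not ldirectord's actual code: `decide_action` and `check_once` are hypothetical names, the probe is a plain HTTP request with curl, and DR forwarding (`-g`) is assumed.

```shell
#!/bin/bash
# decide_action PRESENT HEALTHY: print add, delete, or none.
# PRESENT says whether the RS is currently in the IPVS table (yes/no),
# HEALTHY says whether the last probe succeeded (yes/no).
decide_action() {
    local present=$1 healthy=$2
    if [ "$healthy" = yes ] && [ "$present" = no ]; then
        echo add
    elif [ "$healthy" = no ] && [ "$present" = yes ]; then
        echo delete
    else
        echo none
    fi
}

# check_once SERVICE RS: probe one RS and update the IPVS table (needs root).
check_once() {
    local service=$1 rs=$2 present=no healthy=no
    ipvsadm -Ln -t "$service" 2>/dev/null | grep -qF -- "-> $rs:" && present=yes
    curl -sf -o /dev/null --max-time 3 "http://$rs/" && healthy=yes
    case $(decide_action "$present" "$healthy") in
        add)    ipvsadm -a -t "$service" -r "$rs" -g ;;
        delete) ipvsadm -d -t "$service" -r "$rs" ;;
    esac
}
```

A loop such as `while sleep 1; do check_once 192.168.1.205:80 192.168.1.201; done` would then approximate a one-second check interval for a single RS. ldirectord additionally handles fallback servers, negotiated checks, and configuration reloads.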

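Configuration file explained

checktimeout=3 # check timeout, in seconds

checkinterval=1 # check once per second; a longer interval reduces load

fallback=127.0.0.1:80 # if every real server is down, this host serves the requests

autoreload=yes # reload the configuration automatically when the file changes

logfile="/var/log/ldirectord.log"

quiescent=no

virtual=5 # a number here is a firewall mark; use ip:port for an ordinary service

    real=172.16.0.7:80 gate 2

    real=172.16.0.8:80 gate 1

    fallback=127.0.0.1:80 gate # used when all real servers are down

    service=http # check using the HTTP protocol; with this option turned off, a layer-4 probe is used

    scheduler=wrr

    checktype=negotiate # negotiate: send a request and check the reply instead of a one-shot connect

    checkport=80

    request="index.html"

    receive="CentOS" # index.html must contain the string CentOS

Installation and configuration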

[root@node1 packages]# wget ftp://ftp.pbone.net/mirror/ftp5.gwdg.de/pub/opensuse/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-6/x86_64/ldirectord-3.9.5-3.1.x86_64.rpm

[root@node1 packages]# yum install ldirectord-3.9.5-3.1.x86_64.rpm

[root@node1 packages]# rpm -ql ldirectord

/etc/ha.d

/etc/ha.d/resource.d

/etc/ha.d/resource.d/ldirectord

/etc/init.d/ldirectord

/etc/logrotate.d/ldirectord

/usr/lib/ocf/resource.d/heartbeat/ldirectord

/usr/sbin/ldirectord

/usr/share/doc/ldirectord-3.9.5

/usr/share/doc/ldirectord-3.9.5/COPYING

/usr/share/doc/ldirectord-3.9.5/ldirectord.cf

/usr/share/man/man8/ldirectord.8.gz

[root@node1 packages]# cp /usr/share/doc/ldirectord-3.9.5/ldirectord.cf /etc/ha.d/

[root@node1 packages]# vim /etc/ha.d/ldirectord.cf

# Global Directives

checktimeout=3

checkinterval=1

#fallback=127.0.0.1:80

#fallback6=[::1]:80

autoreload=yes

logfile="/var/log/ldirectord.log"

#logfile="local0"

#emailalert="[email protected]"

#emailalertfreq=3600

#emailalertstatus=all

quiescent=no

virtual=192.168.1.205:80

    real=192.168.1.201:80 gate

    real=192.168.1.202:80 gate

    fallback=127.0.0.1:80 gate

    # service=http

    scheduler=rr

    #persistent=600

    #netmask=255.255.255.255

    protocol=tcp

    checktype=negotiate

    checkport=80

    # request="index.html"

    # receive="Test Page"

    # virtualhost=www.x.y.z

Start the service

[root@node1 packages]# /etc/init.d/ldirectord start

Starting ldirectord (via systemctl): [ OK ]

[root@node1 packages]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.205:80 rr

-> 192.168.1.201:80 Route 1 0 0

-> 192.168.1.202:80 Route 1 0 0

marvindeMacBook-Pro:~ marvin$ curl http://192.168.1.205/

node3

marvindeMacBook-Pro:~ marvin$ curl http://192.168.1.205/

node2

Stop one node

[root@node3 ~]# systemctl stop mynginx

[root@node1 packages]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.205:80 rr

-> 192.168.1.201:80 Route 1 0 6

marvindeMacBook-Pro:~ marvin$ curl http://192.168.1.205/

node2

marvindeMacBook-Pro:~ marvin$ curl http://192.168.1.205/

node2

Stop all of them: the local fallback service takes over automatically

[root@node1 packages]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.205:80 rr

-> 127.0.0.1:80 Route 1 0 0

marvindeMacBook-Pro:~ marvin$ curl http://192.168.1.205/

sorry

marvindeMacBook-Pro:~ marvin$ curl http://192.168.1.205/

sorry

Bring one server back and service returns to normal

[root@node2 ~]# systemctl start mynginx

[root@node1 packages]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.205:80 rr

-> 192.168.1.201:80 Route 1 0 0