THE MNIST DATABASE of handwritten digits (click through to download the 4 data files)

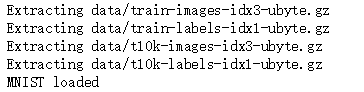

Load the dataset:

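```python
# This code uses the TensorFlow 1.x graph API (tf.placeholder, tf.Session)
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt
# input_data: assumed to be a local copy of input_data.py from the
# TensorFlow 1.x MNIST tutorial
import input_data
import warnings
warnings.filterwarnings("ignore")

# one_hot=True: encode each label as a one-hot vector
mnist = input_data.read_data_sets('data/', one_hot=True)
trainimg = mnist.train.images
trainlabel = mnist.train.labels
testimg = mnist.test.images
testlabel = mnist.test.labels
print ("MNIST loaded")
```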

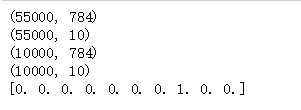

```python
print (trainimg.shape)
print (trainlabel.shape)
print (testimg.shape)
print (testlabel.shape)
#print (trainimg)
print (trainlabel[0])
```
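Because of `one_hot=True`, each digit label is stored as a 10-dimensional vector with a single 1 at the digit's index, which is why `trainlabel[0]` prints a vector rather than a number. A minimal NumPy sketch of that encoding, independent of `input_data` (the helper `to_one_hot` is a hypothetical name used only for illustration):

```python
import numpy as np

def to_one_hot(labels, num_classes=10):
    """Map integer class labels to one-hot row vectors (hypothetical helper)."""
    labels = np.asarray(labels, dtype=np.int64)
    # Row k of the identity matrix is the one-hot vector for class k
    return np.eye(num_classes, dtype=np.float32)[labels]

digits = np.array([7, 2, 1])
encoded = to_one_hot(digits)
print(encoded.shape)           # (3, 10)
print(encoded[0])              # [0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]
# argmax recovers the original label, which is what the evaluation step relies on
print(encoded.argmax(axis=1))  # [7 2 1]
```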

Building the model:

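```python
# None leaves the number of samples (the batch size) unspecified;
# 784 is the dimension (number of columns) of one sample: 28x28 pixels
x = tf.placeholder("float", [None, 784])
y = tf.placeholder("float", [None, 10]) # None: any number of samples
# One weight per input dimension (784) for each of the 10 classes
W = tf.Variable(tf.zeros([784, 10]))
# This is a 10-class problem, so 10 bias terms b are enough
b = tf.Variable(tf.zeros([10]))
# Model hypothesis:
# logistic regression is a binary classifier, so generalize it to
# softmax (multinomial) classification:
# scores = x*W + b, actv = softmax(scores)
actv = tf.nn.softmax(tf.matmul(x, W) + b)
```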
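The graph above computes the scores `x*W + b` and turns each row into a probability distribution with softmax. A NumPy sketch of the same forward pass, with random inputs standing in for MNIST images (the shapes mirror the placeholders; the data are made up for illustration):

```python
import numpy as np

def softmax(z):
    # Subtract each row's max for numerical stability; the result is unchanged
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
x = rng.random((4, 784))        # 4 fake "images", 784 pixels each
W = np.zeros((784, 10))         # same zero initialization as tf.zeros([784, 10])
b = np.zeros(10)

actv = softmax(x @ W + b)       # shape (4, 10); each row sums to 1
print(actv.shape)               # (4, 10)
print(actv[0])                  # every entry is 0.1 while W and b are zero
```

With zero weights every class starts with probability 0.1. For this single-layer model zero initialization is fine, because the softmax cross-entropy loss is convex in `W` and `b`.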

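```python
# In tf.reduce_sum and similar functions, reduction_indices (the older,
# deprecated name for axis) specifies the dimension to reduce along.
# If reduction_indices is omitted it defaults to None, and input_tensor is
# reduced to 0 dimensions, i.e. a single number.
# Loss: for logistic regression it is -log P. Multiplying tf.log(actv) by a
# one-hot label such as [0. 0. 0. 0. 0. 0. 0. 1. 0. 0.] keeps only the log
# probability of the true class; summing over axis 1 gives each sample's loss,
# and reduce_mean averages those losses over the batch
cost = tf.reduce_mean(-tf.reduce_sum(y*tf.log(actv), reduction_indices=1))
```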
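To see what `y*tf.log(actv)` and `reduction_indices=1` do, here is the same computation in NumPy on two hand-picked samples, with `axis=1` playing the role of `reduction_indices=1` (the probabilities are illustrative values, not model output, and only 3 classes are used for brevity):

```python
import numpy as np

actv = np.array([[0.7, 0.2, 0.1],
                 [0.1, 0.1, 0.8]])
y = np.array([[1.0, 0.0, 0.0],    # true class 0
              [0.0, 0.0, 1.0]])   # true class 2

# The one-hot y zeroes out every term except log(p_true)
per_sample = -np.sum(y * np.log(actv), axis=1)   # like reduction_indices=1
print(per_sample)              # -log(0.7) and -log(0.8), about 0.357 and 0.223
cost = np.mean(per_sample)     # like tf.reduce_mean over the batch
print(round(cost, 4))          # 0.2899

# Without an axis the sum collapses everything into a 0-d scalar
print(np.sum(y * np.log(actv)).shape)   # ()
```

One caveat with writing the loss this way: if a softmax output underflows to exactly 0, `tf.log` returns `-inf` and `0 * -inf` gives NaN. TensorFlow's fused `tf.nn.softmax_cross_entropy_with_logits`, which takes the raw scores instead of `actv`, is the numerically safer alternative.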

```python
# Minimize the loss with gradient descent
learning_rate = 0.01
optm = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost)
```

Model evaluation:

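```python
# Check whether the predicted label (argmax of actv) equals the true label (argmax of y)
pred = tf.equal(tf.argmax(actv, 1), tf.argmax(y, 1))
# tf.cast turns the boolean pred into float (True -> 1, False -> 0);
# its mean is the accuracy
accr = tf.reduce_mean(tf.cast(pred, "float"))
```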
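The accuracy computation boils down to "compare the argmaxes, cast to 0/1, take the mean". A NumPy equivalent on made-up predictions for four samples:

```python
import numpy as np

actv = np.array([[0.1, 0.8, 0.1],   # predicted class 1
                 [0.6, 0.3, 0.1],   # predicted class 0
                 [0.2, 0.2, 0.6],   # predicted class 2
                 [0.5, 0.4, 0.1]])  # predicted class 0
y = np.array([[0, 1, 0],            # true class 1
              [0, 1, 0],            # true class 1
              [0, 0, 1],            # true class 2
              [1, 0, 0]])           # true class 0

pred = np.equal(np.argmax(actv, 1), np.argmax(y, 1))  # [True False True True]
accr = np.mean(pred.astype(np.float32))               # True -> 1.0, False -> 0.0
print(accr)  # 0.75
```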

```python
# Initialize all variables
init = tf.global_variables_initializer()
```

Training the model:

```python
# Iterate over the whole training set 50 times (epochs)
training_epochs = 50
# 100 samples per mini-batch
batch_size = 100
display_step = 5
# SESSION
sess = tf.Session()
sess.run(init)
# MINI-BATCH LEARNING
for epoch in range(training_epochs):
    # Reset the average loss for this epoch
    avg_cost = 0.
    # Number of 100-sample batches in one epoch
    num_batch = int(mnist.train.num_examples/batch_size)
    for i in range(num_batch):
        # Fetch the next mini-batch
        batch_xs, batch_ys = mnist.train.next_batch(batch_size)
        # One gradient-descent step (optm) on this batch
        sess.run(optm, feed_dict={x: batch_xs, y: batch_ys})
        # Loss with the updated weights, accumulated into the epoch average
        feeds = {x: batch_xs, y: batch_ys}
        avg_cost += sess.run(cost, feed_dict=feeds)/num_batch
    # Report progress every 5 epochs
    if epoch % display_step == 0:
        # Note: train_acc is measured on the last mini-batch only
        feeds_train = {x: batch_xs, y: batch_ys}
        feeds_test = {x: mnist.test.images, y: mnist.test.labels}
        train_acc = sess.run(accr, feed_dict=feeds_train)
        test_acc = sess.run(accr, feed_dict=feeds_test)
        print ("Epoch: %03d/%03d cost: %.9f train_acc: %.3f test_acc: %.3f"
               % (epoch, training_epochs, avg_cost, train_acc, test_acc))
print ("DONE")
```

As you can see, the results are fairly good: the test-set accuracy reaches 0.978.
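The `GradientDescentOptimizer(...).minimize(cost)` call hides the actual update rule used in the training loop. For softmax with cross-entropy, the gradient of the mean loss with respect to the scores is `(actv - y) / batch_size`, so each step moves `W` and `b` against that gradient. The sketch below repeats the epoch / mini-batch structure by hand in NumPy on a small synthetic 3-class problem. The Gaussian-blob data, the sizes and the learning rate are all assumptions chosen for illustration, not MNIST settings:

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(42)
num_classes, dim, n = 3, 2, 300
# Synthetic data (assumption): three Gaussian blobs around different centers
centers = np.array([[0.0, 3.0], [3.0, -3.0], [-3.0, -3.0]])
labels = rng.integers(0, num_classes, size=n)
X = centers[labels] + rng.normal(size=(n, dim))
Y = np.eye(num_classes)[labels]               # one-hot targets

W = np.zeros((dim, num_classes))
b = np.zeros(num_classes)
learning_rate, training_epochs, batch_size = 0.1, 20, 30
num_batch = n // batch_size

for epoch in range(training_epochs):
    order = rng.permutation(n)                # reshuffle the samples each epoch
    avg_cost = 0.0
    for i in range(num_batch):
        idx = order[i * batch_size:(i + 1) * batch_size]
        xb, yb = X[idx], Y[idx]
        actv = softmax(xb @ W + b)
        # Loss recorded from this forward pass, i.e. before the update
        # (the TF loop re-runs cost after the update instead)
        avg_cost += -np.mean(np.sum(yb * np.log(actv), axis=1)) / num_batch
        # Gradient of the mean cross-entropy w.r.t. the scores
        grad_z = (actv - yb) / batch_size
        # Plain gradient-descent step on W and b
        W -= learning_rate * xb.T @ grad_z
        b -= learning_rate * grad_z.sum(axis=0)

accuracy = np.mean(np.argmax(softmax(X @ W + b), 1) == labels)
print("final avg_cost %.4f, train accuracy %.3f" % (avg_cost, accuracy))
```

Here the shuffling is done explicitly with `rng.permutation`, whereas the TensorFlow loop delegates batching to `mnist.train.next_batch`.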