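1 Environment overview and configuration

1.1 Introduction to Ceph

#Ceph architecture

#Ceph supports three kinds of interfaces:

1 Object: has a native API and is also compatible with the Swift and S3 APIs.

2 Block: supports thin provisioning, snapshots, and clones.

3 File: POSIX interface, with snapshot support.

#Pros and cons of the three Ceph storage types:

1.2 Environment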

[root@ceph131 ~]# cat /etc/redhat-release

CentOS Linux release 7.8.2003 (Core)

#ceph

nautilus 14.2.10-0.el7

#Network design

172.16.1.0/24 #Management Network (optional)

172.16.2.0/24 #Public Network

172.16.3.0/24 #Cluster Network

#Each Ceph node has 1 CPU and 2G of RAM (1u2g) and, besides the system disk, one additional 32G disk

ceph131 eth0:172.16.1.131 eth1:172.16.2.131 eth2:172.16.3.131 1u2g

ceph132 eth0:172.16.1.132 eth1:172.16.2.132 eth2:172.16.3.132 1u2g

ceph133 eth0:172.16.1.133 eth1:172.16.2.133 eth2:172.16.3.133 1u2g

1.3 Preparing the base environment

1.3.1 Disable SELinux and the firewall

#Disable the firewall

systemctl stop firewalld.service

systemctl disable firewalld.service

firewall-cmd --state

#Disable SELinux

sed -i '/^SELINUX=.*/c SELINUX=disabled' /etc/selinux/config

#Leave SELINUXTYPE unchanged: "disabled" is not a valid policy type (valid values are targeted, minimum, mls)

grep --color=auto '^SELINUX' /etc/selinux/config

setenforce 0

reboot
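After the reboot it is worth confirming that the persistent setting really says `disabled`. The helper below is a minimal sketch, not part of the original procedure; the function name `selinux_config_mode` is made up for illustration, and it only reads the `SELINUX=` line of the file it is given.

```shell
# Print the SELINUX= mode stored in a config file.
# $1 = path to the file (defaults to /etc/selinux/config).
# The name selinux_config_mode is hypothetical, chosen for this sketch.
selinux_config_mode() {
    # "^SELINUX=" does not match the SELINUXTYPE= line, so only the mode is read.
    sed -n 's/^SELINUX=\([a-z]*\).*/\1/p' "${1:-/etc/selinux/config}" | tail -n 1
}

# Usage on a node:
#   [ "$(selinux_config_mode)" = "disabled" ] || echo "SELinux still enabled in config" >&2
```

`getenforce` reports the runtime state; this check covers the on-disk setting that takes effect at the next boot.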

1.3.2 Set the hostname on every node

#Use the matching name on each node: ceph131, ceph132, ceph133

hostnamectl set-hostname ceph131

su -

1.3.3 Configure the IP address of each NIC (substitute your own NIC names and IPs)

#vim /etc/sysconfig/network-scripts/ifcfg-eth0

NetName=eth0

rm -f /etc/sysconfig/network-scripts/ifcfg-$NetName

nmcli con add con-name $NetName ifname $NetName autoconnect yes type ethernet \

ip4 172.16.1.131/24 ipv4.dns "114.114.114.114" ipv4.gateway "172.16.1.254"

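#Restart the network service after the change

systemctl restart network

#(Optional) To pin the default route, add the following setting to the config file of the relevant NIC, e.g.: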

#vim /etc/sysconfig/network-scripts/ifcfg-eth0

IPV4_ROUTE_METRIC=0

1.3.4 Add the Ceph nodes to /etc/hosts

#vim /etc/hosts

#[ceph14]

172.16.2.131 ceph131

172.16.2.132 ceph132

172.16.2.133 ceph133
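Since the same three entries have to exist on every node, they can be generated instead of typed by hand. The following is a small sketch under the naming scheme used in this walkthrough (host `cephNNN` has the address `<prefix>.NNN`); the function name `gen_hosts_entries` is an assumption made for illustration.

```shell
# Print one /etc/hosts-style line per node: "<prefix>.<id> ceph<id>".
# $1 = network prefix, remaining arguments = node ids.
# gen_hosts_entries is a hypothetical helper name.
gen_hosts_entries() (
    prefix=$1
    shift
    for id in "$@"; do
        printf '%s.%s ceph%s\n' "$prefix" "$id" "$id"
    done
)

gen_hosts_entries 172.16.2 131 132 133
# prints:
# 172.16.2.131 ceph131
# 172.16.2.132 ceph132
# 172.16.2.133 ceph133
```

To apply it, the output could be appended with `gen_hosts_entries 172.16.2 131 132 133 | sudo tee -a /etc/hosts` on each node.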

1.3.5 Add the Ceph Nautilus repository

#Switch the system yum repos to the Aliyun mirror and rebuild the yum cache

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

yum clean all && yum makecache

#Add the Nautilus repo

cat << EOM > /etc/yum.repos.d/ceph.repo

[ceph-noarch]

name=Ceph noarch packages

baseurl=https://download.ceph.com/rpm-nautilus/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

EOM

#Update the installed packages

yum update -y

1.3.6 Time synchronization

#I personally prefer to sync time the following way

ntpdate ntp3.aliyun.com

echo "*/3 * * * * ntpdate ntp3.aliyun.com &> /dev/null" > /tmp/crontab

crontab /tmp/crontab
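Note that `crontab /tmp/crontab` replaces the user's whole crontab with that single line. Where other jobs already exist, a merge step can keep them. The sketch below is one way to do that; `merge_ntp_cron` is a made-up name, and the redirection is written as `> /dev/null 2>&1` (equivalent in effect to the `&>` used above, but also understood by shells other than bash).

```shell
# Read an existing crontab on stdin and print it with exactly one ntpdate job.
# merge_ntp_cron is a hypothetical helper name.
merge_ntp_cron() {
    # Drop any earlier ntpdate line; grep exits non-zero when nothing is left,
    # which is fine here, hence "|| true".
    grep -v 'ntpdate' || true
    # Append the job from this section (every 3 minutes).
    echo '*/3 * * * * ntpdate ntp3.aliyun.com > /dev/null 2>&1'
}

# Usage on a node (keeps all other jobs, safe to run repeatedly):
#   crontab -l 2>/dev/null | merge_ntp_cron | crontab -
```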

1.3.7 (Optional) Install basic utilities

yum install net-tools wget vim bash-completion lrzsz unzip zip -y

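2 Installing and configuring Ceph

2.1 Deploying with the ceph-deploy tool

#From version 15 (Octopus) on, deployment with the cephadm tool is supported; ceph-deploy is supported up to and including version 14 (Nautilus)

#Install ceph-deploy. ceph131 is used as the admin node here, so ceph-deploy is installed on that machine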

yum install ceph-deploy -y

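#The version installed here is ceph-deploy-2.0.1-0.noarch

#ceph-deploy must log in to the Ceph nodes as a user with passwordless sudo, because it has to install software and write configuration files without being prompted for a password

#On every Ceph node, create a user for ceph-deploy; the password is ceph.123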

useradd -d /home/cephdeploy -m cephdeploy

passwd cephdeploy

usermod -G root cephdeploy

#Make sure the user added on each Ceph node has sudo privileges.

echo "cephdeploy ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephdeploy

chmod 0440 /etc/sudoers.d/cephdeploy

#Log in as cephdeploy and set up passwordless SSH. Run this on ceph131 only and press Enter three times to accept the defaults.

[cephdeploy@ceph131 ~]$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:CsvXYKm8mRzasMFwgWVLx5LvvfnPrRc5S1wSb6kPytM root@ceph131

The key's randomart image is:

+---[RSA 2048]----+

| +o. |

| =oo. . |

|. oo o . |

| .. . . = |

|. ....+ S . * |

| + o.=.+ O |

| + * oo.. + * |

| B *o .+.E . |

| o * ...++. |

+----[SHA256]-----+

#Copy the key to every Ceph node

ssh-copy-id cephdeploy@ceph131

ssh-copy-id cephdeploy@ceph132

ssh-copy-id cephdeploy@ceph133

#Verify: if no password is requested, it works

[cephdeploy@ceph131 ~]$ ssh 'cephdeploy@ceph133'

Last failed login: Thu Jul 2 11:18:06 +08 2020 from 172.16.1.131 on ssh:notty

There were 2 failed login attempts since the last successful login.

Last login: Thu Jul 2 10:32:51 2020 from 172.16.1.131

[cephdeploy@ceph133 ~]$ exit

logout

Connection to ceph133 closed.

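2.2 Creating and configuring the Ceph cluster

2.2.1 Create the Ceph configuration directory and the cluster

#Create a cluster directory to hold the configuration files and keys that ceph-deploy generates for the cluster.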

su cephdeploy

mkdir ~/cephcluster && cd ~/cephcluster

[cephdeploy@ceph131 cephcluster]$ pwd

/home/cephdeploy/cephcluster

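#Notes

#1 Do not invoke ceph-deploy with sudo, and do not run it as root if you are logged in as a different user, because it will not issue the sudo commands needed on the remote hosts.

#2 ceph-deploy writes its files to the current directory, so always make sure you are in this directory when you run ceph-deploy.

#Appendix: cleaning up the nodes

#If you run into trouble at any point and want to start over, run the following to purge the Ceph packages and erase all of their data and configuration: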

ceph-deploy purge ceph131 ceph132 ceph133

ceph-deploy purgedata ceph131 ceph132 ceph133

ceph-deploy forgetkeys

rm ceph.*

#Create the cluster from inside the cephcluster directory

[cephdeploy@ceph131 cephcluster]$ ceph-deploy new ceph131 ceph132 ceph133

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephdeploy/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy new ceph131 ceph132 ceph133

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] func : <function new at 0x7f9f54674e60>

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f9f541fa9e0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : True

[ceph_deploy.cli][INFO ] mon : ['ceph131', 'ceph132', 'ceph133']

[ceph_deploy.cli][INFO ] public_network : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph131][DEBUG ] connection detected need for sudo

[ceph131][DEBUG ] connected to host: ceph131

[ceph131][DEBUG ] detect platform information from remote host

[ceph131][DEBUG ] detect machine type

[ceph131][DEBUG ] find the location of an executable

[ceph131][INFO ] Running command: sudo /usr/sbin/ip link show

[ceph131][INFO ] Running command: sudo /usr/sbin/ip addr show

[ceph131][DEBUG ] IP addresses found: [u'172.16.1.131', u'172.16.2.131', u'172.16.3.131']

[ceph_deploy.new][DEBUG ] Resolving host ceph131

[ceph_deploy.new][DEBUG ] Monitor ceph131 at 172.16.1.131

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph132][DEBUG ] connected to host: ceph131

[ceph132][INFO ] Running command: ssh -CT -o BatchMode=yes ceph132

[ceph132][DEBUG ] connection detected need for sudo

[ceph132][DEBUG ] connected to host: ceph132

[ceph132][DEBUG ] detect platform information from remote host

[ceph132][DEBUG ] detect machine type

[ceph132][DEBUG ] find the location of an executable

[ceph132][INFO ] Running command: sudo /usr/sbin/ip link show

[ceph132][INFO ] Running command: sudo /usr/sbin/ip addr show

[ceph132][DEBUG ] IP addresses found: [u'172.16.2.132', u'172.16.1.132', u'172.16.3.132']

[ceph_deploy.new][DEBUG ] Resolving host ceph132

[ceph_deploy.new][DEBUG ] Monitor ceph132 at 172.16.1.132

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph133][DEBUG ] connected to host: ceph131

[ceph133][INFO ] Running command: ssh -CT -o BatchMode=yes ceph133

[ceph133][DEBUG ] connection detected need for sudo

[ceph133][DEBUG ] connected to host: ceph133

[ceph133][DEBUG ] detect platform information from remote host

[ceph133][DEBUG ] detect machine type

[ceph133][DEBUG ] find the location of an executable

[ceph133][INFO ] Running command: sudo /usr/sbin/ip link show

[ceph133][INFO ] Running command: sudo /usr/sbin/ip addr show

[ceph133][DEBUG ] IP addresses found: [u'172.16.2.133', u'172.16.1.133', u'172.16.3.133']

[ceph_deploy.new][DEBUG ] Resolving host ceph133

[ceph_deploy.new][DEBUG ] Monitor ceph133 at 172.16.1.133

[ceph_deploy.new][DEBUG ] Monitor initial members are ['ceph131', 'ceph132', 'ceph133']

[ceph_deploy.new][DEBUG ] Monitor addrs are ['172.16.1.131', '172.16.1.132', '172.16.1.133']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...

2.2.2 Edit the Ceph configuration file

#Define the public and cluster networks

#vim ~/cephcluster/ceph.conf

[global]

fsid = 76235629-6feb-4f0c-a106-4be33d485535

mon_initial_members = ceph131, ceph132, ceph133

mon_host = 172.16.1.131,172.16.1.132,172.16.1.133

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public_network = 172.16.1.0/24

cluster_network = 172.16.2.0/24

#Number of replicas

osd_pool_default_size = 3

#Minimum number of replicas

osd_pool_default_min_size = 2

#Allowed clock drift between monitors: 0.5s

mon_clock_drift_allowed = .50
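Because a wrong `public_network` or `mon_host` is a common reason for monitors failing to form a quorum later, it can help to read the values back before deploying. The helper below is a rough sketch with a made-up name, `conf_get`; it matches the key exactly as written, whereas Ceph itself treats spaces and underscores in option names as interchangeable.

```shell
# Print the value of KEY from a ceph.conf-style file (first match wins).
# $1 = file, $2 = key exactly as spelled in the file.
# conf_get is a hypothetical helper name, not a Ceph tool.
conf_get() {
    # Strip "key = " including optional surrounding whitespace, print the rest.
    sed -n "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*//p" "$1" | head -n 1
}

# Usage from the cluster directory:
#   conf_get ceph.conf public_network
#   conf_get ceph.conf mon_host
```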

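2.2.3 Install the base packages on every node

#Install the Ceph packages from the specified mirror; this must be run from inside the cluster directory

#The run is successful if the output contains no errors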

ceph-deploy install --repo-url https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-nautilus/el7/ --gpg-url https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc ceph131 ceph132 ceph133

2.2.4 Deploy the initial monitors and generate the keys

#Initialize the monitors; the run should finish without errors

ceph-deploy mon create-initial

#The directory should now contain the following additional keys

[cephdeploy@ceph131 cephcluster]$ ll

total 220

-rw------- 1 cephdeploy cephdeploy 113 Jul 2 14:16 ceph.bootstrap-mds.keyring

-rw------- 1 cephdeploy cephdeploy 113 Jul 2 14:16 ceph.bootstrap-mgr.keyring

-rw------- 1 cephdeploy cephdeploy 113 Jul 2 14:16 ceph.bootstrap-osd.keyring

-rw------- 1 cephdeploy cephdeploy 113 Jul 2 14:16 ceph.bootstrap-rgw.keyring

-rw------- 1 cephdeploy cephdeploy 151 Jul 2 14:16 ceph.client.admin.keyring

-rw-rw-r-- 1 cephdeploy cephdeploy 453 Jul 2 14:05 ceph.conf

-rw-rw-r-- 1 cephdeploy cephdeploy 177385 Jul 2 14:16 ceph-deploy-ceph.log

-rw------- 1 cephdeploy cephdeploy 73 Jul 2 14:03 ceph.mon.keyring

#Use ceph-deploy to copy the configuration file and the admin key to the admin node and the Ceph nodes

[cephdeploy@ceph131 cephcluster]$ ceph-deploy admin ceph131 ceph132 ceph133

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephdeploy/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy admin ceph131 ceph132 ceph133

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7ff46c014638>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['ceph131', 'ceph132', 'ceph133']

[ceph_deploy.cli][INFO ] func : <function admin at 0x7ff46c8b52a8>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph131

[ceph131][DEBUG ] connection detected need for sudo

[ceph131][DEBUG ] connected to host: ceph131

[ceph131][DEBUG ] detect platform information from remote host

[ceph131][DEBUG ] detect machine type

[ceph131][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph132

[ceph132][DEBUG ] connection detected need for sudo

[ceph132][DEBUG ] connected to host: ceph132

[ceph132][DEBUG ] detect platform information from remote host

[ceph132][DEBUG ] detect machine type

[ceph132][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph133

[ceph133][DEBUG ] connection detected need for sudo

[ceph133][DEBUG ] connected to host: ceph133

[ceph133][DEBUG ] detect platform information from remote host

[ceph133][DEBUG ] detect machine type

[ceph133][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

2.2.5 Deploy the mgr service

#Create the mgr daemons; the run should finish without errors

ceph-deploy mgr create ceph131 ceph132 ceph133

2.2.6 Add OSDs

#Create the OSDs; the run should finish without errors

ceph-deploy osd create --data /dev/sdb ceph131

ceph-deploy osd create --data /dev/sdb ceph132

ceph-deploy osd create --data /dev/sdb ceph133

2.2.7 Verify the cluster state

[root@ceph132 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.09357 root default

-3 0.03119 host ceph131

0 hdd 0.03119 osd.0 up 1.00000 1.00000

-5 0.03119 host ceph132

1 hdd 0.03119 osd.1 up 1.00000 1.00000

-7 0.03119 host ceph133

2 hdd 0.03119 osd.2 up 1.00000 1.00000
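For a scripted check, the number of OSDs reported as `up` can be counted from this output. The snippet is a sketch that relies only on the column layout shown above (an `osd.N` name immediately followed by its status); `count_up_osds` is a hypothetical name.

```shell
# Count OSDs whose status column is "up" in `ceph osd tree` output read from stdin.
# count_up_osds is a hypothetical helper name.
count_up_osds() {
    awk '{
        # Look for a field like "osd.0" whose next field is the status "up".
        for (i = 1; i < NF; i++)
            if ($i ~ /^osd\.[0-9]+$/ && $(i + 1) == "up") n++
    }
    END { print n + 0 }'
}

# Usage on a node with the admin keyring:
#   ceph osd tree | count_up_osds
```

With the cluster in this section the expected count is 3, one OSD per node.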

3 Extending the cluster with more services - enable as needed

#Every ceph-deploy operation must be run as the cephdeploy user from inside the configuration directory!

[cephdeploy@ceph131 cephcluster]$ pwd

/home/cephdeploy/cephcluster

3.1 Add a metadata server (mds)

ceph-deploy mds create ceph132

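3.2 Add monitors (mon)

#Add the monitors one host at a time

ceph-deploy mon add ceph132

ceph-deploy mon add ceph133

#Once you add new Ceph monitors, Ceph starts synchronizing them and forms a quorum. You can check the quorum status with: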

ceph quorum_status --format json-pretty

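3.3 Add more manager daemons (mgr)

#Ceph Manager daemons run in active/standby mode. Deploying additional manager daemons ensures that if one daemon or host fails, another can take over without interrupting service.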

ceph-deploy mgr create ceph132 ceph133

#Verify

ceph -s

3.4 Add an object storage gateway (rgw)

ceph-deploy rgw create ceph131

#By default the RGW instance listens on port 7480. This can be changed by editing ceph.conf on the node running RGW, as follows:

[client]

rgw frontends = civetweb port=80

#Restart the service after changing the port

systemctl restart ceph-radosgw.target

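4 Storage deployment

4.1 Enabling CephFS

4.1.1 Make sure at least one node runs the mds service. The PG count follows a formula; the calculator on the official site can work it out.

Estimating the PG count. For a single pool in the cluster: PG count = (number of OSDs * 100) / max replica count / number of pools (the result must be rounded to the nearest power of 2)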
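The estimate above can be written as a few lines of integer arithmetic. This is a minimal sketch of that formula only, with a made-up function name `pg_count`; ties between two powers of 2 are rounded up here, which is a choice of this sketch. The commands in this section use a smaller value (16) per pool.

```shell
# Estimate the PG count for one pool:
#   (OSDs * 100) / replicas / pools, rounded to the nearest power of 2.
# $1 = number of OSDs, $2 = max replica count, $3 = number of pools.
# pg_count is a hypothetical helper name.
pg_count() (
    raw=$(( $1 * 100 / $2 / $3 ))
    [ "$raw" -lt 1 ] && raw=1
    # Find the largest power of 2 that does not exceed raw.
    low=1
    while [ $(( low * 2 )) -le "$raw" ]; do
        low=$(( low * 2 ))
    done
    high=$(( low * 2 ))
    # Pick whichever power of 2 is closer; a tie goes to the higher one.
    if [ $(( raw - low )) -lt $(( high - raw )) ]; then
        echo "$low"
    else
        echo "$high"
    fi
)

pg_count 3 3 2   # 3 OSDs, 3 replicas, 2 pools: raw value 50, prints 64
```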

#Run the commands on any one node of the Ceph cluster; ceph132 is used here

[root@ceph132 ~]# ceph osd pool create cephfs_data 16

pool 'cephfs_data' created

[root@ceph132 ~]# ceph osd pool create cephfs_metadata 16

pool 'cephfs_metadata' created

[root@ceph132 ~]# ceph fs new cephfs_storage cephfs_metadata cephfs_data

new fs with metadata pool 2 and data pool 1

[root@ceph132 ~]# ceph df

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 96 GiB 93 GiB 11 MiB 3.0 GiB 3.14

TOTAL 96 GiB 93 GiB 11 MiB 3.0 GiB 3.14

POOLS:

POOL ID STORED OBJECTS USED %USED MAX AVAIL

cephfs_data 1 0 B 0 0 B 0 29 GiB

cephfs_metadata 2 2.2 KiB 22 1.5 MiB 0 29 GiB

4.1.2 Mount CephFS

#Create the secret file on the client; it contains only the key value from the keyring

[root@ceph133 ~]# cat /etc/ceph/ceph.client.admin.keyring

[client.admin]

key = AQC5e/1eXm9WExAAmlD9aZoc2dZO6jbU8UXSqg==

caps mds = "allow *"

caps mgr = "allow *"

caps mon = "allow *"

caps osd = "allow *"

[root@ceph133 ~]# vim admin.secret

[root@ceph133 ~]# ll

total 8

-rw-r--r-- 1 root root 41 Jul 2 15:17 admin.secret
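Instead of copying the key by hand in an editor, it can be extracted from the keyring. The following is a sketch that assumes the keyring layout shown above (a line of the form `key = <value>`); the function name `keyring_key` is made up for illustration.

```shell
# Print the first "key = <value>" value found in a Ceph keyring file.
# $1 = path to the keyring.
# keyring_key is a hypothetical helper name.
keyring_key() {
    # Fields on the key line are: "key", "=", "<value>".
    awk '$1 == "key" && $2 == "=" { print $3; exit }' "$1"
}

# Usage on the client:
#   keyring_key /etc/ceph/ceph.client.admin.keyring > admin.secret
#   chmod 600 admin.secret   # the file holds a credential, so restrict access
```

On a node with working admin access, `ceph auth get-key client.admin` prints the same value.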

#Mount the CephFS directory and verify

[root@ceph133 ~]# mkdir /mnt/cephfs_storage

[root@ceph133 ~]# mount -t ceph 172.16.1.133:6789:/ /mnt/cephfs_storage -o name=admin,secretfile=admin.secret

[root@ceph133 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 983M 0 983M 0% /dev

tmpfs tmpfs 995M 0 995M 0% /dev/shm

tmpfs tmpfs 995M 8.6M 987M 1% /run

tmpfs tmpfs 995M 0 995M 0% /sys/fs/cgroup

/dev/sda1 xfs 20G 2.2G 18G 11% /

tmpfs tmpfs 199M 0 199M 0% /run/user/0

tmpfs tmpfs 995M 52K 995M 1% /var/lib/ceph/osd/ceph-2

172.16.1.133:6789:/ ceph 30G 0 30G 0% /mnt/cephfs_storage

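4.2 Enabling block storage

4.2.1 Run the commands on any one node of the Ceph cluster; ceph132 is used here

#Create the rbd pool

Estimating the PG count. For a single pool in the cluster: PG count = (number of OSDs * 100) / max replica count / number of pools (the result must be rounded to the nearest power of 2)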

[root@ceph132 ~]# ceph osd pool create rbd_storage 16 16 replicated

pool 'rbd_storage' created

#Create a block device image

[root@ceph132 ~]# rbd create --size 1024 rbd_image -p rbd_storage

[root@ceph132 ~]# rbd ls rbd_storage

rbd_image

#Command to delete it

rbd rm rbd_storage/rbd_image

4.2.2 Mount the rbd block device

#Map the block device into the kernel

[root@ceph133 ~]# rbd map rbd_storage/rbd_image

/dev/rbd0

[root@ceph133 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

rbd0 252:0 0 1G 0 disk

sdb 8:16 0 32G 0 disk

└─ceph--376ebd83--adf0--4ee1--b7e4--b4b79bae048e-osd--block--4b0444fa--535e--40c7--b55a--167ab21dbf9b 253:0 0 32G 0 lvm

sr0 11:0 1 4M 0 rom

sda 8:0 0 20G 0 disk

└─sda1 8:1 0 20G 0 part /

#Format the rbd device

[root@ceph133 ~]# mkfs.ext4 -m0 /dev/rbd/rbd_storage/rbd_image

mke2fs 1.42.9 (28-Dec-2013)

Discarding device blocks: done

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=16 blocks, Stripe width=16 blocks

65536 inodes, 262144 blocks

0 blocks (0.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=268435456

8 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376

Allocating group tables: done

Writing inode tables: done

Creating journal (8192 blocks): done

Writing superblocks and filesystem accounting information: done

#Mount the rbd device

[root@ceph133 ~]# mkdir /mnt/rbd_storage

[root@ceph133 ~]# mount /dev/rbd/rbd_storage/rbd_image /mnt/rbd_storage

[root@ceph133 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 983M 0 983M 0% /dev

tmpfs tmpfs 995M 0 995M 0% /dev/shm

tmpfs tmpfs 995M 8.6M 987M 1% /run

tmpfs tmpfs 995M 0 995M 0% /sys/fs/cgroup

/dev/sda1 xfs 20G 2.2G 18G 11% /

tmpfs tmpfs 199M 0 199M 0% /run/user/0

tmpfs tmpfs 995M 52K 995M 1% /var/lib/ceph/osd/ceph-2

172.16.1.133:6789:/ ceph 30G 0 30G 0% /mnt/cephfs_storage

/dev/rbd0 ext4 976M 2.6M 958M 1% /mnt/rbd_storage

#Unmap the device from the kernel (unmount the filesystem first)

rbd unmap /dev/rbd0

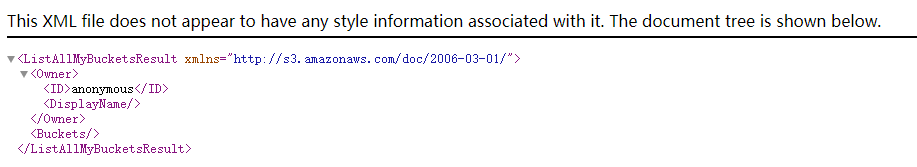

4.3 Enabling RGW object storage

4.3.1 Make sure at least one node runs the rgw service

#See 3.4 for enabling rgw

#Open http://172.16.1.131:7480/ in a browser; if the page loads, rgw is up

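5 Enabling the Ceph dashboard

5.1 Enable the dashboard

#The Ceph dashboard is a built-in web-based management and monitoring application for administering the cluster.

#The nodes do not have ceph-mgr-dashboard installed by default, so install it first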

yum install ceph-mgr-dashboard -y

#Enable the dashboard from any node; the command prints nothing

[root@ceph132 ~]# ceph mgr module enable dashboard

#Set up the certificate used for login (self-signed)

[root@ceph132 ~]# ceph dashboard create-self-signed-cert

Self-signed certificate created

#Create the login account

[root@ceph132 ~]# ceph dashboard ac-user-create admin admin.123 administrator

{"username": "admin", "lastUpdate": 1593679011, "name": null, "roles": ["administrator"], "password": "$2b$12$kQYtMXun1jKdTDKwjfuNj.WYyJcr3vSHLTMWfXIi.wrKkFRCmmC1.", "email": null}

#Test the login in a browser: https://172.16.1.131:8443/ username: admin password: admin.123

[root@ceph132 ~]# ceph mgr services

{

"dashboard": "https://ceph131:8443/"

}

5.2 Enable RGW in the dashboard

#Create the rgw user

[root@ceph131 ~]# radosgw-admin user create --uid=rgwadmin --display-name=rgwadmin --system

{

"user_id": "rgwadmin",

"display_name": "rgwadmin",

"email": "",

"suspended": 0,

"max_buckets": 1000,

"subusers": [],

"keys": [

{

"user": "rgwadmin",

"access_key": "VHK8BZYA3F5BDWMJFUER",

"secret_key": "WVBdt66ZbmGe0l6Wu3LoPKM1GlzF6V35JCpNKPJw"

}

],

"swift_keys": [],

"caps": [],

"op_mask": "read, write, delete",

"system": "true",

"default_placement": "",

"default_storage_class": "",

"placement_tags": [],

"bucket_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"user_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"temp_url_keys": [],

"type": "rgw",

"mfa_ids": []

}

#Set the credentials, using the keys generated when the user was created

[root@ceph131 ~]# ceph dashboard set-rgw-api-access-key VHK8BZYA3F5BDWMJFUER

Option RGW_API_ACCESS_KEY updated

[root@ceph131 ~]# ceph dashboard set-rgw-api-secret-key WVBdt66ZbmGe0l6Wu3LoPKM1GlzF6V35JCpNKPJw

Option RGW_API_SECRET_KEY updated
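The two keys can also be pulled out of the `radosgw-admin` JSON instead of being copied by hand. This is a rough sketch using plain `sed`, assuming the pretty-printed layout shown above (one `"name": "value"` pair per line); `json_string_field` is a made-up helper, and a real JSON parser would be more robust.

```shell
# Print the first string value of a given field from pretty-printed JSON on stdin.
# $1 = field name, e.g. access_key.
# json_string_field is a hypothetical helper name.
json_string_field() {
    sed -n "s/.*\"$1\": *\"\([^\"]*\)\".*/\1/p" | head -n 1
}

# Usage, reusing commands from this section:
#   info=$(radosgw-admin user info --uid=rgwadmin)
#   ceph dashboard set-rgw-api-access-key "$(printf '%s\n' "$info" | json_string_field access_key)"
#   ceph dashboard set-rgw-api-secret-key "$(printf '%s\n' "$info" | json_string_field secret_key)"
```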

#Disable SSL certificate verification

[root@ceph131 ~]# ceph dashboard set-rgw-api-ssl-verify False

Option RGW_API_SSL_VERIFY updated

#Point the dashboard at the rgw service

[root@ceph131 ~]# ceph dashboard set-rgw-api-host 172.16.1.131

Option RGW_API_HOST updated

[root@ceph131 ~]# ceph dashboard set-rgw-api-port 7480

Option RGW_API_PORT updated

[root@ceph131 ~]# ceph dashboard set-rgw-api-scheme http

Option RGW_API_SCHEME updated

[root@ceph131 ~]# ceph dashboard set-rgw-api-admin-resource admin

Option RGW_API_ADMIN_RESOURCE updated

[root@ceph131 ~]# ceph dashboard set-rgw-api-user-id rgwadmin

Option RGW_API_USER_ID updated

[root@ceph131 ~]# systemctl restart ceph-radosgw.target

X. Problems encountered during deployment

eg1.[root@ceph131 cephcluster]# ceph-deploy mon create-initial

[ceph131][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph131.asok mon_status

[ceph_deploy.mon][WARNIN] mon.ceph131 monitor is not yet in quorum, tries left: 5

[ceph_deploy.mon][WARNIN] waiting 5 seconds before retrying

[ceph131][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph131.asok mon_status

[ceph_deploy.mon][WARNIN] mon.ceph131 monitor is not yet in quorum, tries left: 4

[ceph_deploy.mon][WARNIN] waiting 10 seconds before retrying

[ceph131][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph131.asok mon_status

[ceph_deploy.mon][WARNIN] mon.ceph131 monitor is not yet in quorum, tries left: 3

[ceph_deploy.mon][WARNIN] waiting 10 seconds before retrying

[ceph131][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph131.asok mon_status

[ceph_deploy.mon][WARNIN] mon.ceph131 monitor is not yet in quorum, tries left: 2

[ceph_deploy.mon][WARNIN] waiting 15 seconds before retrying

[ceph131][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph131.asok mon_status

[ceph_deploy.mon][WARNIN] mon.ceph131 monitor is not yet in quorum, tries left: 1

[ceph_deploy.mon][WARNIN] waiting 20 seconds before retrying

[ceph_deploy.mon][ERROR ] Some monitors have still not reached quorum:

[ceph_deploy.mon][ERROR ] ceph131

Solution:

1 Check ceph.conf: are public_network and mon_host set correctly?

2 Check whether /etc/hosts contains IPv6 entries for the nodes; if so, remove them and redeploy
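The second check can be scripted. The sketch below lists the lines of a hosts file that map a given hostname to an IPv6 address (detected simply by a colon in the address field); the function name `hosts_ipv6_entries` is an assumption made for illustration.

```shell
# Print hosts-file lines that map HOST to an IPv6 address.
# $1 = hosts file, $2 = hostname to look for.
# hosts_ipv6_entries is a hypothetical helper name.
hosts_ipv6_entries() {
    awk -v host="$2" '
        # Skip comments; an address containing ":" is treated as IPv6.
        $1 !~ /^#/ && $1 ~ /:/ {
            for (i = 2; i <= NF; i++)
                if ($i == host) { print; break }
        }' "$1"
}

# Usage on a node:
#   hosts_ipv6_entries /etc/hosts ceph131
```

An empty result means the hostname has no IPv6 mapping in that file.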

eg2.[ceph_deploy][ERROR ] IOError: [Errno 13] Permission denied: '/root/cephcluster/ceph-deploy-ceph.log'

Solution:

[root@ceph131 cephcluster]# echo "cephdeploy ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephdeploy

cephdeploy ALL = (root) NOPASSWD:ALL

[root@ceph131 cephcluster]# chmod 0440 /etc/sudoers.d/cephdeploy

[root@ceph131 cephcluster]# cat /etc/sudoers.d/cephdeploy

cephdeploy ALL = (root) NOPASSWD:ALL

[root@ceph131 cephcluster]# usermod -G root cephdeploy

eg3.[root@ceph131 ~]# ceph -s

cluster:

id: 76235629-6feb-4f0c-a106-4be33d485535

health: HEALTH_WARN

clock skew detected on mon.ceph133

Cause: clock skew between the monitors

Solution:

1 On the deploy node, edit the ceph.conf file in the deployment directory

[cephdeploy@ceph131 cephcluster]$ pwd

/home/cephdeploy/cephcluster

2 Edit ceph.conf, add the following settings, then save and exit

#Clock drift settings

mon clock drift allowed = 2

mon clock drift warn backoff = 30

3 Push the configuration out again

[cephdeploy@ceph131 cephcluster]$ ceph-deploy --overwrite-conf config push ceph{131..133}

4 Restart the mon on the affected node

[root@ceph133 ~]# systemctl restart ceph-mon@ceph133.service

eg4.[root@ceph132 ~]# ceph mgr module enable dashboard

Error ENOENT: all mgr daemons do not support module 'dashboard', pass --force to force enablement

Cause: ceph-mgr-dashboard is not installed on the node; install it on the mgr nodes.

yum install ceph-mgr-dashboard

eg5.[root@ceph133 ~]# ceph -s

cluster:

id: 76235629-6feb-4f0c-a106-4be33d485535

health: HEALTH_WARN

application not enabled on 1 pool(s)

[root@ceph133 ~]# ceph health detail

HEALTH_WARN application not enabled on 1 pool(s)

POOL_APP_NOT_ENABLED application not enabled on 1 pool(s)

application not enabled on pool 'rbd_storage'

use 'ceph osd pool application enable <pool-name> <app-name>', where <app-name> is 'cephfs', 'rbd', 'rgw', or freeform for custom applications.

Solution:

ceph osd pool application enable rbd_storage rbd