Kafka Network Layer Overview

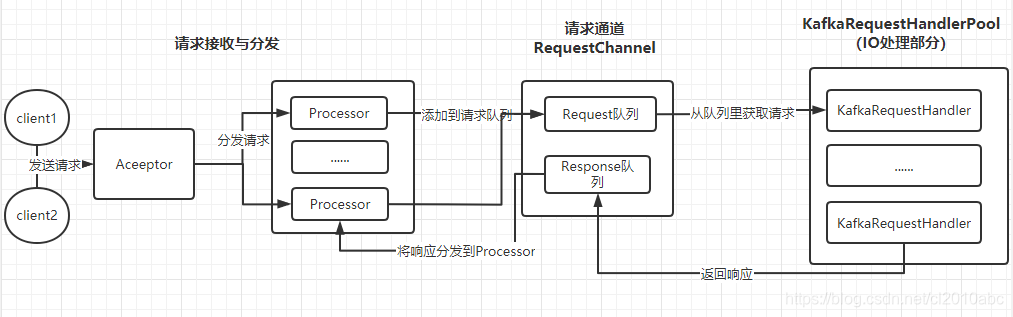

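Kafka's network layer is built on the Reactor pattern, an event-driven model, as shown in the figure above.

The main workflow is as follows:

- The Acceptor accepts new connections from clients or other brokers and distributes them to the Processors in round-robin order.

- A Processor reads requests from its connections and puts them into the request queue.

- KafkaRequestHandler threads (the IO thread pool) take requests from the request queue, process each one according to its type, and finally send the response to RequestChannel.

- RequestChannel routes each response to the Processor that owns the connection, and that Processor writes the response back to the client.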
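The flow above can be condensed into a single-threaded simulation. This is a minimal sketch, not Kafka code. `Request`, `Response`, `MiniProcessor`, `MiniRequestChannel` and `simulate` are all hypothetical names. There is no real networking, and one loop plays the role of the IO threads. The sketch shows the key routing idea: every request remembers which Processor read it, so its response can be handed back to that same Processor.

```scala
import java.util.concurrent.{ArrayBlockingQueue, LinkedBlockingDeque}

// Hypothetical, simplified stand-ins for Kafka's classes (not Kafka's API)
final case class Request(processorId: Int, connectionId: String, payload: String)
final case class Response(processorId: Int, connectionId: String, payload: String)

final class MiniProcessor(val id: Int) {
  // Responses routed back to this processor; a real Processor would write them to sockets
  val responseQueue = new LinkedBlockingDeque[Response]()
}

final class MiniRequestChannel(queueSize: Int, processors: Map[Int, MiniProcessor]) {
  // One request queue shared by every processor and every handler
  private val requestQueue = new ArrayBlockingQueue[Request](queueSize)
  def sendRequest(r: Request): Unit = requestQueue.put(r)
  // Non-blocking take; returns null when the queue is empty
  def receiveRequest(): Request = requestQueue.poll()
  // Route the response to the processor that read the original request
  def sendResponse(r: Response): Unit =
    processors.get(r.processorId).foreach(p => p.responseQueue.add(r))
}

def simulate(numProcessors: Int, connections: Seq[String]): Map[Int, List[String]] = {
  val processors = (0 until numProcessors).map(i => i -> new MiniProcessor(i)).toMap
  val channel = new MiniRequestChannel(500, processors)
  // "Acceptor": assign each new connection to a processor, round-robin
  val owner = connections.zipWithIndex.map { case (c, i) => c -> (i % numProcessors) }
  // "Processors": each reads one request per connection and enqueues it
  owner.foreach { case (c, p) => channel.sendRequest(Request(p, c, s"ping from $c")) }
  // "IO thread": drain the shared queue and answer every request
  var req = channel.receiveRequest()
  while (req != null) {
    channel.sendResponse(Response(req.processorId, req.connectionId, s"pong to ${req.connectionId}"))
    req = channel.receiveRequest()
  }
  // What each processor would now write back to its clients
  processors.map { case (id, p) =>
    id -> Iterator.continually(p.responseQueue.poll()).takeWhile(_ != null).map(_.payload).toList
  }
}
```

For example, with two Processors and three connections, `c0` and `c2` land on Processor 0, so both of their responses come back through Processor 0.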

The following sections analyze, at the source-code level, how each component of the Reactor model is implemented.

Acceptor

Acceptor extends AbstractServerThread, and its main logic lives in the run method.

```scala
private[kafka] class Acceptor(val endPoint: EndPoint,
                              val sendBufferSize: Int,
                              val recvBufferSize: Int,
                              brokerId: Int,
                              connectionQuotas: ConnectionQuotas,
                              metricPrefix: String) extends AbstractServerThread(connectionQuotas) with KafkaMetricsGroup {

  // Create the NIO selector
  private val nioSelector = NSelector.open()
  // Open the ServerSocketChannel on the listener's host and port
  val serverChannel = openServerSocket(endPoint.host, endPoint.port)
  // The Processors this Acceptor hands new connections to
  private val processors = new ArrayBuffer[Processor]()

  def run() {
    // Register interest in OP_ACCEPT events
    serverChannel.register(nioSelector, SelectionKey.OP_ACCEPT)
    startupComplete() // Signal that the Acceptor thread has finished starting up
    try {
      var currentProcessorIndex = 0
      while (isRunning) {
        try {
          val ready = nioSelector.select(500)
          if (ready > 0) {
            val keys = nioSelector.selectedKeys()
            val iter = keys.iterator()
            while (iter.hasNext && isRunning) {
              try {
                val key = iter.next
                iter.remove()
                if (key.isAcceptable) {
                  // Handle the OP_ACCEPT event: accept() returns the new SocketChannel
                  accept(key).foreach { socketChannel =>
                    var retriesLeft = synchronized(processors.length)
                    var processor: Processor = null
                    do {
                      retriesLeft -= 1
                      processor = synchronized {
                        // Advance currentProcessorIndex: Processors are chosen Round-Robin
                        currentProcessorIndex = currentProcessorIndex % processors.length
                        processors(currentProcessorIndex)
                      }
                      currentProcessorIndex += 1
                      // Put the SocketChannel into the Processor's new-connection queue;
                      // only the last attempt (retriesLeft == 0) may block
                    } while (!assignNewConnection(socketChannel, processor, retriesLeft == 0))
                  }
                } else
                  throw new IllegalStateException("Unrecognized key state for acceptor thread.")
              } catch {
                case e: Throwable => error("Error while accepting connection", e)
              }
            }
          }
        } catch {
          case e: ControlThrowable => throw e
          case e: Throwable => error("Error occurred", e)
        }
      }
    } finally {
      debug("Closing server socket and selector.")
      CoreUtils.swallow(serverChannel.close(), this, Level.ERROR)
      CoreUtils.swallow(nioSelector.close(), this, Level.ERROR)
      shutdownComplete()
    }
  }

  private def accept(key: SelectionKey): Option[SocketChannel] = {
    val serverSocketChannel = key.channel().asInstanceOf[ServerSocketChannel]
    val socketChannel = serverSocketChannel.accept()
    try {
      // Increment the connection count tracked by connectionQuotas
      connectionQuotas.inc(socketChannel.socket().getInetAddress)
      // Configure the SocketChannel
      socketChannel.configureBlocking(false)
      socketChannel.socket().setTcpNoDelay(true)
      socketChannel.socket().setKeepAlive(true)
      if (sendBufferSize != Selectable.USE_DEFAULT_BUFFER_SIZE)
        socketChannel.socket().setSendBufferSize(sendBufferSize)
      Some(socketChannel)
    } catch {
      case e: TooManyConnectionsException =>
        info(s"Rejected connection from ${e.ip}, address already has the configured maximum of ${e.count} connections.")
        close(socketChannel)
        None
    }
  }
}
```
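The Processor-selection loop in run() can be isolated as follows. This is a minimal sketch under simplifying assumptions. `ConnQueue` and `RoundRobinAssigner` are hypothetical names, and connections are plain strings. `ConnQueue.accept` imitates how assignNewConnection is used: it tries a non-blocking `offer` first and falls back to a blocking `put` only when `mayBlock` is true. The sketch shows why one full Processor queue does not stall the Acceptor until every Processor has been tried. It uses a `while` loop instead of `do ... while`, which Scala 3 no longer supports.

```scala
import java.util.concurrent.ArrayBlockingQueue

// Hypothetical stand-in for a Processor's bounded new-connection queue
final class ConnQueue(capacity: Int) {
  val newConnections = new ArrayBlockingQueue[String](capacity)
  // Try a non-blocking offer first; block with put() only when mayBlock is true
  def accept(conn: String, mayBlock: Boolean): Boolean =
    if (newConnections.offer(conn)) true
    else if (mayBlock) { newConnections.put(conn); true }
    else false
}

final class RoundRobinAssigner(processors: IndexedSeq[ConnQueue]) {
  private var currentIndex = 0
  // Returns the index of the processor that took the connection
  def assign(conn: String): Int = {
    var retriesLeft = processors.length
    var chosen = -1
    var assigned = false
    while (!assigned) {
      retriesLeft -= 1
      currentIndex = currentIndex % processors.length
      chosen = currentIndex
      currentIndex += 1
      // Only the last attempt is allowed to block
      assigned = processors(chosen).accept(conn, mayBlock = retriesLeft == 0)
    }
    chosen
  }
}
```

With queues of capacity 1 and 10, the third connection skips the full first queue and lands in the second one instead of blocking.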

Processor

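Like Acceptor, Processor extends AbstractServerThread. A Processor has three jobs:

- It turns data read from its sockets into Requests and puts them into the request queue.
- It sends back to clients the Responses that the IO threads place in its response queue.
- It runs the Response callbacks.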

```scala
private[kafka] class Processor(val id: Int,
                               time: Time,
                               maxRequestSize: Int,
                               requestChannel: RequestChannel,
                               connectionQuotas: ConnectionQuotas,
                               connectionsMaxIdleMs: Long,
                               failedAuthenticationDelayMs: Int,
                               listenerName: ListenerName,
                               securityProtocol: SecurityProtocol,
                               config: KafkaConfig,
                               metrics: Metrics,
                               credentialProvider: CredentialProvider,
                               memoryPool: MemoryPool,
                               logContext: LogContext,
                               connectionQueueSize: Int = ConnectionQueueSize) extends AbstractServerThread(connectionQuotas) with KafkaMetricsGroup {
  ...
  // Blocking queue of new SocketChannels handed over by the Acceptor
  private val newConnections = new ArrayBlockingQueue[SocketChannel](connectionQueueSize)
  // Responses currently being sent to clients, keyed by connection id
  private val inflightResponses = mutable.Map[String, RequestChannel.Response]()
  // Response queue: IO threads put finished responses here
  private val responseQueue = new LinkedBlockingDeque[RequestChannel.Response]()

  override def run() {
    startupComplete()
    try {
      while (isRunning) {
        try {
          configureNewConnections()  // Take channels from newConnections and register them with the selector
          processNewResponses()      // Send responses returned by the IO threads and add them to inflightResponses
          poll()                     // Poll the selector for ready I/O events on the SocketChannels
          processCompletedReceives() // Turn completed receives into Requests and put them into the request queue
          processCompletedSends()    // Handle completed sends: remove from inflightResponses and run the callbacks
          processDisconnected()      // Clean up state for connections that have been disconnected
        } catch {
          case e: Throwable => processException("Processor got uncaught exception.", e)
        }
      }
    } finally {
      debug(s"Closing selector - processor $id")
      CoreUtils.swallow(closeAll(), this, Level.ERROR)
      shutdownComplete()
    }
  }
  ...
}
```
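The bookkeeping around `inflightResponses` can be illustrated without any networking. This is a minimal sketch of a simplified model. `Resp` and `ResponseBookkeeping` are hypothetical, and a `ListBuffer` named `wire` stands in for the socket. The caller passes in the ids of completed sends and of disconnected connections; a real Processor would get these from its selector. A map keyed by connection id is enough here because Kafka handles one request per connection at a time: the channel stays muted until its response has been sent.

```scala
import java.util.concurrent.LinkedBlockingDeque
import scala.collection.mutable

// Hypothetical simplified response: target connection, body, and optional completion callback
final case class Resp(connectionId: String, body: String, onComplete: Option[String => Unit] = None)

final class ResponseBookkeeping {
  val responseQueue = new LinkedBlockingDeque[Resp]()  // filled by IO threads
  val inflightResponses = mutable.Map[String, Resp]()  // sent, but send not yet completed
  val wire = mutable.ListBuffer[String]()              // stands in for the socket

  // Like processNewResponses: start sending each queued response and remember it
  def processNewResponses(): Unit = {
    var r = responseQueue.poll()
    while (r != null) {
      wire += s"${r.connectionId}:${r.body}"
      inflightResponses(r.connectionId) = r
      r = responseQueue.poll()
    }
  }

  // Like processCompletedSends: drop finished responses and run their callbacks
  def processCompletedSends(completed: Seq[String]): Unit =
    completed.foreach { connId =>
      inflightResponses.remove(connId).foreach(r => r.onComplete.foreach(cb => cb(connId)))
    }

  // Like processDisconnected: forget responses for connections that went away
  def processDisconnected(disconnected: Seq[String]): Unit =
    disconnected.foreach(connId => inflightResponses.remove(connId))
}
```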

RequestChannel

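RequestChannel buffers Requests and Responses between the Processors and the IO threads. It holds the shared request queue and routes each Response to the response queue of the Processor that owns the connection.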

```scala
class RequestChannel(val queueSize: Int, val metricNamePrefix : String) extends KafkaMetricsGroup {
  import RequestChannel._
  val metrics = new RequestChannel.Metrics
  // Request queue shared by all Processors and IO threads (thread-safe); its capacity is queueSize
  private val requestQueue = new ArrayBlockingQueue[BaseRequest](queueSize)
  // Processors registered with this channel, keyed by processor id
  private val processors = new ConcurrentHashMap[Int, Processor]()

  // Register a Processor, plus a gauge for the size of its response queue
  def addProcessor(processor: Processor): Unit = {
    if (processors.putIfAbsent(processor.id, processor) != null)
      warn(s"Unexpected processor with processorId ${processor.id}")
    newGauge(responseQueueSizeMetricName,
      new Gauge[Int] {
        def value = processor.responseQueueSize
      },
      Map(ProcessorMetricTag -> processor.id.toString)
    )
  }

  // Unregister a Processor and its gauge
  def removeProcessor(processorId: Int): Unit = {
    processors.remove(processorId)
    removeMetric(responseQueueSizeMetricName, Map(ProcessorMetricTag -> processorId.toString))
  }

  // Called by Processors: put a request into the shared queue (blocks while the queue is full)
  def sendRequest(request: RequestChannel.Request) {
    requestQueue.put(request)
  }

  // Called by IO threads: hand a response to the Processor that owns the connection
  def sendResponse(response: RequestChannel.Response) {
    if (isTraceEnabled) {
      val requestHeader = response.request.header
      val message = response match {
        case sendResponse: SendResponse =>
          s"Sending ${requestHeader.apiKey} response to client ${requestHeader.clientId} of ${sendResponse.responseSend.size} bytes."
        case _: NoOpResponse =>
          s"Not sending ${requestHeader.apiKey} response to client ${requestHeader.clientId} as it's not required."
        case _: CloseConnectionResponse =>
          s"Closing connection for client ${requestHeader.clientId} due to error during ${requestHeader.apiKey}."
        case _: StartThrottlingResponse =>
          s"Notifying channel throttling has started for client ${requestHeader.clientId} for ${requestHeader.apiKey}"
        case _: EndThrottlingResponse =>
          s"Notifying channel throttling has ended for client ${requestHeader.clientId} for ${requestHeader.apiKey}"
      }
      trace(message)
    }
    // Look up the Processor the response belongs to
    val processor = processors.get(response.processor)
    if (processor != null) {
      // Put the response into that Processor's response queue
      processor.enqueueResponse(response)
    }
  }

  // Take a request, waiting at most timeout ms (returns null on timeout)
  def receiveRequest(timeout: Long): RequestChannel.BaseRequest =
    requestQueue.poll(timeout, TimeUnit.MILLISECONDS)

  // Take a request, blocking until one is available
  def receiveRequest(): RequestChannel.BaseRequest =
    requestQueue.take()
}
```
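The queueing behaviour of RequestChannel can be reproduced with the same `java.util.concurrent` classes. This is a minimal sketch. `Req`, `Res`, `Proc` and `SimpleRequestChannel` are hypothetical simplifications. Unlike the original, `sendResponse` here returns a `Boolean`, only so that the drop case can be observed. The sketch highlights three points from the code above:

- `put` blocks when the bounded request queue is full.
- The timed receive returns `null` when no request arrives in time.
- A response whose Processor is no longer registered is silently dropped.

```scala
import java.util.concurrent.{ArrayBlockingQueue, ConcurrentHashMap, LinkedBlockingDeque, TimeUnit}

// Hypothetical simplified types; only the queueing behaviour mirrors RequestChannel
final case class Req(processorId: Int, body: String)
final case class Res(processorId: Int, body: String)

final class Proc(val id: Int) {
  val responseQueue = new LinkedBlockingDeque[Res]()
  def enqueueResponse(r: Res): Unit = responseQueue.put(r)
}

final class SimpleRequestChannel(queueSize: Int) {
  private val requestQueue = new ArrayBlockingQueue[Req](queueSize)
  private val processors = new ConcurrentHashMap[Int, Proc]()

  def addProcessor(p: Proc): Unit = processors.putIfAbsent(p.id, p)
  def removeProcessor(id: Int): Unit = processors.remove(id)

  // put() blocks while the queue is full: back-pressure on the Processors
  def sendRequest(r: Req): Unit = requestQueue.put(r)
  // Returns null if nothing arrives within timeoutMs
  def receiveRequest(timeoutMs: Long): Req = requestQueue.poll(timeoutMs, TimeUnit.MILLISECONDS)

  // Responses for an unknown (e.g. removed) processor are dropped
  def sendResponse(r: Res): Boolean = {
    val p = processors.get(r.processorId)
    if (p != null) { p.enqueueResponse(r); true } else false
  }
}
```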

KafkaRequestHandlerPool

KafkaRequestHandlerPool is the IO thread pool, and its threads do the actual processing of Requests.

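```scala
class KafkaRequestHandlerPool(val brokerId: Int,
                              val requestChannel: RequestChannel, // the RequestChannel object
                              val apis: KafkaApis,                // KafkaApis: entry point of Kafka's upper-layer request handling
                              time: Time,
                              numThreads: Int,                    // number of IO threads
                              requestHandlerAvgIdleMetricName: String,
                              logAndThreadNamePrefix : String) extends Logging with KafkaMetricsGroup {

  private val threadPoolSize: AtomicInteger = new AtomicInteger(numThreads)
  // Meter tracking the average idle ratio of the request handler threads
  private val aggregateIdleMeter = newMeter(requestHandlerAvgIdleMetricName, "percent", TimeUnit.NANOSECONDS)

  // The IO thread Runnables, i.e. KafkaRequestHandler instances
  val runnables = new mutable.ArrayBuffer[KafkaRequestHandler](numThreads)
  for (i <- 0 until numThreads) {
    createHandler(i)
  }

  // Create an IO thread: a KafkaRequestHandler run by a daemon thread
  def createHandler(id: Int): Unit = synchronized {
    runnables += new KafkaRequestHandler(id, brokerId, aggregateIdleMeter, threadPoolSize, requestChannel, apis, time)
    KafkaThread.daemon(logAndThreadNamePrefix + "-kafka-request-handler-" + id, runnables(id)).start()
  }
}
```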

Each thread in KafkaRequestHandlerPool runs a KafkaRequestHandler, whose main logic is in the run method.

```scala
class KafkaRequestHandler(id: Int,
                          brokerId: Int,
                          val aggregateIdleMeter: Meter,
                          val totalHandlerThreads: AtomicInteger,
                          val requestChannel: RequestChannel,
                          apis: KafkaApis,
                          time: Time) extends Runnable with Logging {
  ...
  def run() {
    while (!stopped) {
      val startSelectTime = time.nanoseconds
      // Take a request, waiting at most 300 ms
      val req = requestChannel.receiveRequest(300)
      val endTime = time.nanoseconds
      val idleTime = endTime - startSelectTime
      // One meter is shared by all handler threads, so each thread's idle time is divided by the thread count
      aggregateIdleMeter.mark(idleTime / totalHandlerThreads.get)

      req match {
        case RequestChannel.ShutdownRequest => // shutdown request
          debug(s"Kafka request handler $id on broker $brokerId received shut down command")
          shutdownComplete.countDown()
          return

        case request: RequestChannel.Request => // normal request
          try {
            request.requestDequeueTimeNanos = endTime
            trace(s"Kafka request handler $id on broker $brokerId handling request $request")
            // Let KafkaApis handle the request according to its type
            apis.handle(request)
          } catch {
            case e: FatalExitError =>
              shutdownComplete.countDown()
              Exit.exit(e.statusCode)
            case e: Throwable => error("Exception when handling request", e)
          } finally {
            request.releaseBuffer()
          }

        case null => // poll timed out: continue
      }
    }
    shutdownComplete.countDown()
  }
  ...
}
```
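The handler loop, and the pool that starts it, can be mimicked with plain JVM threads. This is a sketch, not Kafka's implementation. `BaseReq`, `ShutdownReq`, `WorkReq`, `Handler` and `runPool` are hypothetical. Adding numbers to a counter stands in for `apis.handle`, and the idle-time metric is left out. The sketch keeps the three cases of the match:

- A shutdown sentinel ends the loop.
- A normal request is handled.
- `null` (the 300 ms poll timed out) simply loops again.

As when the pool shuts down, one shutdown request is sent per handler.

```scala
import java.util.concurrent.{ArrayBlockingQueue, CountDownLatch, TimeUnit}
import java.util.concurrent.atomic.AtomicInteger

sealed trait BaseReq
case object ShutdownReq extends BaseReq
final case class WorkReq(n: Int) extends BaseReq

// Hypothetical handler with the same loop shape as KafkaRequestHandler.run
final class Handler(queue: ArrayBlockingQueue[BaseReq], handled: AtomicInteger) extends Runnable {
  val shutdownComplete = new CountDownLatch(1)
  override def run(): Unit = {
    var stopped = false
    while (!stopped) {
      queue.poll(300, TimeUnit.MILLISECONDS) match {
        case ShutdownReq => stopped = true         // the real handler returns here
        case WorkReq(n)  => handled.addAndGet(n)   // stands in for apis.handle(request)
        case null        => ()                     // timed out: loop again
      }
    }
    shutdownComplete.countDown()
  }
}

def runPool(numThreads: Int, work: Seq[Int]): Int = {
  val queue = new ArrayBlockingQueue[BaseReq](500)
  val handled = new AtomicInteger(0)
  val handlers = (0 until numThreads).map(_ => new Handler(queue, handled))
  handlers.zipWithIndex.foreach { case (h, i) =>
    val t = new Thread(h, s"request-handler-$i")
    t.setDaemon(true) // like KafkaThread.daemon
    t.start()
  }
  work.foreach(n => queue.put(WorkReq(n)))
  // One shutdown request per handler, queued after all the work
  handlers.foreach(_ => queue.put(ShutdownReq))
  handlers.foreach(_.shutdownComplete.await())
  handled.get
}
```

Because the queue is FIFO and every handler must consume exactly one shutdown request before it counts down its latch, `runPool` only returns after all work items have been handled.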

KafkaRequestHandler calls KafkaApis.handle, which dispatches each Request to a handler method according to its API key.

```scala
// KafkaApis.scala
def handle(request: RequestChannel.Request) {
  try {
    trace(s"Handling request:${request.requestDesc(true)} from connection ${request.context.connectionId};" +
      s"securityProtocol:${request.context.securityProtocol},principal:${request.context.principal}")
    request.header.apiKey match {
      case ApiKeys.PRODUCE => handleProduceRequest(request)
      // Fetch requests, from consumers as well as from follower replicas
      case ApiKeys.FETCH => handleFetchRequest(request)
      case ApiKeys.LIST_OFFSETS => handleListOffsetRequest(request)
      case ApiKeys.METADATA => handleTopicMetadataRequest(request)
      // Entry point for LEADER_AND_ISR requests sent to the broker
      case ApiKeys.LEADER_AND_ISR => handleLeaderAndIsrRequest(request)
      // Entry point for API_VERSIONS requests
      case ApiKeys.API_VERSIONS => handleApiVersionsRequest(request)
      ...
    }
  } catch {
    case e: FatalExitError => throw e
    case e: Throwable => handleError(request, e)
  } finally {
    request.apiLocalCompleteTimeNanos = time.nanoseconds
  }
}
```
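The shape of handle is a match on the API key, wrapped in a try/catch that turns failures into an error response. Everything in the sketch below is hypothetical:

- The three-element `ApiKey` type stands in for Kafka's ApiKeys.
- `Req` stands in for RequestChannel.Request.
- The string results stand in for the real handler methods.
- `NonFatal` replaces the explicit FatalExitError rethrow.

```scala
import scala.util.control.NonFatal

// Hypothetical subset of API keys; Kafka's ApiKeys has many more
sealed trait ApiKey
case object Produce extends ApiKey
case object Fetch extends ApiKey
case object ApiVersions extends ApiKey

final case class Req(apiKey: ApiKey, payload: String)

// Dispatch on the API key; unexpected non-fatal exceptions become an error response,
// similar in spirit to handleError in KafkaApis.handle
def handle(req: Req): String =
  try {
    req.apiKey match {
      case Produce => s"produced ${req.payload}"
      case Fetch =>
        if (req.payload.isEmpty) throw new IllegalArgumentException("empty fetch")
        s"fetched ${req.payload}"
      case ApiVersions => "api versions"
    }
  } catch {
    case NonFatal(e) => s"error: ${e.getMessage}"
  }
```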

The following walks through how an API_VERSIONS request is handled.

```scala
def handleApiVersionsRequest(request: RequestChannel.Request) {
  def createResponseCallback(requestThrottleMs: Int): ApiVersionsResponse = {
    val apiVersionRequest = request.body[ApiVersionsRequest]
    if (apiVersionRequest.hasUnsupportedRequestVersion)
      apiVersionRequest.getErrorResponse(requestThrottleMs, Errors.UNSUPPORTED_VERSION.exception)
    else
      ApiVersionsResponse.apiVersionsResponse(requestThrottleMs,
        config.interBrokerProtocolVersion.recordVersion.value)
  }
  // Build the response (with the throttle time) and send it
  sendResponseMaybeThrottle(request, createResponseCallback)
}

private def sendResponseMaybeThrottle(request: RequestChannel.Request,
                                      createResponse: Int => AbstractResponse,
                                      onComplete: Option[Send => Unit] = None): Unit = {
  // Record the request against the request quota and compute the throttle time
  val throttleTimeMs = quotas.request.maybeRecordAndGetThrottleTimeMs(request)
  quotas.request.throttle(request, throttleTimeMs, sendResponse)
  // Create the response with the throttle time and send it
  sendResponse(request, Some(createResponse(throttleTimeMs)), onComplete)
}

private def sendResponse(request: RequestChannel.Request,
                         responseOpt: Option[AbstractResponse],
                         onComplete: Option[Send => Unit]): Unit = {
  responseOpt.foreach(response => requestChannel.updateErrorMetrics(request.header.apiKey, response.errorCounts.asScala))
  val response = responseOpt match {
    case Some(response) =>
      val responseSend = request.context.buildResponse(response)
      val responseString =
        if (RequestChannel.isRequestLoggingEnabled) Some(response.toString(request.context.apiVersion))
        else None
      new RequestChannel.SendResponse(request, responseSend, responseString, onComplete)
    case None =>
      new RequestChannel.NoOpResponse(request)
  }
  // Wrap it as a RequestChannel.Response and send it
  sendResponse(response)
}

private def sendResponse(response: RequestChannel.Response): Unit = {
  // Hand the response to RequestChannel.sendResponse
  requestChannel.sendResponse(response)
}
```
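The chain above comes down to two decisions:

- The throttle time is computed before the response is built, so the `createResponse` callback can embed it in the response.
- An absent response becomes a NoOpResponse rather than a SendResponse, so nothing is written back to the client.

A simplified sketch follows. `ChannelResponse`, `SendResp`, `NoOpResp`, `toChannelResponse` and `sendMaybeThrottle` are hypothetical stand-ins. The throttle time is passed in rather than computed by a quota manager, and serialization is faked with a string.

```scala
// Hypothetical simplified response types mirroring two cases of RequestChannel.Response
sealed trait ChannelResponse
final case class SendResp(bytes: String, onComplete: Option[String => Unit]) extends ChannelResponse
case object NoOpResp extends ChannelResponse

// Mirrors sendResponse(request, responseOpt, onComplete):
// Some builds a response to send, None becomes a no-op response
def toChannelResponse(responseOpt: Option[String], onComplete: Option[String => Unit]): ChannelResponse =
  responseOpt match {
    case Some(body) => SendResp(s"serialized($body)", onComplete)
    case None       => NoOpResp
  }

// Mirrors sendResponseMaybeThrottle: the throttle time is known first,
// then the response is built through the callback so it can carry that value
def sendMaybeThrottle(throttleTimeMs: Int, createResponse: Int => String): ChannelResponse =
  toChannelResponse(Some(createResponse(throttleTimeMs)), None)
```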

SocketServer

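SocketServer is the implementation of Kafka's network server. Kafka divides requests into data-plane and control-plane requests. The data plane handles data requests such as produce and fetch. The control plane handles control requests such as LeaderAndIsr sent by the controller.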

```scala
class SocketServer(val config: KafkaConfig, val metrics: Metrics, val time: Time, val credentialProvider: CredentialProvider) extends Logging with KafkaMetricsGroup {

  // Capacity of the shared request queue, set by the broker config queued.max.requests (default 500)
  private val maxQueuedRequests = config.queuedMaxRequests

  // data-plane
  // Processor threads that handle data-plane requests, keyed by processor id
  private val dataPlaneProcessors = new ConcurrentHashMap[Int, Processor]()
  // Acceptor threads for data-plane requests: one Acceptor per listener (EndPoint)
  private[network] val dataPlaneAcceptors = new ConcurrentHashMap[EndPoint, Acceptor]()
  // RequestChannel for data-plane requests
  val dataPlaneRequestChannel = new RequestChannel(maxQueuedRequests, DataPlaneMetricPrefix)

  // control-plane
  // The single Processor thread for control-plane requests
  private var controlPlaneProcessorOpt : Option[Processor] = None
  // The single Acceptor thread for control-plane requests
  private[network] var controlPlaneAcceptorOpt : Option[Acceptor] = None
  // RequestChannel for control-plane requests (queue capacity 20); it exists only
  // when a control-plane listener (control.plane.listener.name) is configured
  val controlPlaneRequestChannelOpt: Option[RequestChannel] = config.controlPlaneListenerName.map(_ => new RequestChannel(20, ControlPlaneMetricPrefix))

  ...

  def startup(startupProcessors: Boolean = true) {
    this.synchronized {
      connectionQuotas = new ConnectionQuotas(config.maxConnectionsPerIp, config.maxConnectionsPerIpOverrides)
      createControlPlaneAcceptorAndProcessor(config.controlPlaneListener)
      createDataPlaneAcceptorsAndProcessors(config.numNetworkThreads, config.dataPlaneListeners)
      if (startupProcessors) {
        // Start the control-plane Processor
        startControlPlaneProcessor()
        // Start the data-plane Processors
        startDataPlaneProcessors()
      }
    }
    ...
  }
}
```
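How the two planes are wired can be summarized in a few lines. This is a minimal sketch under stated assumptions. `Planes`, `createPlanes` and `queueFor` are hypothetical, and plain queues stand in for RequestChannel. The listener names `CONTROLLER` and `PLAINTEXT` used in the checks are made up. The sketch reflects the code above:

- The data-plane queue capacity comes from queued.max.requests.
- The control-plane queue (capacity 20) exists only when a control-plane listener is configured.
- A request reaches the control plane only if it arrived on that listener.

```scala
import java.util.concurrent.ArrayBlockingQueue

// Hypothetical model of the request channels SocketServer creates
final case class Planes(dataQueue: ArrayBlockingQueue[String],
                        controlListener: Option[String],
                        controlQueue: Option[ArrayBlockingQueue[String]]) {
  // Requests go to the control plane only if they arrived on the control-plane listener
  def queueFor(listener: String): ArrayBlockingQueue[String] =
    if (controlListener.contains(listener)) controlQueue.getOrElse(dataQueue) else dataQueue
}

// queuedMaxRequests plays the role of queued.max.requests (default 500);
// controlPlaneListener plays the role of control.plane.listener.name
def createPlanes(queuedMaxRequests: Int = 500, controlPlaneListener: Option[String] = None): Planes =
  Planes(
    dataQueue = new ArrayBlockingQueue[String](queuedMaxRequests),
    controlListener = controlPlaneListener,
    controlQueue = controlPlaneListener.map(_ => new ArrayBlockingQueue[String](20))
  )
```

Without a control-plane listener, controller requests simply share the data-plane queue with client traffic. A dedicated listener gives them their own small queue.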

SocketServer is started in the startup method of KafkaServer.scala.

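```scala
// KafkaServer.scala (excerpt)
def startup() {
  socketServer = new SocketServer(config, metrics, time, credentialProvider)
  // Processors are not started here; KafkaServer.startup() starts them later,
  // after the other broker components
  socketServer.startup(startupProcessors = false)
}
```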