A summary of an error encountered in the code written for Chapter 4

    Traceback (most recent call last):
      File "H:\image\chapter4\p81_chongxie.py", line 160, in <module>
        l1 = Linear(X, W1, b1)
    TypeError: Linear() takes no arguments

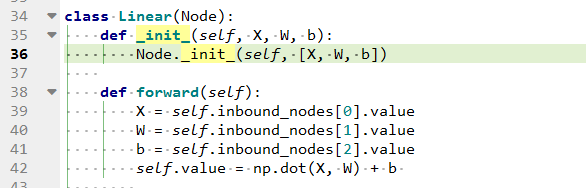

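Screenshot of the init method at the time the problem occurred

As the screenshot shows, init has only one underscore on each side: `_init_`.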

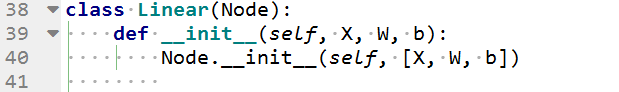

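Fix: change the single underscore on each side of init to two underscores, i.e. `__init__`. Python only treats a method named `__init__` as the constructor; a method named `_init_` is an ordinary method that is never called when the class is instantiated, so the arguments passed to `Linear(...)` are rejected.

Runtime environment: Windows 7 + Anaconda3 2020

The Chapter 4 code is as follows: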
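
The failure can be reproduced in isolation. The following is a minimal sketch rather than the chapter's code: `Broken`, `Fixed`, and `try_build` are hypothetical names introduced only for illustration, and the exact wording of the error message assumes Python 3.7 or later.

```python
class Broken(object):
    # Typo: single underscores. Python sees an ordinary method here,
    # not a constructor, so it is never called on instantiation.
    def _init_(self, x):
        self.x = x


class Fixed(object):
    # Correct spelling: two underscores on each side.
    def __init__(self, x):
        self.x = x


def try_build(cls, *args):
    """Return (instance, None) on success, or (None, error) on TypeError."""
    try:
        return cls(*args), None
    except TypeError as err:
        return None, err


broken_obj, broken_err = try_build(Broken, 1)
fixed_obj, fixed_err = try_build(Fixed, 1)

# Broken falls back to object's constructor, which accepts no arguments,
# so a TypeError of the same kind as in the traceback is raised.
print("Broken:", repr(broken_err))
print("Fixed: x =", fixed_obj.x)
```

Because the misspelled method is still valid Python, nothing fails at class definition time; the error only appears at the first call that passes arguments.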

    ####################### Data structures #######################
    import numpy as np
    import matplotlib.pyplot as plt

    # %matplotlib inline

    class Node(object):
        def __init__(self, inbound_nodes=None):
            # Avoid a shared mutable default argument
            self.inbound_nodes = inbound_nodes if inbound_nodes is not None else []
            self.value = None
            self.outbound_nodes = []

            self.gradients = {}

            # Register this node as a consumer of each of its inputs
            for node in self.inbound_nodes:
                node.outbound_nodes.append(self)

        def forward(self):
            raise NotImplementedError

        def backward(self):
            raise NotImplementedError


    class Input(Node):
        def __init__(self):
            Node.__init__(self)

        def forward(self):
            pass

        def backward(self):
            # Sum the gradients flowing back from every consumer
            self.gradients = {self: 0}
            for n in self.outbound_nodes:
                self.gradients[self] += n.gradients[self]

    ###############################################################
    class Linear(Node):
        def __init__(self, X, W, b):
            Node.__init__(self, [X, W, b])

        def forward(self):
            X = self.inbound_nodes[0].value
            W = self.inbound_nodes[1].value
            b = self.inbound_nodes[2].value
            self.value = np.dot(X, W) + b

        def backward(self):
            self.gradients = {n: np.zeros_like(n.value) for n in self.inbound_nodes}
            for n in self.outbound_nodes:
                grad_cost = n.gradients[self]
                self.gradients[self.inbound_nodes[0]] += np.dot(grad_cost, self.inbound_nodes[1].value.T)
                self.gradients[self.inbound_nodes[1]] += np.dot(self.inbound_nodes[0].value.T, grad_cost)
                self.gradients[self.inbound_nodes[2]] += np.sum(grad_cost, axis=0, keepdims=False)

    ###############################################################
    class Sigmoid(Node):
        def __init__(self, node):
            Node.__init__(self, [node])

        def _sigmoid(self, x):
            return 1. / (1. + np.exp(-x))  # np.exp(x) returns e raised to the power x

        def forward(self):
            input_value = self.inbound_nodes[0].value
            self.value = self._sigmoid(input_value)

        def backward(self):
            self.gradients = {n: np.zeros_like(n.value) for n in self.inbound_nodes}
            for n in self.outbound_nodes:
                grad_cost = n.gradients[self]
                sigmoid = self.value
                self.gradients[self.inbound_nodes[0]] += sigmoid * (1 - sigmoid) * grad_cost


    class MSE(Node):
        def __init__(self, y, a):
            Node.__init__(self, [y, a])

        def forward(self):
            y = self.inbound_nodes[0].value.reshape(-1, 1)
            a = self.inbound_nodes[1].value.reshape(-1, 1)

            self.m = self.inbound_nodes[0].value.shape[0]
            self.diff = y - a
            self.value = np.mean(self.diff**2)

        def backward(self):
            self.gradients[self.inbound_nodes[0]] = (2 / self.m) * self.diff
            self.gradients[self.inbound_nodes[1]] = (-2 / self.m) * self.diff


    ####################### Computation graph #######################
    def topological_sort(feed_dict):
        input_nodes = [n for n in feed_dict.keys()]
        G = {}
        nodes = [n for n in input_nodes]
        # Build the in/out edge sets for every node reachable from the inputs
        while len(nodes) > 0:
            n = nodes.pop(0)
            if n not in G:
                G[n] = {'in': set(), 'out': set()}
            for m in n.outbound_nodes:
                if m not in G:
                    G[m] = {'in': set(), 'out': set()}
                G[n]['out'].add(m)
                G[m]['in'].add(n)
                nodes.append(m)

        # Repeatedly emit nodes that have no remaining incoming edges
        L = []
        S = set(input_nodes)
        while len(S) > 0:
            n = S.pop()
            if isinstance(n, Input):
                n.value = feed_dict[n]
            L.append(n)
            for m in n.outbound_nodes:
                G[n]['out'].remove(m)
                G[m]['in'].remove(n)
                if len(G[m]['in']) == 0:
                    S.add(m)
        return L


    ####################### Usage #######################
    # First derive the execution order from the graph definition
    # graph = topological_sort(feed_dict)
    def forward_and_backward(graph):
        for n in graph:
            n.forward()

        for n in graph[::-1]:
            n.backward()

    # Run the forward pass and the backward pass (differentiation) over every node
    # forward_and_backward(graph)

    ####################### Gradient descent #######################
    def sgd_update(trainables, learning_rate=1e-2):
        for t in trainables:
            t.value = t.value - learning_rate * t.gradients[t]

    ####################### Using the model #######################
    from sklearn.utils import resample
    from sklearn import datasets

    # %matplotlib inline

    data = datasets.load_iris()
    X_ = data.data
    y_ = data.target
    y_[y_ == 2] = 1  # 0 = setosa, 1 = not setosa (versicolor or virginica)
    print(X_.shape, y_.shape)  # out (150, 4) (150,)

    ####### Define the neural network with the modules written above #######

    np.random.seed(0)
    n_features = X_.shape[1]
    n_class = 1
    n_hidden = 3

    X, y = Input(), Input()
    W1, b1 = Input(), Input()
    W2, b2 = Input(), Input()

    l1 = Linear(X, W1, b1)
    s1 = Sigmoid(l1)
    l2 = Linear(s1, W2, b2)
    t1 = Sigmoid(l2)
    cost = MSE(y, t1)


    ####################### Train the model #######################
    # Randomly initialize the parameter values
    W1_0 = np.random.random(X_.shape[1] * n_hidden).reshape([X_.shape[1], n_hidden])
    W2_0 = np.random.random(n_hidden * n_class).reshape([n_hidden, n_class])
    b1_0 = np.random.random(n_hidden)
    b2_0 = np.random.random(n_class)

    # Feed the input values into the nodes
    feed_dict = {
        X: X_, y: y_,
        W1: W1_0, b1: b1_0,
        W2: W2_0, b2: b2_0
    }

    # Training hyperparameters
    # Train for 100 epochs; each step draws 4 samples (batch_size),
    # with 150 // 4 = 37 steps per epoch (steps_per_epoch), learning rate 0.1
    epochs = 100
    m = X_.shape[0]
    batch_size = 4
    steps_per_epoch = m // batch_size
    lr = 0.1

    graph = topological_sort(feed_dict)
    trainables = [W1, b1, W2, b2]

    l_Mat_W1 = [W1_0]
    l_Mat_W2 = [W2_0]

    l_loss = []
    for i in range(epochs):
        loss = 0
        for j in range(steps_per_epoch):
            X_batch, y_batch = resample(X_, y_, n_samples=batch_size)
            X.value = X_batch
            y.value = y_batch

            forward_and_backward(graph)
            sgd_update(trainables, lr)
            loss += graph[-1].value  # the MSE node is the only sink, so it is sorted last

        l_loss.append(loss)
        if i % 10 == 9:
            print("Epoch %d, Loss = %1.5f" % (i, loss))


    # Plot the loss curve (the cost node is MSE, not cross entropy)
    plt.plot(l_loss)
    plt.title("MSE loss value")
    plt.xlabel("Epoch")
    plt.ylabel("Loss")
    plt.show()


    ########## Finally, run the model on all the data ##########
    X.value = X_
    y.value = y_
    for n in graph:
        n.forward()

    # Read the prediction from t1 directly: the sort pops from a set, so
    # graph[-2] is not guaranteed to be the output sigmoid node
    plt.plot(t1.value.ravel())
    plt.title("predict for all 150 Iris data")
    plt.xlabel("Sample ID")
    plt.ylabel("Probability of not being setosa")
    plt.show()
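
One way to gain confidence in the hand-written `backward()` formulas is to compare them with finite differences. The sketch below is a standalone simplification, not part of the chapter's code: it assumes a single Linear -> Sigmoid -> MSE chain with one output column, and the helper names `loss_fn`, `analytic_grads`, and `numeric_grad` are hypothetical. The analytic gradients reuse the same expressions as the `MSE`, `Sigmoid`, and `Linear` nodes above.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def loss_fn(X, W, b, y):
    """MSE of sigmoid(X @ W + b) against y (one output column)."""
    a = sigmoid(np.dot(X, W) + b)
    diff = y.reshape(-1, 1) - a.reshape(-1, 1)
    return np.mean(diff ** 2)


def analytic_grads(X, W, b, y):
    """Gradients built from the same formulas as the backward() methods."""
    m = y.shape[0]
    a = sigmoid(np.dot(X, W) + b)
    diff = y.reshape(-1, 1) - a.reshape(-1, 1)
    grad_a = (-2.0 / m) * diff              # MSE, w.r.t. the prediction
    grad_z = a * (1 - a) * grad_a           # Sigmoid
    grad_W = np.dot(X.T, grad_z)            # Linear, w.r.t. W
    grad_b = np.sum(grad_z, axis=0)         # Linear, w.r.t. b
    return grad_W, grad_b


def numeric_grad(f, param, eps=1e-6):
    """Central finite differences, perturbing one entry of param at a time."""
    grad = np.zeros_like(param)
    for idx in np.ndindex(param.shape):
        old = param[idx]
        param[idx] = old + eps
        f_plus = f()
        param[idx] = old - eps
        f_minus = f()
        param[idx] = old                    # restore the original value
        grad[idx] = (f_plus - f_minus) / (2 * eps)
    return grad


rng = np.random.RandomState(0)
X = rng.rand(5, 4)                          # 5 samples, 4 features
W = rng.rand(4, 1)
b = rng.rand(1)
y = rng.randint(0, 2, size=5).astype(float)

grad_W, grad_b = analytic_grads(X, W, b, y)
f = lambda: loss_fn(X, W, b, y)
err_W = np.max(np.abs(grad_W - numeric_grad(f, W)))
err_b = np.max(np.abs(grad_b - numeric_grad(f, b)))
print("max |analytic - numeric| for W:", err_W)
print("max |analytic - numeric| for b:", err_b)
```

If both differences are tiny, the chain-rule expressions agree with the numerical slope of the loss; a large difference would point to a sign or shape mistake in one of the backward steps.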