
Redis: Deletion Strategies and Eviction Policies

Introduction to Redis

Redis is a high-performance in-memory key-value database written in C. It can be used as a database, a cache, and a message broker.

Features

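- As an in-memory database it performs very well: data lives in memory, so reads and writes are fast, and a single instance can serve on the order of 100,000 QPS (queries per second). Commands are executed by a single thread in a single process, so each command runs atomically, and I/O multiplexing lets that one thread serve many connections.

- Rich data types: strings, hashes, lists, sets, sorted sets, and more. Persistence is supported: in-memory data can be saved to disk and loaded again on restart.

- Master-replica replication, Sentinel, and high availability. Redis can be used to build distributed locks, and it can act as a message broker with publish/subscribe.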

Deletion strategies and eviction policies

What is expired data?

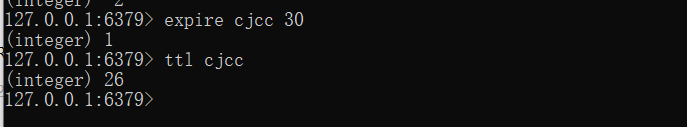

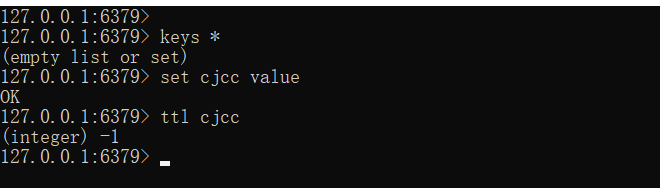

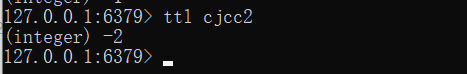

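Redis is an in-memory database: all data is held in memory, and the TTL command reports the expiry state of a key.

TTL returns one of the following:

- A non-negative integer: the key has an expiry, and the value is its remaining time to live in seconds. An expiry is set with one of these commands:

  - setex key seconds value
  - expire key seconds
  - expireat key timestamp
  - pexpire key milliseconds
  - pexpireat key milliseconds-timestamp

- -1: the key exists and never expires

- -2: the key does not exist (it has expired, was deleted, or was never set)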
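
The three kinds of TTL reply can be told apart with a few comparisons. The sketch below is a minimal illustration, assuming the reply has already been read into a `long long`; the type `ttl_state` and the function `classify_ttl` are hypothetical names, not part of Redis or any client library.

```c
#include <assert.h>

/* Hypothetical classification of a TTL reply (names are illustrative). */
typedef enum {
    TTL_MISSING,     /* -2: the key does not exist */
    TTL_PERSISTENT,  /* -1: the key exists and has no expiry */
    TTL_VOLATILE,    /* >= 0: the key exists, value is remaining seconds */
    TTL_UNKNOWN      /* any other negative value is not expected from TTL */
} ttl_state;

/* Map the integer returned by TTL to one of the states above. */
ttl_state classify_ttl(long long ttl) {
    if (ttl == -2) return TTL_MISSING;
    if (ttl == -1) return TTL_PERSISTENT;
    if (ttl >= 0)  return TTL_VOLATILE;
    return TTL_UNKNOWN;
}
```

A caller would treat `TTL_MISSING` the same way whether the key expired, was deleted, or never existed, because the reply does not distinguish those cases.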

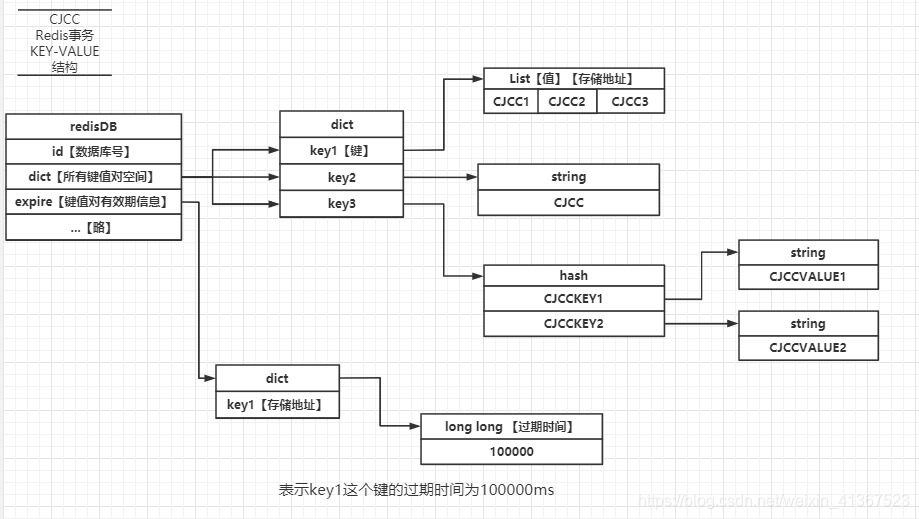

How Redis stores key-value data (the redisDb structure):

/* Redis database representation. There are multiple databases identified
 * by integers from 0 (the default database) up to the max configured
 * database. The database number is the 'id' field in the structure. */
typedef struct redisDb {
    dict *dict;                 /* The keyspace for this DB: holds all key-value pairs */
    dict *expires;              /* Timeout of keys with a timeout set */
    dict *blocking_keys;        /* Keys with clients waiting for data (BLPOP)*/
    dict *ready_keys;           /* Blocked keys that received a PUSH */
    dict *watched_keys;         /* WATCHED keys for MULTI/EXEC CAS (used for monitoring) */
    int id;                     /* Database ID: identifies which database this is */
    long long avg_ttl;          /* Average TTL, just for stats */
    unsigned long expires_cursor; /* Cursor of the active expire cycle. */
    list *defrag_later;         /* List of key names to attempt to defrag one by one, gradually. */
} redisDb;

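The fields of redisDb that matter here are:

- dict *dict; the keyspace, which holds all key-value pairs
- dict *expires; the expiry times of keys that have one
- int id; the database number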

See Figure 1.

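Is expired data really deleted?

Expired data is data that was given an expiry time and has now reached it, so it is no longer valid.

Handling a single command costs the CPU very little. But when many clients send a large number of create, read, update, and delete commands at the same time, CPU pressure rises, performance drops, and commands queue up waiting for CPU time. This suggests an optimization: reads, inserts, and updates have to be processed as they arrive, but removing expired data is not urgent. If memory is not tight and has not reached its threshold, expired data can stay in memory and be removed when the server has time to spare. In other words, data is not necessarily removed at the moment it expires; it stays in memory until something deletes it. How and when that happens is decided by the deletion strategy.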

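Deletion strategies

Three deletion strategies are usually discussed: 1. timed deletion, 2. lazy deletion, 3. periodic deletion. Redis itself combines lazy and periodic deletion.

The goal of a deletion strategy is to balance memory usage against CPU usage, so that neither side is overloaded to the point of degrading overall performance or even crashing the server or exhausting memory.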

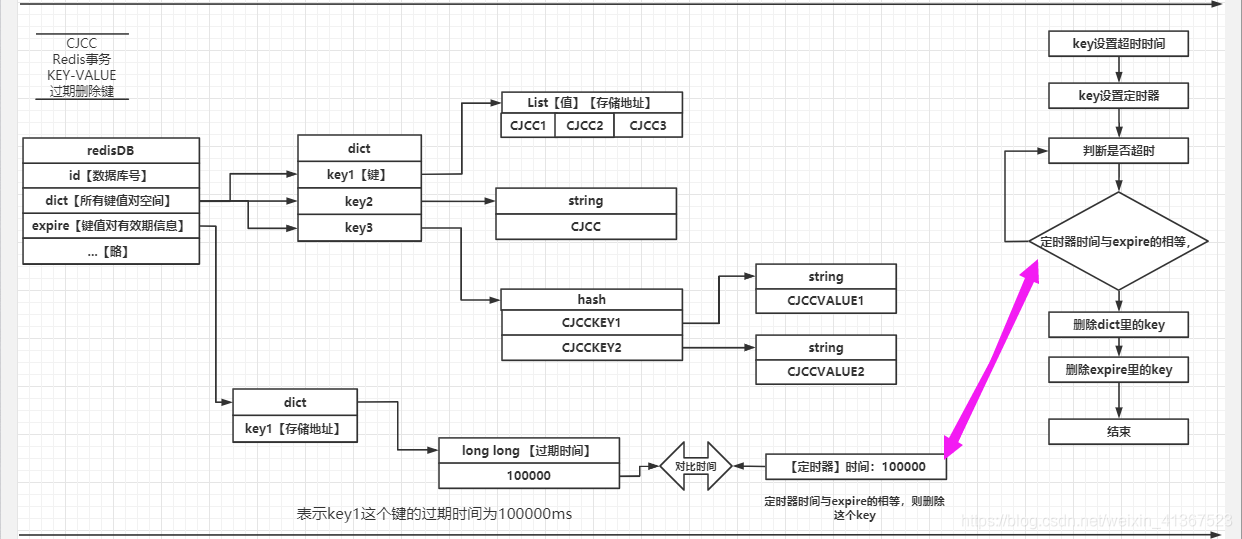

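Timed deletion

When an expiry is set on a key, a timer event is created. When the expiry time arrives, the timer task deletes the key immediately: the stored value is removed first, then the key's entry in expires.

Pros: saves memory. Data is deleted as soon as it expires, so memory that is no longer needed is released quickly.

Cons: high CPU pressure. The deletion runs no matter how loaded the CPU is at that moment, which hurts the server's response time and throughput, so it is a relatively inefficient approach.

Conclusion: trades CPU for memory (time for space).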

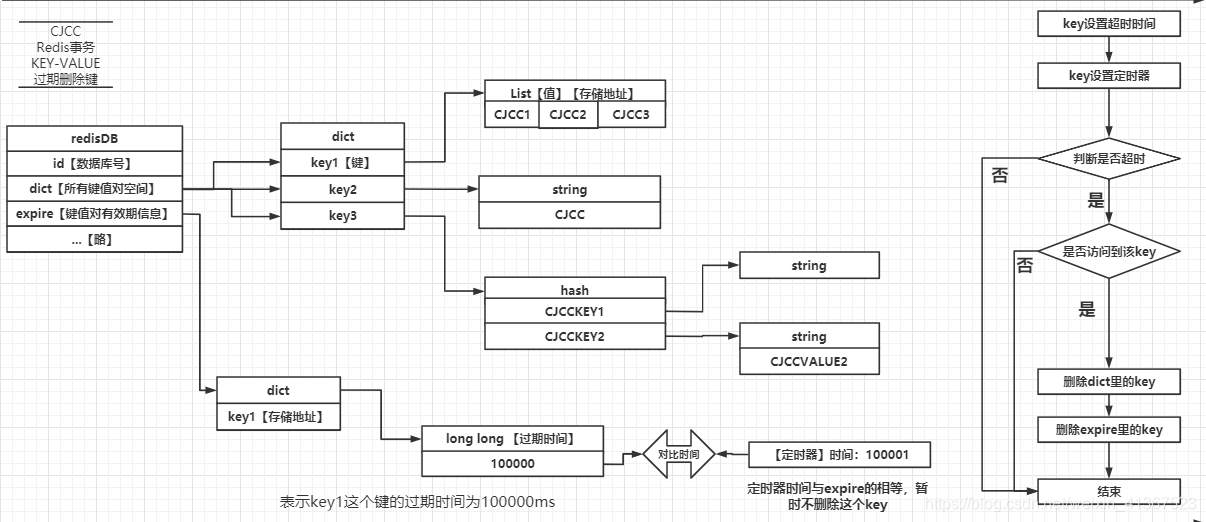

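Lazy deletion (passive deletion)

When a key reaches its expiry time it is not processed immediately. It is deleted the next time it is accessed (the access path calls expireIfNeeded() to check).

Pros: saves CPU. Expiry is checked only when a key is accessed; if the key has expired it is deleted, and only that one key is touched while all others stay as they are.

Cons: high memory usage. A key that is never accessed again occupies memory indefinitely, and a large number of unused expired keys wastes a large amount of memory, which is unfriendly for an in-memory database.

Conclusion: trades memory for CPU (space for time).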
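
To make the idea concrete, here is a minimal sketch of lazy deletion on a toy fixed-size store. It is not the Redis implementation: `demo_store`, `demo_set`, `demo_get`, and `demo_used` are hypothetical names, time is passed in explicitly as `now`, and keys are borrowed string pointers rather than owned copies.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define DEMO_SLOTS 8

/* Hypothetical toy entry, not a Redis type. */
typedef struct {
    const char *key;      /* NULL means the slot is empty */
    const char *value;
    long long expire_at;  /* absolute time in ms; -1 means no expiry */
} demo_entry;

typedef struct {
    demo_entry slots[DEMO_SLOTS];
} demo_store;

/* Linear search for a key; returns NULL when it is not stored. */
static demo_entry *demo_find(demo_store *s, const char *key) {
    for (int i = 0; i < DEMO_SLOTS; i++)
        if (s->slots[i].key != NULL && strcmp(s->slots[i].key, key) == 0)
            return &s->slots[i];
    return NULL;
}

/* Insert or overwrite a key. Returns 0 on success, -1 if the store is full. */
int demo_set(demo_store *s, const char *key, const char *value, long long expire_at) {
    assert(s != NULL && key != NULL);
    demo_entry *e = demo_find(s, key);
    for (int i = 0; e == NULL && i < DEMO_SLOTS; i++)
        if (s->slots[i].key == NULL) e = &s->slots[i];
    if (e == NULL) return -1;
    e->key = key;
    e->value = value;
    e->expire_at = expire_at;
    return 0;
}

/* Lazy deletion: the expiry is checked only when the key is accessed. */
const char *demo_get(demo_store *s, const char *key, long long now) {
    demo_entry *e = demo_find(s, key);
    if (e == NULL) return NULL;
    if (e->expire_at != -1 && now > e->expire_at) {
        e->key = NULL;    /* expired: delete this one key on access */
        e->value = NULL;
        return NULL;
    }
    return e->value;
}

/* Count occupied slots, including expired entries nobody has read yet. */
int demo_used(const demo_store *s) {
    int used = 0;
    for (int i = 0; i < DEMO_SLOTS; i++)
        if (s->slots[i].key != NULL) used++;
    return used;
}
```

Note how an expired entry keeps its slot until `demo_get` touches it; that is exactly the memory cost described above.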

The main functions involved in expiry deletion (db.c):

int expireIfNeeded(redisDb *db, robj *key)
int keyIsExpired(redisDb *db, robj *key)
long long getExpire(redisDb *db, robj *key)

notifyKeyspaceEvent(NOTIFY_EXPIRED,"expired",key,db->id);
server.lazyfree_lazy_expire ? dbAsyncDelete(db,key) : dbSyncDelete(db,key);

int expireIfNeeded(redisDb *db, robj *key) {
    if (!keyIsExpired(db,key)) return 0; /* The key has not expired */

    /* If we are running in the context of a slave, instead of
     * evicting the expired key from the database, we return ASAP:
     * the slave key expiration is controlled by the master that will
     * send us synthesized DEL operations for expired keys.
     *
     * Still we try to return the right information to the caller,
     * that is, 0 if we think the key should be still valid, 1 if
     * we think the key is expired at this time. */
    if (server.masterhost != NULL) return 1;

    /* Delete the key */
    server.stat_expiredkeys++;
    propagateExpire(db,key,server.lazyfree_lazy_expire);
    notifyKeyspaceEvent(NOTIFY_EXPIRED,
        "expired",key,db->id);
    /* Perform the deletion, asynchronously if lazyfree is enabled */
    int retval = server.lazyfree_lazy_expire ? dbAsyncDelete(db,key) :
                                               dbSyncDelete(db,key);
    if (retval) signalModifiedKey(NULL,db,key);
    return retval;
}

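Periodic deletion (active deletion)

The two approaches above, (1) trading time for space and (2) trading space for time, are both extremes. To avoid their drawbacks, Redis uses periodic deletion as a compromise between them.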

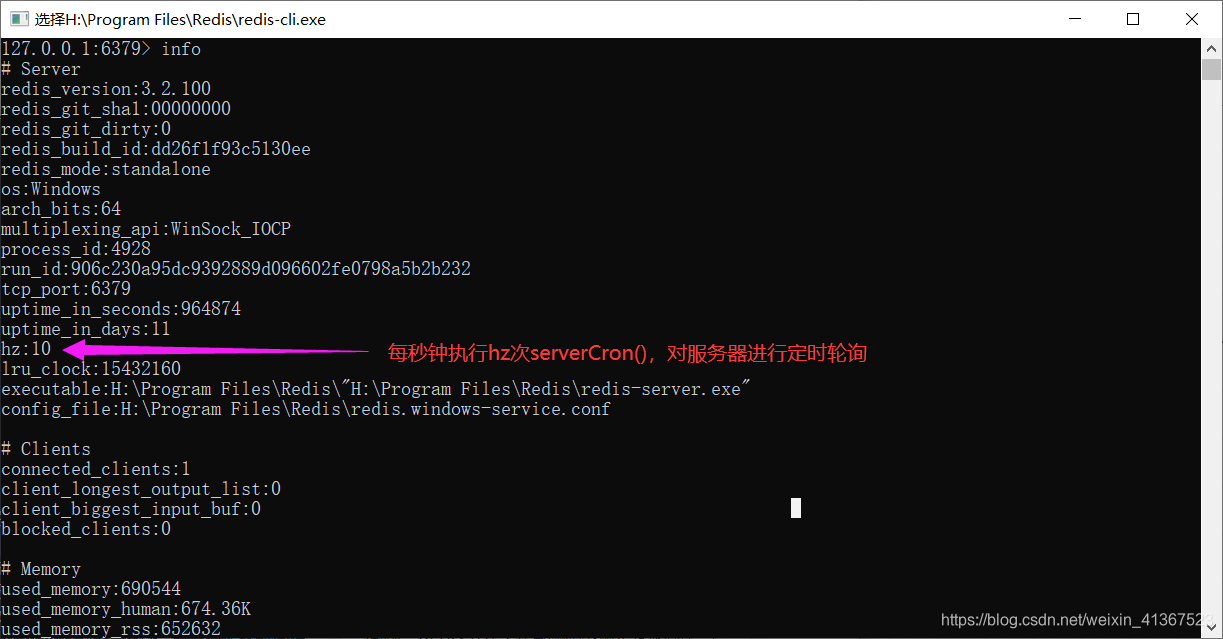

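Redis periodically polls the keys that have an expiry in each database, samples them at random, and uses the share of expired keys in each sample to control how often deletion runs.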

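- When the Redis server initializes, it reads the value of server.hz, which defaults to 10.

- The server polls on a timer, calling serverCron() server.hz times per second. serverCron() calls databasesCron(), which handles background work across the Redis databases (16 by default), including expired-key handling in activeExpireCycle(). activeExpireCycle() checks the expires space of each database, and each run lasts at most 250ms/server.hz:

  - Randomly select a batch of keys from the expires space (Redis has 16 databases by default, numbered 0 through 15, visited starting from 0).

  - Delete the keys in the batch that have expired.

  - If more than 25% of the batch had expired, repeat from the first step (loop until the share drops below 25%, then finish with the current database).

  - If 25% or fewer of the batch had expired, move on to the expires space of the next database (current_db++).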
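
The sampling loop described in the list above can be sketched as follows. This is a simplified model, not the code of activeExpireCycle(): the "expires space" is a plain array of expiry times, `DEMO_SAMPLE` is an assumed batch size, `max_rounds` stands in for the time limit, and all `demo_` names are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

#define DEMO_SAMPLE 20         /* keys examined per round (assumed value) */
#define DEMO_THRESHOLD_PCT 25  /* repeat while more than this share expired */

/* Hypothetical toy "expires space": an array of absolute expiry times. */
typedef struct {
    long long *expire_at;  /* expiry time of each key that has one */
    size_t count;          /* number of live entries */
} demo_expires;

/* Delete entry i by moving the last entry into its place. */
static void demo_remove(demo_expires *db, size_t i) {
    db->expire_at[i] = db->expire_at[db->count - 1];
    db->count--;
}

/* Run the sampling loop on one database; returns the number of keys deleted. */
size_t demo_expire_db(demo_expires *db, long long now, int max_rounds) {
    size_t deleted = 0;
    assert(db != NULL);
    for (int round = 0; round < max_rounds && db->count > 0; round++) {
        size_t sampled = db->count < DEMO_SAMPLE ? db->count : DEMO_SAMPLE;
        size_t expired = 0;
        for (size_t n = 0; n < sampled && db->count > 0; n++) {
            size_t i = (size_t)rand() % db->count;  /* random pick, with replacement */
            if (db->expire_at[i] < now) {
                demo_remove(db, i);
                expired++;
            }
        }
        deleted += expired;
        /* Stop once 25% or less of the sample had expired. */
        if (expired * 100 <= sampled * DEMO_THRESHOLD_PCT) break;
    }
    return deleted;
}

/* Visit every database in turn, starting from database 0. */
size_t demo_expire_cycle(demo_expires *dbs, size_t dbnum, long long now, int max_rounds) {
    size_t deleted = 0;
    for (size_t current_db = 0; current_db < dbnum; current_db++)
        deleted += demo_expire_db(&dbs[current_db], now, max_rounds);
    return deleted;
}
```

The 25% test is what makes the loop adaptive: a database full of expired keys is swept repeatedly, while one with few expired keys is left after a single sample.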

Use the INFO command to view related parameters, such as the hz setting.

Code location:

server.c

/* This is our timer interrupt, called server.hz times per second.
 * Here is where we do a number of things that need to be done asynchronously.
 * For instance:
 *
 * - Active expired keys collection (it is also performed in a lazy way on lookup).
 * - ...
 */
int serverCron(struct aeEventLoop *eventLoop, long long id, void *clientData) {
    /* Handle background operations on Redis databases. */
    databasesCron();

    /* ... rest omitted ... */
}

server.c

/* This function handles 'background' operations we are required to do
 * incrementally in Redis databases, such as active key expiring, resizing,
 * rehashing. */
void databasesCron(void) {
    /* Expire keys by random sampling. Not required for slaves
     * as master will synthesize DELs for us. */
    if (server.active_expire_enabled) {
        if (iAmMaster()) {
            activeExpireCycle(ACTIVE_EXPIRE_CYCLE_SLOW);
        } else {
            expireSlaveKeys();
        }
    }

    /* ... rest omitted ... */
}

expire.c

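void activeExpireCycle(int type) {
    /* The full function is too long to reproduce here. Its main flow is:
     *
     * 1. Randomly select a batch of keys from the expires space
     *    (databases are visited in order, from 0 through 15).
     * 2. Delete the keys in the batch that have expired.
     * 3. If more than 25% of the batch had expired, repeat from step 1
     *    (loop until the share drops below 25%, then finish with the
     *    current database).
     * 4. If 25% or fewer of the batch had expired, move on to the expires
     *    space of the next database (current_db++). */
}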

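Besides the frequency of active expiry, Redis also caps how long each active expiry run may last, so that a run never blocks client requests for too long. The cap is computed as follows: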

#define ACTIVE_EXPIRE_CYCLE_SLOW_TIME_PERC 25 /* Max % of CPU to use. */

/* Adjust the running parameters according to the configured expire
 * effort. The default effort is 1, and the maximum configurable effort
 * is 10. */
unsigned long effort = server.active_expire_effort-1; /* Rescale from 0 to 9. */
unsigned long config_cycle_slow_time_perc = ACTIVE_EXPIRE_CYCLE_SLOW_TIME_PERC + 2*effort;

/* We can use at max 'config_cycle_slow_time_perc' percentage of CPU
 * time per iteration. Since this function gets called with a frequency of
 * server.hz times per second, the following is the max amount of
 * microseconds we can spend in this function. */
timelimit = config_cycle_slow_time_perc*1000000/server.hz/100;
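
Plugging in the defaults makes the formula easier to read: with an effort of 1 and server.hz of 10, the budget is 25 * 1000000 / 10 / 100 = 25000 microseconds, or 25 ms per run, which agrees with the 250ms/server.hz figure given earlier. The helper below is a small sketch that repeats the same arithmetic in isolation; `demo_slow_cycle_timelimit_us` is a hypothetical name and takes the two settings as plain parameters instead of reading them from `server`.

```c
#include <assert.h>

#define ACTIVE_EXPIRE_CYCLE_SLOW_TIME_PERC 25 /* same value as in the excerpt above */

/* Hypothetical helper: time budget, in microseconds, of one slow expire cycle. */
long long demo_slow_cycle_timelimit_us(int active_expire_effort, int hz) {
    assert(hz > 0);
    assert(active_expire_effort >= 1 && active_expire_effort <= 10);
    unsigned long effort = (unsigned long)(active_expire_effort - 1); /* rescale to 0..9 */
    unsigned long perc = ACTIVE_EXPIRE_CYCLE_SLOW_TIME_PERC + 2 * effort;
    return (long long)perc * 1000000 / hz / 100;
}
```

Raising the effort to 10 lifts the share from 25% to 43%, and raising hz shortens each run while making runs more frequent.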

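Conclusion: CPU usage has a configured ceiling, the check frequency is configurable, memory pressure stays moderate, and cold data that would otherwise linger in memory is cleaned up continuously (periodic random sampling, with extra passes where many keys have expired).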

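Comparison of deletion strategies

- Timed deletion (time for space)

  - Saves memory; expired data does not linger

  - Uses CPU regardless of the time or load; high frequency

- Lazy deletion (space for time)

  - High memory usage

  - Deletion is deferred, so the CPU is not occupied continuously; low CPU pressure, low frequency

- Periodic deletion (periodic random sampling)

  - Memory is cleaned up periodically by random sampling

  - A fixed share of CPU is spent every second on maintaining memory (removing expired data)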

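Eviction policies

Redis constantly creates, reads, updates, and deletes data. What happens if memory runs out while data is being added? Can the deletion strategies above prevent that?

They cannot. The deletion strategies apply only to data that has an expiry, that is, data that has already expired but still occupies memory. The case here is different: the data has not expired yet, or none of it has an expiry at all and everything is permanent. The deletion strategies do nothing in that case, so what does Redis do when memory is full and more data is inserted?

Overview

When maxmemory is set, Redis calls freeMemoryIfNeeded(void) before executing each command to check whether memory is sufficient. If memory cannot meet the minimum needed to store the new data, Redis deletes some existing data to make room for it. The algorithm that chooses what to remove is called the eviction algorithm.

Eviction is not guaranteed to free enough usable memory. If one attempt does not free enough, it is repeated; if the requirement still cannot be met after all data has been tried, Redis returns an error.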
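
The retry-until-enough behaviour can be modelled in a few lines. The sketch below is a deliberately simplified picture, not Redis code: evictable keys are represented only by their sizes in bytes, keys are taken in array order rather than chosen by a policy, and `demo_free_memory`, `DEMO_OK`, and `DEMO_ERR` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

#define DEMO_OK 0
#define DEMO_ERR (-1)

/* Hypothetical toy model: sizes[i] is the memory held by evictable key i.
 * Evicts keys until usage is back under maxmemory or nothing is left.
 * Stores the number of evicted keys in *evicted. */
int demo_free_memory(size_t *sizes, size_t count, size_t used,
                     size_t maxmemory, size_t *evicted) {
    assert(sizes != NULL && evicted != NULL);
    *evicted = 0;
    if (used <= maxmemory) return DEMO_OK;  /* under the limit: nothing to do */

    size_t to_free = used - maxmemory;
    size_t freed = 0;
    for (size_t i = 0; i < count && freed < to_free; i++) {
        freed += sizes[i];  /* "delete" key i and account for its memory */
        sizes[i] = 0;
        (*evicted)++;
    }
    /* DEMO_ERR means every candidate was tried and it was still not enough;
     * the caller would then reject the write with an error. */
    return freed >= to_free ? DEMO_OK : DEMO_ERR;
}
```

The two return values correspond to the two outcomes in the text: either enough memory was reclaimed and the command proceeds, or eviction failed and the command is refused.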

The steps are roughly as follows:

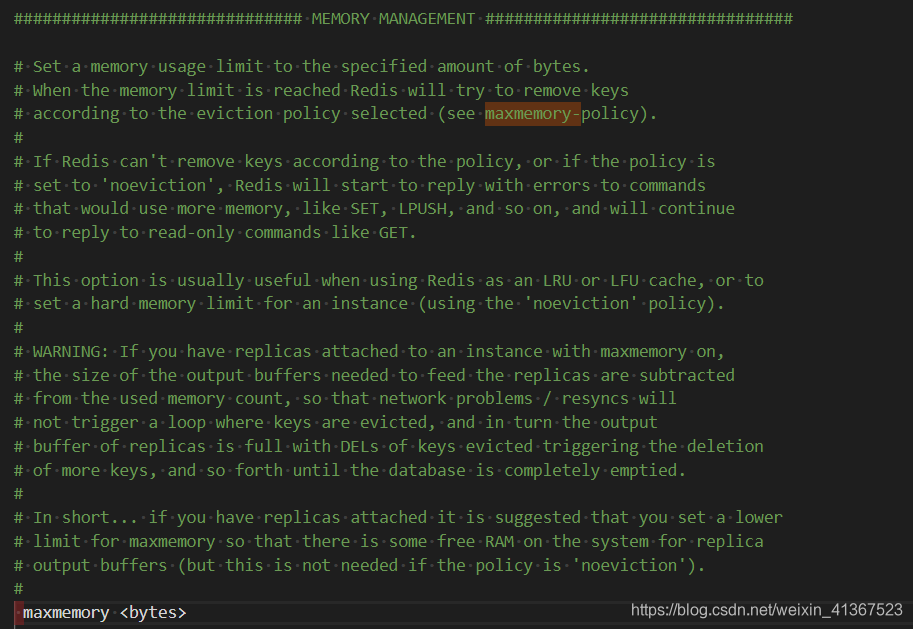

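In redis.conf (or redis.windows-service.conf), maxmemory <bytes> limits how much memory Redis may use; for example, maxmemory 100mb limits it to 100 MB. The default value, 0, means no limit. It is usually set to 50% or more of physical memory.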

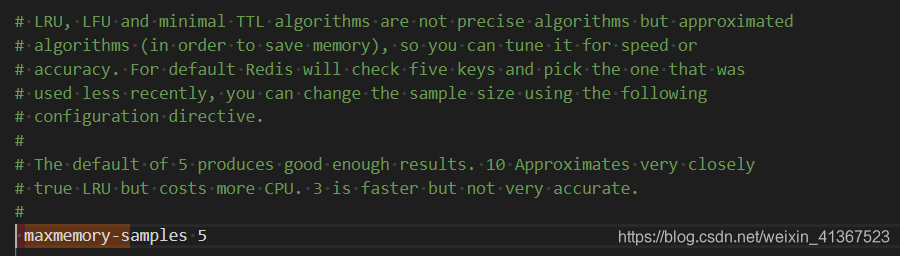

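In redis.conf (or redis.windows-service.conf), maxmemory-samples x sets how many keys are sampled each time a key is chosen for eviction. Redis does not scan the whole database when choosing, because that would seriously hurt read and write performance; instead it picks keys at random and treats them as the candidates for deletion.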
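
Sampling instead of scanning can be sketched like this for an LRU-style choice. It is a minimal illustration under simplifying assumptions, not the eviction pool Redis maintains: `demo_key` and `demo_pick_lru` are hypothetical names, and the `samples` parameter plays the role of maxmemory-samples.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical toy key record, not a Redis type. */
typedef struct {
    int id;                 /* stands in for the key name */
    long long last_access;  /* time of last access; smaller means idle longer */
} demo_key;

/* Choose an eviction candidate by looking at `samples` random keys only.
 * Returns the index of the sampled key that has been idle the longest. */
size_t demo_pick_lru(const demo_key *keys, size_t count, size_t samples) {
    assert(keys != NULL && count > 0 && samples > 0);
    size_t best = (size_t)rand() % count;
    for (size_t n = 1; n < samples; n++) {
        size_t i = (size_t)rand() % count;
        if (keys[i].last_access < keys[best].last_access) best = i;
    }
    return best;
}
```

The result is the oldest key among the sampled ones, which is not necessarily the oldest key overall. A larger sample gets closer to true LRU at the cost of more work per eviction.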

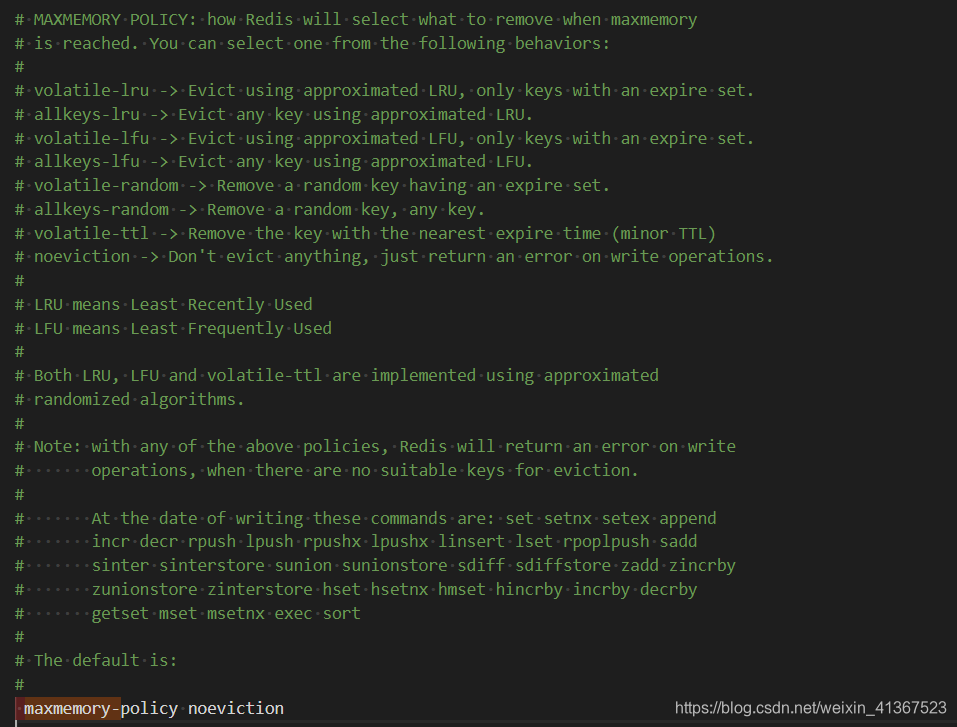

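In redis.conf (or redis.windows-service.conf), maxmemory-policy noeviction sets the eviction policy; the default is noeviction. When Redis memory exceeds the limit, the eviction mechanism is triggered and the selected data is deleted.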

Code flow:

Redis handles every command in int processCommand(client *c). Inside that function it calls int freeMemoryIfNeededAndSafe(void) to check the memory situation.

int processCommand(client *c) {
    /* ... omitted ... */

    /* Handle the maxmemory directive.
     *
     * Note that we do not want to reclaim memory if we are here re-entering
     * the event loop since there is a busy Lua script running in timeout
     * condition, to avoid mixing the propagation of scripts with the
     * propagation of DELs due to eviction. */
    if (server.maxmemory && !server.lua_timedout) {
        int out_of_memory = freeMemoryIfNeededAndSafe() == C_ERR;
        /* freeMemoryIfNeeded may flush slave output buffers. This may result
         * into a slave, that may be the active client, to be freed. */
        if (server.current_client == NULL) return C_ERR;

        /* It was impossible to free enough memory, and the command the client
         * is trying to execute is denied during OOM conditions or the client
         * is in MULTI/EXEC context? Error. */
        if (out_of_memory &&
            (c->cmd->flags & CMD_DENYOOM ||
             (c->flags & CLIENT_MULTI &&
              c->cmd->proc != execCommand &&
              c->cmd->proc != discardCommand)))
        {
            flagTransaction(c);
            addReply(c, shared.oomerr);
            return C_OK;
        }

        /* Save out_of_memory result at script start, otherwise if we check OOM
         * until first write within script, memory used by lua stack and
         * arguments might interfere. */
        if (c->cmd->proc == evalCommand || c->cmd->proc == evalShaCommand) {
            server.lua_oom = out_of_memory;
        }
    }

    /* ... omitted ... */
}

int freeMemoryIfNeededAndSafe(void) in turn calls freeMemoryIfNeeded(), the function that actually checks whether the memory currently in use exceeds the configured maximum.

/* This is a wrapper for freeMemoryIfNeeded() that only really calls the
 * function if right now there are the conditions to do so safely:
 *
 * - There must be no script in timeout condition.
 * - Nor we are loading data right now.
 *
 */
int freeMemoryIfNeededAndSafe(void) {
    if (server.lua_timedout || server.loading) return C_OK;
    return freeMemoryIfNeeded();
}

int freeMemoryIfNeeded(void) computes memory usage and then selects the keys to evict.

/* This function is periodically called to see if there is memory to free
 * according to the current "maxmemory" settings. In case we are over the
 * memory limit, the function will try to free some memory to return back
 * under the limit.
 *
 * The function returns C_OK if we are under the memory limit or if we
 * were over the limit, but the attempt to free memory was successful.
 * Otherwise if we are over the memory limit, but not enough memory
 * was freed to return back under the limit, the function returns C_ERR. */
int freeMemoryIfNeeded(void) {
    int keys_freed = 0;
    /* By default replicas should ignore maxmemory
     * and just be masters exact copies. */
    if (server.masterhost && server.repl_slave_ignore_maxmemory) return C_OK;

    size_t mem_reported, mem_tofree, mem_freed;
    mstime_t latency, eviction_latency, lazyfree_latency;
    long long delta;
    int slaves = listLength(server.slaves);
    int result = C_ERR;

    /* When clients are paused the dataset should be static not just from the
     * POV of clients not being able to write, but also from the POV of
     * expires and evictions of keys not being performed. */
    if (clientsArePaused()) return C_OK;
    if (getMaxmemoryState(&mem_reported,NULL,&mem_tofree,NULL) == C_OK)
        return C_OK;

    mem_freed = 0;

    latencyStartMonitor(latency);
    if (server.maxmemory_policy == MAXMEMORY_NO_EVICTION)
        goto cant_free; /* We need to free memory, but policy forbids. */

    while (mem_freed < mem_tofree) {
        int j, k, i;
        static unsigned int next_db = 0;
        sds bestkey = NULL;
        int bestdbid;
        redisDb *db;
        dict *dict;
        dictEntry *de;

        if (server.maxmemory_policy & (MAXMEMORY_FLAG_LRU|MAXMEMORY_FLAG_LFU) ||
            server.maxmemory_policy == MAXMEMORY_VOLATILE_TTL)
        {
            struct evictionPoolEntry *pool = EvictionPoolLRU;

            while(bestkey == NULL) {
                unsigned long total_keys = 0, keys;

                /* We don't want to make local-db choices when expiring keys,
                 * so to start populate the eviction pool sampling keys from
                 * every DB. */
                for (i = 0; i < server.dbnum; i++) {
                    db = server.db+i;
                    dict = (server.maxmemory_policy & MAXMEMORY_FLAG_ALLKEYS) ?
                            db->dict : db->expires;
                    if ((keys = dictSize(dict)) != 0) {
                        evictionPoolPopulate(i, dict, db->dict, pool);
                        total_keys += keys;
                    }
                }
                if (!total_keys) break; /* No keys to evict. */

                /* Go backward from best to worst element to evict. */
                for (k = EVPOOL_SIZE-1; k >= 0; k--) {
                    if (pool[k].key == NULL) continue;
                    bestdbid = pool[k].dbid;

                    if (server.maxmemory_policy & MAXMEMORY_FLAG_ALLKEYS) {
                        de = dictFind(server.db[pool[k].dbid].dict,
                            pool[k].key);
                    } else {
                        de = dictFind(server.db[pool[k].dbid].expires,
                            pool[k].key);
                    }

                    /* Remove the entry from the pool. */
                    if (pool[k].key != pool[k].cached)
                        sdsfree(pool[k].key);
                    pool[k].key = NULL;
                    pool[k].idle = 0;

                    /* If the key exists, is our pick. Otherwise it is
                     * a ghost and we need to try the next element. */
                    if (de) {
                        bestkey = dictGetKey(de);
                        break;
                    } else {
                        /* Ghost... Iterate again. */
                    }
                }
            }
        }

        /* volatile-random and allkeys-random policy */
        else if (server.maxmemory_policy == MAXMEMORY_ALLKEYS_RANDOM ||
                 server.maxmemory_policy == MAXMEMORY_VOLATILE_RANDOM)
        {
            /* When evicting a random key, we try to evict a key for
             * each DB, so we use the static 'next_db' variable to
             * incrementally visit all DBs. */
            for (i = 0; i < server.dbnum; i++) {
                j = (++next_db) % server.dbnum;
                db = server.db+j;
                dict = (server.maxmemory_policy == MAXMEMORY_ALLKEYS_RANDOM) ?
                        db->dict : db->expires;
                if (dictSize(dict) != 0) {
                    de = dictGetRandomKey(dict);
                    bestkey = dictGetKey(de);
                    bestdbid = j;
                    break;
                }
            }
        }

        /* Finally remove the selected key. */
        if (bestkey) {
            db = server.db+bestdbid;
            robj *keyobj = createStringObject(bestkey,sdslen(bestkey));
            propagateExpire(db,keyobj,server.lazyfree_lazy_eviction);
            /* We compute the amount of memory freed by db*Delete() alone.
             * It is possible that actually the memory needed to propagate
             * the DEL in AOF and replication link is greater than the one
             * we are freeing removing the key, but we can't account for
             * that otherwise we would never exit the loop.
             *
             * AOF and Output buffer memory will be freed eventually so
             * we only care about memory used by the key space. */
            delta = (long long) zmalloc_used_memory();
            latencyStartMonitor(eviction_latency);
            if (server.lazyfree_lazy_eviction)
                dbAsyncDelete(db,keyobj);
            else
                dbSyncDelete(db,keyobj);
            signalModifiedKey(NULL,db,keyobj);
            latencyEndMonitor(eviction_latency);
            latencyAddSampleIfNeeded("eviction-del",eviction_latency);
            delta -= (long long) zmalloc_used_memory();
            mem_freed += delta;
            server.stat_evictedkeys++;
            notifyKeyspaceEvent(NOTIFY_EVICTED, "evicted",
                keyobj, db->id);
            decrRefCount(keyobj);
            keys_freed++;

            /* When the memory to free starts to be big enough, we may
             * start spending so much time here that is impossible to
             * deliver data to the slaves fast enough, so we force the
             * transmission here inside the loop. */
            if (slaves) flushSlavesOutputBuffers();

            /* Normally our stop condition is the ability to release
             * a fixed, pre-computed amount of memory. However when we
             * are deleting objects in another thread, it's better to
             * check, from time to time, if we already reached our target
             * memory, since the "mem_freed" amount is computed only
             * across the dbAsyncDelete() call, while the thread can
             * release the memory all the time. */
            if (server.lazyfree_lazy_eviction && !(keys_freed % 16)) {
                if (getMaxmemoryState(NULL,NULL,NULL,NULL) == C_OK) {
                    /* Let's satisfy our stop condition. */
                    mem_freed = mem_tofree;
                }
            }
        } else {
            goto cant_free; /* nothing to free... */
        }
    }
    result = C_OK;

cant_free:
    /* We are here if we are not able to reclaim memory. There is only one
     * last thing we can try: check if the lazyfree thread has jobs in queue
     * and wait... */
    if (result != C_OK) {
        latencyStartMonitor(lazyfree_latency);
        while(bioPendingJobsOfType(BIO_LAZY_FREE)) {
            if (getMaxmemoryState(NULL,NULL,NULL,NULL) == C_OK) {
                result = C_OK;
                break;
            }
            usleep(1000);
        }
        latencyEndMonitor(lazyfree_latency);
        latencyAddSampleIfNeeded("eviction-lazyfree",lazyfree_latency);
    }
    latencyEndMonitor(latency);
    latencyAddSampleIfNeeded("eviction-cycle",latency);
    return result;
}

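Eviction policies and related configuration

random: evict a randomly chosen key from the expires space or the dict space.

volatile: consider only the expires space, that is, keys that have an expiry set (volatile-ttl evicts the keys closest to expiring first).

allkeys: consider the whole dict space, that is, all keys.

Approximated LRU (Least Recently Used): evict the keys that have gone unused the longest.

Approximated LFU (Least Frequently Used): evict the keys that are used least often.

1. Evict among keys that have an expiry (the expires space of database i)

- volatile-lru: among keys with an expiry, evict the least recently used (Evict using approximated LRU, only keys with an expire set.)

- volatile-lfu: among keys with an expiry, evict the least frequently used (Evict using approximated LFU, only keys with an expire set.)

- volatile-random: among keys with an expiry, evict one at random (Remove a random key having an expire set.)

- volatile-ttl: among keys with an expiry, evict the one with the shortest remaining TTL (Remove the key with the nearest expire time (minor TTL))

2. Evict among all keys (the dict space of database i)

- allkeys-lru: among all keys, evict the least recently used (Evict any key using approximated LRU.)

- allkeys-lfu: among all keys, evict the least frequently used (Evict any key using approximated LFU.)

- allkeys-random: among all keys, evict one at random (Remove a random key, any key.)

Different policies work on different sets of keys: candidates come either from the expires space or from the dict space. The following two fragments of freeMemoryIfNeeded() show where that choice is made: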

if (server.maxmemory_policy & (MAXMEMORY_FLAG_LRU|MAXMEMORY_FLAG_LFU) ||
    server.maxmemory_policy == MAXMEMORY_VOLATILE_TTL)
{
    /* Choose the dict space or the expires space according to the policy */
    dict = (server.maxmemory_policy & MAXMEMORY_FLAG_ALLKEYS) ? db->dict : db->expires;
}

/* volatile-random and allkeys-random policy */
else if (server.maxmemory_policy == MAXMEMORY_ALLKEYS_RANDOM ||
         server.maxmemory_policy == MAXMEMORY_VOLATILE_RANDOM)
{
    /* Choose the dict space or the expires space according to the policy */
    dict = (server.maxmemory_policy == MAXMEMORY_ALLKEYS_RANDOM) ? db->dict : db->expires;
}
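
The bitwise tests in these fragments only work if each policy constant carries flag bits alongside a distinguishing value. The sketch below shows one encoding that makes the tests behave as described. The `DEMO_` constants and their numeric values are assumptions chosen for illustration, not a statement of what the Redis headers contain, and `demo_candidate_space` and `demo_uses_ranking` are hypothetical helpers.

```c
#include <assert.h>

/* Hypothetical flag bits (values are assumptions for illustration). */
#define DEMO_FLAG_LRU      (1 << 0)
#define DEMO_FLAG_LFU      (1 << 1)
#define DEMO_FLAG_ALLKEYS  (1 << 2)

/* Hypothetical policy values: a unique number in the high bits,
 * combined with the flags that describe the policy. */
#define DEMO_VOLATILE_LRU     ((0 << 8) | DEMO_FLAG_LRU)
#define DEMO_VOLATILE_LFU     ((1 << 8) | DEMO_FLAG_LFU)
#define DEMO_VOLATILE_TTL     (2 << 8)
#define DEMO_VOLATILE_RANDOM  (3 << 8)
#define DEMO_ALLKEYS_LRU      ((4 << 8) | DEMO_FLAG_LRU | DEMO_FLAG_ALLKEYS)
#define DEMO_ALLKEYS_LFU      ((5 << 8) | DEMO_FLAG_LFU | DEMO_FLAG_ALLKEYS)
#define DEMO_ALLKEYS_RANDOM   ((6 << 8) | DEMO_FLAG_ALLKEYS)
#define DEMO_NO_EVICTION      (7 << 8)

typedef enum { DEMO_SPACE_NONE, DEMO_SPACE_EXPIRES, DEMO_SPACE_DICT } demo_space;

/* Which key space does a policy draw its candidates from? */
demo_space demo_candidate_space(int policy) {
    if (policy == DEMO_NO_EVICTION) return DEMO_SPACE_NONE;
    return (policy & DEMO_FLAG_ALLKEYS) ? DEMO_SPACE_DICT : DEMO_SPACE_EXPIRES;
}

/* Does the policy rank candidates (LRU, LFU, TTL) instead of picking at random? */
int demo_uses_ranking(int policy) {
    return (policy & (DEMO_FLAG_LRU | DEMO_FLAG_LFU)) != 0 ||
           policy == DEMO_VOLATILE_TTL;
}
```

With an encoding of this shape, a single mask test answers "all keys or only keys with an expiry?" for every policy, which is why the fragments above can choose between db->dict and db->expires with one expression.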

3. No eviction (NO_EVICTION)

noeviction: evict nothing and return an error on write operations (Don't evict anything, just return an error on write operations.). In the version examined here (redis_version:3.2.100), noeviction is the default policy, which makes OOM errors likely once memory fills up.

if (server.maxmemory_policy == MAXMEMORY_NO_EVICTION)
    goto cant_free; /* We need to free memory, but policy forbids. */