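With the May Day holiday giving me some free time at home, I decided to write up a problem I hit at work last week that struck me as rather odd. It took me quite a while to solve at the time, so I hope this write-up helps someone.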

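Here's the situation. Our project uses HDFS to store data. Local debugging was basically done, and I was getting ready to deploy to the test server for testing. During that process, the HDFS operations suddenly stopped working. So I pulled the HDFS code out into a standalone Maven project to test it. Surely a problem like this couldn't stump a "technical expert" like me.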

The HDFS operations themselves are simple. I wrapped them in a utility class:

```java
import java.io.Closeable;
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.URI;
import java.util.Arrays;
import java.util.List;
import java.util.Objects;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

public class HdfsUtils implements Closeable {

    private final FileSystem fs;
    private final Configuration conf;

    public static HdfsUtilsBuilder newBuilder() {
        return new HdfsUtilsBuilder();
    }

    public static class HdfsUtilsBuilder {

        public HdfsUtils build() throws Exception {
            String hadoopConfDir = System.getenv("HADOOP_CONF_DIR");
            if (null == hadoopConfDir) {
                // TODO: adjust to your environment
                hadoopConfDir = "/Users/apple/Documents/hadoop/conf";
                // hadoopConfDir = "/etc/hadoop/conf";
                // hadoopConfDir = "/data/bigdata/conf";
            }
            if (!hadoopConfDir.endsWith("/")) {
                hadoopConfDir = hadoopConfDir + "/";
            }

            Configuration conf = new Configuration();
            conf.addResource(new Path(hadoopConfDir + "core-site.xml"));
            conf.addResource(new Path(hadoopConfDir + "hdfs-site.xml"));

            // Print the default file system URI (fs.defaultFS) for debugging
            URI uri = FileSystem.getDefaultUri(conf);
            System.out.println(uri.getScheme());
            System.out.println(uri.getHost());
            System.out.println(uri.getPort());

            // TODO: adjust to your environment ("hdfs" is the user to connect as)
            FileSystem fs = FileSystem.newInstance(FileSystem.getDefaultUri(conf), conf, "hdfs");
            return new HdfsUtils(fs, conf);
        }
    }

    public HdfsUtils(FileSystem fs, Configuration conf) {
        this.fs = fs;
        this.conf = conf;
    }

    // Merge all non-hidden files directly under sourceDir into targetFile
    public void combineFiles(String sourceDir, String targetFile) throws IOException {
        List<FileStatus> stats = listFileStatus(sourceDir);
        // try-with-resources closes the streams even if a read or write fails
        try (FSDataOutputStream out = create(new Path(targetFile))) {
            byte[] buffer = new byte[1024 * 1024];
            for (FileStatus stat : stats) {
                if (stat.getPath().getName().startsWith(".")) {
                    continue; // skip hidden files
                }
                try (FSDataInputStream in = openFile(stat.getPath())) {
                    int readLength;
                    while ((readLength = in.read(buffer)) > 0) {
                        out.write(buffer, 0, readLength);
                    }
                }
            }
        }
    }

    // Open a file
    public FSDataInputStream openFile(String path) throws IOException {
        return openFile(new Path(path));
    }

    public FSDataInputStream openFile(Path path) throws IOException {
        Objects.requireNonNull(fs);
        if (!fs.isFile(path)) {
            throw new IOException("not a file: " + path);
        }
        return fs.open(path);
    }

    // Create a file (overwrites an existing one)
    public FSDataOutputStream create(Path path) throws IOException {
        return fs.create(path);
    }

    // List everything directly under a directory
    public List<FileStatus> listFileStatus(String basePath) throws IOException {
        return listFileStatus(new Path(basePath));
    }

    public List<FileStatus> listFileStatus(Path basePath) throws IOException {
        Objects.requireNonNull(fs);
        if (fs.isFile(basePath)) {
            throw new IOException("not a directory: " + basePath);
        }
        return Arrays.asList(fs.listStatus(basePath));
    }

    // Total size of the non-hidden files directly under a directory
    public Long getLength(String srcDirPath) throws IOException {
        Objects.requireNonNull(fs);
        long len = 0L;
        for (FileStatus stat : fs.listStatus(new Path(srcDirPath))) {
            if (!stat.getPath().getName().startsWith(".")) {
                len += stat.getLen();
            }
        }
        return len;
    }

    // Download a file to the local file system
    public void downloadFile(String src, String dst) throws IOException {
        fs.copyToLocalFile(new Path(src), new Path(dst));
    }

    // Create a directory (and any missing parents)
    public void mkdirs(String path) throws IOException {
        Objects.requireNonNull(fs);
        if (!fs.mkdirs(new Path(path))) {
            throw new IOException("mkdir error: " + path);
        }
    }

    // Recursively delete a path; refuses to delete "" or the root "/"
    public void delete(String path) throws IOException {
        Objects.requireNonNull(path);
        // HDFS paths always use "/", so compare with Path.SEPARATOR, not File.separator
        if (path.isEmpty() || Path.SEPARATOR.equals(path)) {
            throw new IOException("error path: " + path);
        }
        Path p = new Path(path);
        if (fs.exists(p)) {
            fs.delete(p, true);
        }
    }

    // Move a file or directory
    public void move(String srcPath, String destPath) throws IOException {
        Objects.requireNonNull(fs);
        if (!fs.exists(new Path(srcPath))) {
            throw new IOException("src not exist: " + srcPath);
        }
        // Nothing to do when src and dest are the same path
        if (combinePath(srcPath, "").equals(combinePath(destPath, ""))) {
            return;
        }
        Path src = new Path(srcPath);
        Path dest = new Path(destPath);
        if (!fs.isFile(src) && fs.isFile(dest)) {
            // src is a directory, dest is a file => error
            throw new IOException("cannot move a directory to a file: " + srcPath + ", " + destPath);
        } else if (fs.isFile(src)) {
            // src is a file: rename() moves it to dest, or into dest if dest is a directory.
            // Note: HDFS rename() returns false rather than overwriting an existing file.
            fs.rename(src, dest);
        } else {
            // src and dest are directories => mv src/* dest/
            // moved child by child to avoid the case where dest is a subdirectory of src
            for (FileStatus f : fs.listStatus(src)) {
                fs.rename(new Path(combinePath(srcPath, f.getPath().getName())),
                        new Path(combinePath(destPath, f.getPath().getName())));
            }
        }
    }

    // Join HDFS path segments with "/" (HDFS does not use the OS-specific File.separator)
    private String combinePath(String parent, String current) {
        return parent + (parent.endsWith(Path.SEPARATOR) ? "" : Path.SEPARATOR) + current;
    }

    // Write an input stream (e.g. a CSV file) to HDFS; copyBytes closes both streams
    public void uploadFiles(InputStream in, String path, String fileName) throws IOException {
        Objects.requireNonNull(fs);
        FSDataOutputStream out = fs.create(new Path(getFileName(path, fileName)));
        IOUtils.copyBytes(in, out, 1024, true);
    }

    // Upload a local file to HDFS
    public void uploadLocalFiles(String file, String path, String fileName) throws IOException {
        Objects.requireNonNull(fs);
        FileInputStream in = new FileInputStream(file);
        FSDataOutputStream out = fs.create(new Path(getFileName(path, fileName)));
        IOUtils.copyBytes(in, out, 1024, true);
    }

    private String getFileName(String path, String fileName) {
        return path.endsWith("/") ? path + fileName : path + "/" + fileName;
    }

    @Override
    public void close() throws IOException {
        if (fs != null) {
            fs.close();
        }
    }
}
```
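When code works locally but not on a test server, one quick first check is whether the builder even finds its config files there. Below is a stdlib-only sketch of the same resolution logic the builder uses (`HADOOP_CONF_DIR`, else a fallback, plus a trailing slash), extended to report whether the two XML files exist. The class name `HadoopConfDirCheck` and the choice of `/etc/hadoop/conf` as fallback are assumptions for illustration:

```java
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

// Hypothetical helper: the config-directory logic of HdfsUtilsBuilder.build(),
// pulled out so it can be checked on a server without Hadoop on the classpath.
public final class HadoopConfDirCheck {

    // Uses HADOOP_CONF_DIR from the given environment, else the fallback,
    // and makes sure the result ends with "/".
    static String resolveConfDir(Map<String, String> env, String fallback) {
        String dir = env.get("HADOOP_CONF_DIR");
        if (dir == null) {
            dir = fallback;
        }
        return dir.endsWith("/") ? dir : dir + "/";
    }

    // The two files the builder adds as Configuration resources.
    static List<String> configFiles(String confDir) {
        return Arrays.asList(confDir + "core-site.xml", confDir + "hdfs-site.xml");
    }

    public static void main(String[] args) {
        String dir = resolveConfDir(System.getenv(), "/etc/hadoop/conf");
        for (String f : configFiles(dir)) {
            // Reports whether each expected file exists on this machine.
            System.out.println(f + " exists=" + Files.exists(Paths.get(f)));
        }
    }
}
```

In this case the config turned out to be fine (the scheme was correctly read as `hdfs`), which is what pointed the investigation toward packaging instead.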

The HDFS operation in question merges the small files in a directory into one file:

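```java
String sourceDir = args[0];
String contractId = args[1];
// getFile and mergeResult are project helpers (not shown); utils is an HdfsUtils instance
String tmpMergeResult = getFile(contractId);
mergeResult(utils, sourceDir, tmpMergeResult);
```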
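The copying itself happens in `combineFiles`. Here is a stdlib-only sketch of that loop, with in-memory streams standing in for the HDFS streams (an assumption so it runs without a cluster). The class `MergeSketch` is hypothetical. It reuses one buffer, skips names starting with "." as the original does, and closes each input even if a read fails:

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical stand-in for the merge loop in HdfsUtils.combineFiles.
public final class MergeSketch {

    // inputs: file name -> stream, merged in the map's iteration order.
    static void merge(Map<String, InputStream> inputs, OutputStream out) throws IOException {
        byte[] buffer = new byte[1024 * 1024]; // one buffer shared by all inputs
        for (Map.Entry<String, InputStream> e : inputs.entrySet()) {
            if (e.getKey().startsWith(".")) {
                continue; // hidden file: skipped (the real code never opens it)
            }
            try (InputStream in = e.getValue()) {
                int n;
                while ((n = in.read(buffer)) > 0) {
                    out.write(buffer, 0, n);
                }
            }
        }
        out.flush();
    }

    public static void main(String[] args) throws IOException {
        Map<String, InputStream> inputs = new LinkedHashMap<>();
        inputs.put("part-0", new ByteArrayInputStream("a,1\n".getBytes(StandardCharsets.UTF_8)));
        inputs.put(".part-1.crc", new ByteArrayInputStream("junk".getBytes(StandardCharsets.UTF_8)));
        inputs.put("part-2", new ByteArrayInputStream("b,2\n".getBytes(StandardCharsets.UTF_8)));
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        merge(inputs, out);
        System.out.print(out.toString("UTF-8")); // prints "a,1" and "b,2" on separate lines
    }
}
```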

Everything worked in local tests, but when I packaged the project with Maven and ran the jar, it failed with `No FileSystem for scheme: hdfs`.

```java
FileSystem fs = FileSystem.newInstance(FileSystem.getDefaultUri(conf), conf, "hdfs");
```

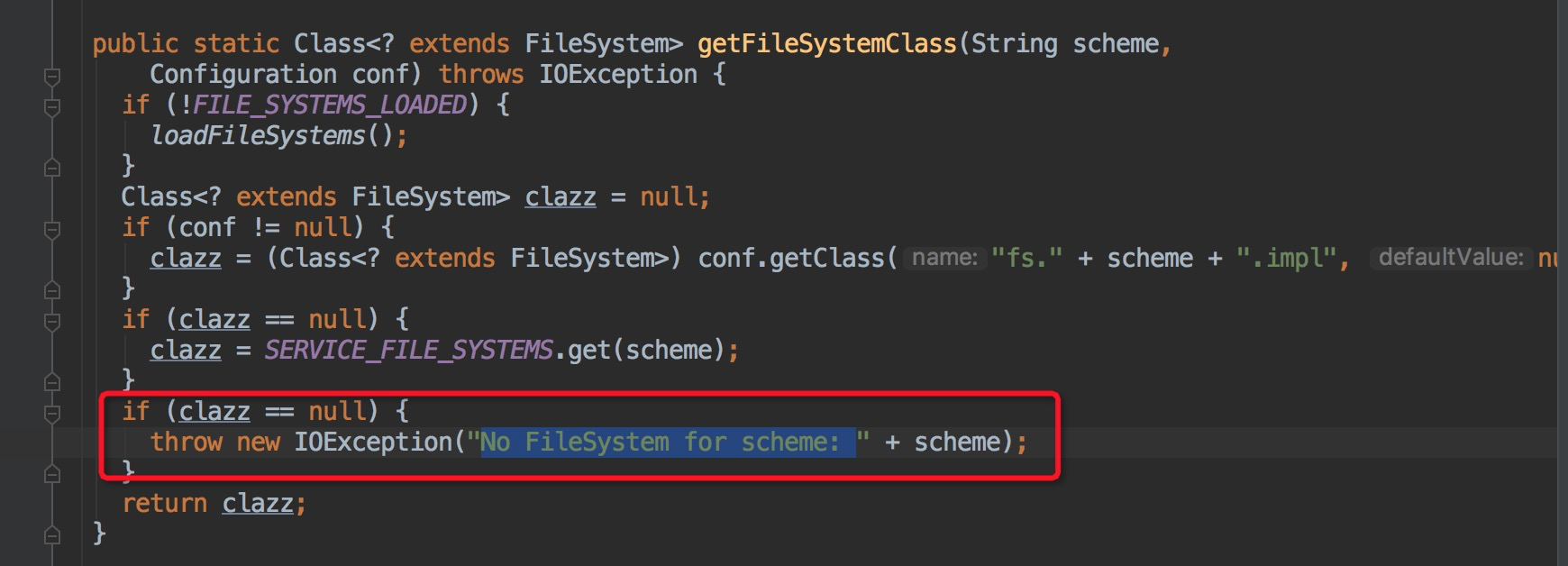

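This is the line that fails. Stepping through in the debugger, the exception is thrown where `FileSystem` looks up the implementation class for the scheme (`FileSystem.getFileSystemClass`).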

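At runtime, the scheme taken from the loaded Hadoop configuration was already `hdfs`, so the only explanation was that no file system implementation had been registered for `hdfs`.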
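Hadoop's `FileSystem` discovers implementations through `java.util.ServiceLoader`, which reads every `META-INF/services/org.apache.hadoop.fs.FileSystem` file on the classpath, and uses them to populate `SERVICE_FILE_SYSTEMS`. A quick way to see what actually ended up in a packaged jar is to list those files directly. This stdlib-only sketch (the class `FsServiceFiles` is hypothetical) prints each copy it finds and the provider classes it declares:

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;

// Hypothetical diagnostic: lists the FileSystem service files visible on the classpath.
public final class FsServiceFiles {

    static final String SERVICE = "META-INF/services/org.apache.hadoop.fs.FileSystem";

    // Parses a provider-configuration file: one class name per line,
    // '#' starts a comment, blank lines are ignored. The caller closes the stream.
    static List<String> parse(InputStream in) throws IOException {
        List<String> names = new ArrayList<>();
        BufferedReader r = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8));
        String line;
        while ((line = r.readLine()) != null) {
            int hash = line.indexOf('#');
            if (hash >= 0) {
                line = line.substring(0, hash);
            }
            line = line.trim();
            if (!line.isEmpty()) {
                names.add(line);
            }
        }
        return names;
    }

    public static void main(String[] args) throws IOException {
        Enumeration<URL> urls = FsServiceFiles.class.getClassLoader().getResources(SERVICE);
        if (!urls.hasMoreElements()) {
            System.out.println("no " + SERVICE + " on the classpath");
        }
        while (urls.hasMoreElements()) {
            URL url = urls.nextElement();
            System.out.println(url);
            try (InputStream in = url.openStream()) {
                for (String name : parse(in)) {
                    System.out.println("  " + name);
                }
            }
        }
    }
}
```

Run it with the packaged jar on the classpath (for example `java -cp app-jar-with-dependencies.jar:. FsServiceFiles`, jar name assumed). If no line mentions `org.apache.hadoop.hdfs.DistributedFileSystem`, ServiceLoader has nothing to register for `hdfs`.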

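Inspecting `SERVICE_FILE_SYSTEMS` in the debugger showed only 4 file systems, the same situation another developer had reported. So I suspected that something had gone wrong when Maven packaged the jar. My pom dependencies looked like this: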

```xml
<dependencies>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
</dependencies>
```

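This configuration causes the problem described above. The `FileSystem` class itself lives only in hadoop-common, but hadoop-common and the HDFS jar each ship a service registration file, `META-INF/services/org.apache.hadoop.fs.FileSystem`, listing the file systems they provide. When all dependencies are merged into a single jar, only one copy of that file is kept, typically the one from the dependency that comes first, so hadoop-common's list overwrites the HDFS one and `hdfs` is never registered. The fix is to adjust the dependencies in the pom: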

```xml
<dependencies>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
</dependencies>
```

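Declaring just these two dependencies is enough, and that solved the problem. hadoop-common is still pulled in transitively through hadoop-client, so no classes are lost.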
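To make the overwrite concrete, here is a small simulation (not Maven's actual code) of two ways a packager can handle the same service file appearing in several jars. One keeps only the first copy. The other merges all copies into one, which is the approach taken by the maven-shade-plugin's `ServicesResourceTransformer`. The class `ServiceFileMerge` is hypothetical, and the provider lists are abbreviated and illustrative:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical simulation of merging META-INF/services/org.apache.hadoop.fs.FileSystem.
public final class ServiceFileMerge {

    // Keeps only the copy from the first jar, as when later duplicates are skipped.
    static List<String> firstWins(Map<String, List<String>> jarsInOrder) {
        if (jarsInOrder.isEmpty()) {
            return new ArrayList<>();
        }
        return jarsInOrder.values().iterator().next();
    }

    // Merges every copy, keeping each provider once, in jar order.
    static List<String> concatenate(Map<String, List<String>> jarsInOrder) {
        List<String> all = new ArrayList<>();
        for (List<String> providers : jarsInOrder.values()) {
            for (String p : providers) {
                if (!all.contains(p)) {
                    all.add(p);
                }
            }
        }
        return all;
    }

    public static void main(String[] args) {
        // Abbreviated, illustrative provider lists, in dependency order.
        Map<String, List<String>> jars = new LinkedHashMap<>();
        jars.put("hadoop-common", Arrays.asList("org.apache.hadoop.fs.LocalFileSystem"));
        jars.put("hadoop-hdfs", Arrays.asList("org.apache.hadoop.hdfs.DistributedFileSystem"));

        System.out.println(firstWins(jars));
        // [org.apache.hadoop.fs.LocalFileSystem]
        System.out.println(concatenate(jars));
        // [org.apache.hadoop.fs.LocalFileSystem, org.apache.hadoop.hdfs.DistributedFileSystem]
    }
}
```

With first-wins merging, which file systems get registered depends on dependency order, which is why moving hadoop-hdfs ahead helps. Merging all copies removes that dependence on order.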