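Problem Description

While running the WordCount program from Eclipse through the plugin (rather than manually packaging it and uploading it to the server), the following error occurred: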

DEBUG - LocalFetcher 1 going to fetch: attempt_local938878567_0001_m_000000_0

WARN - job_local938878567_0001

java.lang.Exception: org.apache.hadoop.mapreduce.task.reduce.Shuffle$ShuffleError: error in shuffle in localfetcher#1

at org.apache.hadoop.mapred.LocalJobRunner$Job.runTasks(LocalJobRunner.java:462)

at org.apache.hadoop.mapred.LocalJobRunner$Job.run(LocalJobRunner.java:529)

Caused by: org.apache.hadoop.mapreduce.task.reduce.Shuffle$ShuffleError: error in shuffle in localfetcher#1

at org.apache.hadoop.mapreduce.task.reduce.Shuffle.run(Shuffle.java:134)

at org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:376)

at org.apache.hadoop.mapred.LocalJobRunner$Job$ReduceTaskRunnable.run(LocalJobRunner.java:319)

at java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.util.concurrent.FutureTask.run(Unknown Source)

at java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.lang.Thread.run(Unknown Source)

Caused by: java.io.FileNotFoundException: G:/tmp/hadoop-Ferdinand%20Wang/mapred/local/localRunner/Ferdinand%20Wang/jobcache/job_local938878567_0001/attempt_local938878567_0001_m_000000_0/output/file.out.index

at org.apache.hadoop.fs.RawLocalFileSystem.open(RawLocalFileSystem.java:198)

at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:766)

at org.apache.hadoop.io.SecureIOUtils.openFSDataInputStream(SecureIOUtils.java:156)

at org.apache.hadoop.mapred.SpillRecord.<init>(SpillRecord.java:70)

at org.apache.hadoop.mapred.SpillRecord.<init>(SpillRecord.java:62)

at org.apache.hadoop.mapred.SpillRecord.<init>(SpillRecord.java:57)

at org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.copyMapOutput(LocalFetcher.java:124)

at org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.doCopy(LocalFetcher.java:102)

at org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.run(LocalFetcher.java:85)

DEBUG - LocalFetcher 1 going to fetch: attempt_local938878567_0001_m_000000_0

DEBUG - LocalFetcher 1 going to fetch: attempt_local938878567_0001_m_000000_0

Cause

The real problem lies in the directory shown in the error message. Hadoop assembles this path internally in code:

G:/tmp/hadoop-Ferdinand%20Wang/mapred/local/localRunner/Ferdinand%20Wang/jobcache/job_local938878567_0001/attempt_local938878567_0001_m_000000_0/output/file.out.index

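Note the %20 in the path, which is the percent-encoded form of a space. The root cause is that the computer's user name (the USERNAME environment variable) contains a space.

So the user name has to be changed to solve the problem. Alternatively, you can create a new user whose name contains no spaces.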
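To see where a %20 like this can come from, here is a minimal sketch using the standard java.net.URI class. It is only an illustration under assumptions: the class name SpaceInPathDemo, its helper methods, and the sample directory are hypothetical, and this is not the Hadoop code that actually builds the path.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical demo class, not part of Hadoop.
class SpaceInPathDemo {

    // Builds a file: URI from a directory and returns its raw (still encoded) path.
    static String rawPathFor(String dir) throws URISyntaxException {
        URI uri = new URI("file", null, dir, null);
        return uri.getRawPath();
    }

    // Same URI, but returns the decoded path with the space restored.
    static String decodedPathFor(String dir) throws URISyntaxException {
        URI uri = new URI("file", null, dir, null);
        return uri.getPath();
    }

    public static void main(String[] args) throws URISyntaxException {
        // Sample directory containing a user name with a space (assumed for illustration).
        String dir = "/tmp/hadoop-Ferdinand Wang/mapred/local";

        // The raw path carries %20 instead of the space.
        System.out.println(rawPathFor(dir));     // /tmp/hadoop-Ferdinand%20Wang/mapred/local

        // The decoded path matches the directory that exists on disk.
        System.out.println(decodedPathFor(dir)); // /tmp/hadoop-Ferdinand Wang/mapred/local
    }
}
```

If the encoded form is ever used as a literal file name, the file system is asked for a directory named "hadoop-Ferdinand%20Wang", which does not exist, and a FileNotFoundException like the one in the log is the natural result.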

Solution

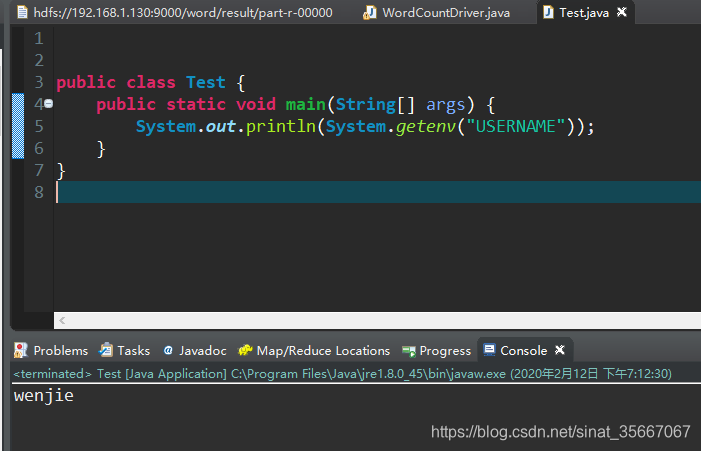

Changing USERNAME is not that easy, but there is a simple way to test whether a change has taken effect:

System.getenv("USERNAME")

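// Java provides this method for reading environment variables. If it returns the new, space-free name, the problem is solved.

I went through many blog posts and found that many people only changed the user name in Control Panel, which does not solve the problem.

The fix is as follows: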
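The check above can be turned into a small runnable program. This is a sketch, not a definitive tool: the class name UserNameCheck and the helper hasWhitespace are hypothetical. It also prints the user.name system property, on the assumption that it is relevant here because Hadoop's default hadoop.tmp.dir is /tmp/hadoop-${user.name}.

```java
// Hypothetical helper class for checking the user name, not part of Hadoop.
class UserNameCheck {

    // Returns true if the name is non-null and contains any whitespace character.
    static boolean hasWhitespace(String name) {
        return name != null && name.chars().anyMatch(Character::isWhitespace);
    }

    public static void main(String[] args) {
        // USERNAME is set on Windows; it may be null on other systems.
        String envName = System.getenv("USERNAME");
        // user.name is the JVM's view of the current user.
        String propName = System.getProperty("user.name");

        System.out.println("USERNAME  = " + envName);
        System.out.println("user.name = " + propName);

        if (hasWhitespace(envName) || hasWhitespace(propName)) {
            System.out.println("The user name still contains whitespace.");
        } else {
            System.out.println("No whitespace found in the user name.");
        }
    }
}
```

Run it once before and once after renaming the account (and restarting); the second run should report no whitespace.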

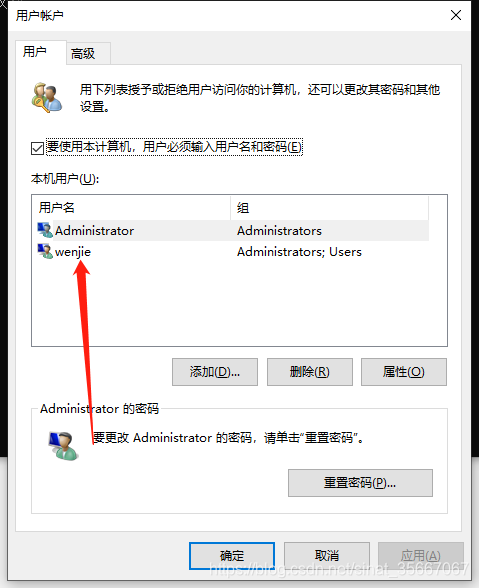

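1. Click the [Start] menu in the lower-left corner and choose [Run], or press [Win] + [R] on the keyboard to open the Run dialog;

2. Type [netplwiz], then click OK;

3. In the User Accounts window that opens, double-click [Administrator];

4. Enter the new name you want (without spaces);

5. Click the [OK] button in the lower-right corner; when a warning pops up, click [Yes].

Change the field that the arrow points to in the screenshot, then restart the computer, and you are done!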

Thinking is more important than coding. - Leslie Lamport