Using a RecordReader to merge the files in a folder into a single file

Merge multiple small files into a single SequenceFile
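To make the goal concrete, here is a minimal plain-Java sketch of the idea: many small files become (file path, file bytes) records inside one container. It is an illustration only. `SmallFilePacker`, `pack`, and `unpack` are hypothetical names, and the length-prefixed layout is an assumption made for this sketch, not the real SequenceFile binary format that Hadoop writes.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: packs small files into one byte container and back.
// The layout (count, then path / length / bytes per file) is NOT the SequenceFile format.
final class SmallFilePacker {

    // Writes every (path, content) pair as one record of the container.
    static byte[] pack(Map<String, byte[]> files) {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeInt(files.size());
            for (Map.Entry<String, byte[]> file : files.entrySet()) {
                out.writeUTF(file.getKey());           // key: path + file name
                out.writeInt(file.getValue().length);  // length prefix
                out.write(file.getValue());            // value: raw file bytes
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    // Reads the records back in the order they were written.
    static Map<String, byte[]> unpack(byte[] container) {
        Map<String, byte[]> files = new LinkedHashMap<>();
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(container))) {
            int count = in.readInt();
            for (int i = 0; i < count; i++) {
                String path = in.readUTF();
                byte[] content = new byte[in.readInt()];
                in.readFully(content);
                files.put(path, content);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return files;
    }
}
```

The MapReduce job in the rest of this post follows the same key/value shape: the key is the file path and the value is the whole file content.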

1.FileCombineRecordReader

package com.cevent.hadoop.mapreduce.inputformat;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.BytesWritable;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.InputSplit;
import org.apache.hadoop.mapreduce.RecordReader;
import org.apache.hadoop.mapreduce.TaskAttemptContext;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;

/**
 * Concrete RecordReader implementation: reads each file as a single record.
 * @author cevent
 * @date April 14, 2020
 */
public class WholeFileCombineRecordReader extends RecordReader<NullWritable, BytesWritable> {

    private BytesWritable bytesWritableValues = new BytesWritable();
    private FileSplit fileSplit;
    private Configuration configuration;
    // Set to true once nextKeyValue() has read the file; false (not yet read) by default
    private boolean isProcess = false;

    // 1. Initialization: stores the split and the configuration; must be called before reading
    @Override
    public void initialize(InputSplit split, TaskAttemptContext context)
            throws IOException, InterruptedException {
        // 1.1 Get the split: the InputSplit is a FileSplit here, so a cast is required
        this.fileSplit = (FileSplit) split;
        // 1.2 Get the configuration
        configuration = context.getConfiguration();
    }

    // 2. Read the data
    @Override
    public boolean nextKeyValue() throws IOException, InterruptedException {
        // Read only if the file has not been read yet
        if (!isProcess) {
            FSDataInputStream dataInputStream = null;
            try {
                // 2.1 Get the path of the split
                Path path = fileSplit.getPath();
                // 2.2 Get the file system that owns the path; the file is processed as a whole
                FileSystem fileSystem = path.getFileSystem(configuration);
                // 2.3 Open an input stream on the file
                dataInputStream = fileSystem.open(path);
                // 2.4 Allocate a buffer of the file's length, taken from the split
                //     (the int cast limits a file to about 2 GB), then read the whole stream:
                //     readFully(InputStream in, byte[] buffer, int offset, int length)
                byte[] bufferArr = new byte[(int) fileSplit.getLength()];
                IOUtils.readFully(dataInputStream, bufferArr, 0, bufferArr.length);
                // 2.5 Set the output: store the bytes that were read in the value
                bytesWritableValues.set(bufferArr, 0, bufferArr.length);
            } finally {
                // Close the stream
                IOUtils.closeStream(dataInputStream);
            }
            // The file has been read: return true for this single record
            isProcess = true;
            return true;
        }
        // No more records
        return false;
    }

    // 3. Current key: a NullWritable placeholder (the path and file name are added in the mapper)
    @Override
    public NullWritable getCurrentKey() throws IOException, InterruptedException {
        return NullWritable.get();
    }

    // 4. Current value: the file content, held in a BytesWritable
    @Override
    public BytesWritable getCurrentValue() throws IOException, InterruptedException {
        return bytesWritableValues;
    }

    // 5. Progress: 0 before the file has been read, 1 afterwards
    @Override
    public float getProgress() throws IOException, InterruptedException {
        return isProcess ? 1.0f : 0.0f;
    }

    // 6. Release stream resources: nothing to do, the stream is already closed in nextKeyValue()
    @Override
    public void close() throws IOException {
    }
}
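The contract this reader follows can be shown without Hadoop: the first call to nextKeyValue() returns true with the whole file as the value, and every later call returns false. The sketch below is a plain-Java stand-in, not the Hadoop API. `OneShotReader` and its method names are hypothetical, and standard `java.io` classes replace FSDataInputStream and IOUtils. It also rejects lengths that do not fit in a byte array, a case the `(int)` cast on the split length would silently truncate.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;

// Hypothetical stand-in for a whole-file RecordReader: yields exactly one record.
final class OneShotReader {
    private final InputStream source;
    private final long length;
    private byte[] value = new byte[0];
    private boolean processed = false;

    OneShotReader(InputStream source, long length) {
        // Guard the later (int) cast: a Java array cannot hold more than ~2^31 bytes.
        if (length < 0 || length > Integer.MAX_VALUE - 8) {
            throw new IllegalArgumentException("length does not fit in a byte array: " + length);
        }
        this.source = source;
        this.length = length;
    }

    // Returns true once, after loading the whole stream; false on every later call.
    boolean next() {
        if (processed) {
            return false;
        }
        byte[] buffer = new byte[(int) length];
        try (DataInputStream in = new DataInputStream(source)) {
            in.readFully(buffer); // fails with EOFException if the stream is too short
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        value = buffer;
        processed = true;
        return true;
    }

    byte[] currentValue() {
        return value;
    }

    // 0 before the single record has been read, 1 afterwards.
    float progress() {
        return processed ? 1.0f : 0.0f;
    }
}
```

A caller loops with `while (reader.next())`, exactly as the framework does with nextKeyValue(), and the loop body runs once per file.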

2.FileCombineInputFormat

package com.cevent.hadoop.mapreduce.inputformat;

import java.io.IOException;

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.BytesWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.InputSplit;
import org.apache.hadoop.mapreduce.JobContext;
import org.apache.hadoop.mapreduce.RecordReader;
import org.apache.hadoop.mapreduce.TaskAttemptContext;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

/**
 * FileInputFormat: serves only to hand out the RecordReader, which does the actual reading.
 * key   = path + file name (not set at this stage, so NullWritable is used)
 * value = file content (byte stream, BytesWritable)
 * @author cevent
 * @date April 14, 2020
 */
public class WholeFileCombineInputFormat extends FileInputFormat<NullWritable, BytesWritable> {

    // Whether a file may be split: false, so each file is read as a whole
    @Override
    protected boolean isSplitable(JobContext context, Path filename) {
        return false;
    }

    // Must return a RecordReader: a custom class that extends RecordReader
    @Override
    public RecordReader<NullWritable, BytesWritable> createRecordReader(
            InputSplit split, TaskAttemptContext context)
            throws IOException, InterruptedException {
        // 1. Create the reader and call its initialization method
        WholeFileCombineRecordReader combineRecordReader = new WholeFileCombineRecordReader();
        combineRecordReader.initialize(split, context);
        return combineRecordReader;
    }
}
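Returning false from isSplitable is what guarantees one split, and therefore one record, per file. The sketch below shows the effect with a deliberately simplified splitting rule: the number of splits is the ceiling of file length over split size. That rule is an assumption for illustration; Hadoop's actual computation also depends on block and split size settings. `SplitCountSketch` and `splitCount` are hypothetical names.

```java
// Hypothetical helper: estimates how many splits a single file produces.
final class SplitCountSketch {

    // Simplified rule (an assumption, not Hadoop's exact algorithm):
    // a non-splittable file is always one split; otherwise ceil(fileLength / splitSize).
    static long splitCount(long fileLength, long splitSize, boolean splitable) {
        if (fileLength < 0 || splitSize <= 0) {
            throw new IllegalArgumentException("fileLength must be >= 0 and splitSize > 0");
        }
        if (!splitable) {
            return 1;
        }
        long count = (fileLength + splitSize - 1) / splitSize; // integer ceiling
        return Math.max(1, count);
    }
}
```

With a 128-unit split size, a 300-unit file would be cut into three pieces if splitting were allowed, so a reader that loads "the whole split" would see only a fragment. With splitting disabled it stays one piece.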

3.FileCombineMapper

package com.cevent.hadoop.mapreduce.inputformat;

import java.io.IOException;

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.BytesWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;

/**
 * Mapper<NullWritable, BytesWritable, KEYOUT, VALUEOUT>
 * KEYIN    = the RecordReader key   = NullWritable
 * VALUEIN  = the RecordReader value = BytesWritable
 * KEYOUT   = path of the merged file = Text
 * VALUEOUT = content of the merged file (bytes) = BytesWritable
 * @author cevent
 * @date April 14, 2020
 */
public class WholeFileCombineMapper extends Mapper<NullWritable, BytesWritable, Text, BytesWritable> {

    // setup() stores the path here; it is used as the output key
    Text keyText = new Text();

    // Get the path of the split
    @Override
    protected void setup(Context context)
            throws IOException, InterruptedException {
        // 1. Get the FileSplit
        FileSplit fileSplit = (FileSplit) context.getInputSplit();
        // 2. Get the path
        Path path = fileSplit.getPath();
        // 3. Store the path in the key
        keyText.set(path.toString());
    }

    @Override
    protected void map(NullWritable key, BytesWritable value, Context context)
            throws IOException, InterruptedException {
        // 1. Write the output: file path as key, file content as value
        context.write(keyText, value);
    }
}

4.FileCombineDriver

package com.cevent.hadoop.mapreduce.inputformat;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.BytesWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.SequenceFileOutputFormat;

/**
 * Driver: configures and submits the merge job.
 * @author cevent
 * @date April 14, 2020
 */
public class WholeFileCombineDriver {

    public static void main(String[] args) throws Exception {
        Configuration configuration = new Configuration();
        Job job = Job.getInstance(configuration);
        job.setJarByClass(WholeFileCombineDriver.class);

        // Register the custom InputFormat
        job.setInputFormatClass(WholeFileCombineInputFormat.class);
        // Set the output format; it must be SequenceFile
        job.setOutputFormatClass(SequenceFileOutputFormat.class);

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(BytesWritable.class);
        job.setMapperClass(WholeFileCombineMapper.class);

        // args[0] = input directory, args[1] = output directory
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        boolean result = job.waitForCompletion(true);
        System.exit(result ? 0 : 1);
    }
}

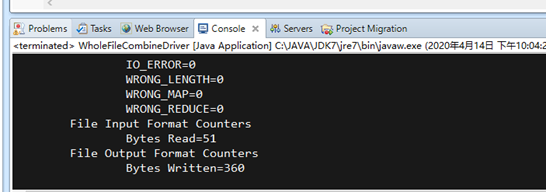

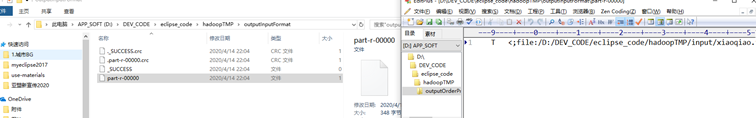

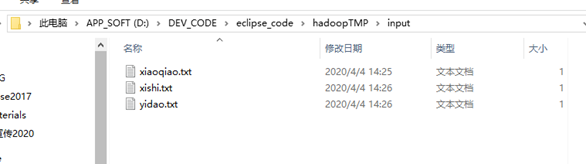

The files have been merged.