下载安装依赖包

yum -y install lzo-devel zlib-devel gcc gcc-c++ autoconf automake libtool openssl-devel fuse-devel cmake

使用root用户安装protobuf ,进入protobuf解压路径

./configure

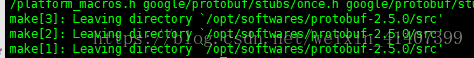

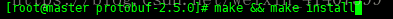

make && make install

使用root用户安装snappy1.1.1

下载地址

http://www.filewatcher.com/m/snappy-1.1.1.tar.gz.1777992-0.html

进入snappy1.1.1解压路径

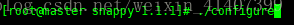

./configure

make && make install

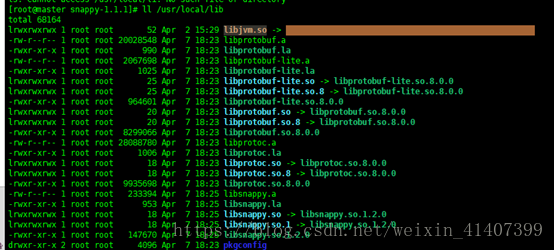

验证是否安装完成

ll /usr/local/lib

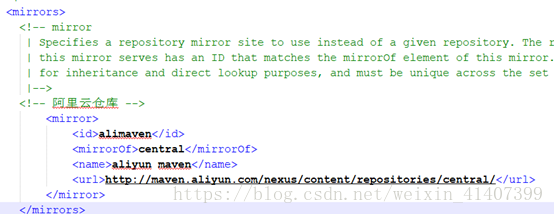

修改mavne 配置文件,使用阿里云服务器,加快编译速度

<mirrors>

<!-- mirror

| Specifies a repository mirror site to use instead of a given repository. The repository that

| this mirror serves has an ID that matches the mirrorOf element of this mirror. IDs are used

| for inheritance and direct lookup purposes, and must be unique across the set of mirrors.

|-->

<!-- 阿里云仓库 -->

<mirror>

<id>alimaven</id>

<mirrorOf>central</mirrorOf>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/repositories/central/</url>

</mirror>

</mirrors>

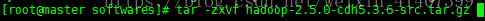

解压源码包,cdh版本hadoop源码包下载地址

http://archive-primary.cloudera.com/cdh5/cdh/5/

进入hadoop 源码所在文件夹

输入指令开始编译,大约1个小时时间

mvn clean package -DskipTests -Pdist,native -Dtar -Drequire.snappy -e -X

编译完成

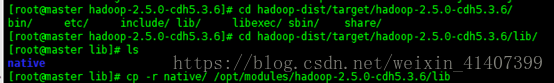

将hadoop-dist/target/hadoop-2.5.0-cdh5.3.6/lib/native复制到hadoop_home下lib/native 里

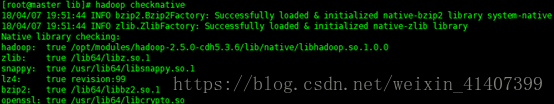

使用hadoop checknative 查看

hadoop checknative

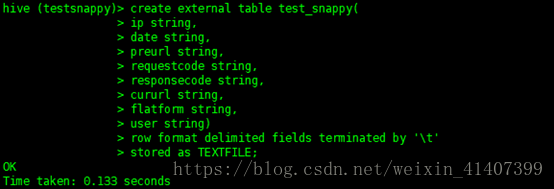

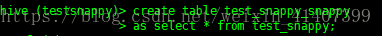

测试 ,创建表

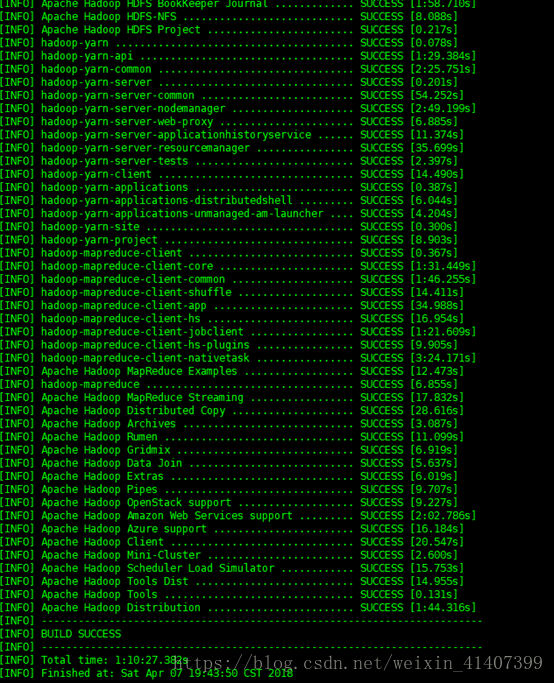

导入数据

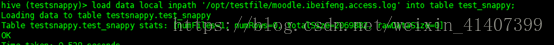

查看文件大小,2m

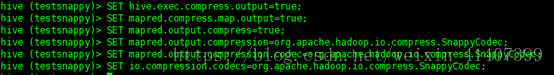

设置输出压缩格式为snappy

SET hive.exec.compress.output=true; SET mapred.compress.map.output=true; SET mapred.output.compress=true; SET mapred.output.compression=org.apache.hadoop.io.compress.SnappyCodec; SET mapred.output.compression.codec=org.apache.hadoop.io.compress.SnappyCodec; SET io.compression.codecs=org.apache.hadoop.io.compress.SnappyCodec;

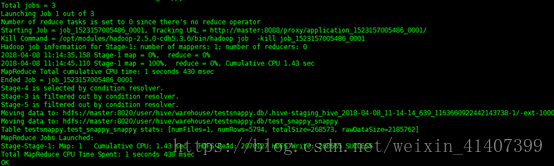

创建snappy格式表

导入数据成功

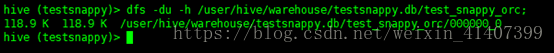

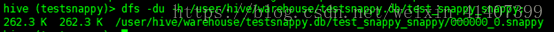

查看hdfs上文件,snappy格式文件已经生产

查看文件大小,262.3k

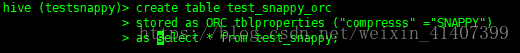

创建orcfile表, SNAPPY一定要大写

创建成功

查看文件大小,118.9k