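Background

Most of my colleagues' computers have no internet access, and many of them complained to me that chatting without stickers is no fun.

So here's the idea: download the stickers locally, quickly put together a simple SSM (Spring + Spring MVC + MyBatis) website with my own machine as the server, and the stickers are sorted.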

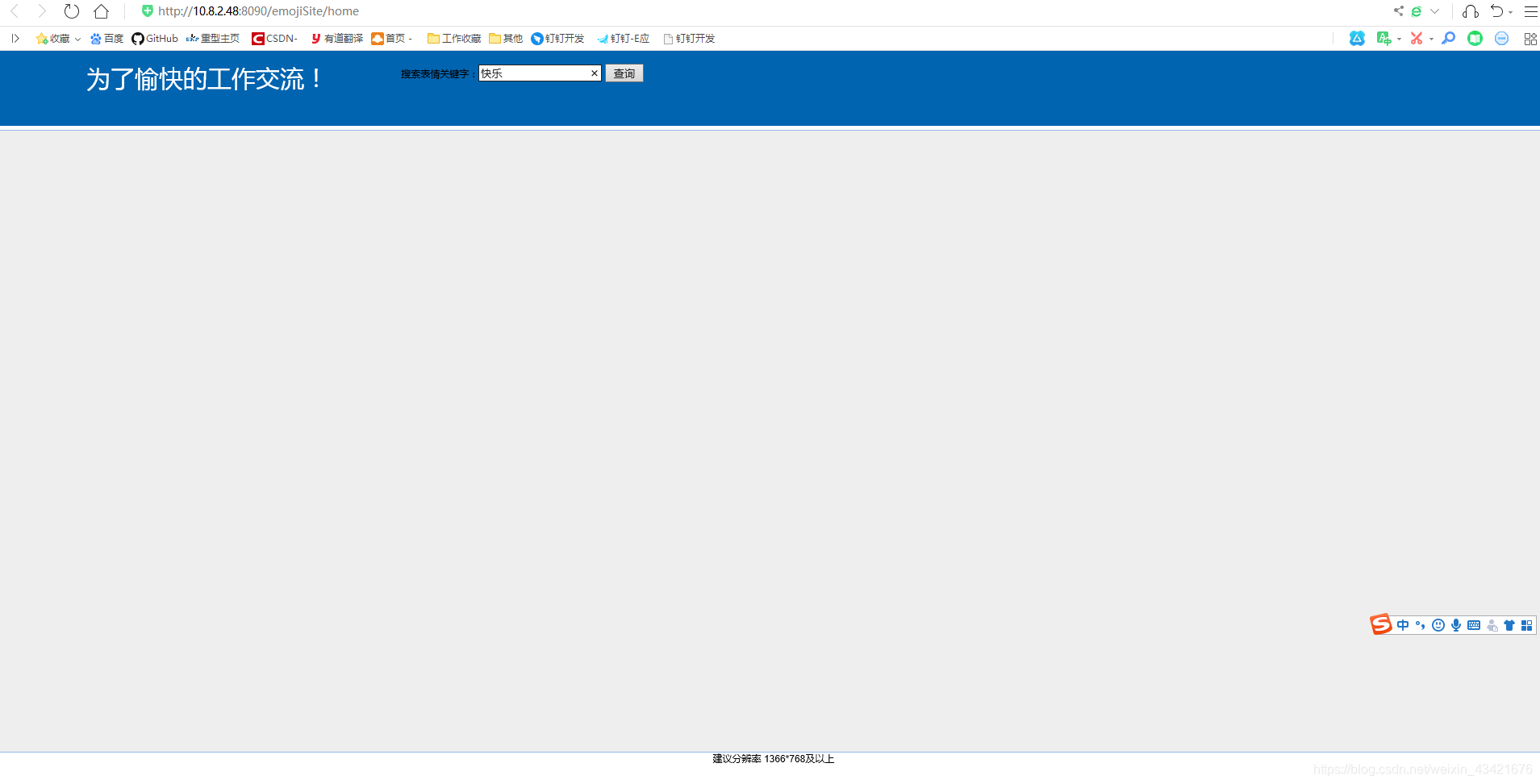

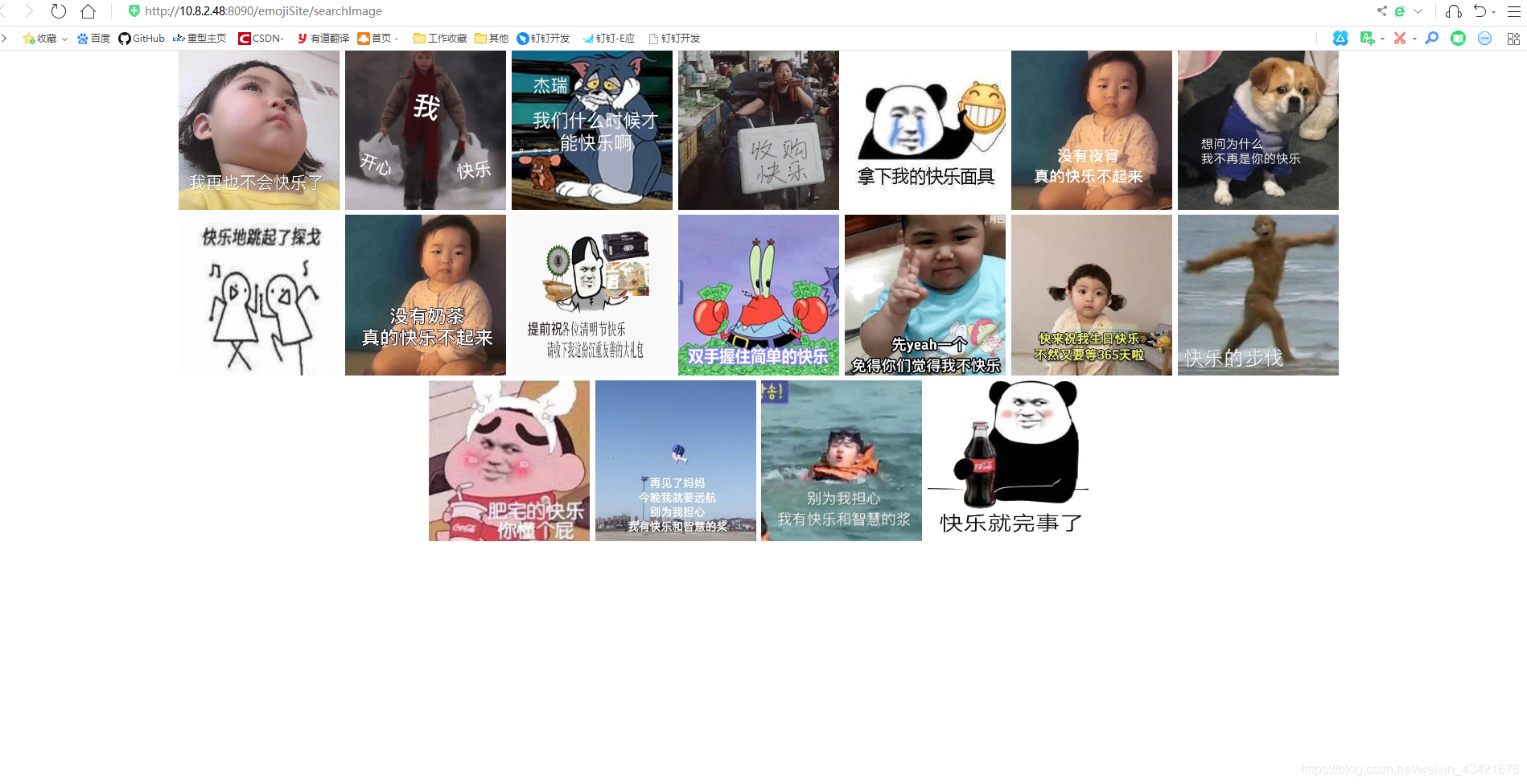

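Result

Crawling the stickers

Here I referred to (read: Ctrl+C'd) the blog post '网络爬虫-爬取十万张表情包' ("Web crawler: scraping 100,000 stickers") by the blogger '井蛙不可语于海'. The link is below (* ̄︶ ̄)

https://blog.csdn.net/qq_39802740/article/details/81416880

- Create a new Scrapy project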

- items.py

# Structure of the scraped data
import scrapy


class biaoqingbaoItem(scrapy.Item):
    url = scrapy.Field()    # image URL
    title = scrapy.Field()  # image title
    page = scrapy.Field()   # page number, intended as a folder name
    name = scrapy.Field()   # image file name taken from the URL

- biaoqingbao.py

# Core spider file: sets the start URL, parses each page, and drives the crawl
import scrapy

from biaoqingbao.items import biaoqingbaoItem


class BiaoqingbaoSpider(scrapy.Spider):
    name = 'biaoqingbao'
    # allowed_domains takes bare domain names, not URLs or paths
    allowed_domains = ['fabiaoqing.com']
    start_urls = ['https://www.fabiaoqing.com/biaoqing/']

    def parse(self, response):
        # All stickers on the current page
        divs = response.xpath('//*[@id="bqb"]/div[1]/div')
        # Relative URL of the next page (None on the last page)
        next_url = response.xpath(
            '//div[contains(@class,"pagination")]/a[last()-1]/@href').extract_first()

        for div in divs:
            img_url = div.xpath('a/img/@data-original').extract_first()
            if not img_url:
                continue  # skip entries without an image URL
            item = biaoqingbaoItem()
            item['url'] = img_url
            item['name'] = img_url.split('/')[-1]
            item['title'] = div.xpath('a/@title').extract_first()
            # Page number parsed from the next-page link; empty on the last page
            item['page'] = next_url.split('/')[-1] if next_url else ''
            yield item

        if next_url:  # follow the next page if there is one
            yield scrapy.Request(response.urljoin(next_url), callback=self.parse)
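The two bits of URL handling in the spider (taking the file name from the image URL, and turning the relative next-page link into a full URL) can be tried out without Scrapy. Below is a minimal sketch using only the standard library. The helper names and the sample URLs, including the `/biaoqing/lists/page/2.html` path, are made up for illustration and are not taken from the site.

```python
from urllib.parse import urljoin


def image_name(image_url):
    """Return the last path segment of an image URL, e.g. 'abc123.jpg'."""
    return image_url.rstrip('/').split('/')[-1]


def next_page_url(current_url, next_href):
    """Resolve a relative 'next page' href against the current page URL.

    Returns None when there is no next page.
    """
    if not next_href:
        return None
    return urljoin(current_url, next_href)


if __name__ == '__main__':
    # Hypothetical sample values, only for illustration.
    print(image_name('https://example.com/large/abc123.jpg'))
    print(next_page_url('https://www.fabiaoqing.com/biaoqing/',
                        '/biaoqing/lists/page/2.html'))
    print(next_page_url('https://www.fabiaoqing.com/biaoqing/', None))
```

Resolving against the current page URL keeps the host consistent with whatever the site actually served, so there is no need to hard-code a base URL.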

- pipelines.py

# Download the images and define how they are stored
import pymysql
import scrapy
from scrapy.pipelines.images import ImagesPipeline


class DemoPipeline(ImagesPipeline):

    # Request each image, carrying the item fields along in meta
    def get_media_requests(self, item, info):
        yield scrapy.Request(
            url=item['url'],
            meta={'title': item['title'], 'page': item['page'], 'name': item['name']})

    def item_completed(self, results, item, info):
        # results is a list of (success, info_or_failure) tuples; printed for debugging
        print(results)
        return item

    # Storage rule: save each image under IMAGES_STORE using the name from its URL
    def file_path(self, request, response=None, info=None, *, item=None):
        return request.meta['name']


class YanguangPipeline(object):

    def __init__(self):
        # Connect to the MySQL database; the charset keeps Chinese titles intact
        self.connect = pymysql.connect(host='10.x.x.xx', user='root', password='xxxxxx',
                                       db='biaoqingbao', port=3306, charset='utf8mb4')
        self.cursor = self.connect.cursor()

    def process_item(self, item, spider):
        # Insert the row with placeholders so quotes in a title cannot break the SQL
        self.cursor.execute(
            "INSERT INTO biaoqing (`name`, `url`) VALUES (%s, %s)",
            (item['title'], item['name']))
        self.connect.commit()
        return item

    # Close the database connection
    def close_spider(self, spider):
        self.cursor.close()
        self.connect.close()
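Titles scraped from the web can contain quotes, so it is safer for the insert to pass the values as parameters rather than building the SQL string by hand. Here is a minimal sketch of that idea. It uses sqlite3 from the standard library as a stand-in for MySQL so it runs anywhere; the `save_sticker` and `make_demo_db` helpers are hypothetical. Note that sqlite3 uses `?` as its placeholder, while pymysql uses `%s`.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory table shaped like the biaoqing table."""
    conn = sqlite3.connect(':memory:')
    conn.execute(
        "CREATE TABLE biaoqing ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, name TEXT, url TEXT)"
    )
    return conn


def save_sticker(conn, title, file_name):
    """Insert one row using placeholders instead of string formatting."""
    conn.execute(
        "INSERT INTO biaoqing (name, url) VALUES (?, ?)",
        (title, file_name),
    )
    conn.commit()


if __name__ == '__main__':
    conn = make_demo_db()
    # A title containing a single quote would break a format()-built statement.
    save_sticker(conn, "it's fine", 'abc123.jpg')
    print(conn.execute("SELECT name, url FROM biaoqing").fetchall())
    conn.close()
```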

- middlewares.py

# One anti-blocking measure: send a random User-Agent header with each request
import random


class UserAgentMiddlewares(object):
    user_agent_list = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
    ]

    def process_request(self, request, spider):
        # Pick a random User-Agent for every outgoing request
        agent = random.choice(self.user_agent_list)
        request.headers['User-Agent'] = agent
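The rotation logic is small enough to try outside Scrapy. The sketch below uses a plain dict as a stand-in for the request headers, and adds a quick sanity check for a long list like this one: a missing comma between two adjacent string literals makes Python silently join them into a single entry. Both helper names are hypothetical, and the list is shortened to two entries.

```python
import random

# Two sample entries only; the full list lives in middlewares.py.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
]


def find_merged_entries(agents):
    """Return entries that look like two strings merged by a missing comma."""
    return [ua for ua in agents if ua.count('Mozilla/5.0') > 1]


def with_random_user_agent(headers, agents, rng=random):
    """Return a copy of headers with a randomly chosen User-Agent."""
    updated = dict(headers)
    updated['User-Agent'] = rng.choice(agents)
    return updated


if __name__ == '__main__':
    print(find_merged_entries(USER_AGENTS))
    print(with_random_user_agent({'Accept': '*/*'}, USER_AGENTS))
```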

- settings.py

BOT_NAME = 'biaoqingbao'

SPIDER_MODULES = ['biaoqingbao.spiders']
NEWSPIDER_MODULE = 'biaoqingbao.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.108 Safari/537.36'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False  # do not follow the robots.txt rules

IMAGES_STORE = 'image/biaoqing'  # where downloaded images are stored
DOWNLOAD_DELAY = 0.5  # wait 0.5 s between requests

# Register the custom User-Agent middleware. It implements process_request,
# so it belongs in DOWNLOADER_MIDDLEWARES rather than SPIDER_MIDDLEWARES.
DOWNLOADER_MIDDLEWARES = {
    'biaoqingbao.middlewares.UserAgentMiddlewares': 100,
}

# Enable the item pipelines (uncomment this block in the generated settings).
# Lower values run first: download the image, then write the database row.
ITEM_PIPELINES = {
    'biaoqingbao.pipelines.DemoPipeline': 1,
    'biaoqingbao.pipelines.YanguangPipeline': 2,
}

RETRY_HTTP_CODES = [500, 502, 503, 504, 400, 403, 404, 408]

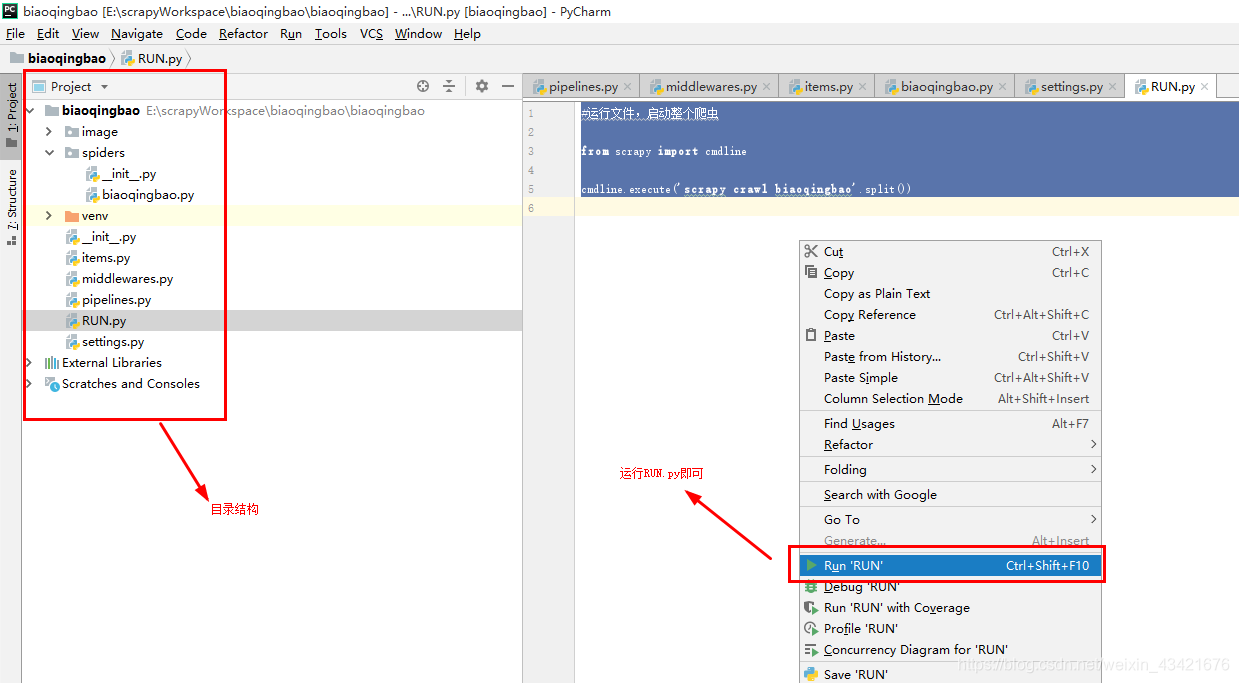

- RUN.py

# Launcher script: starts the whole crawl
from scrapy import cmdline

cmdline.execute('scrapy crawl biaoqingbao'.split())

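- Create the database and table

Create a MySQL database named biaoqingbao, and in it a table named biaoqing.

The table has three columns: id (primary key), name (the sticker's title), and url (the file name the sticker is stored under).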

/*
 Navicat Premium Data Transfer

 Source Server         : Mysql
 Source Server Type    : MySQL
 Source Server Version : 50540
 Source Host           : localhost:3306
 Source Schema         : biaoqingbao

 Target Server Type    : MySQL
 Target Server Version : 50540
 File Encoding         : 65001

 Date: 27/11/2019 17:15:50
*/

SET NAMES utf8mb4;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for biaoqing
-- ----------------------------
DROP TABLE IF EXISTS `biaoqing`;
CREATE TABLE `biaoqing` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `name` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `url` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  PRIMARY KEY (`id`) USING BTREE
) ENGINE = InnoDB AUTO_INCREMENT = 1664 CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Compact;

SET FOREIGN_KEY_CHECKS = 1;

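- Run the project

- That completes crawling the stickers to the local machine.

- The name column stores each sticker's title and is used for fuzzy search.

- The url column holds the file name the sticker was saved under, which later becomes part of the image path in the JSP page. (I did it this way because English file names are easier to put into img links.)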
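To make the role of the url column concrete, here is a minimal Python sketch of how a stored file name could turn into an img path. The `/emojiSite` context path and the `/common/biaoqing/` folder are the ones that appear in the page and access URL later in this post; the `image_src` helper itself is hypothetical, and the real page does this in JSP.

```python
from urllib.parse import quote

# Assumed values: the context path and image folder used by the JSP page.
CONTEXT_PATH = '/emojiSite'
IMAGE_DIR = '/common/biaoqing/'


def image_src(file_name, context_path=CONTEXT_PATH, image_dir=IMAGE_DIR):
    """Build the src path for an <img> tag from a stored file name."""
    # quote() percent-encodes spaces and non-ASCII characters in the name
    return context_path + image_dir + quote(file_name)


if __name__ == '__main__':
    print(image_src('abc123.jpg'))
    print(image_src('a b.jpg'))
```

Plain ASCII file names pass through unchanged, which is the convenience the last bullet is getting at; anything else would need encoding first.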

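Building the website

With enough stickers crawled, the next job is to build a website from the local files and share the joy.

Let's start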

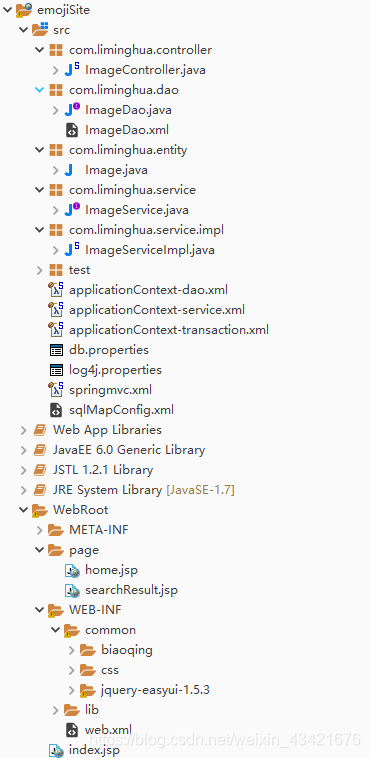

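- Create a web project

- Directory structure

The code can be crude, but the directory layout has to look tidy. Then paste the sticker images into the WEB-INF directory. I used easyUI's iframe layout here; I couldn't be bothered to build one, so it was also pasted from an earlier project.

- Configure the various XML files, blah blah

I won't go into the SSM configuration files here; Ctrl+C them from an earlier project and tweak them a little.

- javaBean

The entity class needs three properties matching the image data stored in the database: id is the unique identifier; name is the sticker's title and also the alt text of the img tag; imageUrl is part of the image's link. (imageUrl is really the file name the crawler saved the sticker under.)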

package com.liminghua.entity;

// Entity for one row of the biaoqing table
public class Image {

    private String id;
    private String name;
    private String imageUrl;

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public String getId() {
        return id;
    }

    public void setId(String id) {
        this.id = id;
    }

    public String getImageUrl() {
        return imageUrl;
    }

    public void setImageUrl(String imageUrl) {
        this.imageUrl = imageUrl;
    }
}

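- DAO layer

This uses a fuzzy query. If you're interested, I'd suggest reading up on how placeholders work in LIKE queries; here I concatenate the wildcards onto the value before passing it in.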

package com.liminghua.dao;

import java.util.List;

import com.liminghua.entity.Image;

public interface ImageDao {

    public List<Image> searchImage(String name);
}

<?xml version="1.0" encoding="UTF-8" ?>
<!DOCTYPE mapper
    PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN"
    "http://mybatis.org/dtd/mybatis-3-mapper.dtd">
<mapper namespace="com.liminghua.dao.ImageDao">

    <!-- Result map for images -->
    <resultMap type="image" id="ImageMap">
        <id column="id" property="id"/>
        <result column="name" property="name"/>
        <result column="url" property="imageUrl"/>
    </resultMap>

    <!-- Fuzzy search on the image name; the caller passes the keyword already wrapped in % -->
    <select id="searchImage" resultMap="ImageMap" parameterType="String">
        SELECT * FROM biaoqing WHERE name LIKE #{name}
    </select>

</mapper>
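On the LIKE placeholder point: the wildcards go into the bound value, not into the SQL text. If the keyword itself may contain `%` or `_`, those can be escaped so they match literally. The sketch below shows the idea in Python, with sqlite3 standing in for MySQL and MyBatis so it runs anywhere. The helper names and sample rows are made up for illustration.

```python
import sqlite3


def like_pattern(keyword, escape='\\'):
    """Wrap keyword in % wildcards, escaping wildcard characters inside it."""
    escaped = (keyword.replace(escape, escape + escape)
                      .replace('%', escape + '%')
                      .replace('_', escape + '_'))
    return '%' + escaped + '%'


def search_images(conn, keyword):
    """Fuzzy-match keyword against the name column."""
    sql = ("SELECT name, url FROM biaoqing "
           "WHERE name LIKE ? ESCAPE '\\' ORDER BY id")
    return conn.execute(sql, (like_pattern(keyword),)).fetchall()


def make_demo_db():
    """In-memory table with a few hypothetical rows."""
    conn = sqlite3.connect(':memory:')
    conn.execute(
        "CREATE TABLE biaoqing (id INTEGER PRIMARY KEY, name TEXT, url TEXT)")
    conn.executemany(
        "INSERT INTO biaoqing (name, url) VALUES (?, ?)",
        [('happy cat', 'a1.jpg'), ('100% happy', 'b2.gif'), ('sad dog', 'c3.jpg')])
    return conn


if __name__ == '__main__':
    conn = make_demo_db()
    print(search_images(conn, 'happy'))
    print(search_images(conn, '%'))
    conn.close()
```

Without the escaping step, searching for a bare `%` would match every row instead of only the titles that contain a percent sign.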

- service

package com.liminghua.service;

import java.util.List;

import com.liminghua.entity.Image;

public interface ImageService {

    List<Image> searchImage(String name);
}

package com.liminghua.service.impl;

import java.util.List;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

import com.liminghua.dao.ImageDao;
import com.liminghua.entity.Image;
import com.liminghua.service.ImageService;

@Service
public class ImageServiceImpl implements ImageService {

    @Autowired
    ImageDao imageDao;

    // Delegate the fuzzy search to the DAO
    @Override
    public List<Image> searchImage(String name) {
        return imageDao.searchImage(name);
    }
}

- controller

The controller does simple request handling.

package com.liminghua.controller;

import java.util.List;

import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Controller;
import org.springframework.ui.Model;
import org.springframework.web.bind.annotation.RequestMapping;

import com.liminghua.entity.Image;
import com.liminghua.service.ImageService;

@Controller
public class ImageController {

    @Autowired
    private ImageService imageServiceImpl;

    // Home page with the search box
    @RequestMapping("home")
    public String getHome(HttpServletRequest request, HttpServletResponse response,
            Model model) throws Exception {
        return "home";
    }

    // Fuzzy search by sticker title
    @RequestMapping("searchImage")
    public String searchImage(HttpServletRequest request, HttpServletResponse response,
            Model model) throws Exception {
        String name = request.getParameter("name");
        if (name == null) {
            name = ""; // a missing parameter matches every sticker
        }
        // Wrap the keyword in % wildcards for the LIKE query
        String pattern = "%" + name + "%";
        List<Image> imageList = imageServiceImpl.searchImage(pattern);
        model.addAttribute("imageList", imageList);
        return "searchResult";
    }
}

- Front-end pages

I just took pages I'd written before and tweaked them. Nothing was tuned carefully; it runs, and that's enough.

<%@page import="java.sql.Time"%>

<%@ page language="java" import="java.util.*" pageEncoding="UTF-8"%>

<%@ taglib uri="http://java.sun.com/jsp/jstl/core" prefix="c" %>

<%@ taglib uri="http://java.sun.com/jsp/jstl/fmt" prefix="fmt"%>

<%

String root = request.getContextPath();

%>

<!DOCTYPE html>

<html>

<head>

<style>

a{ font-size: 30px; padding-top: 70px; margin: auto; margin-left: 20px; text-align: center; color: white;

text-decoration: none;}

</style>

<meta charset="UTF-8">

<script type= "text/javascript"

src="<%=root%>/common/jquery-easyui-1.5.3/jquery.min.js" ></script>

<script type= "text/javascript"

src="<%=root%>/common/jquery-easyui-1.5.3/jquery.easyui.min.js" ></script>

<script type= "text/javascript"

src="<%=root%>/common/jquery-easyui-1.5.3/locale/easyui-lang-zh_CN.js" ></script>

<link rel="stylesheet" type= "text/css"

href= "<%=root%>/common/jquery-easyui-1.5.3/themes/default/easyui.css" />

<link rel="stylesheet" type= "text/css"

href= "<%=root%>/common/jquery-easyui-1.5.3/themes/icon.css" />

<link rel="stylesheet" type="text/css"

href="<%=root%>/common/css/common.css" />

</head>

<body class="easyui-layout">

<div data-options="region:'north',title:'North Title',split:false, collapsible:false, border:false, noheader:true, minWidth:1024"

style="padding:0 0 0 0; overflow:hidden; height:100px;right:0px;border-width:0px;">

<div class="navBox" id="showDiv">

<div class="nav1Box" style="float: left" >

<a>For happier chats at work!</a>

</div>

<div id="topLimitMenu">

<form action="searchImage" method="post" target="CENTER_IFRAME">

Search stickers by keyword: <input type="text" name="name"/>

<input type="submit" value="Search" />

</form>

</div>

</div>

</div>

<div data-options="region:'center',title:''" style="padding:0px;background:#eee;">

</div>

<div data-options="region:'south',split:false" style="height:50px;text-align: center;">

Recommended resolution: 1366*768 or higher

</div>

</body>

</html>

<%@ page language="java" import="java.util.*" pageEncoding="UTF-8"%>

<%@ taglib uri="http://java.sun.com/jsp/jstl/core" prefix="c" %>

<%@ taglib uri="http://java.sun.com/jsp/jstl/fmt" prefix="fmt"%>

<%@page import="com.liminghua.entity.Image"%>

<%@ page import="java.net.URLEncoder" %>

<%

String root = request.getContextPath();

%>

<!DOCTYPE html>

<html>

<head>

<style>

a{ font-size: 30px; padding-top: 70px; margin: auto; margin-left: 20px; text-align: center; color: white;

text-decoration: none;}

</style>

<meta charset="UTF-8">

<script type= "text/javascript"

src="<%=root%>/common/jquery-easyui-1.5.3/jquery.min.js" ></script>

<script type= "text/javascript"

src="<%=root%>/common/jquery-easyui-1.5.3/jquery.easyui.min.js" ></script>

<script type= "text/javascript"

src="<%=root%>/common/jquery-easyui-1.5.3/locale/easyui-lang-zh_CN.js" ></script>

<link rel="stylesheet" type= "text/css"

href= "<%=root%>/common/jquery-easyui-1.5.3/themes/default/easyui.css" />

<link rel="stylesheet" type= "text/css"

href= "<%=root%>/common/jquery-easyui-1.5.3/themes/icon.css" />

<link rel="stylesheet" type="text/css"

href="<%=root%>/common/css/common.css" />

</head>

<body>

<div class="showselect">

<c:forEach items="${imageList}" var="Image">

<img src="<%=request.getContextPath() %>/common/biaoqing/${Image.imageUrl}" alt="${Image.name}" width="200px" height="200px">

</c:forEach>

</div>

</body>

</html>

- All done. Deploy it to Tomcat and it just runs.

The project is served at http://localhost:8080/emojiSite/home

- Finally, the source code

//download.csdn.net/download/weixin_43421676/12005167

- For those without C coins

Link: https://pan.baidu.com/s/1Q5Cy8FprVm-oOdGJ4rWVPg

Extraction code: jqev