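During an interview at Tencent, I was asked whether the 7x7 convolution at the start of ResNet could be replaced with three 3x3 convolutions. I had seen claims that it can, and that the results are better after the swap. To check whether that really holds, I worked through a series of derivations and experiments after the interview and wrote this post as a brief record. If there are mistakes, corrections are welcome.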

When the channel counts are all the same

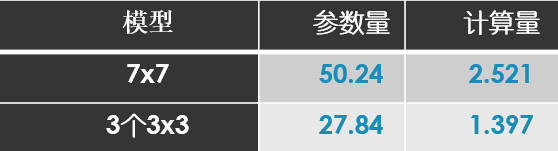

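Parameters and computation

Looking only at the convolutions and ignoring BN and the activation function (with input and output channels both equal to 1), the parameter count is simply 3x3x3 = 27 for the three 3x3 layers versus 7x7 = 49 for the single 7x7 layer, so the three 3x3 layers clearly win. The same holds for the amount of computation. All of this assumes the channel counts are equal, though, and I find that many people overlook this point.

I used thop to compute the parameters and FLOPs at channel=32, and the three 3x3 layers clearly win on both.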
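The counts above can also be reproduced by hand. The sketch below is a minimal, framework-free calculation that assumes bias-free convolutions, ignores BN and activations, and uses stride 1 with "same" padding on a 224x224 input. The helper names `conv_params`, `conv_macs`, and `compare` are made up for this illustration, and the multiply-accumulate counts are not guaranteed to match what thop prints.

```python
def conv_params(k, c_in, c_out):
    """Number of weights in a bias-free k x k convolution."""
    return k * k * c_in * c_out


def conv_macs(k, c_in, c_out, h_out, w_out):
    """Multiply-accumulates: one k*k*c_in dot product per output element."""
    return conv_params(k, c_in, c_out) * h_out * w_out


def compare(channel, size=224):
    """Return (params, MACs) for one 7x7 layer and for three stacked 3x3 layers."""
    one_7x7 = (conv_params(7, channel, channel),
               conv_macs(7, channel, channel, size, size))
    three_3x3 = (3 * conv_params(3, channel, channel),
                 3 * conv_macs(3, channel, channel, size, size))
    return one_7x7, three_3x3


if __name__ == "__main__":
    for c in (1, 32):
        (p7, m7), (p3, m3) = compare(c)
        print(f"channel={c}: 7x7 params={p7}, MACs={m7}; "
              f"three 3x3 params={p3}, MACs={m3}")
```

At equal channel counts the ratio between the two is always 49:27, whatever the channel count is, which is why the equal-channel assumption matters so much.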

Speed

First, I wrote the code below to measure the running time on the CPU and on the GPU.

    import time

    import torch
    import torch.nn as nn
    from torchsummary import summary


    class BasicConv(nn.Module):
        """Conv2d followed by optional BatchNorm and ReLU."""

        def __init__(self, in_planes, out_planes, kernel_size, stride=1, padding=0, dilation=1, groups=1, relu=True,
                     bn=True, bias=False):
            super(BasicConv, self).__init__()
            self.out_channels = out_planes
            self.conv = nn.Conv2d(in_planes, out_planes, kernel_size=kernel_size, stride=stride, padding=padding,
                                  dilation=dilation, groups=groups, bias=bias)
            self.bn = nn.BatchNorm2d(out_planes, eps=1e-5, momentum=0.01, affine=True) if bn else None
            self.relu = nn.ReLU(inplace=True) if relu else None

        def forward(self, x):
            x = self.conv(x)
            if self.bn is not None:
                x = self.bn(x)
            if self.relu is not None:
                x = self.relu(x)
            return x


    class Model7x7(nn.Module):
        """A single 7x7 convolution with equal input and output channels."""

        def __init__(self, channel):
            super(Model7x7, self).__init__()
            self.conv1 = BasicConv(in_planes=channel, out_planes=channel, kernel_size=7, stride=1, padding=3)

        def forward(self, x):
            x = self.conv1(x)
            return x


    class Model3x3(nn.Module):
        """Three stacked 3x3 convolutions with equal input and output channels."""

        def __init__(self, channel):
            super(Model3x3, self).__init__()
            self.conv1 = BasicConv(in_planes=channel, out_planes=channel, kernel_size=3, stride=1, padding=1)
            self.conv2 = BasicConv(in_planes=channel, out_planes=channel, kernel_size=3, stride=1, padding=1)
            self.conv3 = BasicConv(in_planes=channel, out_planes=channel, kernel_size=3, stride=1, padding=1)

        def forward(self, x):
            x = self.conv1(x)
            x = self.conv2(x)
            x = self.conv3(x)
            return x


    if __name__ == '__main__':
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        channel = 32
        # model = Model7x7(channel=channel).to(device)  # 0.19 M
        model = Model3x3(channel=channel).to(device)  # 0.11 M
        summary(model, (channel, 224, 224))

        model.eval()
        x = torch.randn(1, channel, 224, 224).to(device)

        # Run inference
        with torch.no_grad():
            if device.type == 'cuda':
                torch.cuda.synchronize()  # finish pending GPU work before starting the clock
            start_time = time.time()
            for i in range(100):
                model(x)
            if device.type == 'cuda':
                torch.cuda.synchronize()  # GPU kernels run asynchronously; wait for them to finish
            end_time = time.time()

        # 100 passes divided by 10: the printed value is the time per 10 forward passes
        print('Done. (%.3fs per 10 forward passes)' % ((end_time - start_time) / 10))

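CPU results:

With channel = 8:

7x7: 0.850 s

3x3: 0.573 s

On the CPU the three 3x3 layers win consistently, because CPU running time is mainly determined by FLOPs.

GPU results:

When the channel count is small, the single 7x7 is faster. As the channel count grows, the three 3x3 layers gradually pull ahead, and at large channel counts they leave the 7x7 convolution well behind. The main reason is that with many channels the FLOPs are large, so most of the time is spent on arithmetic.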

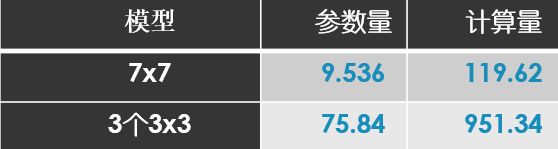

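Can the stem of ResNet be replaced?

Of course it can, and the accuracy is better. The Bag of Tricks paper mentions this:

The original 7x7 can be replaced as shown in the figure.

The results are shown in the figure below: the increase in computation is quite noticeable, and the accuracy improves slightly over the original.

I also computed this myself with thop:

The code for the computation is as follows: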

    import time

    import torch
    import torch.nn as nn
    from thop import clever_format, profile


    class BasicConv(nn.Module):
        """Conv2d followed by optional BatchNorm and ReLU."""

        def __init__(self, in_planes, out_planes, kernel_size, stride=1, padding=0, dilation=1, groups=1, relu=True,
                     bn=True, bias=False):
            super(BasicConv, self).__init__()
            self.out_channels = out_planes
            self.conv = nn.Conv2d(in_planes, out_planes, kernel_size=kernel_size, stride=stride, padding=padding,
                                  dilation=dilation, groups=groups, bias=bias)
            self.bn = nn.BatchNorm2d(out_planes, eps=1e-5, momentum=0.01, affine=True) if bn else None
            self.relu = nn.ReLU(inplace=True) if relu else None

        def forward(self, x):
            x = self.conv(x)
            if self.bn is not None:
                x = self.bn(x)
            if self.relu is not None:
                x = self.relu(x)
            return x


    class Model7x7(nn.Module):
        """ResNet-style stem: one 7x7, stride-2 convolution on a 3-channel image."""

        def __init__(self, channel):
            super(Model7x7, self).__init__()
            self.conv1 = BasicConv(in_planes=3, out_planes=channel, kernel_size=7, stride=2, padding=3)

        def forward(self, x):
            x = self.conv1(x)
            return x


    class Model3x3(nn.Module):
        """Replacement stem: three 3x3 convolutions, the first with stride 2."""

        def __init__(self, channel):
            super(Model3x3, self).__init__()
            self.conv1 = BasicConv(in_planes=3, out_planes=channel, kernel_size=3, stride=2, padding=1)
            self.conv2 = BasicConv(in_planes=channel, out_planes=channel, kernel_size=3, stride=1, padding=1)
            self.conv3 = BasicConv(in_planes=channel, out_planes=channel, kernel_size=3, stride=1, padding=1)

        def forward(self, x):
            x = self.conv1(x)
            x = self.conv2(x)
            x = self.conv3(x)
            return x


    if __name__ == '__main__':
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        channel = 64
        # model = Model7x7(channel=channel).to(device)
        model = Model3x3(channel=channel).to(device)
        x = torch.randn(1, 3, 224, 224).to(device)

        # Count FLOPs and parameters with thop
        flops, params = profile(model, inputs=(x,))
        flops, params = clever_format([flops, params], "%.3f")
        print('flops = %s' % flops)
        print('params = %s' % params)

        model.eval()

        # Run inference
        with torch.no_grad():
            if device.type == 'cuda':
                torch.cuda.synchronize()  # finish pending GPU work before starting the clock
            start_time = time.time()
            for i in range(10):
                model(x)
            if device.type == 'cuda':
                torch.cuda.synchronize()  # GPU kernels run asynchronously; wait for them to finish
            end_time = time.time()

        # 10 passes divided by 10: average time per forward pass
        print('Done. (%.3fs)' % ((end_time - start_time) / 10))

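So if the 7x7 convolution at the start of ResNet is replaced with three 3x3 convolutions, the accuracy improves slightly, but the parameters and computation both go up and the slowdown is quite noticeable. There really is no free lunch.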
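To see where the extra cost comes from, the stem's parameters and multiply-accumulates can be counted by hand. This is a rough sketch rather than thop's output: it assumes bias-free convolutions, ignores BN and ReLU, and uses a 224x224 input with the same layer settings as the code above. The helper `stem_cost` is a name introduced only for this example.

```python
def stem_cost(layers, in_channels=3, size=224):
    """Count weights and MACs for a chain of bias-free convolutions.

    layers: list of (kernel_size, out_channels, stride); padding is k // 2.
    """
    params = macs = 0
    c_in = in_channels
    for k, c_out, stride in layers:
        size = (size + 2 * (k // 2) - k) // stride + 1  # output height and width
        layer_params = k * k * c_in * c_out
        params += layer_params
        macs += layer_params * size * size
        c_in = c_out
    return params, macs


if __name__ == "__main__":
    single_7x7 = stem_cost([(7, 64, 2)])
    three_3x3 = stem_cost([(3, 64, 2), (3, 64, 1), (3, 64, 1)])
    print("7x7 stem:       params=%d, MACs=%d" % single_7x7)
    print("three 3x3 stem: params=%d, MACs=%d" % three_3x3)
```

With 64 channels in every layer, the second and third 3x3 convolutions each take 64 input channels, while the single 7x7 only ever sees the 3 channels of the image. That is why the equal-channel argument from the first section does not carry over to the stem.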

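Why does replacing the 7x7 at the start of ResNet with three 3x3 convolutions work better?

1. From the receptive-field perspective

The receptive field of the 7x7 stem is 7.

After replacing it with three 3x3 convolutions, the receptive field is 11. Each layer adds (kernel size - 1) times the product of the strides of the layers before it, and the first 3x3 has stride 2:

1 + (3 - 1) x 1 = 3

3 + (3 - 1) x 2 = 7

7 + (3 - 1) x 2 = 11

So the network's receptive field becomes larger, and the results are better.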
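The three-step calculation above follows the usual receptive-field recurrence, which can be written as a few lines of code. This is a small sketch; `receptive_field` is a helper name chosen for this example, and it only handles a plain chain of convolutions without dilation.

```python
def receptive_field(layers):
    """layers: list of (kernel_size, stride), ordered from input to output."""
    rf, jump = 1, 1  # jump = product of the strides of all earlier layers
    for k, stride in layers:
        rf += (k - 1) * jump
        jump *= stride
    return rf


if __name__ == "__main__":
    print(receptive_field([(7, 2)]))                  # single 7x7 stem
    print(receptive_field([(3, 2), (3, 1), (3, 1)]))  # three 3x3 convolutions
```

With all strides equal to 1, three 3x3 layers give a receptive field of exactly 7, the same as a single 7x7. The gain to 11 here comes from putting the stride of 2 on the first 3x3 layer.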