References:

https://blog.miguelgrinberg.com/post/video-streaming-with-flask

https://www.jb51.net/article/63181.htm

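Project files: templates/index.html (Flask's render_template looks for templates in the templates/ folder), app.py, base_camera.py and camera.py.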

To stream frames that you capture and process yourself, you only need to modify camera.py. The camera_opencv.py and camera_pi.py files given at the end can also serve as references.
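The frames() generator in camera.py only has to yield each frame as JPEG-encoded bytes; BaseCamera (in base_camera.py below) handles the background thread and the connected clients. As an illustration, here is a minimal sketch of a different frame source that replays JPEG files already on disk instead of drawing frames with OpenCV. The function name file_frames and its interval parameter are hypothetical; in camera.py you would put an equivalent loop in the body of Camera.frames().

```python
import itertools
import time
from pathlib import Path


def file_frames(paths, interval=1.0):
    """Hypothetical frame source: cycle through JPEG files forever.

    Each yielded item is the raw bytes of one .jpg file, i.e. the same
    kind of value camera.py's frames() yields (JPEG-encoded bytes).
    """
    # Read every file once up front so the streaming loop does no disk I/O.
    images = [Path(p).read_bytes() for p in paths]
    if not images:
        raise ValueError('no image files given')
    for data in itertools.cycle(images):
        yield data
        # Throttle to roughly 1/interval frames per second.
        time.sleep(interval)
```

Preloading the files keeps the per-frame work trivial; for a large image set you would read lazily instead.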

The code of each file is as follows:

index.html

```html
<html>
<head>
  <title>web Video</title>
</head>
<body>
  <h1>Real-time web video demo</h1>
  <img src="{{ url_for('video_feed') }}">
</body>
</html>
```

app.py

```python
#!/usr/bin/env python
from importlib import import_module
import os
from flask import Flask, render_template, Response

# import camera driver
if os.environ.get('CAMERA'):
    Camera = import_module('camera_' + os.environ['CAMERA']).Camera
else:
    from camera import Camera

# Raspberry Pi camera module (requires picamera package)
# from camera_pi import Camera

app = Flask(__name__)


@app.route('/')
def index():
    """Video streaming home page."""
    return render_template('index.html')


def gen(camera):
    """Video streaming generator function."""
    while True:
        frame = camera.get_frame()
        yield (b'--frame\r\n'
               b'Content-Type: image/jpeg\r\n\r\n' + frame + b'\r\n')


@app.route('/video_feed')
def video_feed():
    """Video streaming route. Put this in the src attribute of an img tag."""
    return Response(gen(Camera()),
                    mimetype='multipart/x-mixed-replace; boundary=frame')


if __name__ == '__main__':
    app.run(host='0.0.0.0', threaded=True)
```
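The streaming works because every chunk that gen() yields is one part of a multipart/x-mixed-replace body: a --frame boundary line, a Content-Type header, a blank line, then the JPEG bytes. Browsers that support this type keep the connection open and replace the img content each time a new part arrives. The sketch below reproduces the same framing without Flask so the bytes can be inspected; mjpeg_parts and its boundary parameter are hypothetical names, and the byte strings in the test are placeholders rather than real JPEGs.

```python
from typing import Iterable, Iterator


def mjpeg_parts(frames: Iterable[bytes], boundary: bytes = b'frame') -> Iterator[bytes]:
    """Wrap each JPEG frame as one part of a multipart/x-mixed-replace body.

    The boundary must match the one declared in the response mimetype,
    e.g. 'multipart/x-mixed-replace; boundary=frame' in app.py.
    """
    for frame in frames:
        yield (b'--' + boundary + b'\r\n'
               + b'Content-Type: image/jpeg\r\n\r\n'
               + frame
               + b'\r\n')


if __name__ == '__main__':
    # Show the raw bytes of two placeholder parts.
    for part in mjpeg_parts([b'<jpeg 1>', b'<jpeg 2>']):
        print(part)
```

Keeping the boundary as a parameter makes the coupling explicit: changing it in one place but not in the mimetype would break the stream.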

base_camera.py

```python
import time
import threading
try:
    from greenlet import getcurrent as get_ident
except ImportError:
    try:
        from thread import get_ident
    except ImportError:
        from _thread import get_ident


class CameraEvent(object):
    """An Event-like class that signals all active clients when a new frame is
    available.
    """
    def __init__(self):
        self.events = {}

    def wait(self):
        """Invoked from each client's thread to wait for the next frame."""
        ident = get_ident()
        if ident not in self.events:
            # this is a new client
            # add an entry for it in the self.events dict
            # each entry has two elements, a threading.Event() and a timestamp
            self.events[ident] = [threading.Event(), time.time()]
        return self.events[ident][0].wait()

    def set(self):
        """Invoked by the camera thread when a new frame is available."""
        now = time.time()
        remove = None
        # iterate over a snapshot: client threads may add entries concurrently
        for ident, event in list(self.events.items()):
            if not event[0].is_set():
                # if this client's event is not set, then set it
                # also update the last set timestamp to now
                event[0].set()
                event[1] = now
            else:
                # if the client's event is already set, it means the client
                # did not process a previous frame
                # if the event stays set for more than 5 seconds, then assume
                # the client is gone and remove it
                if now - event[1] > 5:
                    remove = ident
        if remove:
            del self.events[remove]

    def clear(self):
        """Invoked from each client's thread after a frame was processed."""
        self.events[get_ident()][0].clear()


class BaseCamera(object):
    thread = None  # background thread that reads frames from camera
    frame = None  # current frame is stored here by background thread
    last_access = 0  # time of last client access to the camera
    event = CameraEvent()

    def __init__(self):
        """Start the background camera thread if it isn't running yet."""
        if BaseCamera.thread is None:
            BaseCamera.last_access = time.time()

            # start background frame thread
            BaseCamera.thread = threading.Thread(target=self._thread)
            BaseCamera.thread.start()

            # wait until frames are available
            while self.get_frame() is None:
                time.sleep(0)

    def get_frame(self):
        """Return the current camera frame."""
        BaseCamera.last_access = time.time()

        # wait for a signal from the camera thread
        BaseCamera.event.wait()
        BaseCamera.event.clear()

        return BaseCamera.frame

    @staticmethod
    def frames():
        """Generator that returns frames from the camera."""
        raise RuntimeError('Must be implemented by subclasses.')

    @classmethod
    def _thread(cls):
        """Camera background thread."""
        print('Starting camera thread.')
        frames_iterator = cls.frames()
        for frame in frames_iterator:
            BaseCamera.frame = frame
            BaseCamera.event.set()  # send signal to clients
            time.sleep(0)

            # if there hasn't been any clients asking for frames in
            # the last 10 seconds then stop the thread
            if time.time() - BaseCamera.last_access > 10:
                frames_iterator.close()
                print('Stopping camera thread due to inactivity.')
                break
        BaseCamera.thread = None
```
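CameraEvent gives every client thread its own threading.Event, so the single camera thread can wake all viewers for each new frame while each viewer clears only its own flag. Below is a stripped-down, self-contained sketch of that idea using only the standard library (threading.get_ident plus a lock, and without the 5-second stale-client eviction), exercised with simulated clients. FrameBroadcast, register() and demo() are hypothetical names, not part of the project.

```python
import threading


class FrameBroadcast:
    """Simplified CameraEvent: one threading.Event per client thread."""

    def __init__(self):
        self._lock = threading.Lock()
        self._events = {}  # thread id -> threading.Event

    def _event(self):
        ident = threading.get_ident()
        with self._lock:
            return self._events.setdefault(ident, threading.Event())

    def register(self):
        """Create this thread's event before the first frame is published."""
        self._event()

    def wait(self, timeout=None):
        """Block until a new frame is signalled; return False on timeout."""
        return self._event().wait(timeout)

    def clear(self):
        """Mark the current frame as consumed by this client only."""
        self._event().clear()

    def set(self):
        """Wake every registered client (called by the producer thread)."""
        with self._lock:
            for event in self._events.values():
                event.set()


def demo(num_clients=2):
    """Publish one frame and return what each simulated client received."""
    broadcast = FrameBroadcast()
    latest = {'frame': None}
    received = []
    ready = threading.Barrier(num_clients + 1)

    def client():
        broadcast.register()
        ready.wait()  # signal that this client is registered
        if broadcast.wait(timeout=5):
            received.append(latest['frame'])
            broadcast.clear()

    threads = [threading.Thread(target=client) for _ in range(num_clients)]
    for t in threads:
        t.start()
    ready.wait()  # producer waits until all clients are registered
    latest['frame'] = b'jpeg-bytes'  # store the frame first, then signal
    broadcast.set()
    for t in threads:
        t.join()
    return received
```

Storing the frame before calling set() mirrors BaseCamera._thread, which assigns BaseCamera.frame before BaseCamera.event.set(), so a woken client never reads a stale frame.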

camera.py

import time

from base_camera import BaseCamera

import numpy as np

import cv2

class Camera(BaseCamera):

"""An emulated camera implementation that streams a repeated sequence of"""

@staticmethod

def frames():

# 在这里实现自己视频帧的获取和处理

i = 0

while True:

time.sleep(0.5)

img = np.ones((640, 1080, 3), np.uint8) * 188

cv2.putText(img, str(i), (300, 300), cv2.FONT_HERSHEY_SIMPLEX, 2, (255, 0, 0), 3)

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

img_encode = cv2.imencode('.jpg', img)[1]

img_byte = img_encode.tobytes()

yield img_byte

i = i + 1

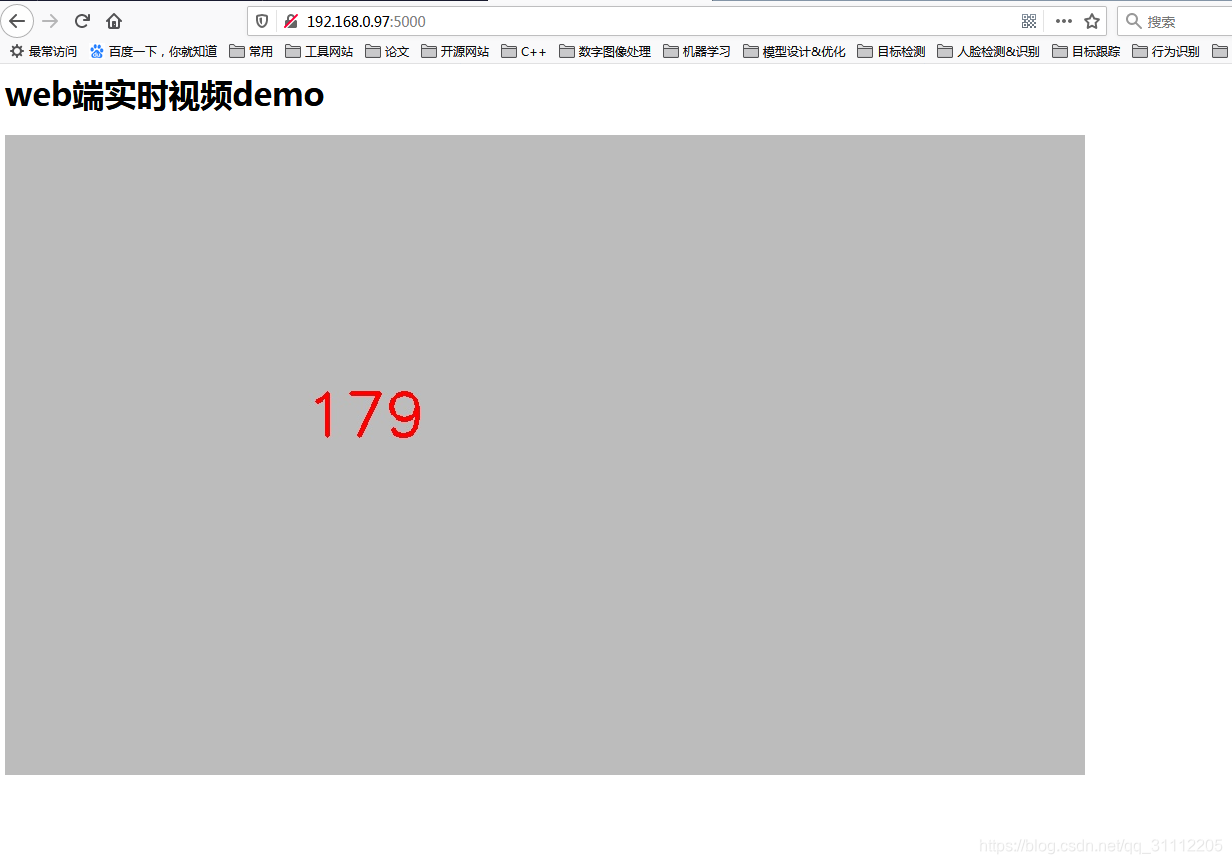

i = i % 1000最后在浏览器的效果如下:

Also included:

camera_opencv.py (captures frames from a camera using OpenCV)

```python
import os
import cv2
from base_camera import BaseCamera


class Camera(BaseCamera):
    video_source = 0

    def __init__(self):
        if os.environ.get('OPENCV_CAMERA_SOURCE'):
            Camera.set_video_source(int(os.environ['OPENCV_CAMERA_SOURCE']))
        super(Camera, self).__init__()

    @staticmethod
    def set_video_source(source):
        Camera.video_source = source

    @staticmethod
    def frames():
        camera = cv2.VideoCapture(Camera.video_source)
        if not camera.isOpened():
            raise RuntimeError('Could not start camera.')

        try:
            while True:
                # read current frame and time how long the read takes
                start = cv2.getTickCount()
                ok, img = camera.read()
                stop = cv2.getTickCount()
                if not ok:
                    continue  # skip frames the camera failed to deliver

                # put the FPS (estimated from the read time) in the image
                fps = cv2.getTickFrequency() / (stop - start)
                fps = '{}: {:.3f}'.format('FPS', fps)
                (fps_w, fps_h), baseline = cv2.getTextSize(fps, cv2.FONT_HERSHEY_SIMPLEX, 0.5, 2)
                cv2.rectangle(img, (2, 20 - fps_h - baseline), (2 + fps_w, 18), color=(0, 0, 0), thickness=-1)
                cv2.putText(img, text=fps, org=(3, 15), fontFace=cv2.FONT_HERSHEY_SIMPLEX,
                            fontScale=0.5, color=(255, 255, 255), thickness=2)

                # encode as a jpeg image and return it
                yield cv2.imencode('.jpg', img)[1].tobytes()
        finally:
            # release the device when BaseCamera closes this generator
            camera.release()
```

camera_pi.py (Raspberry Pi camera module implementation)

```python
import io
import time
import picamera
from base_camera import BaseCamera


class Camera(BaseCamera):
    @staticmethod
    def frames():
        with picamera.PiCamera() as camera:
            # let camera warm up
            time.sleep(2)

            stream = io.BytesIO()
            for _ in camera.capture_continuous(stream, 'jpeg',
                                                 use_video_port=True):
                # return current frame
                stream.seek(0)
                yield stream.read()

                # reset stream for next frame
                stream.seek(0)
                stream.truncate()
```
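camera_pi.py reuses a single io.BytesIO for every capture: after reading a frame it seeks back to 0 and calls truncate(), so a short JPEG never inherits trailing bytes from a longer previous one. The sketch below shows why that truncate() call matters, using plain byte strings of different lengths in place of picamera captures; read_frames and its truncate flag are hypothetical and exist only for this illustration.

```python
import io


def read_frames(captures, truncate=True):
    """Reuse one BytesIO the way camera_pi.py does.

    `captures` stands in for camera.capture_continuous(): each item is the
    data the camera would write into the stream for one frame.
    """
    stream = io.BytesIO()
    frames = []
    for data in captures:
        stream.write(data)  # the camera writes the new JPEG at the current position
        stream.seek(0)
        frames.append(stream.read())
        stream.seek(0)
        if truncate:
            stream.truncate()  # drop everything after position 0
    return frames


if __name__ == '__main__':
    print(read_frames([b'LONGFRAME', b'AB']))
    print(read_frames([b'LONGFRAME', b'AB'], truncate=False))
```

Without truncate() the second, shorter frame comes back with the tail of the first one appended, which would produce a corrupt JPEG in the real stream.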